Day 2 - Learning HNSW

Utkarsh Swaroop Shrivastava

Utkarsh Swaroop ShrivastavaYo! I am late again. Worry not! I will keep up with blogging soon

So today I want to tell you about something amazing I learned. It goes by Hierarchical Navigable Small World

From chatgpt

HNSW (Hierarchical Navigable Small World) is an efficient algorithm for performing approximate nearest neighbor (ANN) searches in high-dimensional spaces. ANN search is commonly used for tasks involving vector embeddings, such as image retrieval, text similarity, or recommendation systems, where the goal is to find the closest vectors (in terms of similarity) to a query vector.

3 algorithms I learned about are: Probablility Skip list, Navigable Small World and HNSW

Probability Skip List

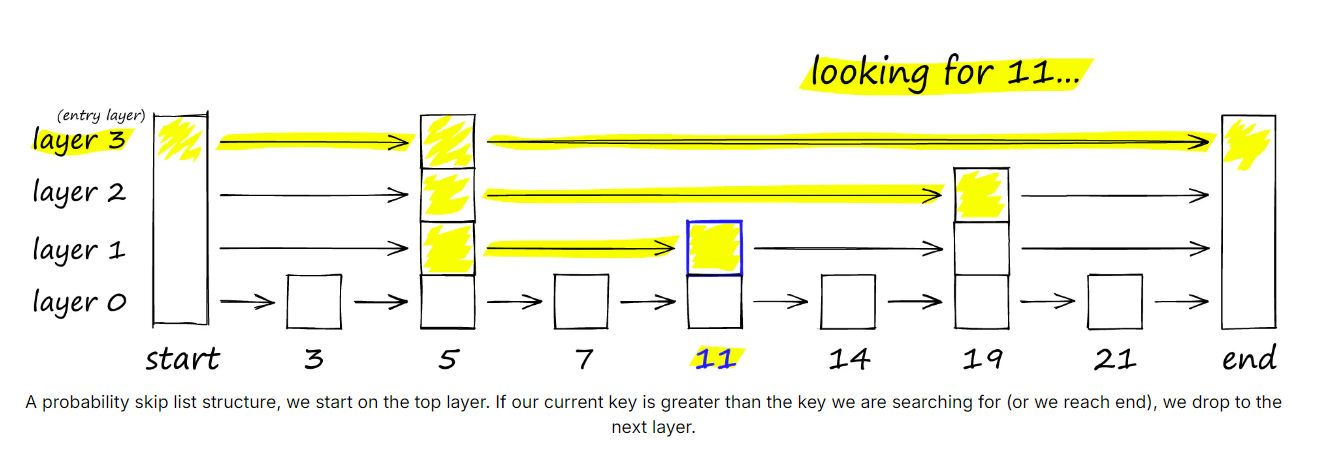

It allows fast search like a sorted array, while using a linked list structure for easy (and fast) insertion of new elements (something that is not possible with sorted arrays). Skip lists work by building several layers of linked lists. On the first layer, we find links that skip many intermediate nodes/vertices. As we move down the layers, the number of ‘skips’ by each link is decreased.

To search a skip list, we start at the highest layer with the longest ‘skips’ and move along the edges towards the right (below). If we find that the current node ‘key’ is greater than the key we are searching for — we know we have overshot our target, so we move down to previous node in the next level.

Navigable Small World

NSW is like a graph with edges and vertices. When searching an NSW graph, we begin at a pre-defined entry-point. This entry point connects to several nearby vertices. We identify which of these vertices is the closest to our query vector and move there.

We repeat the greedy-routing search process of moving from vertex to vertex by identifying the nearest neighboring vertices in each friend list. Eventually, we will find no nearer vertices than our current vertex — this is a local minimum and acts as our stopping condition.

Navigable small world models are defined as any network with (poly/)logarithmic complexity using greedy routing. The efficiency of greedy routing breaks down for larger networks (1-10K+ vertices) when a graph is not navigable

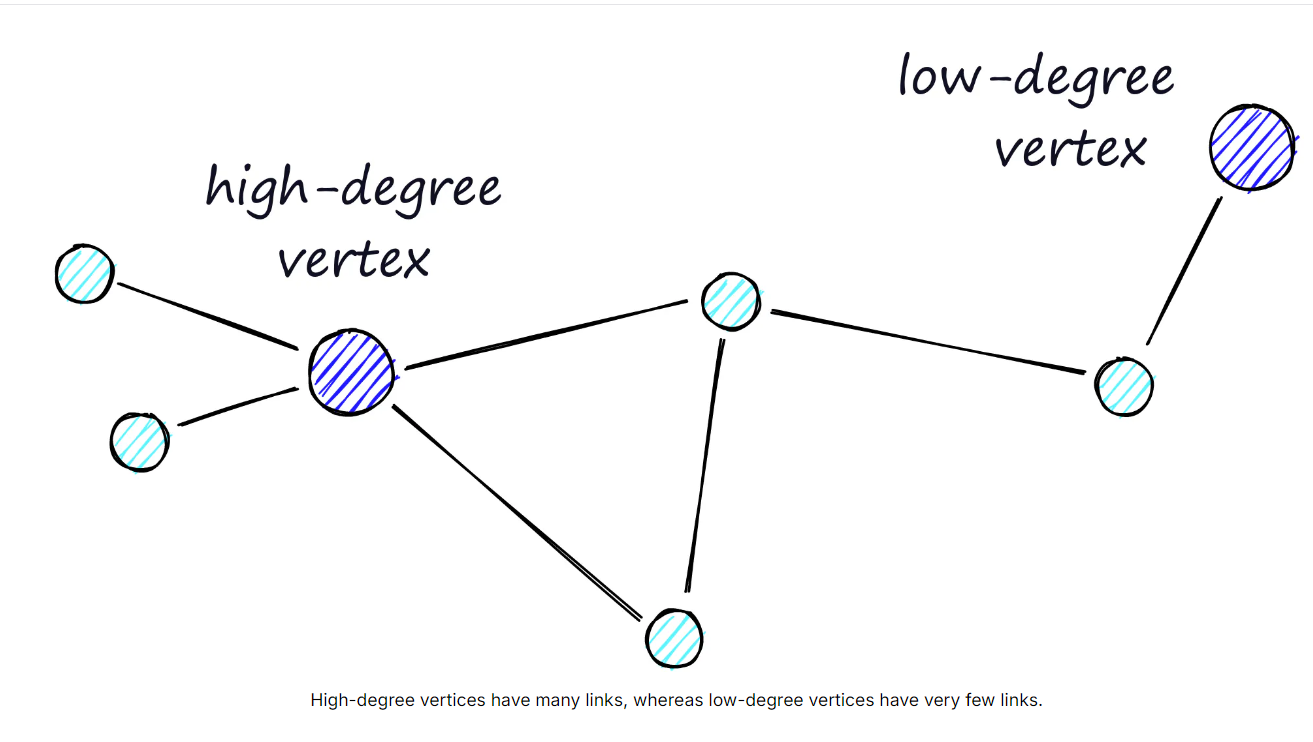

The routing (literally the route we take through the graph) consists of two phases. We start with the “zoom-out” phase where we pass through low-degree vertices (degree is the number of links a vertex has) — and the later “zoom-in” phase where we pass through higher-degree vertices

Hierarchical Navigable Small World

HNSW: As you might have guessed already it is the evolved version of NSW. And what is new?

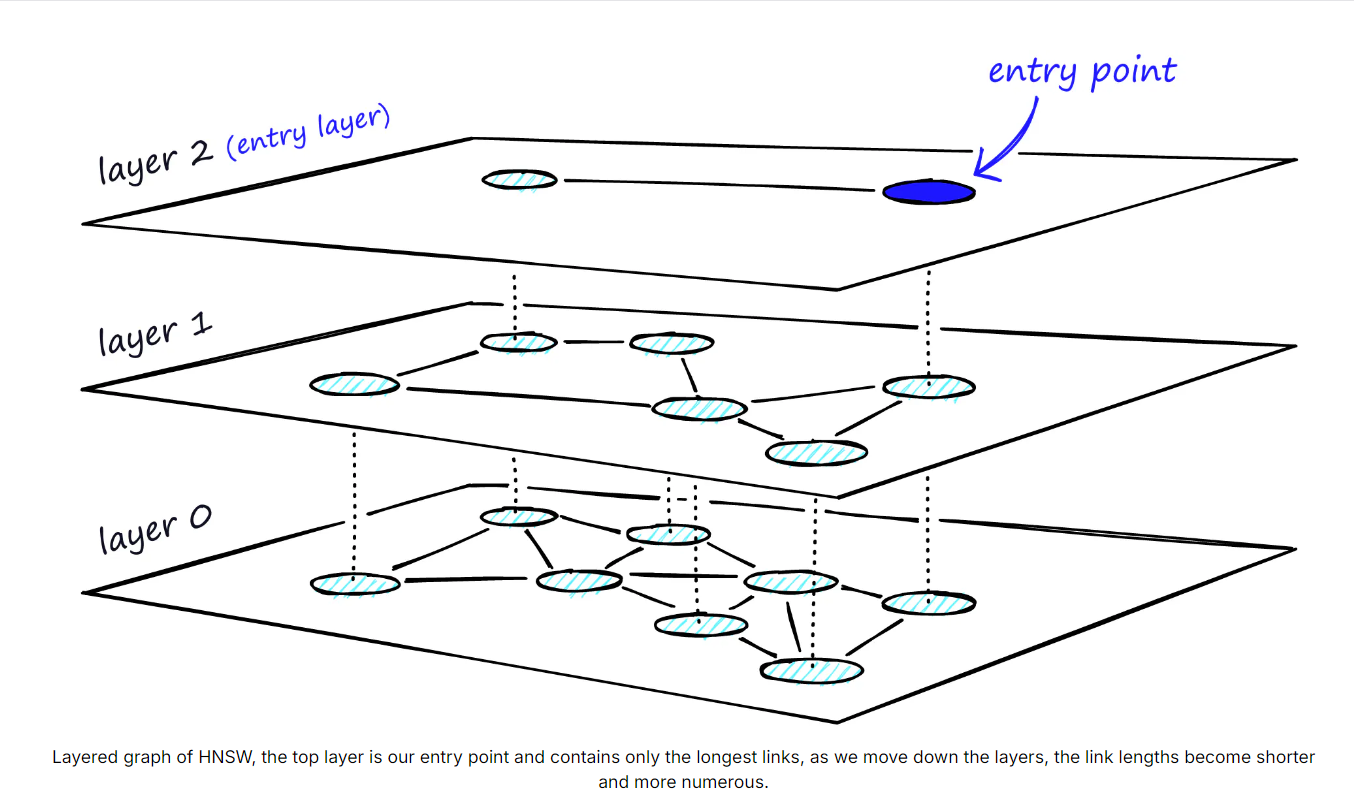

The similarity with ProbabilitySkipList. It is a combination of both NSW and SkipList.

During the search, we enter the top layer, where we find the longest links. These vertices will tend to be higher-degree vertices (with links separated across multiple layers), meaning that we, by default, start in the zoom-in phase described for NSW.

We traverse edges in each layer just as we did for NSW, greedily moving to the nearest vertex until we find a local minimum. Unlike NSW, at this point, we shift to the current vertex in a lower layer and begin searching again. We repeat this process until finding the local minimum of our bottom layer — layer 0.

If you are interested in learning more: https://www.pinecone.io/learn/series/faiss/hnsw/

This is the article I read as well as copied some(most IG) of the lines here from.

Subscribe to my newsletter

Read articles from Utkarsh Swaroop Shrivastava directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by