Modernize Your Document Management with Azure AI and Generative AI: Advance OCR, Intelligent Tagging, and NER

Pablo Salvador Lopez

Pablo Salvador Lopez

Is your company still manually processing millions of documents, classifying them by hand, or using outdated, inaccurate scripts to extract important information?

Or perhaps you are deep into your GenAI efforts and have built advanced knowledge bases, but now you're looking to integrate new data sources. What about the untagged or unprocessed documents? How do you efficiently categorize them and index the extracted data into your vector databases at scale?

We will explore the latest technologies, including advanced Optical Character Recognition (OCR) with Azure AI Document Intelligence and Named Entity Recognition (NER) using LLM solutions. After reading this post, you will be equipped to automate and enhance your document classification and entity extraction processes, boosting your knowledge bases and upstream applications. Let's get started! 🚀

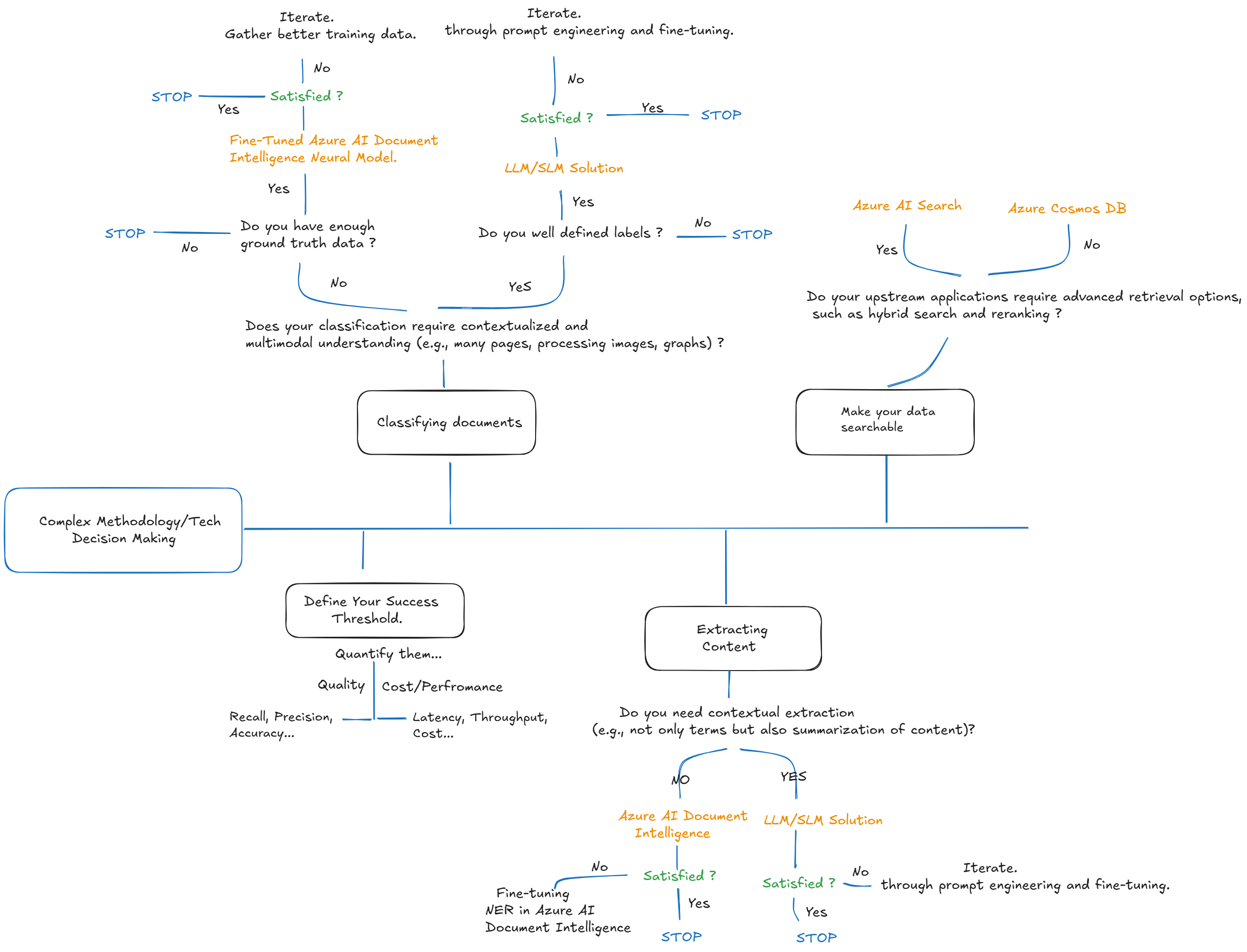

Technology-Driven Decision Making

In engineering decision-making, if there's one crucial factor that stands out, it is the choice of technology. Committing to a technology and spending months developing workarounds can either make or break your project. Therefore, it's essential to invest effort upfront in thorough testing, research, and selecting the right technology that meets your needs. As part of my development process, I put on my architect hat and create decision maps to explore how to align my needs with the right technology.

Let's break down my decision-making process. Our goal is to process untagged documents, whether they are from old archives or new documents like vendor bills, and extract key elements to make them searchable and vectorize the content for our semantic layer in our enterprise search or RAG systems.

To achieve this, we need to break the problem into three main stages: Document Classification, Content Extraction, and Making Data Searchable.

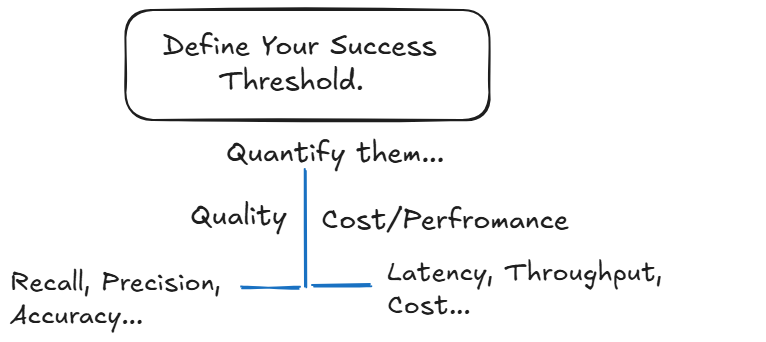

Define Your Threshold of Success

As you move into later stages of your project, establishing clear validation frameworks is crucial. Many companies struggle to compare each stage with their own criteria, which can lead to inefficiencies. To simplify this process, focus on two critical aspects: quality and performance.

For document classification, data scientists typically use metrics like F1 scores, recall, and precision in multi-class distributions. But don't overlook the performance of your system. What are your required latency and throughput levels? Additionally, consider the total cost of ownership and return on investment.

For example, LLM solutions might offer higher accuracy but can require up to two months of engineering effort for development and maintenance. On the other hand, a fine-tuned neural model from Document Intelligence can be set up in just 20 minutes and offers greater scalability for many solutions. The choice depends on your specific needs, but it’s essential to evaluate these quantitative metrics carefully.

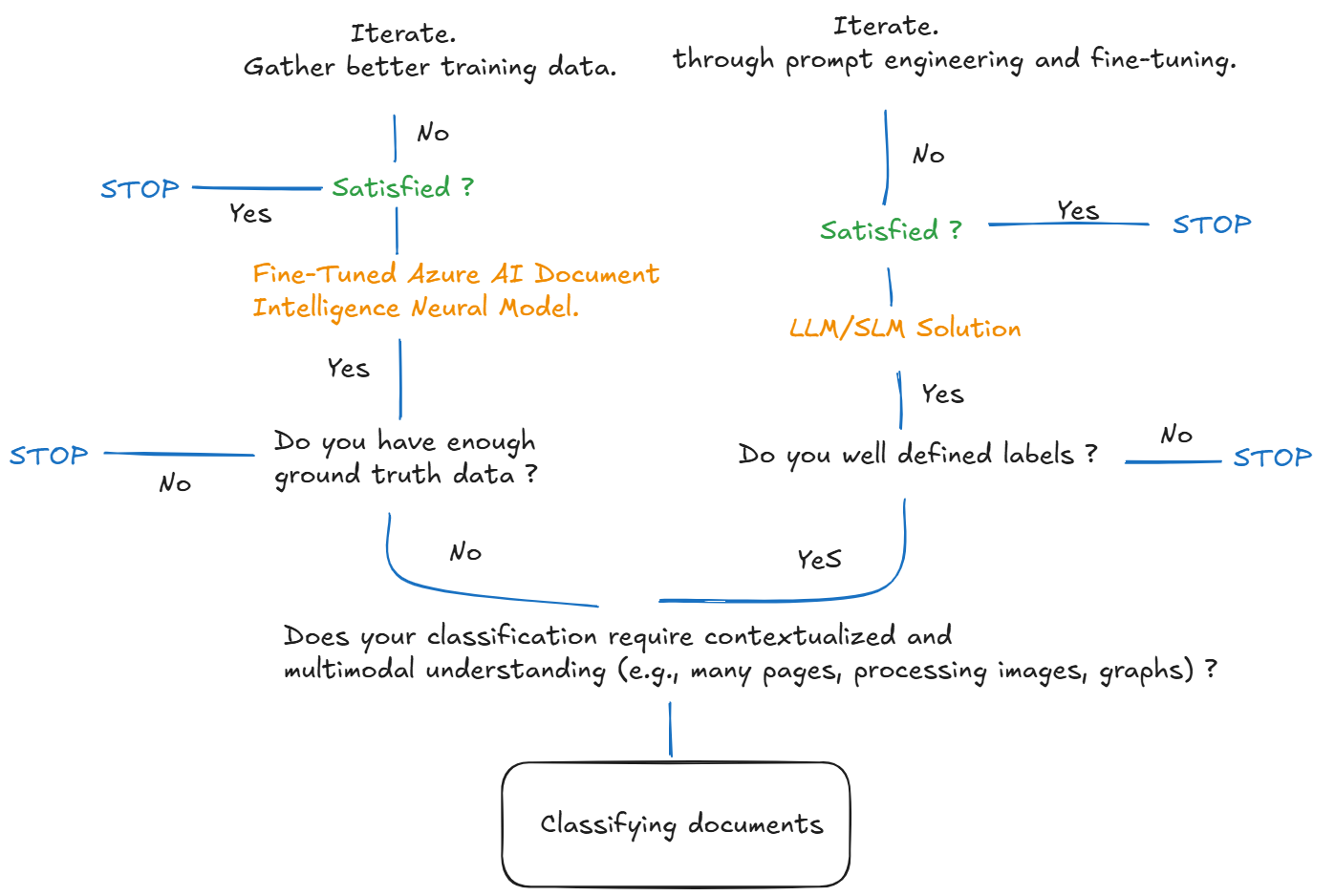

Document Classification

For document classification, you can use LLM/SLM technologies and Azure AI Document Intelligence.

LLM/SLM Approaches:

OCR + LLM (Text + LLM = Label) First, scan the document using OCR, then pass it to an LLM/SLM for extraction of the targeted information.

Utilize Multimodal Models (Image + LLM = Label) - Advanced multimodal models like GPT-4 Omni or phi-3 vision can directly accept images for classification.

Azure AI Document Intelligence:

- Azure AI Document Intelligence offers advanced OCR and Named Entity Recognition (NER) capabilities. For classification, it allows you to fine-tune pre-trained models within minutes, hosting them in the cloud and enabling inference at scale while customizing labels and classifications to fit your specific needs.

Choosing the best approach for your use case...

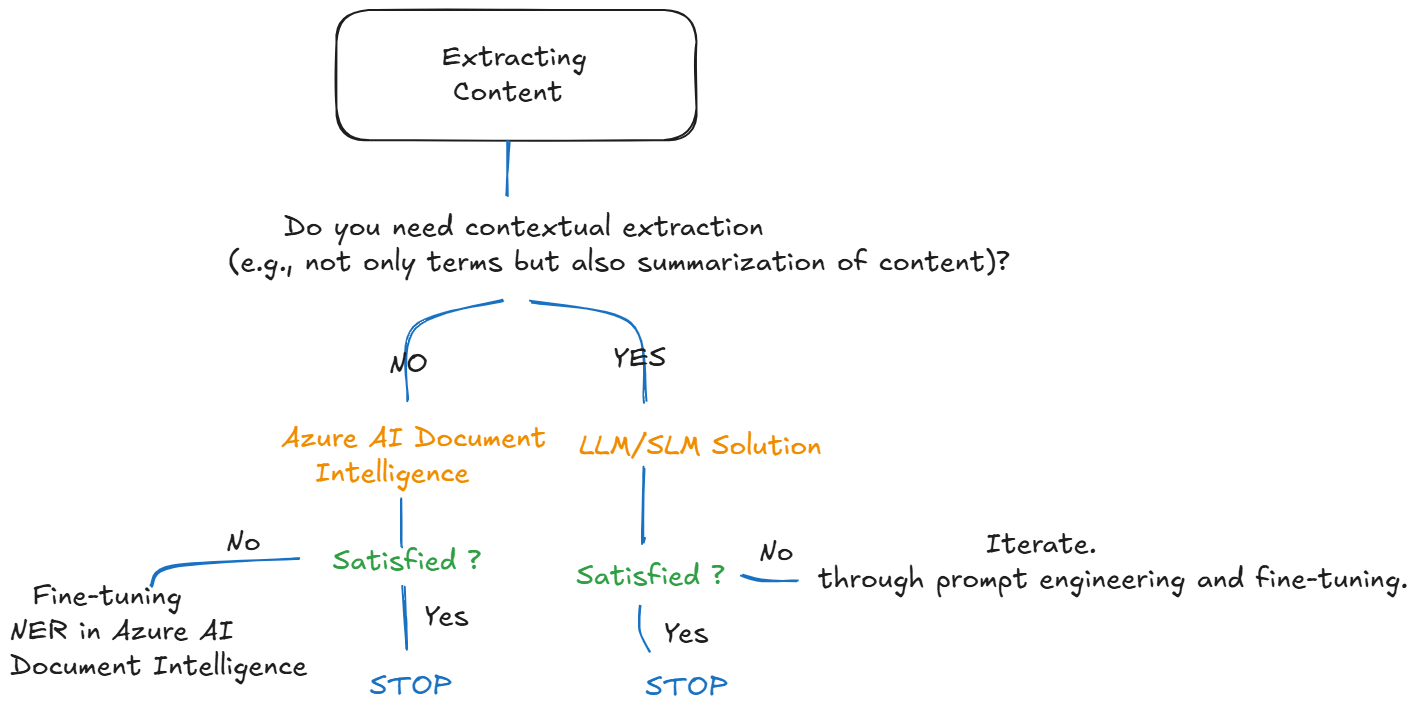

Content Extraction

For content extraction, the key factors are the complexity and variability of your document layouts. Azure Document Intelligence offers a state-of-the-art solution for OCR and provides a robust method using pre-trained neural network models to extract content (key-value pairs) from documents. You can also fine-tune these pre-trained models. However, LLMs provide a broader understanding layer that can adapt to different languages and document layouts, making accurate contextual assumptions that significantly aid in content extraction.

For example, if you want to extract vendor bill information such as {"price": "44556", "currency": "$"}, you can use pre-trained models like prebuilt-invoice or further fine-tune them in AI Document Intelligence. However, if contextual understanding and handling variability are critical due to the complexity and low quality of these bills, I found that LLMs offer superior performance in these areas, especially with prompt engineering and added flexibility.

Choosing the best approach for your use case...

LLM/SLM Approaches:

OCR + LLM (Text + LLM = NER) First, scan the document using OCR, then pass it to an LLM/SLM for extraction of the targeted information.

Utilize Multimodal Models (Image + LLM = NER) - Advanced multimodal models like GPT-4 Omni or phi-3 vision can directly accept images for classification.

Azure AI Document Intelligence:

- Azure AI Document Intelligence offers advanced OCR and Named Entity Recognition (NER) capabilities. For classification, it allows you to fine-tune pre-trained models within minutes, hosting them in the cloud and enabling inference at scale while customizing labels and classifications to fit your specific needs.

Make Your Data Searchable

The first two stages focus on processing the documents, such as understanding and extracting the data. Now, we want to make it searchable, discoverable, and accessible through natural language. This allows users to find what they need by simply asking, without having to search through SQL queries or document repositories.

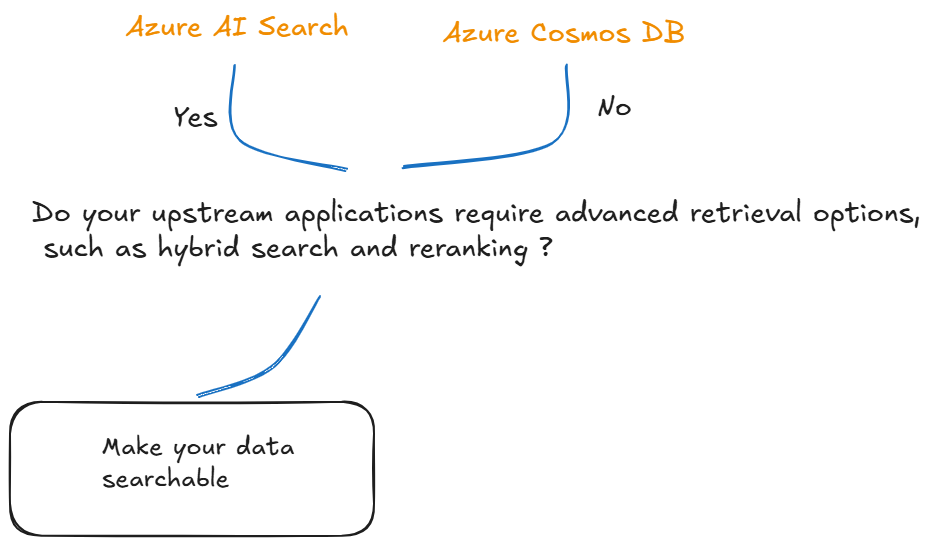

There are two strong options in Azure for vector databases—both allow you to index embeddings, making data available across your company for semantic queries and retrieval. My criteria here focus mostly on quality and the key differences between the two options: Azure AI Search's ability to enable hybrid search and what we call L2 ranking effectively within the out-of-the-box capabilities makes it a winner, especially for most RAG patterns. Not to minimize what Cosmos DB does for you, Cosmos DB can serve as your operational database and also support vector search on top of that. So, the choice depends on your current use case needs and in-house expertise, but both are excellent options.

Case Study: Making Your Enterprise Unlabeled Document Archives Searchable

Problem We Are Tackling

Enterprises often have millions of documents stored in their archives. These documents are usually unprocessed or manually processed, leading to inefficiencies and human error. Our goal is to categorize ("tag") these documents into 16 initial categories using fine-tuned neural document intelligence models. After classification, we will focus on the documents labeled as invoices and enable a chat with your invoices functionality. This involves tackling an OCR (Optical Character Recognition), classification, and NER (Named Entity Recognition) problem simultaneously, which will enable companies to tag their documentation, vectorize, and index it into a vector database, making their data searchable.

To be precise, we will be asking a specific question in natural language like What are the dates and total amounts of the invoices issued by Management Science Associates, Inc.? Please provide a brief summary of each invoice. If there are many invoices, summarize the top 5. If there is only one invoice, provide its details.

Our Solution

The Data

To illustrate our problem statement, we are using the RVL-CDIP dataset, which consists of 400,000 grayscale images in 16 classes, with 25,000 images per class. These images are from scanned documents. For this prototype, I selected 100 samples per class and split this into 70% for training and 30% for validation.

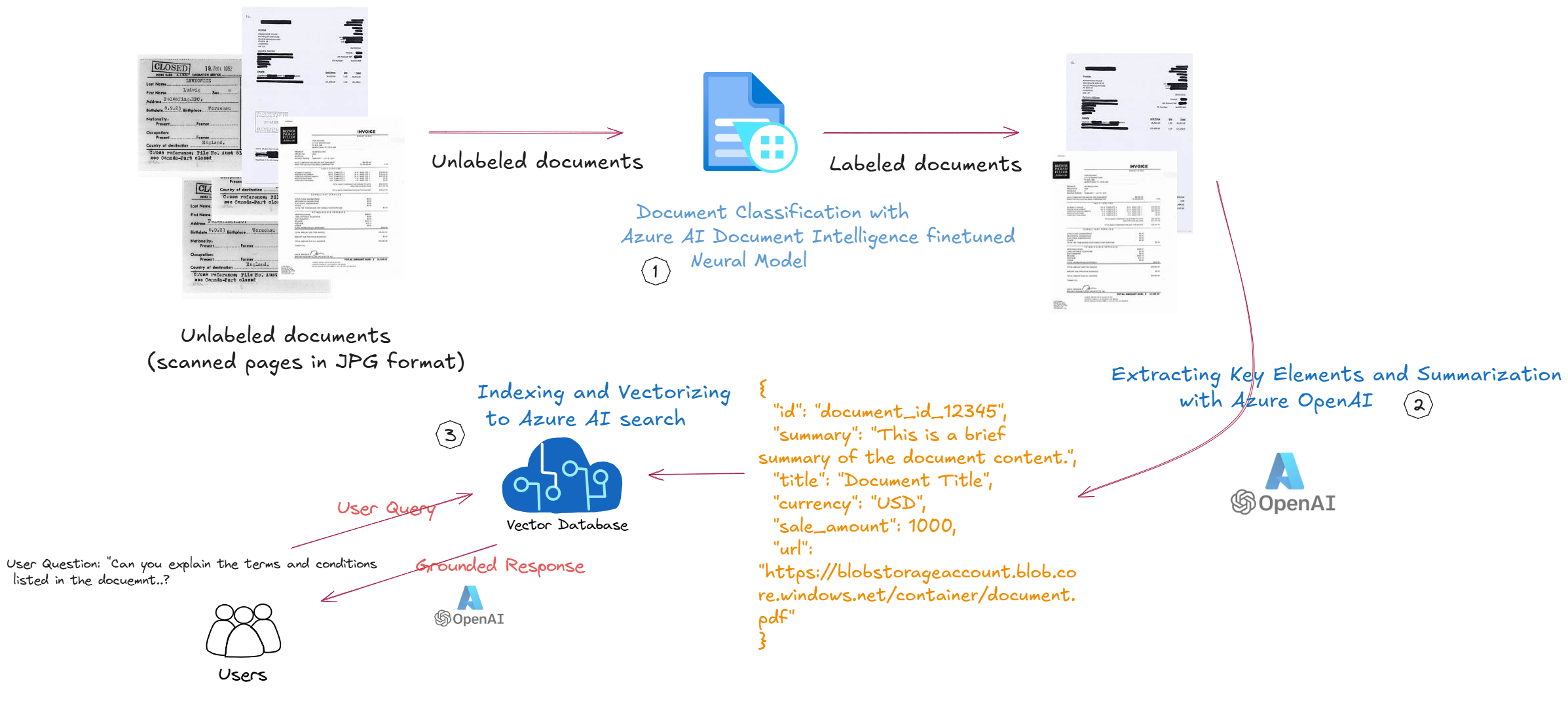

The Pipeline

- Document Classification: We classify documents into 16 categories by fine-tuning pre-trained neural models using Azure AI Document Intelligence.

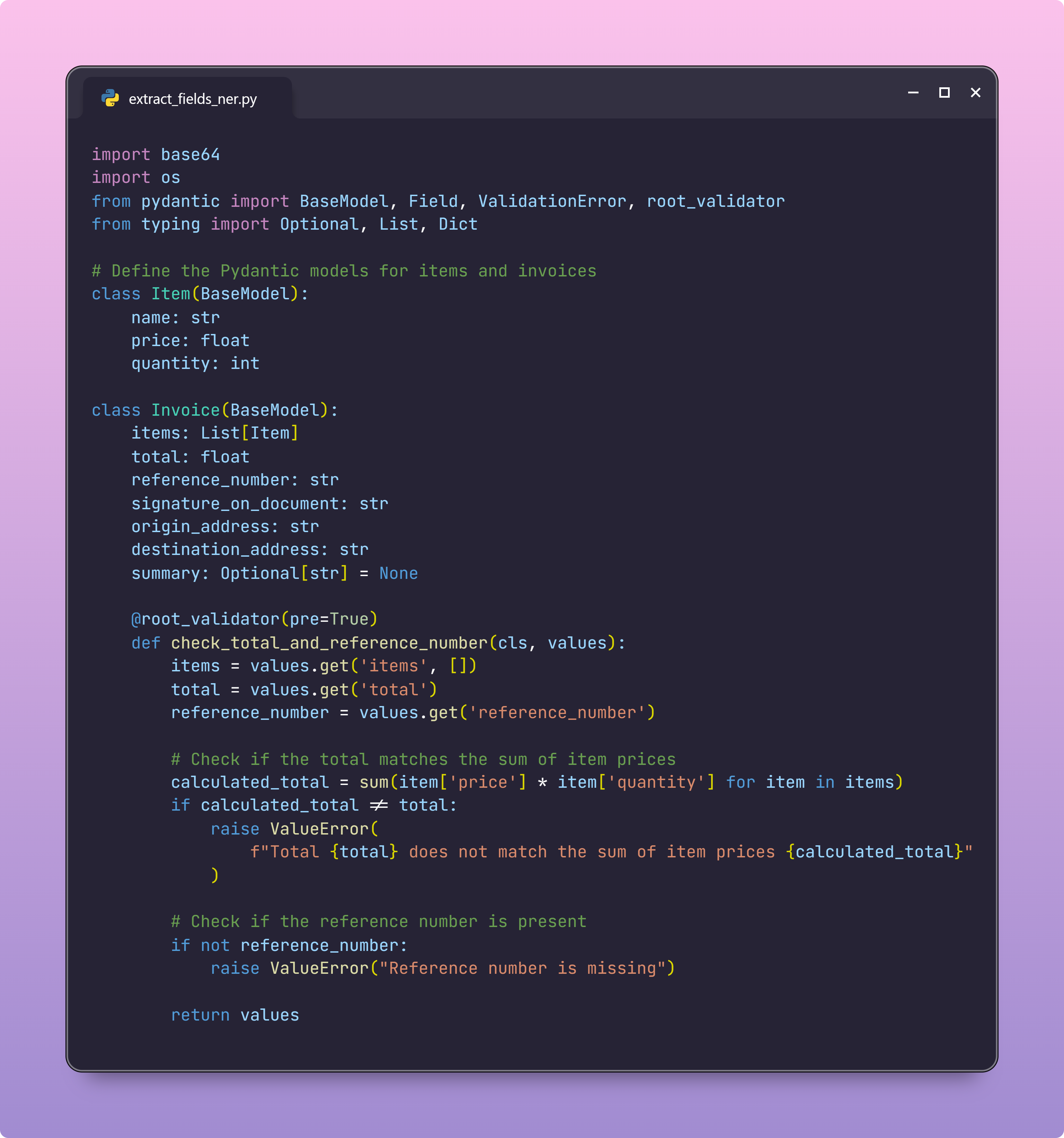

- Key Elements Extraction and Summarization: Extract and summarize key elements (NER) from documents classified as invoices using language models with multimodality capability for contextual entity extraction and summarization, enforcing proper validation and converting them into a structured format (JSON) with the support of the

pydanticandinstructorlibraries.

- Key Elements Extraction and Summarization: Extract and summarize key elements (NER) from documents classified as invoices using language models with multimodality capability for contextual entity extraction and summarization, enforcing proper validation and converting them into a structured format (JSON) with the support of the

- Data Indexing and Vectorization: We'll index and vectorize the JSON containing the key information and summarization per document into Azure AI Search, allowing your enterprise to query the documents in a "Bing-like" manner and make your previously unlabeled data searchable.

Document Classification with Fine-tuned Neural Models on Azure AI Document Intelligence

Why Fine-tuned Neural Models vs. LLM/SLM-based Approaches?

I chose fine-tuning pre-trained neural models on Azure AI Document Intelligence for their remarkable ability to combine layout and language features, leading to the accurate extraction of labeled fields from documents. These models are effective for both structured and semi-structured documents, making them ideal for my dataset of single-page scanned JPGs, which includes 16 types of documents such as invoices, receipts, and more. Fine-tuning was a clear choice given my rich training dataset that accurately represents my problem domain (population). Additionally, because of the nature of the data, I didn't need multi-page contextual information.

When to Consider LLM/SLM-based Solutions

Page Boundaries: If your documents span multiple pages and have values split across pages, LLM/SLM solutions can handle these better. For example, a contract split into several pages where key information is scattered would benefit from an LLM/SLM approach.

Unsupported Field Types: If your datasets contain unsupported field types for custom neural models, LLM/SLM solutions might offer more flexibility. For example, if you need to extract complex tables or uncommon field types, LLM/SLM models can be more adaptable.

Build Operations: Custom neural models are limited to 20 build operations per month, which can be a bottleneck if you need frequent retraining. For instance, continuously updating a model with new variations of purchase orders might require more frequent builds, making LLM/SLM a more viable option.

How-to: Multi-passed Approach

Training and Fine-tuning Process

Training and Fine-tuning Process

During this phase, the model undergoes a hosted fine-tuning process, improving its accuracy in classifying documents based on our own data (training set).

Best practices while building your training set:

Generalization: Custom neural models can generalize across different formats of a single document type, making them versatile for various document structures.

Representative Data: A diverse and representative training dataset enhances the model's ability to generalize and perform accurately across different document types.

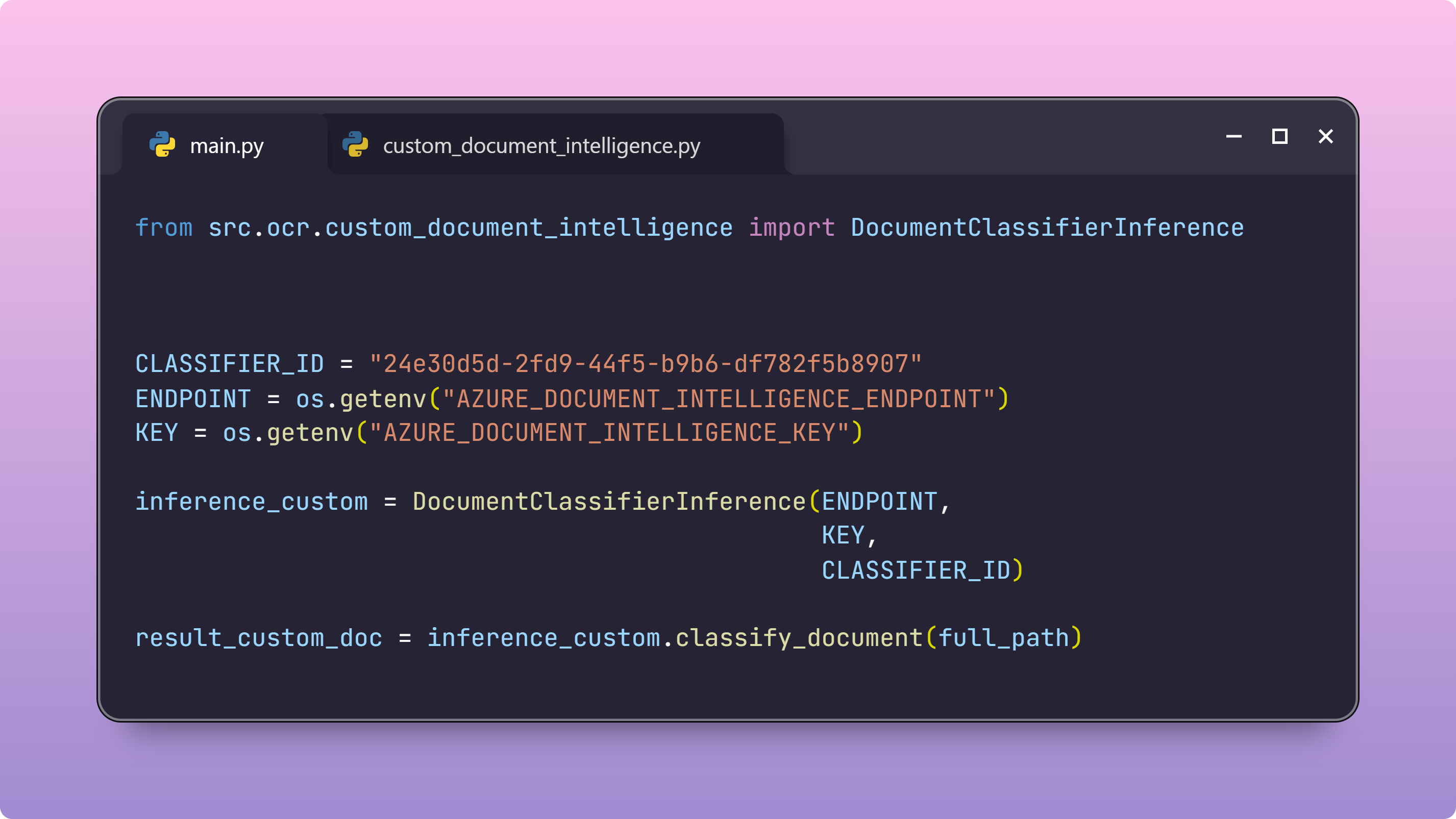

Inference Phase

Once trained, the model is deployed and hosted in Azure. The SDK simplifies integration into the inference pipeline, automating classification at scale. This phase enables efficient processing of large document volumes, ensuring consistent and accurate classification.

Continuous Training

This isn't a one-time exercise. By setting up a CI/CD/CT pipeline, the model's performance can be continuously improved, adapting to new data and document format variations.

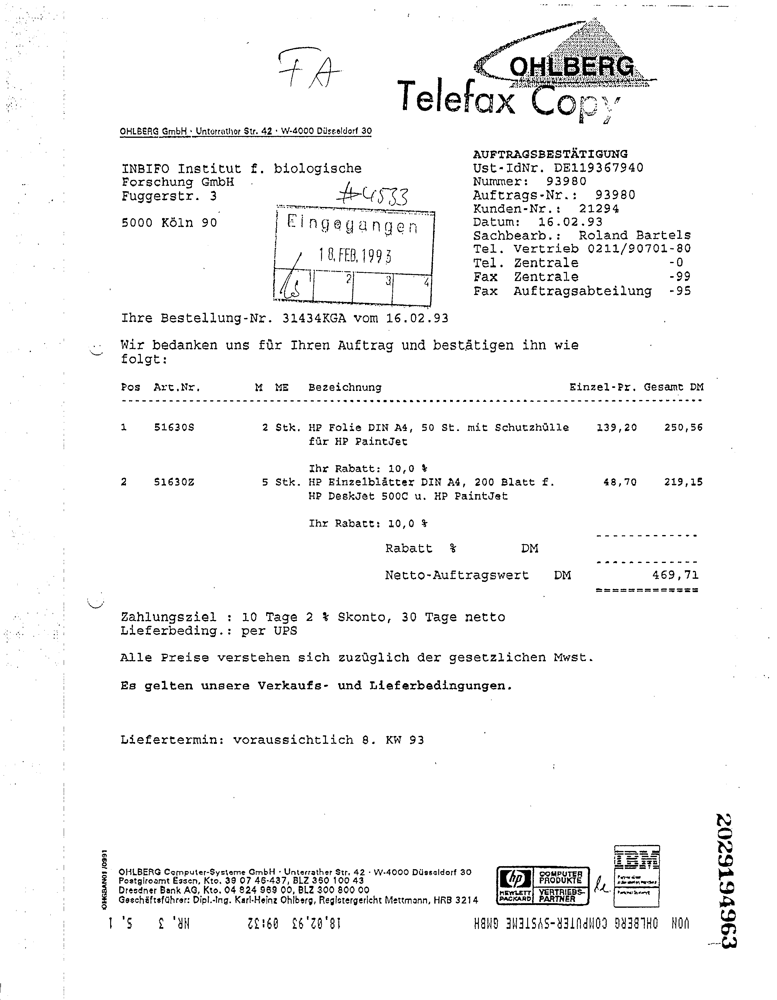

Extracting Key Elements and Summarization

As my data has very different structures, both between document types and within them, I found using an LLM approach to be the right choice for this use case. Through prompt engineering, I can identify terminology in various contexts and layouts. I am leveraging the multimodal capabilities of Azure OpenAI GPT-4-Omni to pass the labeled documents as inputs and extract the context I need, as well as perform summarization. Here is an example of an invoice.

Multi-passed Approach

NER - Extracting Key Information

We use the LLM to extract key-value pairs in context and ensure proper JSON parsing with

Pydanticand theinstrucutorlibrary to format the key labels correctly...

Summarizing Document

To go beyond key-value pair limitations, we generate summaries and apply a vectorization layer to enable semantic search. This adds a semantic understanding of the document, allowing us to search across traditional RAG patterns or other applications.

Take a look at the data extracted....

{

"id": "93980",

"content": "",

"content_vector": [],

"total": 469.71,

"reference_number": "93980",

"signature_on_document": "No signature present",

"origin_address": "OHLBERG GmbH, Unterrather Str. 42, W-4000 D\u00fcsseldorf 30",

"destination_address": "INBIFO Institut f. biologische Forschung GmbH, Fuggerstr. 3, 5000 K\u00f6ln 90",

"items_purchased": [

{

"list_item": "HP Folie DIN A4, 50 St. mit Schutzh\u00fclle f\u00fcr HP PaintJet, 125.28, 2"

},

{

"list_item": "HP Einzelbl\u00e4tter DIN A4, 200 Blatt f. HP DeskJet 500C u. HP PaintJet, 43.83, 5"

}

]

}

Making Our Documents Searchable

So now, we are in a point where we ahve laveeld and extarcted the ofnramtion we need form the dcuemnt . next stpeps is to egegnrtat ethe dneebeign and idnencin the ifnormnt tuont ona Idnexin in azure AI asear. To make our documents discoverable and enhance search capabilities, we index the data using embeddings. This process takes advantage of Azure AI Search's state-of-the-art features, such as hybrid search, which combines vector and BM25-type searches.

So, what does this mean? By using embeddings, we capture the semantic meaning of the documents, making it possible to understand the context of a query. At the same time, BM25-type search focuses on exact term matches. Together, these methods ensure that our searches are both accurate and relevant.

But that's not all. By indexing with embeddings, our documents can be found through natural language queries captuirititn the conewtatcyula meanai gin of ouw pahshres and utterances. This means users can ask questions in their own words and still find the right documents. Plus, you ca lavevrage the L2 re-ranker option in Azure AI Search further improves results by re-ranking them based on context and relevance.

In short, we're making our documents not just labeled and organized, but also easily discoverable and accessible through natural language. This way, users can efficiently find what they need by simply asking, without having to dig through piles of data.

Do you remember the original question?

What are the dates and total amounts of the invoices issued by Management Science Associates, Inc.? Please provide a brief summary of each invoice. If there are many invoices, summarize the top 5. If there is only one invoice, provide its details.

I asked this question using a classic search pattern with L2 re-ranker option in Azure AI Search for the data indexing and passing to the LLM, and this is the response I got:

Based on the provided context, there is only one invoice mentioned. Here are the details:

Date of Invoice: April 28, 1978

Total Amount: $3,296.00

Summary: The invoice is issued by Management Science Associates, Inc. to Mr. Don Fleming, Manager of Marketing Information & Analysis. It pertains to a Cigarette Research Audit conducted in February 1978, which includes an estimate of cigarette brand shares by Brown & Williamson Trading Areas, Departments, and U.S. Total. The invoice is signed by Patrick L. Flannery, and the payment terms are net 10 days.

Impressive progress! From raw scanned documents, we've constructed a comprehensive invoice knowledge base, enabling advanced access to vectorized text and key data elements for your applications. The possibilities are vast and ready to be explored. Happy coding! 🎉

Did you find it interesting? Subscribe to receive automatic alerts when I publish new articles and explore different series.

More quick how-to's in this series here: 📚🔧 Azure AI Practitioner: Tips and Hacks 💡

Explore my insights and key learnings on implementing Generative AI software systems in the world's largest enterprises. GenAI in Production 🧠

Join me to explore and analyze advancements in our industry shaping the future, from my personal corner and expertise in enterprise AI engineering. AI That Matters: My Take on New Developments 🌟

And... let's connect! We are one message away from learning from each other!

🔗 LinkedIn: Let’s get linked!

🧑🏻💻GitHub: See what I am building.

Subscribe to my newsletter

Read articles from Pablo Salvador Lopez directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Pablo Salvador Lopez

Pablo Salvador Lopez

As a seasoned engineer with extensive experience in AI and machine learning, I possess a blend of skills in full-stack data science, machine learning, and software engineering, complemented by a solid foundation in mathematics. My expertise lies in designing, deploying, and monitoring GenAI & ML enterprise applications at scale, adhering to MLOps/LLMOps and best practices in software engineering. At Microsoft, as part of the AI Global Black Belt team, I empower the world's largest enterprises with cutting-edge AI and machine learning solutions. I love to write and share with the AI community in an open-source setting, believing that the best part of our work is accelerating the AI revolution and contributing to the democratization of knowledge. I'm here to contribute my two cents and share my insights on my AI journey in production environments at large scale. Thank you for reading!