Reinforcement Learning for dynamic load balancing in Multi-Cloud environments

Subhanshu Mohan Gupta

In today’s digital landscape, enterprises are increasingly adopting multi-cloud strategies to optimize performance, cost, and resilience. However, managing workloads across multiple cloud providers such as AWS, Azure, and Google Cloud presents significant challenges. Traditional load balancing techniques often fall short in these complex environments, where traffic patterns and resource availability are constantly changing.

Reinforcement Learning (RL) offers a promising alternative. By enabling systems to learn from their environment and adapt in real time, RL can distribute workloads dynamically across multiple cloud platforms, improving both resilience and cost-efficiency.

The Challenge: Balancing Workloads in Multi-Cloud Environments

Imagine a global retail giant that operates an online platform serving millions of customers worldwide. The company uses AWS for robust compute services, Azure for AI and analytics, and Google Cloud for efficient data storage. During peak shopping seasons like Black Friday, the platform experiences massive spikes in traffic. Ensuring smooth performance and avoiding downtime during these periods is critical.

Traditionally, the company would rely on static rules or manual adjustments to balance the load across different cloud providers. However, these approaches often lead to over-provisioning on one cloud while under-utilizing another, resulting in suboptimal performance and higher costs.

Enter Reinforcement Learning (RL)

Reinforcement Learning is a type of machine learning where an agent learns to make decisions by interacting with its environment and receiving feedback in the form of rewards or penalties. In the context of dynamic load balancing, the RL agent's environment consists of the multi-cloud infrastructure, where it observes factors like workload demand, server capacity, and costs associated with each cloud provider.

The RL agent's goal is to learn a policy that maximizes its cumulative reward, where the reward combines factors such as lower cost, better performance, and balanced resource utilization. Over time, the agent learns to anticipate changes in the environment and proactively adjust the distribution of workloads across the different cloud platforms.

Implementation: Building an RL-Driven Load Balancer

Defining the RL Agent’s Environment -

State: The current status of each cloud provider (e.g., CPU usage, memory, costs).

Actions: Possible moves the RL agent can make (e.g., shifting workloads from AWS to Azure).

Rewards: The goals the agent aims to achieve (e.g., minimizing costs, maximizing performance).
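To make the three definitions above concrete, here is a minimal sketch of how the state and action space might be represented in Python. The provider identifiers, the choice of three metrics per provider, and the fixed 10% step size are all assumptions made for illustration; a real system would pick whatever metrics and granularity fit its workloads.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

PROVIDERS = ("aws", "azure", "gcp")  # hypothetical identifiers

@dataclass(frozen=True)
class ProviderState:
    cpu_util: float       # fraction of CPU in use, 0.0 to 1.0
    mem_util: float       # fraction of memory in use, 0.0 to 1.0
    cost_per_hour: float  # illustrative unit cost in USD

@dataclass(frozen=True)
class Action:
    source: str      # provider to take traffic from
    target: str      # provider to send traffic to
    fraction: float  # share of total traffic to move

def encode_state(metrics: Dict[str, ProviderState]) -> Tuple[float, ...]:
    """Flatten per-provider metrics into one fixed-order feature vector."""
    features: List[float] = []
    for name in PROVIDERS:
        s = metrics[name]
        features.extend([s.cpu_util, s.mem_util, s.cost_per_hour])
    return tuple(features)

def enumerate_actions(step: float = 0.1) -> List[Optional[Action]]:
    """Every 'move `step` of traffic from X to Y' option, plus a no-op (None)."""
    actions: List[Optional[Action]] = [None]
    for src in PROVIDERS:
        for dst in PROVIDERS:
            if src != dst:
                actions.append(Action(src, dst, step))
    return actions
```

With three providers this yields a nine-value state vector and seven discrete actions (six moves plus "do nothing"), which keeps the action space small enough for a value-based method.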

Designing the RL Algorithm -

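Algorithm: A Deep Q-Network (DQN) is a reasonable fit when the actions are discrete (e.g., move a fixed share of traffic from one provider to another). The DQN takes the current state (real-time data from the cloud providers) as input and estimates a value for each possible action; the agent then picks the action with the highest estimate (e.g., redistribute workloads).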

Reward Function: A formula that combines cost savings, response time improvements, and load balancing efficiency.
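As a rough illustration of the two pieces above, the sketch below pairs a weighted reward function with a tabular Q-learning update. Tabular Q-learning is used here only as a simplified stand-in: a DQN replaces the lookup table with a neural network but trains toward the same kind of target. The weights, the metric names, and the hyperparameters are assumptions, not values from a real deployment.

```python
import random
from collections import defaultdict

def reward(cost_usd, latency_ms, utilizations,
           w_cost=1.0, w_latency=0.01, w_balance=1.0):
    """Higher is better: penalize spend, latency, and uneven utilization."""
    mean_util = sum(utilizations) / len(utilizations)
    imbalance = max(abs(u - mean_util) for u in utilizations)
    return -(w_cost * cost_usd + w_latency * latency_ms + w_balance * imbalance)

class QLearner:
    """Tabular Q-learning with epsilon-greedy exploration."""

    def __init__(self, n_actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.n_actions = n_actions
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor for future rewards
        self.epsilon = epsilon  # probability of trying a random action
        self.rng = random.Random(seed)
        self.q = defaultdict(lambda: [0.0] * n_actions)

    def act(self, state):
        """Explore with probability epsilon, otherwise pick the best-known action."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = self.q[state]
        return values.index(max(values))

    def update(self, state, action, r, next_state):
        """Move Q(state, action) toward r + gamma * max_a Q(next_state, a)."""
        target = r + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])
```

States must be hashable here (for example, a tuple of bucketed metrics). The relative size of `w_cost`, `w_latency`, and `w_balance` is where business priorities enter: raising one weight makes the agent trade the other two away more readily.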

Building the RL Model -

Framework: Use TensorFlow or PyTorch to develop the RL model.

Neural Network: The model processes input data from AWS, Azure, and Google Cloud to determine optimal actions.
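The shape of such a network can be sketched without any framework. The pure-Python example below is only a stand-in for a model you would normally build in TensorFlow or PyTorch: it has random, untrained weights and no training step, and exists to show the mapping from a metrics vector to one Q-value per action. The sizes (nine input features, sixteen hidden units, seven actions) are assumptions chosen for illustration.

```python
import random

def make_layer(n_in, n_out, rng):
    """Create a dense layer with small random weights and zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense(x, layer, relu):
    """Apply weights * x + bias, optionally followed by a ReLU."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b
           for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out

class TinyQNetwork:
    """Two-layer network: metrics vector in, one Q-value per action out."""

    def __init__(self, n_features=9, n_hidden=16, n_actions=7, seed=0):
        rng = random.Random(seed)
        self.hidden = make_layer(n_features, n_hidden, rng)
        self.output = make_layer(n_hidden, n_actions, rng)

    def q_values(self, features):
        return dense(dense(features, self.hidden, relu=True), self.output, relu=False)

    def best_action(self, features):
        """Index of the action with the highest estimated value."""
        q = self.q_values(features)
        return q.index(max(q))
```

In practice the inputs should be normalized to comparable ranges (for example, utilization as a fraction and cost scaled to roughly 0 to 1) so that no single metric dominates the network's output.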

Integrating with the Load Balancer -

API Integration: The RL model communicates with your load balancer (e.g., Traefik, HAProxy) via APIs.

Feedback Loop: The load balancer provides performance metrics back to the RL agent for continuous learning.
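One way to picture the integration is as a translation step: the agent's decision becomes a new traffic split, and the split becomes weight updates for the load balancer. The sketch below assumes HAProxy and builds `set weight <backend>/<server> <weight>` strings, which is a command of the HAProxy Runtime API; the backend and server names are hypothetical, and the code deliberately does not send anything. Check the command against the documentation for your HAProxy version, and note that Traefik is configured differently.

```python
from typing import Dict, List

BACKEND = "shop_backend"  # hypothetical HAProxy backend name
SERVERS = {"aws": "aws_pool", "azure": "azure_pool", "gcp": "gcp_pool"}  # hypothetical

def shift_traffic(split: Dict[str, float], source: str, target: str,
                  fraction: float) -> Dict[str, float]:
    """Move `fraction` of total traffic from source to target, never going below zero."""
    moved = min(fraction, split[source])
    new_split = dict(split)
    new_split[source] -= moved
    new_split[target] += moved
    return new_split

def haproxy_commands(split: Dict[str, float], scale: int = 100) -> List[str]:
    """Render a traffic split as HAProxy Runtime API weight commands."""
    return [
        f"set weight {BACKEND}/{SERVERS[name]} {round(share * scale)}"
        for name, share in split.items()
    ]

if __name__ == "__main__":
    current = {"aws": 0.6, "azure": 0.2, "gcp": 0.2}
    for cmd in haproxy_commands(shift_traffic(current, "aws", "azure", 0.2)):
        print(cmd)
```

The feedback half of the loop runs in the opposite direction: after the weights change, latency, error rate, and utilization are read back and turned into the reward the agent learns from.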

Deployment and Testing -

Simulated Traffic: Test the RL agent with scenarios mimicking peak traffic (e.g., Black Friday).

Monitoring: Use tools like Prometheus and Grafana to visualize the agent’s decisions and their impact.

Iterative Training: Continuously train the model with new data to improve performance.
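For the simulated-traffic step, a simple synthetic load curve is often enough to get started. The sketch below generates a baseline request rate with a single bell-shaped surge and some random noise. The numbers (1,000 requests per second at baseline, a 5x peak) are made up for illustration and are not measurements from any real Black Friday event.

```python
import math
import random

def simulated_traffic(minutes, base_rps=1000.0, peak_multiplier=5.0,
                      peak_at=None, peak_width=30.0, noise=0.05, seed=0):
    """Return one requests-per-second value per minute, with a single surge.

    The surge is a Gaussian bump centered at `peak_at` (default: midpoint)
    whose top reaches base_rps * peak_multiplier before noise is applied.
    """
    rng = random.Random(seed)
    center = minutes / 2 if peak_at is None else peak_at
    series = []
    for t in range(minutes):
        bump = math.exp(-((t - center) ** 2) / (2 * peak_width ** 2))
        level = base_rps * (1.0 + (peak_multiplier - 1.0) * bump)
        series.append(level * (1.0 + rng.uniform(-noise, noise)))
    return series
```

Replaying the same seed gives the same curve, which makes it easier to compare two versions of the agent on identical traffic before exposing either to production load.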

Fine-Tuning and Optimization -

Reward Function Adjustments: Modify the reward function to align with business priorities, such as cost or performance.

Model Retraining: Periodically retrain the model to adapt to new patterns.

Real-World Example: Black Friday Traffic Management

During Black Friday, our retail giant experiences a surge in traffic. The RL agent detects that AWS servers are nearing capacity and predicts that continuing to rely on AWS alone could lead to latency issues and higher costs. It dynamically shifts 20% of the workload to Azure, which has underutilized resources and offers a temporary discount on compute services. As a result, the company maintains optimal performance and reduces costs, ensuring a seamless shopping experience for customers.

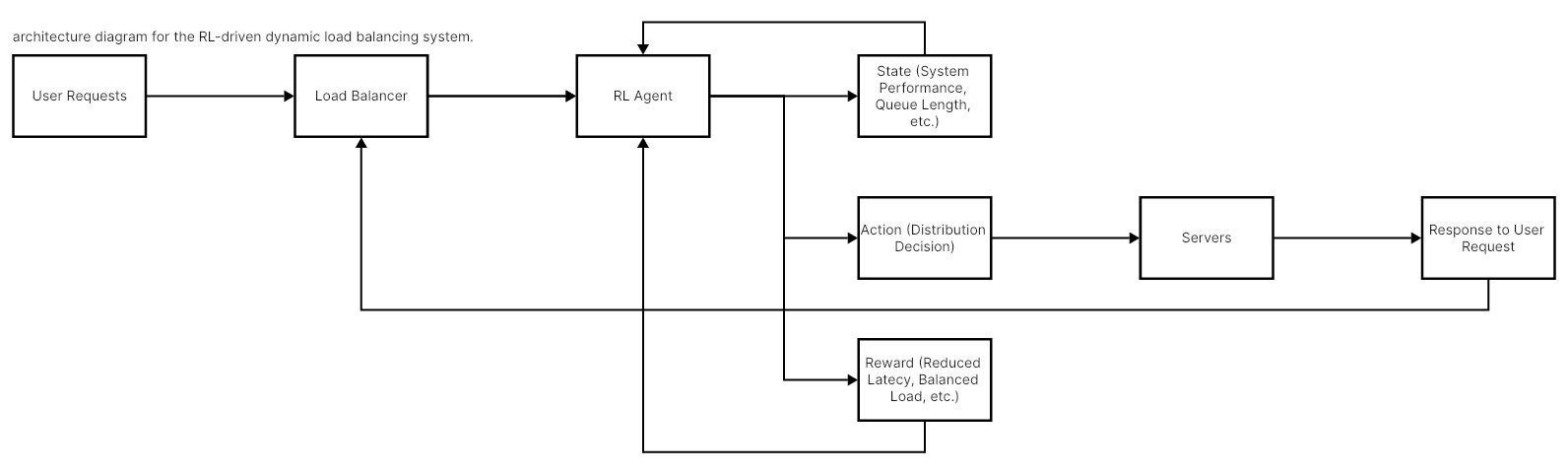

Here’s what the architecture looks like:

Explanation:

Traffic Ingress: User traffic enters the system via a load balancer.

Real-Time Monitoring: Metrics from each cloud provider are collected and fed into the RL agent.

RL Agent: The RL model processes the data, predicts optimal actions, and sends commands to the load balancer.

Action Execution: The load balancer dynamically shifts workloads based on the RL agent’s recommendations.

Feedback Loop: The system continuously monitors performance and adjusts the RL model as necessary.
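The five stages above amount to a single control loop. The sketch below shows that loop in skeleton form, with each stage passed in as a function so that the monitoring source, the agent, and the load balancer can be swapped independently. All of the parameter names are hypothetical, and a production loop would also need timeouts, error handling, and safety limits on how much traffic can move per step.

```python
from typing import Any, Callable, List, Tuple

def control_loop(
    observe: Callable[[], Any],                    # real-time monitoring
    decide: Callable[[Any], Any],                  # RL agent picks an action
    apply_action: Callable[[Any], None],           # load balancer executes it
    score: Callable[[Any], float],                 # reward from observed metrics
    learn: Callable[[Any, Any, float, Any], None], # feedback into the model
    steps: int,
) -> List[Tuple[Any, Any, float]]:
    """Run observe -> decide -> act -> score -> learn for a fixed number of steps."""
    history = []
    state = observe()
    for _ in range(steps):
        action = decide(state)
        apply_action(action)
        next_state = observe()         # metrics after the change took effect
        r = score(next_state)
        learn(state, action, r, next_state)
        history.append((state, action, r))
        state = next_state
    return history
```

During early testing, `decide` can be a plain rule (for instance, "shift when utilization exceeds a threshold") and `learn` a no-op; this exercises the plumbing end to end before a trained agent is put in charge of the decisions.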

Conclusion: The Future of Cloud Load Balancing

Reinforcement Learning offers a powerful tool for dynamic load balancing in multi-cloud environments. By enabling systems to learn and adapt in real time, RL can improve resilience and performance while also reducing costs, which makes it a valuable component for enterprises managing complex cloud architectures.

For organizations looking to stay ahead of the curve, exploring RL-driven load balancing is more than an option; it is a strategic move towards a resilient, cost-effective, and intelligent cloud infrastructure.
