Dynamic Credentials with HashiCorp Vault

Karim El Jamali

The Hassle of Managing Secrets

It was around 3:15 AM when my pager buzzed for the first time ever, jolting me awake from sleep. At that time, I was working as a Technical Account Manager at AWS, and the situation was urgent—a developer had mistakenly checked in AWS credentials to GitHub. Recently, I started going through an excellent course by Bryan Krausen on HashiCorp Vault, which connects directly to that issue and inspired me to write this post.

You can think of HashiCorp Vault as a safe for your digital secrets. At a high level, it stores your secrets securely and can generate dynamic secrets on request, which are the focus of today's post, all with granular access control and a detailed audit trail. It acts as a one-stop shop for credentials and secrets: a front end through which all clients (users, developers, machines) request access to those secrets.

Figure 1. Hassle of Managing Secrets

Dynamic Secrets

You can think of Vault as an API Gateway servicing all your credential and secret requests, bringing a unified experience & a common methodology on secrets management. But what are Dynamic Secrets?

Dynamic secrets are short-lived keys or credentials. Instead of giving out a permanent password or API key, Vault generates or requests a new one whenever you need it, and it expires automatically after a set period. A few reasons this might be very beneficial:

Better Security: If someone gets hold of a key, it won't be useful for long, which keeps your data safer. Credentials can last for only a few minutes, so a leaked credential does far less damage because it will most likely have expired before it can be misused. When employees leave the company, you don't have to worry about static, long-lived credentials they might still hold. In addition, all secrets are encrypted via multiple layers of encryption keys.

On-Demand Credentials: Credentials exist only when you need them. If you need to assume a particular AWS role, you ask Vault to generate short-lived AWS credentials for that specific task.
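To make the idea of an expiring credential concrete, here is a minimal Python sketch. The `Lease` class is a toy model invented for illustration and is not how Vault stores leases internally; it only shows that a credential with a TTL stops being valid once the clock passes its expiry time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Lease:
    """Toy model of a short-lived credential (hypothetical, not Vault's real structure)."""
    issued_at: datetime
    ttl: timedelta

    @property
    def expires_at(self) -> datetime:
        return self.issued_at + self.ttl

    def is_valid(self, now: datetime) -> bool:
        # The credential is usable only while the current time is before expiry.
        return now < self.expires_at


issued = datetime(2024, 8, 16, 3, 0, 59, tzinfo=timezone.utc)
lease = Lease(issued_at=issued, ttl=timedelta(minutes=15))

print(lease.is_valid(issued + timedelta(minutes=5)))   # True
print(lease.is_valid(issued + timedelta(minutes=16)))  # False
```

A leaked copy of such a credential is only a risk inside that 15-minute window, which is the security argument made above.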

High Level Overview

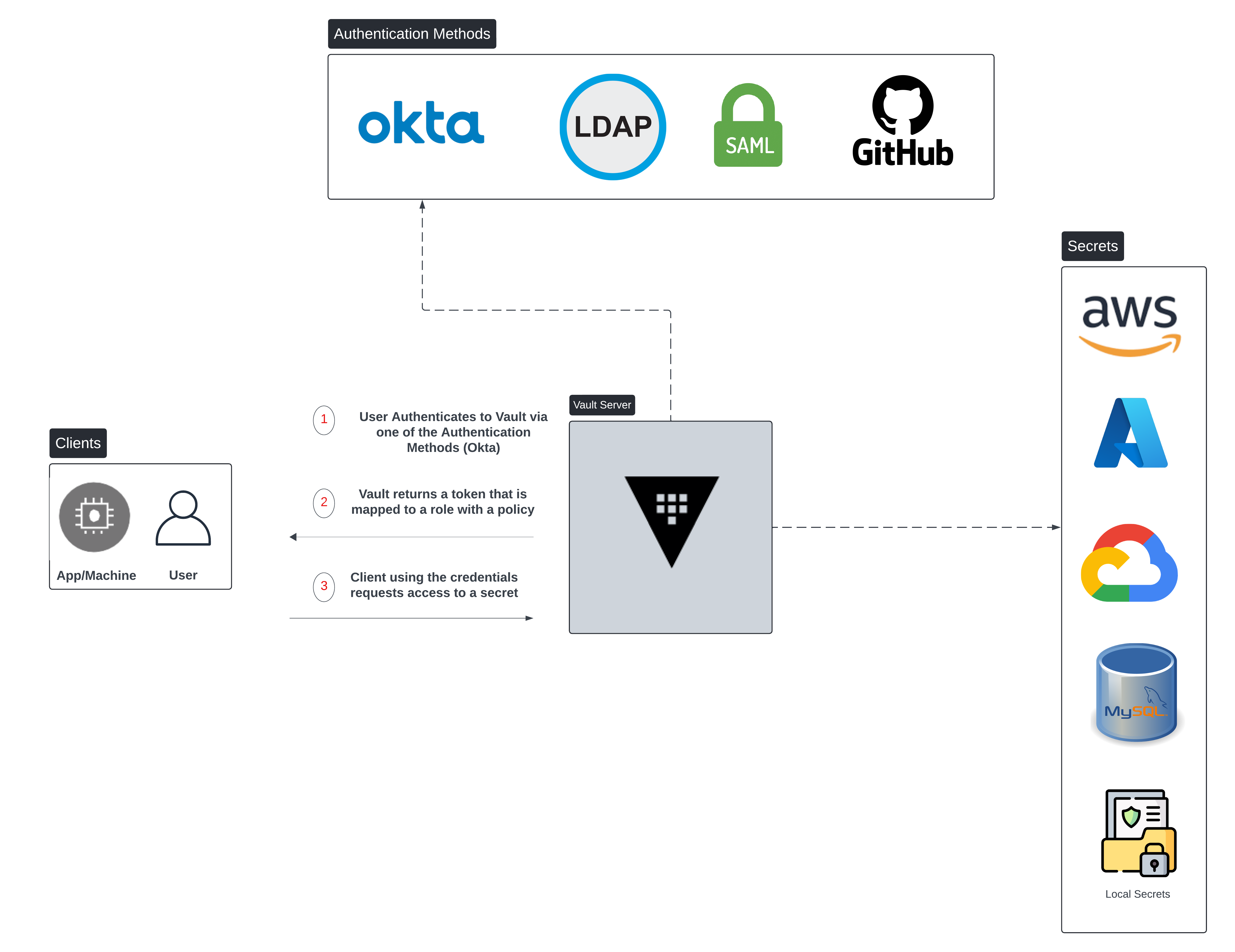

At a high level Vault works as follows:

1. Clients (machines or users) first need to authenticate to Vault. This step is critical because it determines which secrets the client can access or request. Authentication can happen via multiple methods, some of which are shown in Figure 2, and through either the CLI or the UI. In this example, we will use Okta as our authentication method.

2. When Okta successfully authenticates the client, it returns the Okta group to which the user belongs (e.g., IT, SecOps). Vault uses this group information to assign the user a policy. This policy authorizes the user or machine to, for instance, authenticate to AWS (a simplified example).

3. Now that Vault has identified the user's group and policy, it returns a token mapped to the policy discussed in step 2. The objective of the whole authentication process is to obtain a token that is constrained by an associated policy.

4. Finally, the client can request credentials against a particular AWS role: Vault issues an AssumeRole request against that role and receives dynamic, time-bound AWS credentials (access key, secret key, session token). AWS is just an example here; it could be a full multi-cloud environment, local secrets, Kubernetes, databases, and much more. The full list of supported secret engines can be found here.
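The four stages above can be sketched as a small in-memory model. Everything here is hypothetical: the group names, the `login` and `request_credentials` helpers, and the placeholder credentials are made up for illustration, and each policy is reduced to the name of the single role it allows. Real Vault delegates authentication to the IdP and calls AWS STS instead of returning constants.

```python
import secrets

# Hypothetical mapping of IdP groups to the roles their policies allow.
GROUP_POLICIES = {
    "IT": ["s3-readonly"],
    "SecOps": ["s3-readonly", "s3-readwrite"],
}

# Tokens issued so far: token string -> list of allowed roles.
ISSUED_TOKENS: dict[str, list[str]] = {}


def login(idp_groups: list[str]) -> str:
    """Stages 1-3: the IdP has vouched for the user; issue a policy-bound token."""
    policies = sorted({p for g in idp_groups for p in GROUP_POLICIES.get(g, [])})
    if not policies:
        raise PermissionError("no Vault policy mapped to the user's groups")
    token = secrets.token_urlsafe(16)
    ISSUED_TOKENS[token] = policies
    return token


def request_credentials(token: str, role: str) -> dict[str, str]:
    """Stage 4: return placeholder credentials only if the token allows the role."""
    if role not in ISSUED_TOKENS.get(token, []):
        raise PermissionError(f"token is not allowed to use role {role!r}")
    # Real Vault would call AWS STS here; these values are placeholders.
    return {"access_key": "EXAMPLE", "secret_key": "EXAMPLE", "session_token": "EXAMPLE"}


token = login(["IT"])
print(sorted(request_credentials(token, "s3-readonly")))
```

The point of the sketch is that the token, not the user's password, is what gets checked when credentials are requested.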

Figure 2. Vault High Level Architecture

Let's Dive Deeper

Setup Configuration

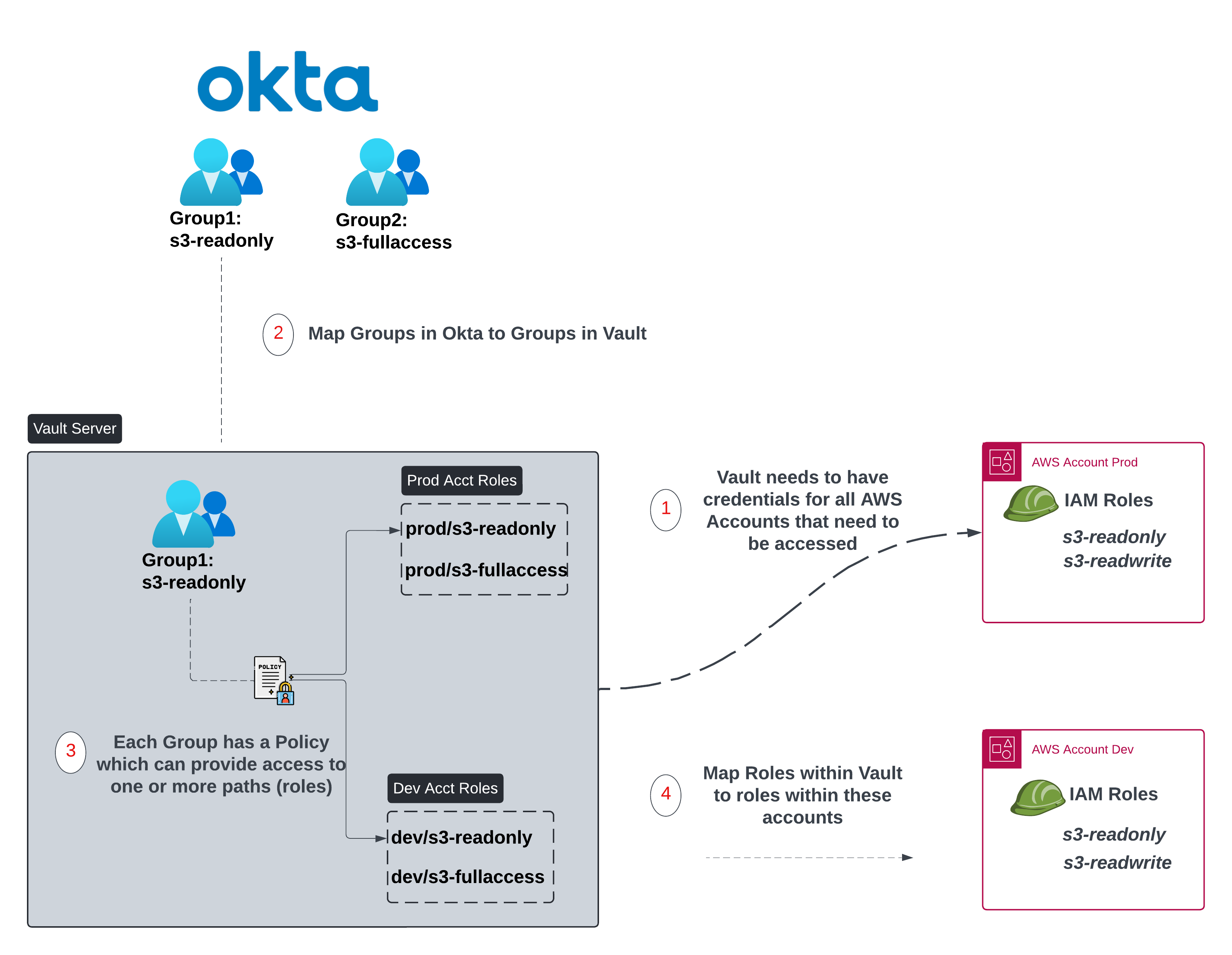

The objective of this section is to explain the setup from a configuration & object standpoint. In this section we are assuming the following:

Prerequisites:

There are two AWS Accounts Prod & Dev that users need access to.

For simplicity, both the Prod and Dev accounts contain two IAM roles: S3:readonly provides read-only access to S3 storage, and S3:readwrite allows full S3 access. This configuration is done entirely within AWS. Enterprise setups would have far more accounts and roles, but the same logic and object relationships continue to hold.

These are the configuration steps:

First and foremost, Vault needs access to the AWS accounts to be able to request dynamic credentials from within them. This can be done via credentials (access key, secret key) or via an instance profile if the Vault server itself runs within AWS. The IAM permissions used by Vault are documented here.

Customers will generally have an Identity Provider (IdP) which in our case is Okta. Within Okta, most customers would group users depending on the role or function within the organization. It is important that the groups within Okta that would require Vault interaction be mapped to groups within Vault.

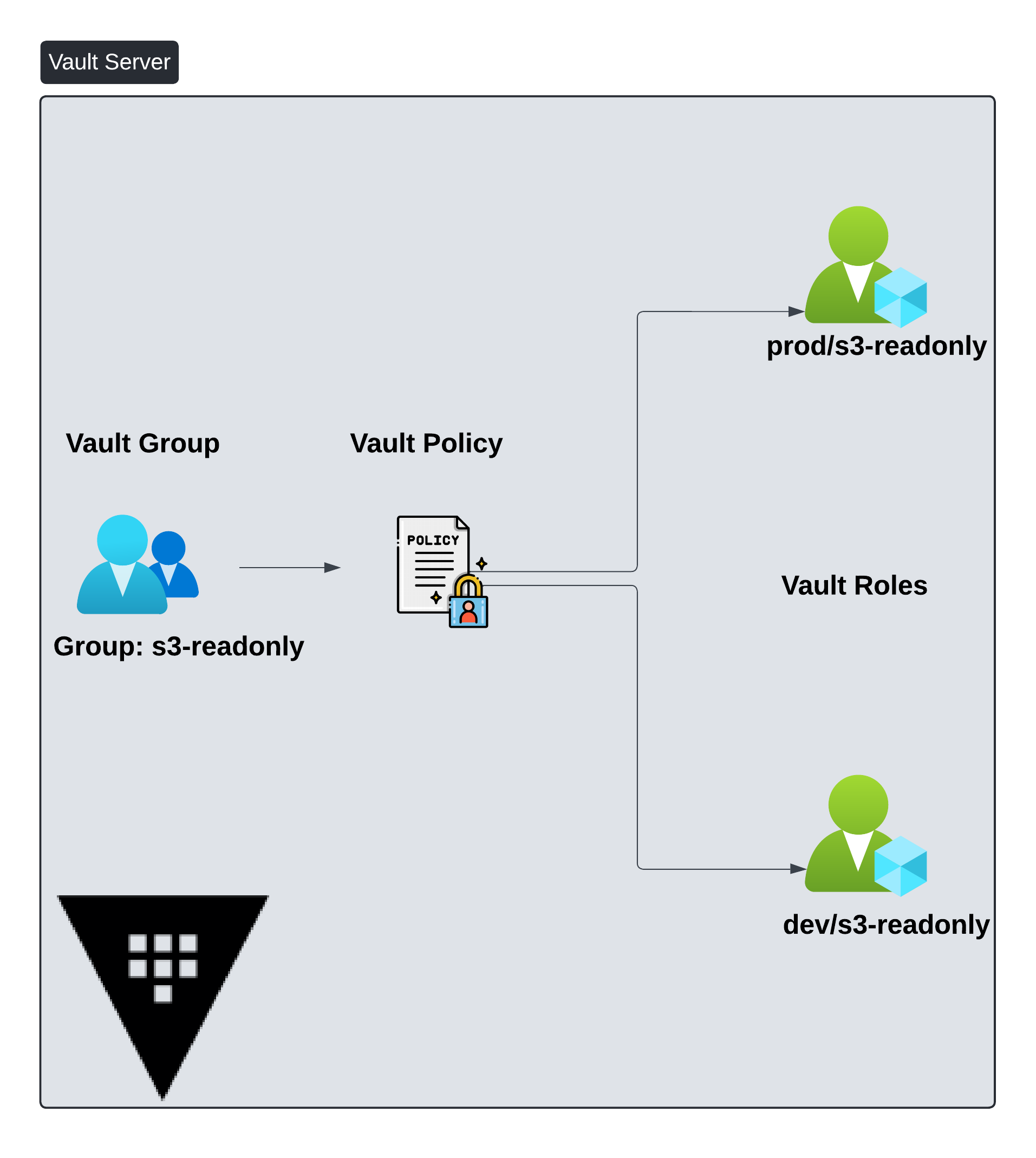

Each group within Vault (a 1:1 mapping of an Okta/IdP group) has an associated Vault policy. This policy allows, for instance, a particular group to access particular paths (yes, everything in Vault is a path) and eventually use particular Vault roles. Our policy in Figure 3 allows access to the prod/s3-readonly and dev/s3-readonly roles.

The Vault roles are indeed a 1:1 mapping of the roles within the different AWS accounts.

Figure 3. Setup Configuration

Object Relationships

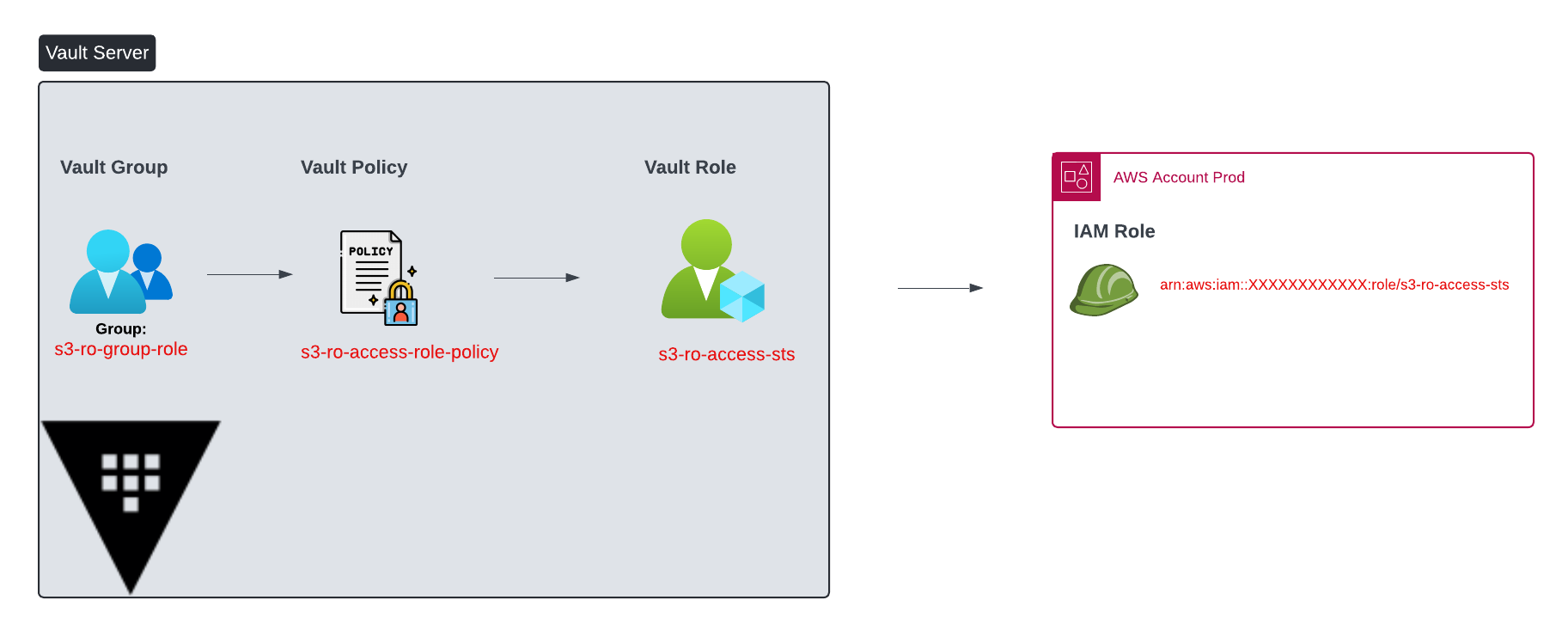

Figure 4 tries to simplify the object relationships.

A Vault Group needs to have an associated Vault Policy. Recall that in our setup configuration, these Vault groups within the Vault Server are a one to one mapping of the external IdP groups (Okta). The vault policy can in turn provide access to one or more paths (roles) where these roles are again a one to one mapping of the roles configured within AWS.

Figure 4. Object Relationships
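The chain of relationships in Figure 4 can be written down as three lookups. This is a sketch with illustrative data only: the policy name `s3-readonly-policy` and the placeholder ARNs are assumptions, not values from the lab.

```python
# Illustrative data: names mirror the article's example, ARNs are placeholders.
GROUP_TO_POLICY = {"s3-readonly": "s3-readonly-policy"}
POLICY_TO_ROLES = {"s3-readonly-policy": ["prod/s3-readonly", "dev/s3-readonly"]}
ROLE_TO_IAM_ARN = {
    "prod/s3-readonly": "arn:aws:iam::<prod-account-id>:role/s3-readonly",
    "dev/s3-readonly": "arn:aws:iam::<dev-account-id>:role/s3-readonly",
}


def iam_roles_for_group(group: str) -> list[str]:
    """Follow group -> policy -> Vault roles -> IAM role ARNs."""
    policy = GROUP_TO_POLICY.get(group)
    if policy is None:
        return []
    return [ROLE_TO_IAM_ARN[role] for role in POLICY_TO_ROLES.get(policy, [])]


for arn in iam_roles_for_group("s3-readonly"):
    print(arn)
```

A group with no mapped policy resolves to no IAM roles at all, which matches the deny-by-default behavior you would want from such a setup.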

Putting it All Together

In this section, we walk through the flow end to end from the user's perspective, revisiting some of the concepts explained earlier.

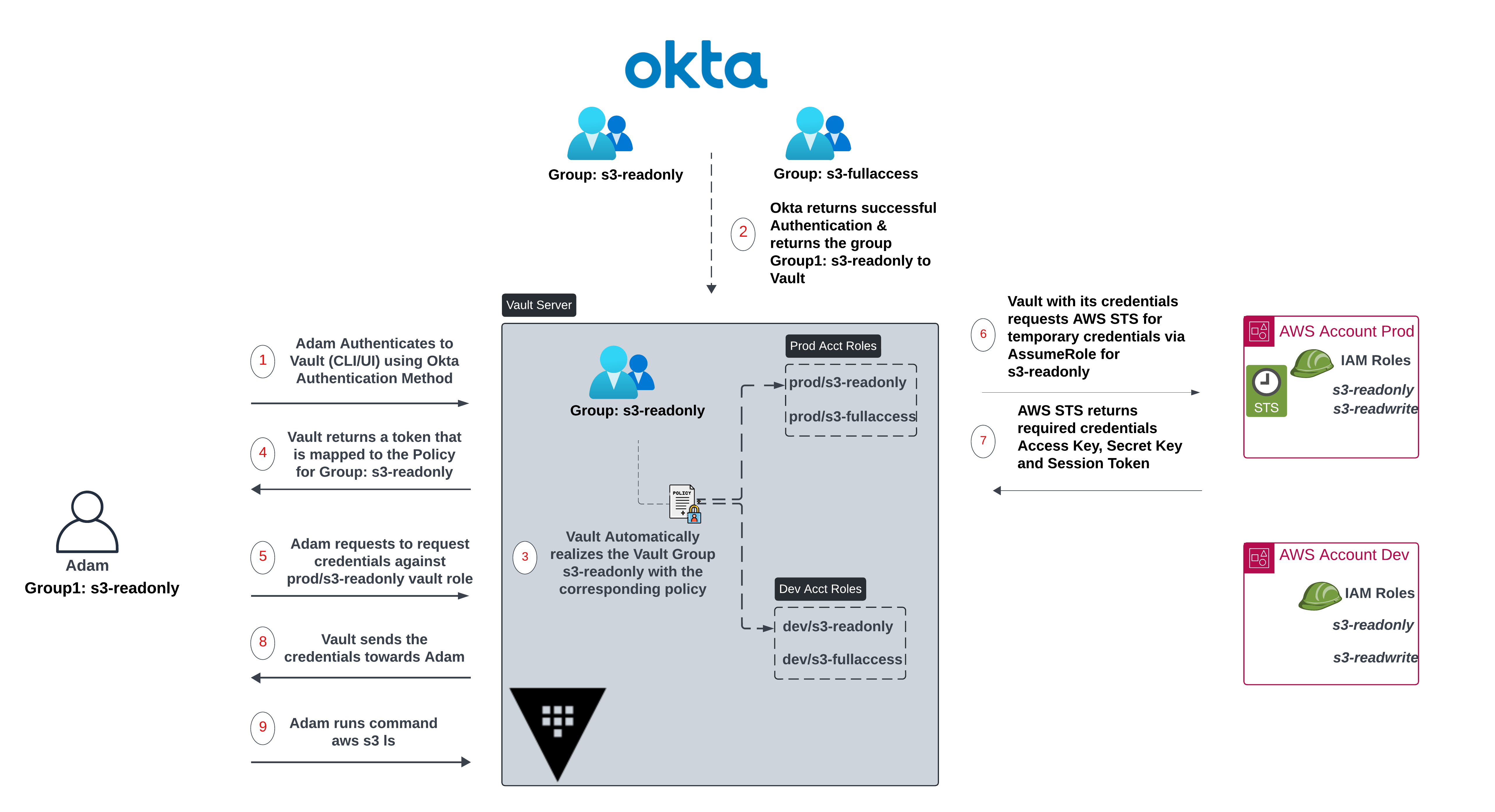

Adam is a user belonging to Group: s3-readonly in Okta.

Adam tries to authenticate to Vault by using the Okta authentication method supplying his credentials (CLI/UI). Okta authenticates Adam successfully and returns back the group name Group: s3-readonly.

As mentioned, the groups in the IdP that need Vault access should have corresponding Vault groups (Group: s3-readonly).

The Vault group s3-readonly is associated with a policy that provides access to multiple paths (roles), in our example prod/s3-readonly and dev/s3-readonly. Note that each of those roles within Vault has a corresponding IAM role in the Prod or Dev account.

Now that authentication is complete and Vault maps the user to a particular policy, Vault issues a token and provides it to Adam.

With the token and the correct permissions, Adam requests credentials against the Vault role prod/s3-readonly.

Since the vault policy allows Adam to access the Vault role prod/s3-readonly, Vault with its own credentials requests AWS STS for temporary credentials against the IAM role s3-readonly within the Prod account.

Vault will return the credentials to Adam consisting of Access Key, Secret Key and Session token.

Adam can now issue the command "aws s3 ls" to list the buckets within S3 with the received credentials.

Figure 5. Putting it All Together

Lab Setup

This is the lab setup we will use for demonstration. For Vault to be able to communicate with the AWS Account Prod, I associated the Vault EC2 instance with an IAM role via an Instance Profile.

Figure 6. Lab Setup

The code snippet below creates the group "s3-ro-group-role" under the Okta auth method in Vault, named after the corresponding Okta group, and maps it to the Vault policy "s3-ro-access-role-policy".

# Creating the group within Vault and mapping it to a policy
vault write auth/okta/groups/s3-ro-group-role \
    policies="s3-ro-access-role-policy"

# Creating the policy s3-ro-access-role-policy
vault policy write s3-ro-access-role-policy s3-ro-access-policy.hcl

Figure 7. Creating Auth Group within Vault & mapping it to a Vault Policy

The below figure shows the details of the Vault policy.

#Contents of the Policy

[ec2-user@ip-10-0-5-142 ~]$ vault policy read s3-ro-access-role-policy

#The below path allows access to request credentials (read capability in

#Vault) for the role s3-ro-access-sts. Note the path has creds

path "aws/creds/s3-ro-access-sts" {

capabilities = ["read"]

}

#The below path allows access to read the configuration of the role s3-ro-access-sts

path "aws/roles/s3-ro-access-sts" {

capabilities = ["read"]

}

# If you want to allow users to look up the leases issued for the same role

# (for example, to check the remaining TTL of issued credentials)

path "sys/leases/lookup/aws/creds/s3-ro-access-sts" {

capabilities = ["read"]

}

#Ability to revoke the lease

path "sys/leases/revoke/aws/creds/s3-ro-access-sts" {

capabilities = ["update"]

}

#Ability to list all available roles, note this doesn't allow issuing credentials

# towards these roles

path "aws/roles/*" {

capabilities = ["list"]

}

Figure 8. Vault Policy s3-ro-access-role-policy
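To illustrate how such a policy is evaluated, here is a deliberately simplified Python sketch. The paths and capabilities are copied from the policy in Figure 8, but the `is_allowed` function is an assumption for illustration: it handles only exact paths and a trailing `*`, and leaves out the other matching rules real Vault supports.

```python
# Paths and capabilities copied from the policy in Figure 8.
POLICY = {
    "aws/creds/s3-ro-access-sts": {"read"},
    "aws/roles/s3-ro-access-sts": {"read"},
    "sys/leases/lookup/aws/creds/s3-ro-access-sts": {"read"},
    "sys/leases/revoke/aws/creds/s3-ro-access-sts": {"update"},
    "aws/roles/*": {"list"},
}


def is_allowed(policy: dict, path: str, capability: str) -> bool:
    """Simplified check: an exact path wins, else the longest trailing-* prefix."""
    if path in policy:
        return capability in policy[path]
    best = None
    for pattern in policy:
        if pattern.endswith("*") and path.startswith(pattern[:-1]):
            if best is None or len(pattern) > len(best):
                best = pattern
    # Anything not matched by the policy is denied.
    return best is not None and capability in policy[best]


print(is_allowed(POLICY, "aws/creds/s3-ro-access-sts", "read"))  # True
print(is_allowed(POLICY, "aws/creds/s3-rw-access-sts", "read"))  # False
print(is_allowed(POLICY, "aws/roles/", "list"))                  # True
```

The second call shows the important property: being able to list roles does not grant the ability to request credentials for a role the policy does not name.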

The code snippet in Figure 9 shows the mapping between the Vault role "s3-ro-access-sts" and the IAM role of the same name. Note that the default TTL (default_sts_ttl) is set to 15 minutes and the maximum TTL (max_sts_ttl) to 24 hours. This means that credentials generated against this role live for 15 minutes unless a different TTL is requested, and max_sts_ttl caps the TTL a request can ask for. The lease in Figure 10 is reported as not renewable (lease_renewable false), so in this lab a client whose credentials have expired requests a fresh set rather than renewing the old one.

# Finally, this is the mapping between the Vault role s3-ro-access-sts and
# the IAM role ARN. Account number details are hidden.
# default_sts_ttl=15m means credentials live for 15 minutes by default
# max_sts_ttl=24h caps the TTL that can be requested for these credentials
vault write aws/roles/s3-ro-access-sts \
    role_arns="arn:aws:iam::XXXXXXXXXXXX:role/s3-ro-access-sts" \
    credential_type=assumed_role \
    default_sts_ttl=15m \
    max_sts_ttl=24h

#reading the configuration of the role with the same details

[ec2-user@ip-10-0-5-142 ~]$ vault read aws/roles/s3-ro-access-sts

Key Value

--- -----

credential_type assumed_role

default_sts_ttl 15m

external_id n/a

iam_groups <nil>

iam_tags <nil>

max_sts_ttl 24h

mfa_serial_number n/a

permissions_boundary_arn n/a

policy_arns <nil>

policy_document n/a

role_arns [arn:aws:iam::871253670082:role/s3-ro-access-sts]

session_tags <nil>

user_path n/a

Figure 9. Mapping Vault Roles to IAM Roles in AWS
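One way to read the two TTL settings on the role is as a default plus a cap. The sketch below is a hedged illustration of that reading, not Vault's implementation: `parse_duration` and `effective_ttl` are hypothetical helpers, only single-unit durations such as `15m` are handled, and any session limits enforced on the AWS side are ignored.

```python
import re
from datetime import timedelta
from typing import Optional

_UNITS = {"s": "seconds", "m": "minutes", "h": "hours"}


def parse_duration(text: str) -> timedelta:
    """Parse simple durations such as '15m' or '24h' (single unit only)."""
    match = re.fullmatch(r"(\d+)([smh])", text)
    if match is None:
        raise ValueError(f"unsupported duration: {text!r}")
    value, unit = match.groups()
    return timedelta(**{_UNITS[unit]: int(value)})


def effective_ttl(requested: Optional[str], default: str = "15m", maximum: str = "24h") -> timedelta:
    """Use the default when no TTL is requested; never exceed the maximum."""
    ttl = parse_duration(requested) if requested else parse_duration(default)
    return min(ttl, parse_duration(maximum))


print(effective_ttl(None))   # 0:15:00
print(effective_ttl("1h"))   # 1:00:00
print(effective_ttl("48h"))  # 1 day, 0:00:00
```

With the values from Figure 9, a request that does not specify a TTL gets 15 minutes, and a request for more than 24 hours is capped at 24 hours.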

Now let's try to have a client authenticate.

# Log in with user credentials and receive the Vault token.
# At this stage you have only logged in to Vault successfully and hold a token
# with an associated policy (s3-ro-access-role-policy)
vault login -method=okta \
    username="david@gmail.com" \
    password="XXXXXXXXXX"

Success! You are now authenticated. The token information displayed below

is already stored in the token helper. You do NOT need to run "vault login"

again. Future Vault requests will automatically use this token.

Key Value

--- -----

token hvs.CAESIMniUaK0hhXKCGQaCQMkIR-P8K4UkKdQDAybYTWPQRLKGh4KHGh2cy5CcHRid01ETFhENmk5bWlCZGFoUk43ZTE

token_accessor jEUNtHfL7PGTFxIAOzxyJgNy

token_duration 768h

token_renewable true

token_policies ["default" "s3-ro-access-role-policy"]

identity_policies []

policies ["default" "s3-ro-access-role-policy"] #policy associated with the vault token

token_meta_policies s3-ro-access-role-policy

token_meta_username david@gmail.com

#Now david our user has requested credentials against the role s3-ro-access-sts

# Since the Vault policy allows it the request is successful

#david receives short-lived credentials (access key, secret key and session_token)

#note that there is no need for me to remove the credentials from the output because

#their lifetime is 15 min

vault read aws/creds/s3-ro-access-sts

Key Value

--- -----

lease_id aws/creds/s3-ro-access-sts/sfB1tR8zvGSLqlxyxS6UATFx

lease_duration 15m

lease_renewable false

access_key ASIA4VWWD7DBJJFME3HO

arn arn:aws:sts::871253670082:assumed-role/s3-ro-access-sts/vault-root-s3-ro-access-sts-1723777259-eAMrdCkR95w7Bv38YBbc

secret_key ch3xhCWqtz5rO2S5nnuj505Rjajy0WYxg9kDZrGq

security_token FwoGZXIvYXdzEPz//////////wEaDH3IMV5gEXgYudFS9iLfAfytgc1Ajloy83I+nyqFP92v++K60uGInLXQsWtztYEyCdRtNsGXfaumjU/Fuea2ZB6x3mLq7u/D8T5WQqU4/AHmecy0Gl2lGdsP6EFivLCVejJDVbgprDEVTZzDsBwIPpXsoMDmB9+9bxAxsVTEtifpqnrcG5WkuSne2OfrYEu6AwvDxp7HdhpgqXgabsxfT7zfO3WFyzEFutmM3N8yjyc001kROOxFxJojzDBAvo7PejN5tf2wjlWXdi8ZnxqjhSGaIcAn1rVCjpWM3DGn7wTUhzW97GMT2eaUsiBrw1so64H7tQYyLXiVIc3xZ3j1HbueByMKVUqDkuy885fM6CeIuVfW+KcLjsPahfngTb8O52Wbfg==

session_token FwoGZXIvYXdzEPz//////////wEaDH3IMV5gEXgYudFS9iLfAfytgc1Ajloy83I+nyqFP92v++K60uGInLXQsWtztYEyCdRtNsGXfaumjU/Fuea2ZB6x3mLq7u/D8T5WQqU4/AHmecy0Gl2lGdsP6EFivLCVejJDVbgprDEVTZzDsBwIPpXsoMDmB9+9bxAxsVTEtifpqnrcG5WkuSne2OfrYEu6AwvDxp7HdhpgqXgabsxfT7zfO3WFyzEFutmM3N8yjyc001kROOxFxJojzDBAvo7PejN5tf2wjlWXdi8ZnxqjhSGaIcAn1rVCjpWM3DGn7wTUhzW97GMT2eaUsiBrw1so64H7tQYyLXiVIc3xZ3j1HbueByMKVUqDkuy885fM6CeIuVfW+KcLjsPahfngTb8O52Wbfg==

ttl 14m59s

Figure 10. Client authenticates to Vault, receives token & requests AWS credentials

#Prior to getting credentials failing to list the buckets in S3

% aws s3 ls

An error occurred (InvalidAccessKeyId) when calling the ListBuckets operation: The AWS Access Key Id you provided does not exist in our records.

#Inputting the credentials

% export AWS_ACCESS_KEY_ID="ASIA4VWWD7DBJJFME3HO"

export AWS_SECRET_ACCESS_KEY="ch3xhCWqtz5rO2S5nnuj505Rjajy0WYxg9kDZrGq"

export AWS_SESSION_TOKEN="FwoGZXIvYXdzEPz//////////wEaDH3IMV5gEXgYudFS9iLfAfytgc1Ajloy83I+nyqFP92v++K60uGInLXQsWtztYEyCdRtNsGXfaumjU/Fuea2ZB6x3mLq7u/D8T5WQqU4/AHmecy0Gl2lGdsP6EFivLCVejJDVbgprDEVTZzDsBwIPpXsoMDmB9+9bxAxsVTEtifpqnrcG5WkuSne2OfrYEu6AwvDxp7HdhpgqXgabsxfT7zfO3WFyzEFutmM3N8yjyc001kROOxFxJojzDBAvo7PejN5tf2wjlWXdi8ZnxqjhSGaIcAn1rVCjpWM3DGn7wTUhzW97GMT2eaUsiBrw1so64H7tQYyLXiVIc3xZ3j1HbueByMKVUqDkuy885fM6CeIuVfW+KcLjsPahfngTb8O52Wbfg=="

#Succeeded in listing the buckets in S3

% aws s3 ls

2023-11-01 13:57:10 kops-configuration-test

Figure 11. Checking Credentials
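Copying three long values by hand is error-prone, so the export step can be scripted. The sketch below assumes the credentials were fetched with `vault read -format=json`, whose output carries the fields under a `data` key; `export_lines` is a hypothetical helper, and the sample values are placeholders rather than real credentials.

```python
import json
import shlex


def export_lines(vault_json: str) -> list[str]:
    """Build shell export statements from `vault read -format=json` output."""
    data = json.loads(vault_json)["data"]
    # The output in Figure 10 shows both session_token and security_token;
    # prefer the former and fall back to the latter.
    token = data.get("session_token") or data.get("security_token")
    pairs = {
        "AWS_ACCESS_KEY_ID": data["access_key"],
        "AWS_SECRET_ACCESS_KEY": data["secret_key"],
        "AWS_SESSION_TOKEN": token,
    }
    # shlex.quote protects values containing characters such as '/' or '+'.
    return [f"export {name}={shlex.quote(value)}" for name, value in pairs.items()]


sample = json.dumps({
    "data": {
        "access_key": "AKIDEXAMPLE",
        "secret_key": "SECRETEXAMPLE",
        "session_token": "TOKENEXAMPLE",
    }
})
for line in export_lines(sample):
    print(line)
```

The printed lines can be pasted into a shell or passed to `eval`, after which `aws s3 ls` picks the credentials up from the environment as shown in Figure 11.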

# Here you can see the leases (credentials requested via Vault)

# Notice we only requested credentials for the role s3-ro-access-sts

[ec2-user@ip-10-0-5-142 ~]$ vault list sys/leases/lookup/aws/creds/

Keys

----

s3-ro-access-sts/

#Here you can see the lease id sfB1tR8zvGSLqlxyxS6UATFx

[ec2-user@ip-10-0-5-142 ~]$ vault list sys/leases/lookup/aws/creds/s3-ro-access-sts/

Keys

----

sfB1tR8zvGSLqlxyxS6UATFx

#Here you can see details of the lease. Just want to show the ttl 6m57s

#this is the time after which these credentials will expire

[ec2-user@ip-10-0-5-142 ~]$ vault lease lookup aws/creds/s3-ro-access-sts/sfB1tR8zvGSLqlxyxS6UATFx

Key Value

--- -----

expire_time 2024-08-16T03:15:59.000032318Z

id aws/creds/s3-ro-access-sts/sfB1tR8zvGSLqlxyxS6UATFx

issue_time 2024-08-16T03:00:59.177372978Z

last_renewal <nil>

renewable false

ttl 6m57s

#Another run of the same command now showing the ttl is now 28s

[ec2-user@ip-10-0-5-142 ~]$ vault lease lookup aws/creds/s3-ro-access-sts/sfB1tR8zvGSLqlxyxS6UATFx

Key Value

--- -----

expire_time 2024-08-16T03:15:59.000032318Z

id aws/creds/s3-ro-access-sts/sfB1tR8zvGSLqlxyxS6UATFx

issue_time 2024-08-16T03:00:59.177372978Z

last_renewal <nil>

renewable false

ttl 28s

#We can no longer find the lease (ttl is expired)

[ec2-user@ip-10-0-5-142 ~]$ vault lease lookup aws/creds/s3-ro-access-sts/sfB1tR8zvGSLqlxyxS6UATFx

error looking up lease id aws/creds/s3-ro-access-sts/sfB1tR8zvGSLqlxyxS6UATFx: Error making API request.

URL: PUT http://127.0.0.1:8200/v1/sys/leases/lookup

Code: 400. Errors:

* invalid lease

#Trying again to list the buckets in S3 and getting an ExpiredToken error

% aws s3 ls

An error occurred (ExpiredToken) when calling the ListBuckets operation: The provided token has expired.

Figure 12. Details of the lease & TTL
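The lease lifetime can be checked directly from the issue_time and expire_time values in Figure 12. The timestamps carry nanosecond precision, which Python's `datetime` does not parse directly, so this sketch trims them to microseconds first; `parse_vault_time` is a helper written for this example.

```python
from datetime import datetime, timezone


def parse_vault_time(stamp: str) -> datetime:
    """Parse an RFC 3339 UTC timestamp with nanoseconds by trimming to microseconds."""
    body = stamp.rstrip("Z")
    date_part, _, fraction = body.partition(".")
    micros = (fraction + "000000")[:6]
    parsed = datetime.strptime(f"{date_part}.{micros}", "%Y-%m-%dT%H:%M:%S.%f")
    return parsed.replace(tzinfo=timezone.utc)


issued = parse_vault_time("2024-08-16T03:00:59.177372978Z")
expires = parse_vault_time("2024-08-16T03:15:59.000032318Z")
lifetime = expires - issued

print(int(lifetime.total_seconds()))  # 899 seconds, i.e. 14m59s
```

The result matches the ttl of 14m59s reported when the credentials were issued in Figure 10, just under the configured 15 minutes.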

Audit Logs

Audit logs are a necessity, especially for your critical assets and any attempts to access them.

This is how you can enable Audit Logs for Vault in a lab environment.

vault audit enable file file_path=/var/log/vault_audit.log

This is a sample log message showing the user david authenticating and receiving a token (the client_token field, redacted in this output) that is associated with the policy s3-ro-access-role-policy.

{

"auth": {

"client_token": "REDACTED",

"display_name": "okta-david@gmail.com",

"metadata": {

"policies": "s3-ro-access-role-policy",

"username": "david@gmail.com"

},

"policies": ["default", "s3-ro-access-role-policy"],

"token_ttl": 2764800,

"token_type": "service"

},

"request": {

"id": "REDACTED",

"mount_point": "auth/okta/",

"operation": "update",

"path": "auth/okta/login/david@gmail.com"

},

"response": {

"auth": {

"client_token": "REDACTED",

"display_name": "okta-david@gmail.com",

"metadata": {

"policies": "s3-ro-access-role-policy",

"username": "david@gmail.com"

},

"policies": ["default", "s3-ro-access-role-policy"],

"token_ttl": 2764800,

"token_type": "service"

},

"mount_point": "auth/okta/"

},

"type": "response"

}
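Since each audit entry is JSON, it is straightforward to pull out the fields that matter for a review. The sketch below works on a trimmed copy of the entry above (only the fields it uses are kept); the `summarize` function and its output format are assumptions chosen for illustration.

```python
import json

# Trimmed copy of the audit entry above; only the fields used here are kept.
entry = json.loads("""
{
  "type": "response",
  "auth": {
    "display_name": "okta-david@gmail.com",
    "policies": ["default", "s3-ro-access-role-policy"],
    "token_ttl": 2764800
  },
  "request": {"operation": "update", "path": "auth/okta/login/david@gmail.com"}
}
""")


def summarize(entry: dict) -> str:
    """Return who did what, with which policies, and the token TTL in hours."""
    auth = entry["auth"]
    hours = auth["token_ttl"] // 3600  # token_ttl is expressed in seconds
    policies = ",".join(auth["policies"])
    return f'{auth["display_name"]} {entry["request"]["path"]} policies={policies} ttl={hours}h'


print(summarize(entry))
```

The token_ttl of 2764800 seconds works out to 768 hours, which agrees with the token_duration of 768h shown at login in Figure 10.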

Written by

Karim El Jamali

Self-directed and driven technology professional with 15+ years of experience in designing & implementing IP networks. I had roles in Product Management, Solutions Engineering, Technical Account Management, and Technical Enablement.