Chapter 4 - Hands-on Image Inpainting with Segmentation Application

Hrishikesh Yadav


Overview

In computer vision and image processing, image inpainting is a technique for reconstructing damaged or missing parts of an image. It fills in gaps or removes unwanted elements while preserving visual coherence and realism. The technology is used in a wide range of industries, including autonomous driving, medical imaging, photo restoration, and photo editing. Using sophisticated algorithms and deep learning models, image inpainting generates plausible content for the affected regions by analyzing the surrounding context and taking structure, texture, and color into account.
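To make "analyzing the surrounding context" concrete, here is a minimal classical sketch. It is not a deep learning model and not the method used by this application: masked pixels are repeatedly replaced by the average of their four neighbours until the fill settles into a smooth interpolation of the hole's boundary (a simple diffusion, or harmonic, fill). The function name `diffusion_inpaint` and the fixed iteration count are illustrative assumptions.

```python
import numpy as np


def diffusion_inpaint(image: np.ndarray, mask: np.ndarray, iterations: int = 500) -> np.ndarray:
    """Fill masked pixels by repeatedly averaging their 4-neighbours.

    image: array of shape (H, W) or (H, W, C).
    mask:  array of shape (H, W); nonzero/True marks pixels to fill.
    """
    result = image.astype(np.float64).copy()
    if result.ndim == 2:
        result = result[..., None]  # treat grayscale as a single channel
    hole = mask.astype(bool)
    # Start the hole at the mean colour of the known pixels.
    result[hole] = result[~hole].mean(axis=0)
    for _ in range(iterations):
        # Edge padding so border pixels do not wrap around the image.
        padded = np.pad(result, ((1, 1), (1, 1), (0, 0)), mode="edge")
        neighbour_mean = (
            padded[:-2, 1:-1] + padded[2:, 1:-1] + padded[1:-1, :-2] + padded[1:-1, 2:]
        ) / 4.0
        # Only hole pixels are updated; known pixels act as fixed boundary values.
        result[hole] = neighbour_mean[hole]
    return result[..., 0] if image.ndim == 2 else result
```

A fill like this only propagates existing colour inward; it cannot invent texture or new objects, which is why generative models are used for larger or semantic edits.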

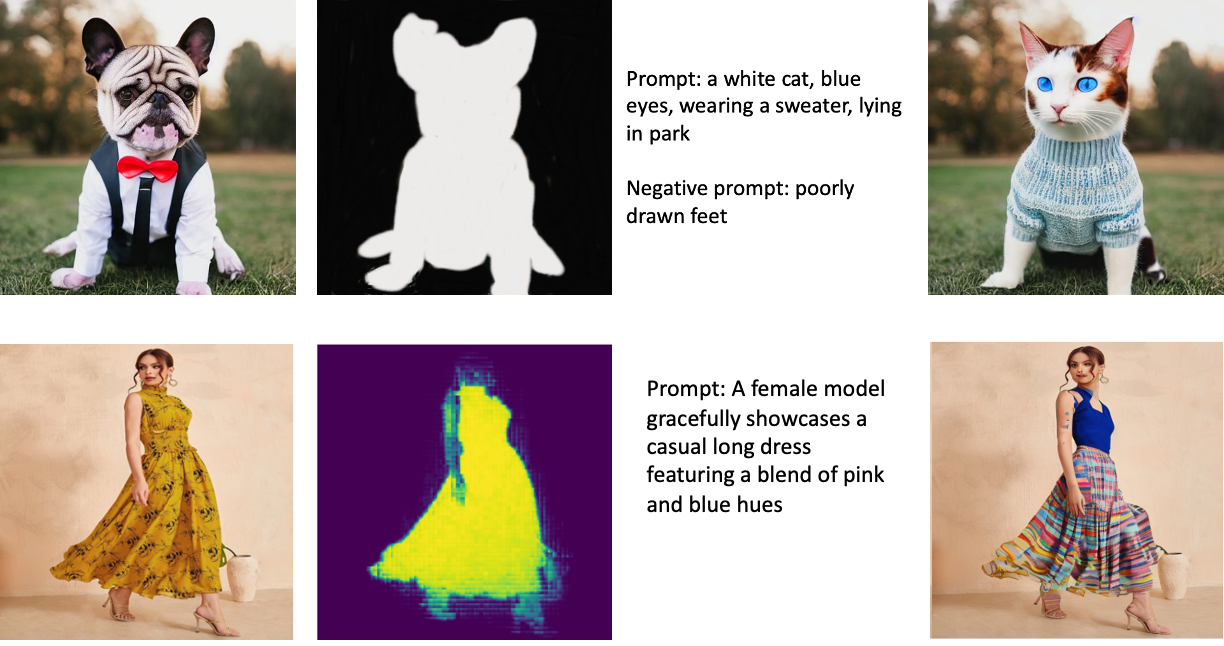

The process usually begins with segmentation, which determines the regions to be inpainted. The original image and the segmented mask are then fed into a generative AI model, often together with a text prompt that describes the intended result. The model, typically built on a deep learning architecture such as a transformer-based diffusion model, analyzes the context and generates new content guided by the prompt. Finally, the generated content is refined and blended seamlessly into the original image. This approach combines prompt-guided customization with flexible, context-aware inpainting that can handle complex scenes and produce realistic results.
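The final step, blending the generated content into the original picture, can be sketched as mask-based alpha compositing. This is a minimal NumPy sketch, not the project's code, and it assumes that `generated` has already been resized to the original's shape, that the mask uses 1 for regions to replace, and that `radius` is a hypothetical feathering parameter.

```python
import numpy as np


def feather_mask(mask: np.ndarray, radius: int = 2) -> np.ndarray:
    """Soften a 0/1 mask with `radius` passes of a 3x3 box blur."""
    alpha = mask.astype(np.float64)
    h, w = alpha.shape
    for _ in range(radius):
        padded = np.pad(alpha, 1, mode="edge")
        # Average each pixel with its 8 neighbours.
        alpha = sum(
            padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)
        ) / 9.0
    return alpha


def composite(original: np.ndarray, generated: np.ndarray, mask: np.ndarray,
              radius: int = 2) -> np.ndarray:
    """Blend `generated` into `original` where the (feathered) mask is set."""
    alpha = feather_mask(mask, radius)[..., None]  # (H, W, 1) broadcasts over RGB
    blended = alpha * generated.astype(np.float64) + (1.0 - alpha) * original.astype(np.float64)
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)
```

Feathering trades a sharp seam for a soft transition: a little of the original shows through just inside the mask edge, and a little generated content bleeds just outside it. With `radius=0` the result is a hard cut.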

The video below demonstrates the application built in this blog and explains the architecture used to build the backend flow.

What Are Image Segmentation and Masking?

Image segmentation divides an image into several segments or regions, each corresponding to a different object or area of the picture. By simplifying the image's representation, it makes the content easier to analyze and understand. Segmentation criteria can include semantic meaning, color, texture, intensity, and other attributes. The idea is to group pixels with similar characteristics so that objects of interest are isolated from one another or from the background. In the example below, the red color of the apple is the characteristic used to group its pixels; it defines the edge of the segmented region, which is then masked in white, encoded in binary as 1.
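The apple example can be approximated with a simple color rule. This sketch assumes an RGB `uint8` image, and its thresholds (`min_red`, `dominance`) are illustrative values rather than anything from the article: a pixel is treated as part of the object when its red channel is both bright and clearly stronger than green and blue, which yields the 0/1 mask described above.

```python
import numpy as np


def red_object_mask(image: np.ndarray, min_red: int = 120, dominance: float = 1.5) -> np.ndarray:
    """Return a uint8 mask: 1 where a pixel is 'red enough', 0 elsewhere.

    image: uint8 RGB array of shape (H, W, 3).
    A pixel counts as red if R >= min_red and R exceeds both G and B
    by the factor `dominance`.
    """
    rgb = image.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    is_red = (r >= min_red) & (r > dominance * g) & (r > dominance * b)
    return is_red.astype(np.uint8)
```

Multiplying the result by 255 gives a white-on-black mask image. Real photos usually need more robust cues (an HSV color range, texture, or a learned model such as FastSAM), because lighting and shadows shift raw RGB values.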

Mask creation, the process of constructing a binary or multi-class mask that matches the segmented parts of an image, is closely tied to image segmentation. In a binary mask, pixels belonging to the object of interest are usually assigned a value of 1 (white), and background pixels a value of 0 (black). For more complex segmentations, multi-class masks use distinct pixel values to indicate multiple object classes. These masks are useful for many image processing tasks, such as object identification, image manipulation, and most importantly, image inpainting. In inpainting, the mask indicates which sections must be filled in or recreated, directing the algorithm to focus on those regions while leaving the rest of the image intact.
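Turning a multi-class mask into the binary mask an inpainting model expects takes only a comparison. The sketch below assumes a label map in which each pixel holds an integer class ID (0 for background); `class_to_binary_mask` and `dilate` are hypothetical helper names. The optional dilation grows the mask by one pixel per iteration, a common practice so that the inpainted region also covers the object's soft edges.

```python
import numpy as np


def class_to_binary_mask(label_map: np.ndarray, class_id: int) -> np.ndarray:
    """Select one class from a multi-class label map as a 0/1 mask."""
    return (label_map == class_id).astype(np.uint8)


def dilate(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Grow a 0/1 mask by one pixel per iteration (3x3 square neighbourhood)."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="constant", constant_values=False)
        grown = np.zeros_like(out)
        # A pixel is set if any pixel in its 3x3 neighbourhood is set.
        for dy in range(3):
            for dx in range(3):
                grown |= padded[dy:dy + h, dx:dx + w]
        out = grown
    return out.astype(np.uint8)
```

For example, `dilate(class_to_binary_mask(label_map, 2), 1) * 255` produces a white-on-black mask image for class 2, slightly enlarged around its border.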

Image Segmentation Architecture

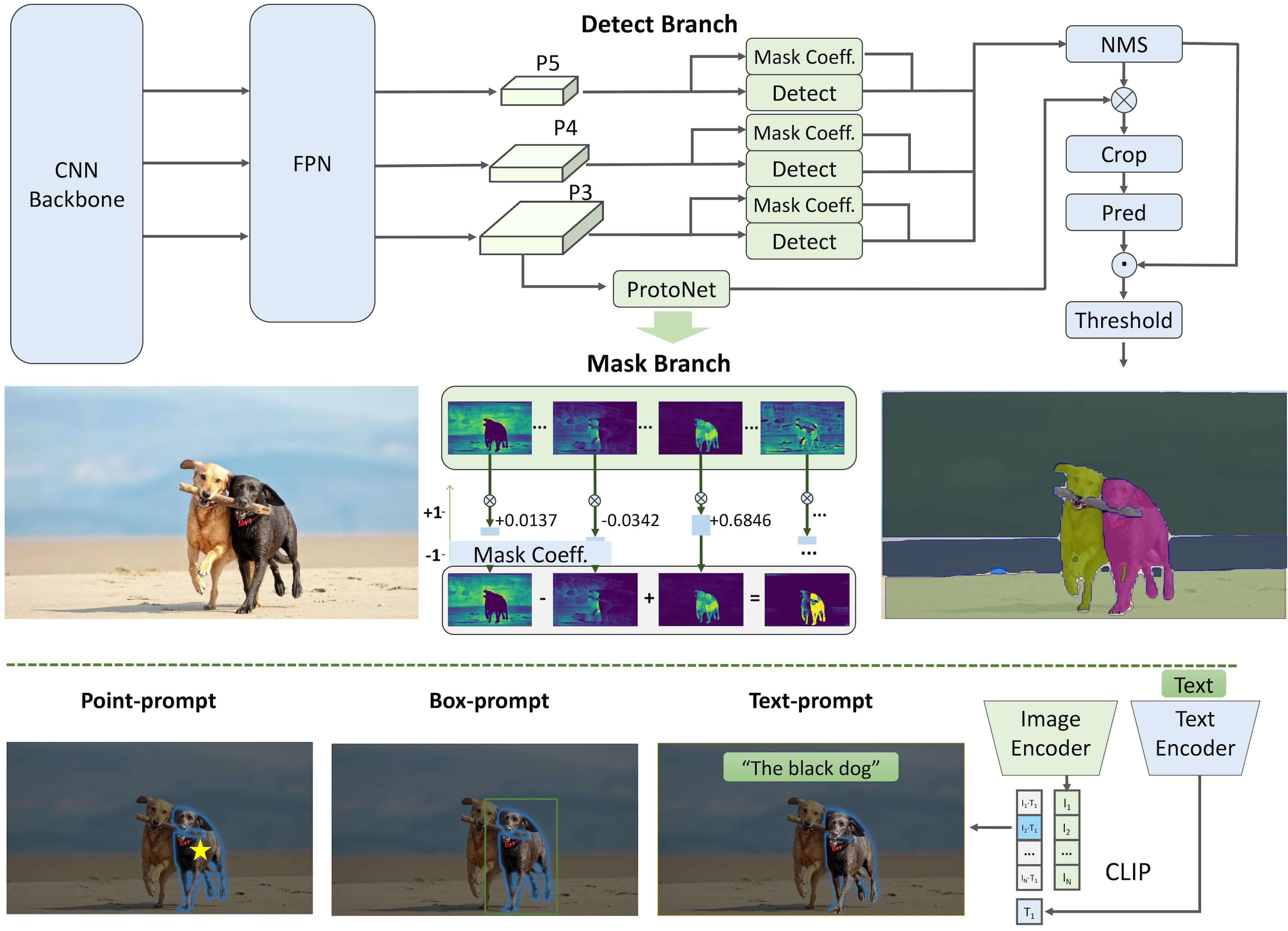

FastSAM is an innovative approach to image segmentation that addresses the computational cost of its predecessor, the Segment Anything Model (SAM). By splitting the segmentation process into two distinct phases, FastSAM achieves much greater efficiency while delivering performance comparable to SAM.

The first phase employs YOLOv8-seg, a convolutional neural network (CNN) architecture, to rapidly identify and segment all instances within an image. This step capitalizes on the speed and efficiency of CNNs, which are well-suited for processing visual data.

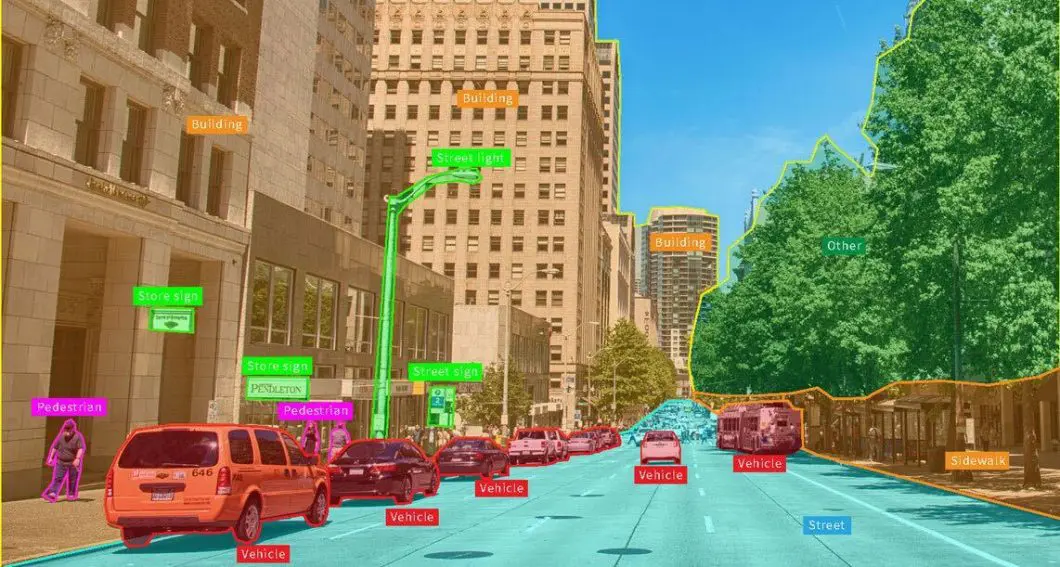

In the second phase, FastSAM uses prompt-guided selection (entity selection) to pick out the results that match specific user inputs or requirements. This enables targeted segmentation of regions of interest, making the system more flexible and user-friendly. To illustrate this process, consider a photograph of a busy city street -

All-instance segmentation - FastSAM would quickly identify and outline all distinct objects in the scene - pedestrians, cars, buildings, street signs, etc.

Prompt guided selection - If a user then specifies "show me all the vehicles," FastSAM would highlight only the car and bus segmentations from the first stage, ignoring other elements.

This two-step approach offers several advantages: speed, efficient resource utilization, and suitability for real-time applications.

By combining the strengths of CNNs and prompt-guided refinement, FastSAM strikes a balance between speed and accuracy, making it a valuable tool for a wide range of computer vision applications that demand quick, efficient, and adaptable image segmentation. We will use FastSAM to segment the entity from the image.
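The prompt-guided selection stage can be sketched independently of the network. Assuming the all-instance stage has already produced a list of boolean masks, the functions below show three ways of choosing among them: a point prompt (keep the smallest mask containing the clicked pixel), a box prompt (keep the mask with the highest IoU against the box), and a label filter for requests like "show me all the vehicles". The `Instance` class, its `label` field, and all function names are hypothetical. FastSAM's own prompts are more involved (for example, its text prompts are scored with CLIP rather than matched against class labels), so treat this as a simplified illustration, not FastSAM's implementation.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

import numpy as np


@dataclass
class Instance:
    mask: np.ndarray  # boolean (H, W) mask from the all-instance stage
    label: str        # hypothetical class name attached for illustration


def select_by_point(instances: List[Instance], x: int, y: int) -> Optional[Instance]:
    """Point prompt: the smallest mask containing pixel (x, y), or None."""
    hits = [inst for inst in instances if inst.mask[y, x]]
    return min(hits, key=lambda inst: int(inst.mask.sum()), default=None)


def select_by_box(instances: List[Instance], box: Tuple[int, int, int, int]) -> Instance:
    """Box prompt: the mask with the highest IoU against box = (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    box_mask = np.zeros(instances[0].mask.shape, dtype=bool)
    box_mask[y0:y1, x0:x1] = True

    def iou(mask: np.ndarray) -> float:
        union = np.logical_or(mask, box_mask).sum()
        return float(np.logical_and(mask, box_mask).sum() / union) if union else 0.0

    return max(instances, key=lambda inst: iou(inst.mask))


def select_by_labels(instances: List[Instance], wanted: Iterable[str]) -> np.ndarray:
    """Label filter: union of all masks whose label was requested."""
    wanted = set(wanted)
    union = np.zeros(instances[0].mask.shape, dtype=bool)
    for inst in instances:
        if inst.label in wanted:
            union |= inst.mask.astype(bool)
    return union
```

In a pipeline like the one described here, the selected mask is what gets passed on, together with the image and the prompt, to the inpainting model.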

What Is Generative AI Inpainting?

To truly revolutionize content creation and become indispensable tools, generative AI models need to evolve beyond basic generation toward capabilities such as:

- Adaptive editing and refinement: AI that can intelligently modify, update, and improve existing content while maintaining its core elements and intent.
- Style transfer and customization: systems that apply specific styles, tones, or brand guidelines to content, allowing consistent output across projects.
- Content fusion and remixing: AI that seamlessly combines elements from multiple sources to create novel content that builds on existing work.

For the selected entity, prompt-based generative inpainting takes three inputs: the original image, a binary or grayscale mask of the segmented entity, and a text prompt describing what to generate in its place. Various generative models from the GCP Model Garden, such as Stable Diffusion or Imagen, can be used for this step.
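Before calling any of these models, it helps to normalize the three inputs. The sketch below is provider-agnostic: `InpaintInputs` and `prepare_inpaint_inputs` are hypothetical names, and the convention that white (255) marks the area to regenerate is an assumption to check against the chosen model's documentation. It accepts either a 0/1 binary mask or a 0-255 grayscale mask and thresholds it into a clean black-and-white mask that matches the image size.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class InpaintInputs:
    image: np.ndarray  # uint8 RGB image, shape (H, W, 3)
    mask: np.ndarray   # uint8 mask, shape (H, W): 255 = regenerate, 0 = keep (assumed)
    prompt: str        # text describing what to generate in the masked area


def prepare_inpaint_inputs(image: np.ndarray, mask: np.ndarray, prompt: str,
                           threshold: float = 127.0) -> InpaintInputs:
    """Validate the image/mask/prompt triple and binarize the mask."""
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("image must have shape (H, W, 3)")
    mask = np.asarray(mask, dtype=np.float64)
    if mask.ndim == 3:
        mask = mask.mean(axis=2)  # collapse a 3-channel mask image to one channel
    if mask.shape != image.shape[:2]:
        raise ValueError("mask must have the same height and width as the image")
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if mask.max() <= 1.0:
        mask = mask * 255.0  # accept 0/1 binary masks as well as 0-255 grayscale masks
    binary = np.where(mask > threshold, 255, 0).astype(np.uint8)
    return InpaintInputs(image=image.astype(np.uint8), mask=binary, prompt=prompt.strip())
```

Thresholding a grayscale mask removes soft, partially selected pixels, so the model receives an unambiguous keep/regenerate boundary; raise or lower `threshold` to shrink or grow the regenerated area.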

The future of generative AI in content creation lies not in replacing human creativity, but in augmenting and enhancing it. By focusing on these advanced capabilities, AI developers can create tools that are truly transformative for content creators across industries. The most successful generative AI solutions will be those that offer flexibility, precision control, and seamless integration with existing content ecosystems.

Use Cases of the Application

Photo Editing Software -

- Remove unwanted objects or people from photos.
- Restore damaged photographs.

Content-Aware Fill Tools -

- Seamlessly fill in removed areas of an image.

Graphic Design Software -

- Repair or modify stock images.
- Create seamless textures and patterns.

Medical Imaging -

- Remove artifacts from MRI or CT scans.

Satellite Imagery -

- Fill in cloud-covered areas in aerial photographs.
- Reconstruct missing data in remote sensing images.

Fashion and E-commerce -

- Remove backgrounds or change product colors.

Architectural Visualization -

- Remove unwanted elements from building renderings.
- Add or modify architectural features.

Social Media Filters -

- Create seamless face filters or background replacements.

GitHub Repository for the Project -

https://github.com/Hrishikesh332/AI-Inpainting/blob/main/app.py

Research Papers

Fast Segment Anything - https://arxiv.org/abs/2306.12156

High Quality Entity Segmentation - https://arxiv.org/abs/2211.05776

Brush2Prompt: Contextual Prompt Generator for Object Inpainting - https://openaccess.thecvf.com/content/CVPR2024/papers/Chiu_Brush2Prompt_Contextual_Prompt_Generator_for_Object_Inpainting_CVPR_2024_paper.pdf

A Unified Prompt-Guided In-Context Inpainting Framework for Reference-based Image Manipulations - https://www.researchgate.net/publication/370937903_A_Unified_Prompt-Guided_In-Context_Inpainting_Framework_for_Reference-based_Image_Manipulations


Written by

Hrishikesh Yadav


With extensive experience in backend and AI development, I have a keen passion for research, having worked on 4+ research projects in the applied generative AI domain. I actively contribute to the community through GDSC talks, hackathon mentoring, and competitions aimed at solving problems in the machine learning and AI space. What truly drives me is developing solutions for real-world challenges. I'm a member of SuperTeam and am working on Retro Nexus (Crafting Stories with AI on Chain). Another major project, focused on applying technology to real-world problems, is Crime Dekho, an advanced analytics and automation platform tailored for police departments. I find immense fulfillment in working on impactful projects that make a tangible difference. Constantly seeking opportunities to learn and grow, I thrive on exploring new domains, methodologies, and emerging technologies. My goal is to combine my technical expertise with a problem-solving mindset to deliver innovative and effective solutions.