Upload files in NestJS & GraphQL

Nicanor Talks Web


The challenge with uploading files through GraphQL is that GraphQL has no built-in support for multipart requests, so a bit of configuration is needed. There might be a better approach out there; nevertheless, I'll show you my way, which gets things done.

Note

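Apollo Server 4 turns on stricter security defaults, such as CORS handling and CSRF prevention, and these affect multipart upload requests. You can choose an older Apollo version to get around these restrictions, or keep version 4 and send the headers it expects.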
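To make the CSRF point concrete, here is a simplified TypeScript model of the check, based on my reading of Apollo's documentation. It is an illustration written for this article, not Apollo's source code, and `passesCsrfCheck` is a hypothetical name. Browsers send uploads as `multipart/form-data`, which is one of the "simple" content types that skip the CORS preflight. Apollo Server 4 therefore rejects such a request unless a preflight-forcing header is present.

```typescript
// Simplified model (not Apollo's implementation) of Apollo Server 4's
// default CSRF prevention rule.

// Content types a browser may send without a CORS preflight request
const SIMPLE_CONTENT_TYPES = new Set([
  'text/plain',
  'application/x-www-form-urlencoded',
  'multipart/form-data',
])

// Headers Apollo Server 4 accepts by default as proof of a preflight
const PREFLIGHT_HEADERS = ['x-apollo-operation-name', 'apollo-require-preflight']

export function passesCsrfCheck(headers: Record<string, string | undefined>): boolean {
  // Header names are case-insensitive, so normalise them first
  const lower: Record<string, string | undefined> = {}
  for (const [name, value] of Object.entries(headers)) {
    lower[name.toLowerCase()] = value
  }

  const contentType = lower['content-type']
  if (contentType !== undefined) {
    // Drop parameters such as "; boundary=..." before comparing
    const mediaType = contentType.split(';')[0].trim().toLowerCase()
    if (!SIMPLE_CONTENT_TYPES.has(mediaType)) {
      return true // e.g. application/json always triggers a preflight
    }
  }

  // Simple or missing content type: a non-empty preflight header is required
  return PREFLIGHT_HEADERS.some((h) => (lower[h] ?? '') !== '')
}
```

In practice, a browser client uploading files to an Apollo Server 4 backend would typically add a non-empty `Apollo-Require-Preflight` header to its requests.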

You should have a basic NestJS project set up. If you don't, you can follow along with NestJS 101.

Follow along with the GitHub repo. Note that this article focuses on the NestJS GraphQL part only; the repo contains other parts as well.

Getting Started

Set up a basic NestJS project. You can skip this part if you have already set one up.

In your terminal:

```bash
npm install -g @nestjs/cli
nest new file-uploads
```

You should have a folder structure like this:

```
project-name/
├── src/
│   ├── app.controller.ts
│   ├── app.controller.spec.ts
│   ├── app.module.ts
│   ├── app.service.ts
│   └── main.ts
├── test/
├── node_modules/
├── package.json
├── tsconfig.json
└── nest-cli.json
```

Now let's configure GraphQL with Apollo Server (Apollo helps with data fetching and management) and do a quick setup for file uploads. We need the graphql-upload package to handle file uploads in our app. It also has to be registered as middleware so that multipart requests sent to the GraphQL endpoint are parsed before they reach the resolvers.
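For context, graphql-upload implements the GraphQL multipart request specification. The client sends a `multipart/form-data` body with three parts. First comes an `operations` field, which holds the GraphQL request as JSON with each file variable set to `null`. Next comes a `map` field, which says which form field fills which variable. Finally come the files themselves. The middleware parses that body and hands your resolver a `FileUpload` object. The sketch below only builds the two JSON fields for a single-file mutation. `buildSingleUploadFields` is a hypothetical helper written for this article, not a graphql-upload API.

```typescript
// Sketch of the two JSON text fields defined by the GraphQL multipart
// request spec for a mutation that takes one file.

export interface MultipartFields {
  operations: string // the GraphQL request as JSON, file variable set to null
  map: string // JSON mapping form-field names to variable paths
}

export function buildSingleUploadFields(query: string, variableName: string): MultipartFields {
  const operations = {
    query,
    // Files can't be embedded in JSON, so the variable holds a null placeholder
    variables: { [variableName]: null },
  }
  // The form field named "0" (the file itself) fills variables.<variableName>
  const map = { '0': [`variables.${variableName}`] }
  return { operations: JSON.stringify(operations), map: JSON.stringify(map) }
}
```

The client then appends `operations`, `map`, and finally the file under the field name `0`. The spec requires the file fields to come after `operations` and `map`.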

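Install the following dependencies. The GraphQL, config, AWS SDK, and Cloudinary packages are also needed, because the code in this article imports them:

```bash
yarn add @nestjs/graphql @nestjs/apollo @nestjs/config graphql
yarn add @apollo/server graphql-tools graphql-upload@13.0.0 @types/graphql-upload@8.0.12
yarn add @aws-sdk/client-s3 @aws-sdk/lib-storage cloudinary
```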

In the main.ts file, register graphql-upload's Express middleware so the app can parse file uploads:

```ts
import { Logger } from '@nestjs/common'
import { NestFactory } from '@nestjs/core'
import { graphqlUploadExpress } from 'graphql-upload'
import { AppModule } from './app.module'

async function bootstrap() {
  const app = await NestFactory.create(AppModule)

  app.enableCors()

  // Parse multipart/form-data (file upload) requests before they reach GraphQL.
  // AppModule.configure() also registers graphqlUploadExpress; one registration is enough.
  app.use(graphqlUploadExpress())

  const globalPrefix = 'api'
  app.setGlobalPrefix(globalPrefix)

  const port = process.env.PORT || 4000
  await app.listen(port)

  Logger.log(`🚀 Application is running on: ${await app.getUrl()}/graphql`)
}

bootstrap()
```

In app.module.ts, set `uploads: false` and disable the default playground. The playground setup depends on the version of Apollo Server you've installed. I'm using version 4, so the code uses Apollo's local landing page plugin instead. Also, make AppModule implement NestModule and register the upload middleware in its configure() method:

```ts
import { MiddlewareConsumer, Module, NestModule } from '@nestjs/common'
import { ConfigModule } from '@nestjs/config'
import { GraphQLModule } from '@nestjs/graphql'
import { ApolloDriver, ApolloDriverConfig } from '@nestjs/apollo'
import { graphqlUploadExpress } from 'graphql-upload'
import { ApolloServerPluginLandingPageLocalDefault } from '@apollo/server/plugin/landingPage/default'
import { AppController } from './app.controller'
import { AppService } from './app.service'
// Generated by `nest g module filesUpload` (see below)
import { FilesUploadModule } from './files-upload/files-upload.module'

@Module({
  imports: [
    ConfigModule.forRoot({ isGlobal: true }),
    GraphQLModule.forRootAsync<ApolloDriverConfig>({
      imports: [ConfigModule],
      driver: ApolloDriver,
      useFactory: async () => ({
        playground: false, // replaced by the landing page plugin below
        uploads: false, // keep any built-in upload handling off; graphql-upload does the work
        plugins: [ApolloServerPluginLandingPageLocalDefault({ footer: false })],
        autoSchemaFile: true, // code-first: generate the schema in memory
      }),
    }),
    FilesUploadModule,
  ],
  controllers: [AppController],
  providers: [AppService],
  exports: [],
})
export class AppModule implements NestModule {
  configure(consumer: MiddlewareConsumer) {
    consumer
      // 10,000,000 bytes (10 MB) per file, at most 10 files per request
      .apply(graphqlUploadExpress({ maxFileSize: 10000000, maxFiles: 10 }))
      .forRoutes('graphql')
  }
}
```

Now that we are done with the configuration, the complex part is over. Let's get to the sweet stuff: file uploads.

Create a new filesUpload module, resolver, and service with the Nest CLI:

```bash
nest g module filesUpload
nest g resolver filesUpload
nest g service filesUpload
```

Once that's done, FilesUploadModule should be imported in app.module.ts (the CLI adds it for you). Now, let's create the necessary mutations. We'll upload files to AWS S3 and Cloudinary, and we'll also save files sent from the frontend to a folder on the backend. Rely on graphql-upload's `GraphQLUpload` scalar and `FileUpload` type for the file arguments.

I'm going to use streams to handle file uploads, especially large files. Each `FileUpload` exposes a `createReadStream()` function that returns a readable stream, so the file is read in chunks rather than loaded into memory all at once. Check out this article on readable streams.
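Here is a minimal sketch of chunked reading. It assumes Node.js, where readable streams are async iterable. The hypothetical `countBytes` helper walks a stream one chunk at a time and stops as soon as a size limit is crossed. graphql-upload already enforces `maxFileSize` for you, so this is not needed in the app. It only illustrates that memory use depends on the chunk size, not on the file size.

```typescript
// Consumes a stream chunk by chunk, counting bytes without buffering the
// whole file. Node.js readable streams (e.g. the one returned by
// file.createReadStream()) are async iterables of Buffers, which are Uint8Arrays.
export async function countBytes(
  chunks: AsyncIterable<Uint8Array>,
  maxBytes: number,
): Promise<number> {
  let total = 0
  for await (const chunk of chunks) {
    total += chunk.length
    // Stop early instead of reading the rest of an oversized upload
    if (total > maxBytes) {
      throw new Error(`File exceeds ${maxBytes} bytes`)
    }
  }
  return total
}
```

Inside a resolver you could pass `file.createReadStream()` as the first argument. Keep in mind that this consumes that stream.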

```ts
import { Args, Mutation, Query, Resolver } from '@nestjs/graphql'
import { FileUpload, GraphQLUpload } from 'graphql-upload'
import { FilesUploadService } from './files-upload.service'

@Resolver()
export class FilesUploadResolver {
  constructor(private readonly filesUploadService: FilesUploadService) {}

  // A schema needs at least one query; this one also works as a health check
  @Query(() => String)
  async Test() {
    return 'here we go'
  }

  // Each mutation takes one `file` argument of the Upload scalar
  @Mutation(() => Boolean)
  async AWSfileUpload(@Args({ name: 'file', type: () => GraphQLUpload }) file: FileUpload) {
    return this.filesUploadService.AWSfileUpload(file)
  }

  @Mutation(() => Boolean)
  async CloudinaryfileUpload(@Args({ name: 'file', type: () => GraphQLUpload }) file: FileUpload) {
    return this.filesUploadService.CloudinaryfileUpload(file)
  }

  @Mutation(() => Boolean)
  async LocalfileUpload(@Args({ name: 'file', type: () => GraphQLUpload }) file: FileUpload) {
    return this.filesUploadService.LocalfileUpload(file)
  }
}
```

Finally, let's create the service that sends each file to its destination. Make sure your AWS access key and secret key are available to the AWS SDK, and that your Cloudinary credentials are set (the service reads them from environment variables). Instead of three functions/mutations, you could also have a single mutation that takes a variable identifying the target platform and uses conditional statements to pick the upload method.
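Here is a minimal sketch of that single-mutation idea, kept free of NestJS so it runs on its own. `UploadTarget`, `Uploader`, and `pickUploader` are hypothetical names. A lookup table keyed by the target stands in for the if/else chain. In NestJS you would also expose the targets as a GraphQL enum, for example with `registerEnumType` from `@nestjs/graphql`.

```typescript
// Hypothetical upload destinations: a const object plus a union type
// behaves like a string enum.
export const UploadTarget = {
  AWS: 'AWS',
  CLOUDINARY: 'CLOUDINARY',
  LOCAL: 'LOCAL',
} as const
export type UploadTarget = (typeof UploadTarget)[keyof typeof UploadTarget]

// Any upload function that resolves to true on success and false on failure
export type Uploader<F> = (file: F) => Promise<boolean>

// Picks the uploader for a target. The Record type makes the compiler
// complain if a target is added without a matching uploader.
export function pickUploader<F>(
  target: UploadTarget,
  uploaders: Record<UploadTarget, Uploader<F>>,
): Uploader<F> {
  const uploader: Uploader<F> | undefined = uploaders[target]
  if (uploader === undefined) {
    // Only reachable if an unexpected string slips past GraphQL validation
    throw new Error(`Unknown upload target: ${target}`)
  }
  return uploader
}
```

In the resolver, the single mutation would then call `pickUploader(target, { AWS: (f) => this.filesUploadService.AWSfileUpload(f), ... })(file)`. Using arrow functions keeps `this` bound to the service.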

```ts
import { Injectable } from '@nestjs/common'
import { FileUpload } from 'graphql-upload'
import { S3Client } from '@aws-sdk/client-s3'
import { Upload } from '@aws-sdk/lib-storage'
import { v2 as cloudinary } from 'cloudinary'
import { join } from 'path'
import { createWriteStream } from 'fs'
import { mkdir } from 'fs/promises'

@Injectable()
export class FilesUploadService {
  private readonly s3Client: S3Client
  private AWSRegion: string

  constructor() {
    // AWS credentials come from the SDK's default provider chain,
    // e.g. the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables
    this.AWSRegion = 'eu-west-1'
    this.s3Client = new S3Client({ region: this.AWSRegion })

    cloudinary.config({
      cloud_name: process.env.CLOUDINARY_CLOUD_NAME,
      api_key: process.env.CLOUDINARY_API_KEY,
      api_secret: process.env.CLOUDINARY_API_SECRET,
    })
  }

  async AWSfileUpload(file: FileUpload): Promise<boolean> {
    try {
      // S3 object key: folder + timestamp + original filename
      const key = `fileLocationFolder/${Date.now()}-${file?.filename}`
      const { createReadStream, mimetype } = file

      // lib-storage's Upload sends the stream to S3 in parts,
      // so a large file is never held in memory all at once
      const upload = new Upload({
        client: this.s3Client,
        params: {
          Bucket: 'S3BucketName', // replace with your bucket name
          Key: key,
          Body: createReadStream(),
          ContentType: mimetype,
        },
      })

      await upload.done()
      return true
    } catch (error) {
      console.log('Error occurred on AWSfileUpload-files.service: ', (error as Error).message)
      return false // the mutation returns a non-null Boolean
    }
  }

  async CloudinaryfileUpload(file: FileUpload): Promise<boolean> {
    try {
      // Alternatives: cloudinary.uploader.upload or upload_large,
      // which take a file path rather than a stream
      const image = await new Promise<{ public_id: string; url: string } | undefined>(
        (resolve, reject) => {
          const uploadStream = cloudinary.uploader.upload_stream(
            { folder: 'file-series', tags: 'files-series' },
            (err, result) => (err ? reject(err) : resolve(result)),
          )
          // pipe() does not return a promise, so wait for Cloudinary's callback instead
          file.createReadStream().on('error', reject).pipe(uploadStream)
        },
      )

      console.log('* ', image?.public_id)
      console.log('* ', image?.url)
      return true
    } catch (error) {
      console.log('Error occurred on CloudinaryfileUpload-files.service: ', (error as Error).message)
      return false
    }
  }

  async LocalfileUpload(file: FileUpload): Promise<boolean> {
    try {
      // Save to an "uploads" folder in the project root, creating it if missing
      const uploadDir = join(process.cwd(), 'uploads')
      await mkdir(uploadDir, { recursive: true })
      const localFilePath = join(uploadDir, `${Date.now()}-${file?.filename}`)

      // `await` here so that stream errors land in the catch block below
      return await new Promise<boolean>((resolve, reject) => {
        const writeStream = createWriteStream(localFilePath)
        file
          .createReadStream()
          .on('error', reject)
          .pipe(writeStream)
          .on('finish', () => resolve(true)) // call resolve, don't just reference it
          .on('error', reject)
      })
    } catch (error) {
      console.log('Error occurred on LocalfileUpload-files.service: ', (error as Error).message)
      return false
    }
  }
}
```
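Both AWSfileUpload and LocalfileUpload build names from `file.filename`, which the client supplies. A filename with enough `../` segments can escape the uploads folder once it goes through `join()`. Here is one way to sanitize it, as a sketch. `safeFileName` and `buildStorageKey` are hypothetical helpers. The allow-list of characters is a conservative choice of mine, not a requirement of S3 or Cloudinary.

```typescript
// Reduces an untrusted client filename to a safe single path segment.
export function safeFileName(original: string | undefined): string {
  // Keep only the last path segment, splitting on "/" and "\"
  // ("../../etc/passwd" -> "passwd")
  const base = (original ?? '').split(/[\\/]/).pop() ?? ''
  // Replace anything outside a conservative allow-list, then drop leading dots
  const cleaned = base.replace(/[^A-Za-z0-9._-]/g, '_').replace(/^\.+/, '')
  // Fall back to a fixed name if nothing usable is left
  return cleaned.length > 0 ? cleaned : 'upload'
}

// Builds a key such as "fileLocationFolder/1700000000000-photo.png"
export function buildStorageKey(
  folder: string,
  original: string | undefined,
  now: number = Date.now(),
): string {
  return `${folder}/${now}-${safeFileName(original)}`
}
```

In AWSfileUpload the key could then be `buildStorageKey('fileLocationFolder', file.filename)`. In LocalfileUpload you would put `safeFileName(file.filename)` after the timestamp.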

The backend part is now complete and we can upload files. Start the backend:

```bash
npm run start
```

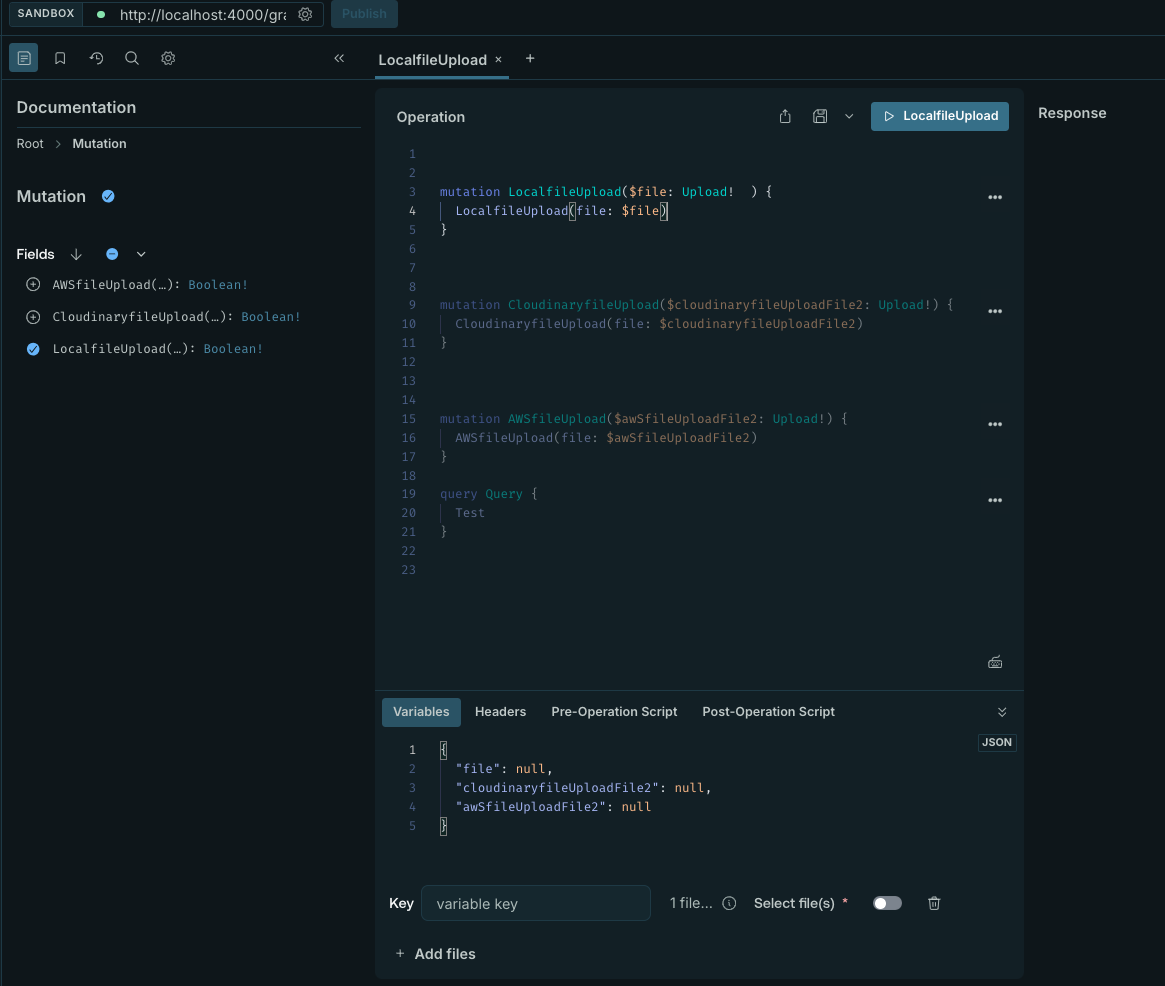

Your GraphQL playground should look something like this. Once you select a mutation, you can pick the file to upload in the Variables input.

We'll connect the frontend in the next part of this series, so stay tuned!
