Unlock Hidden Value: Leverage Transient & Temporary Tables in Snowflake

John Ryan

John Ryan

Having worked with over 50 Snowflake customers across Europe and the Middle East, I repeatedly find customers make the same mistakes. In this article, I've covered the surprising benefits of Snowflake TRANSIENT tables, and you, as a Data Engineer or Snowflake Administrator, can cut costs by upwards of $50,000 per year with very little effort.

I should imagine the end-of-year bonus negotiation will go a lot easier when you point out the savings you've made.

A Simple Test: How much Snowflake Storage are you using/wasting?

Execute the following SQL statement on your Snowflake account, and it will produce a simple table showing the storage breakdown by month and type.

select to_char(usage_date,'YYYYMM') as sort_month

, to_char(usage_date,'Mon-YYYY') as month

, trunc(avg(storage_bytes)) as storage

, trunc(avg(stage_bytes)) as stage

, trunc(avg(failsafe_bytes)) as failsafe

, round(avg(stage_bytes) / avg(storage_bytes) *100,1 ) as pct_stage

, round(avg(failsafe_bytes) / avg(storage_bytes) *100,1 ) as pct_failsafe

from snowflake.account_usage.storage_usage

group by month, sort_month

order by sort_month;

The above SQL statement lists the monthly storage costs broken by:

Data Storage: This includes your active storage and micro partitions held for Time Travel.

Stage Storage: Which represents the storage used to temporarily hold data files in an internal stage before being loaded into Snowflake tables.

Failsafe Storage: Holds data that is usually inaccessible and held for seven days before being deleted. This allows you to recover accidentally deleted data, but unlike Time Travel, which is immediately accessible, you need to contact Snowflake Support.

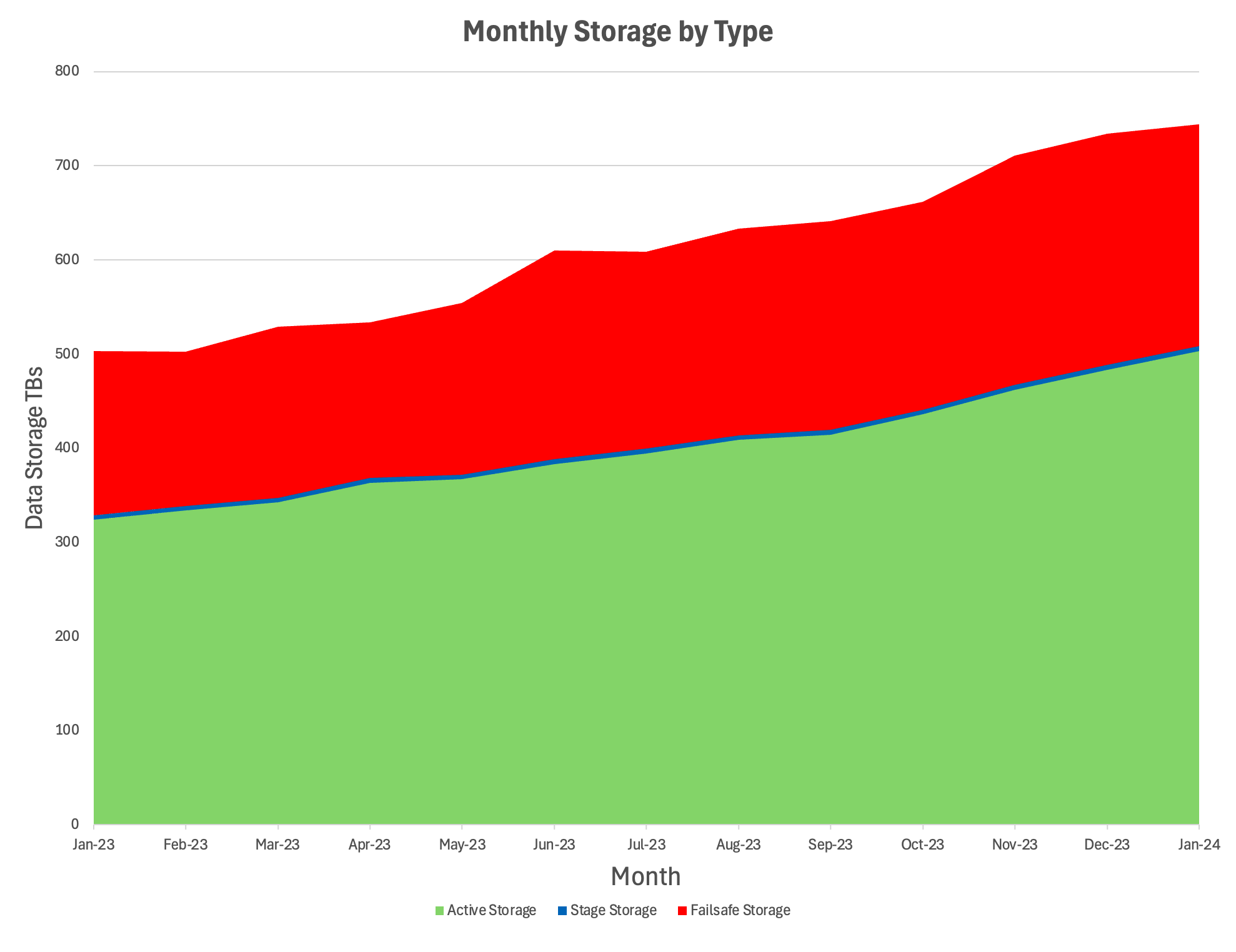

The graph below shows a pattern I've seen many times. It shows a gradual increase in data storage costs over the year, with Failsafe Storage accounting for over 30% of the total storage bill, which should never exceed about 5% of total storage.

Assuming a total storage of around 500 GB means paying more than $65,000 annually for a data recovery option you may never use. Worse still, 95% of the Failsafe data will never need recovery as it holds temporary data.

Snowflake Data Pipelines

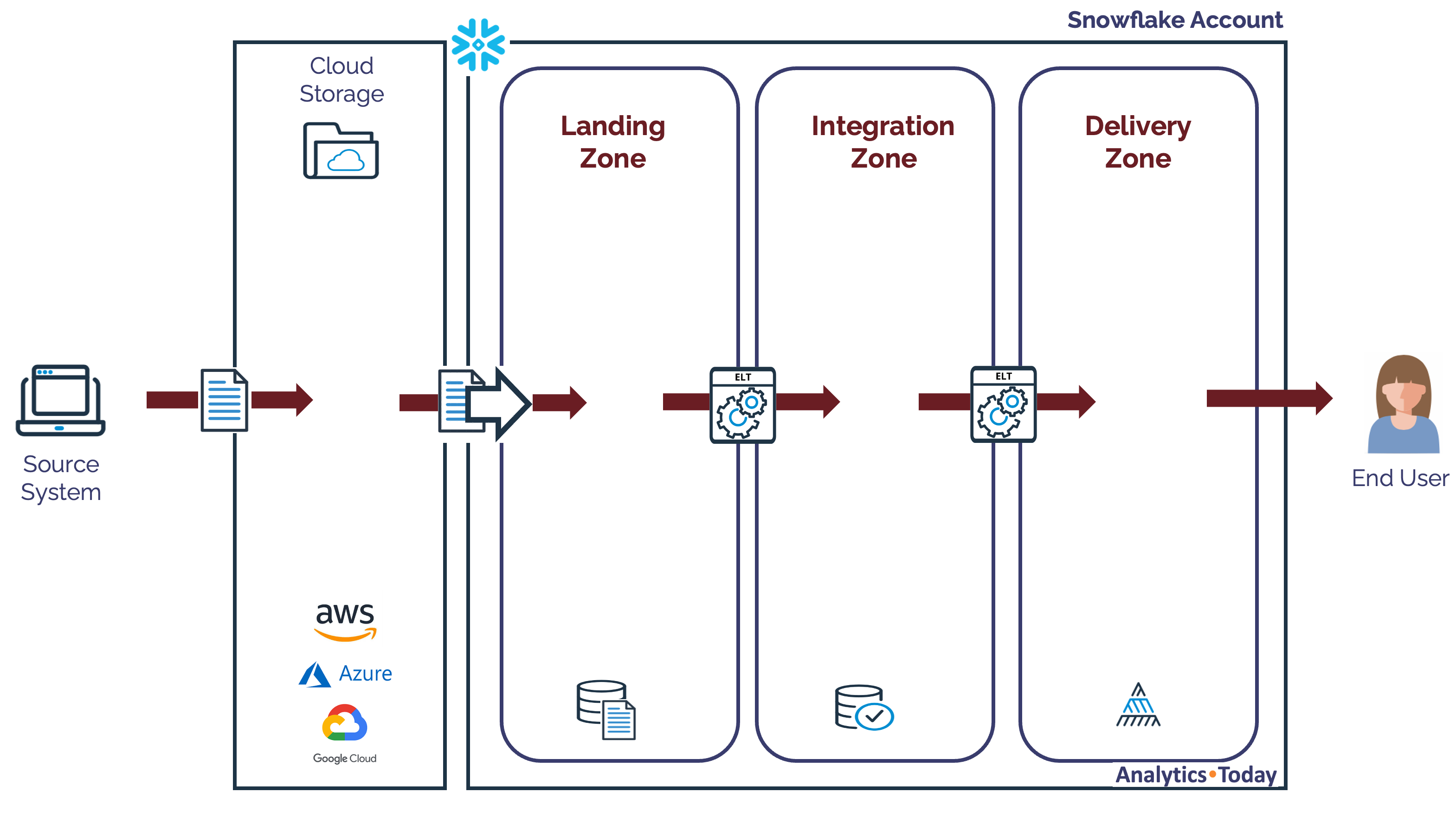

The diagram below illustrates a typical Snowflake data pipeline in which data is loaded from Cloud Storage into a Landing Zone using Snowflake Copy or Snowpipe before being transformed and integrated into the Integration Zone and then delivered to end-users in the Delivery Zone.

The critical point in the above diagram is that data files are loaded from Cloud Storage into the Landing Zone and later deleted or truncated after they have been transformed.

Even the Integration Zone includes tables with frequently re-processed data, which are repeatedly deleted and re-loaded.

The critical point is that this purely transient data is still held in Failsafe for seven days to enable data recovery. However, in the event of a failure, the data is more likely to be reloaded and re-processed rather than recovered.

For example, if a system loads around 10 TBs of data daily, it adds around $3,000 per year for additional storage costs. However, it also adds $19,320 per year for Failsafe storage for the extra 70 TBs of storage.

The answer is to convert tables in the Landing Zone into TRANSIENT tables.

Snowflake Data Retention

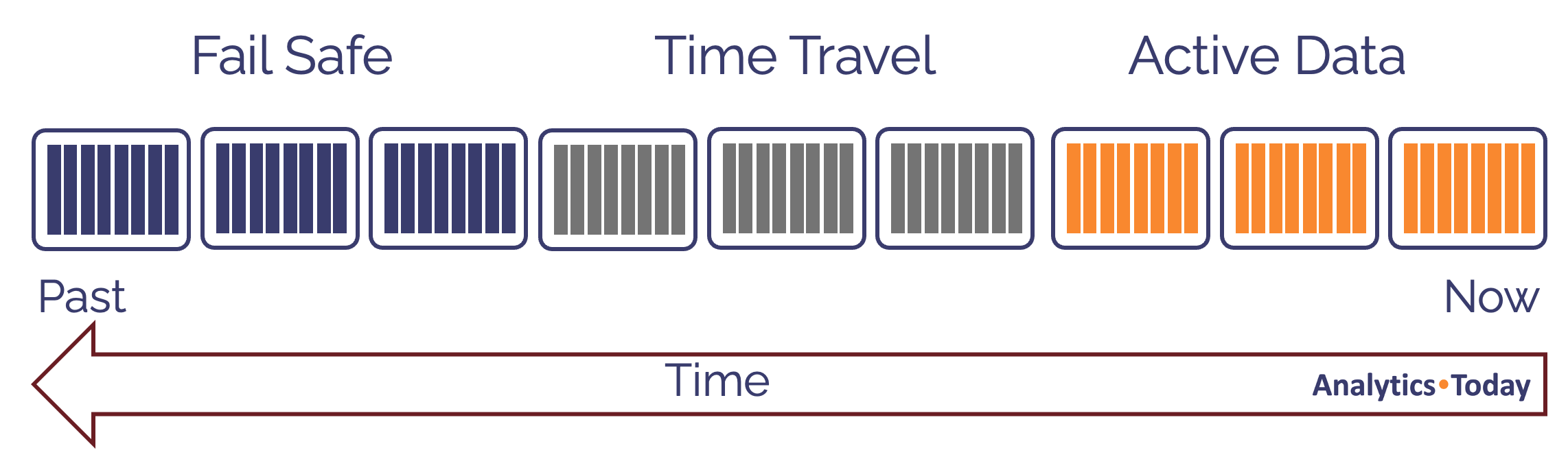

The diagram below illustrates how Snowflake includes built-in systems to retain data and avoid accidental loss.

The diagram above illustrates the default situation in which Active Data is added as new micro-partitions on the right. As data is updated or deleted, these are added to Time Travel for up to 90 days before being moved to Failsafe for 7 days.

This level of protection is sensible for infrequently changing data; however, it makes little sense for tables that are often dropped and re-created, deleted, or truncated. These are best deployed using TRANSIENT tables.

Identifying Candidate TRANSIENT Tables

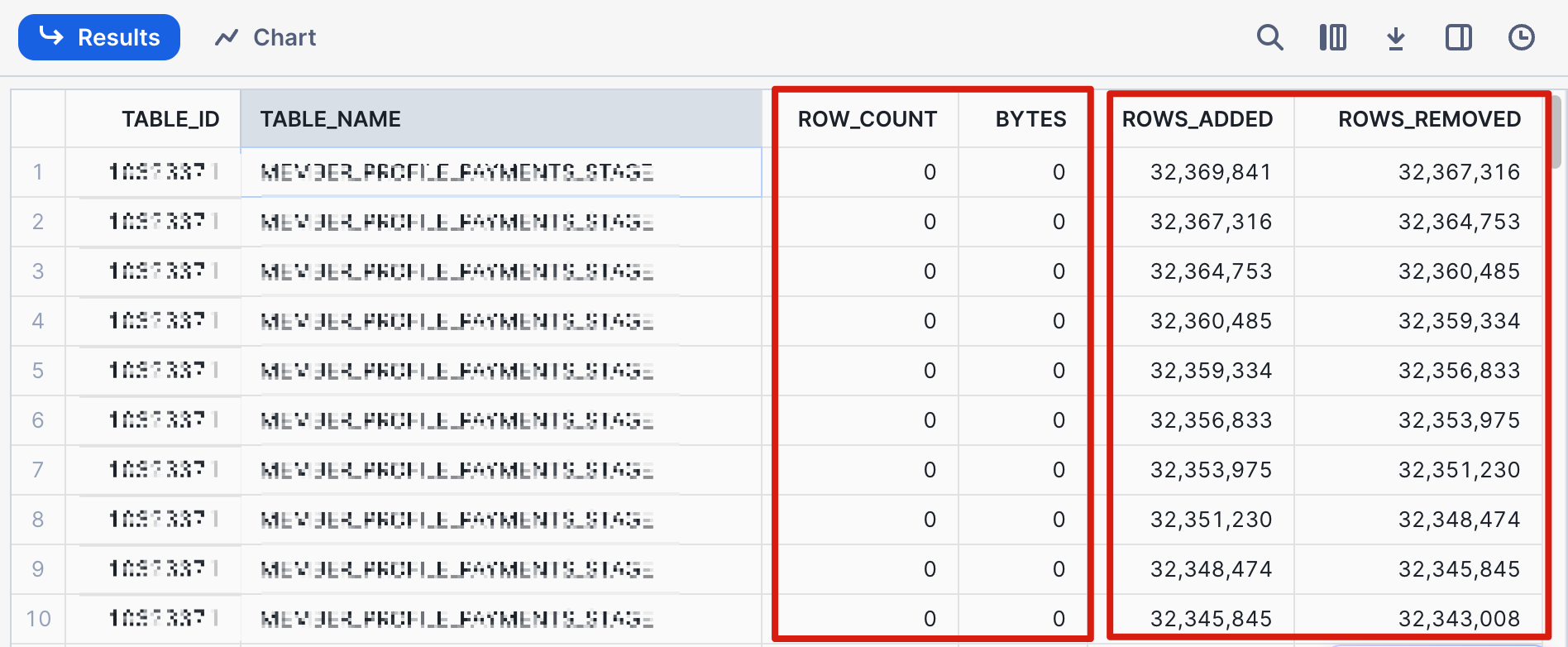

It's relatively easy to identify tables with repeating transformations that insert and then remove rows using the newly added view : table_dml_history.

The SQL statement below helps identify situations where tables are repeatedly re-loaded and can be used as the starting point to identify potential candidates to convert to TRANSIENT tables.

select h.table_id, h.table_name, t.row_count, t.bytes, h.rows_added, h.rows_removed

from snowflake.account_usage.table_dml_history h,

snowflake.account_usage.tables t

where true

and h.table_id = t.table_id

and h.start_time > dateadd(day, -30, current_timestamp())

and h.rows_removed > 0

and t.row_count = 0

and t.is_transient = 'NO'

order by h.rows_added + h.rows_removed desc

limit 100;

The screenshot above demonstrates how a single table is repeatedly loaded with millions of rows, which are then deleted or truncated. This table is a good candidate for replacement with a transient table.

Creating a Snowflake Transient Table

The SQL script below demonstrates how to create a transient table by simply including the TRANSIENT keyword.

create TRANSIENT table sales (

sale_id number,

sale_date date

customer_id number);

The critical feature of transient tables is that the data is transient, not the table. Once created, transient tables behave in precisely the same way as a default (PERMANENT) table.

The only difference is the ability to recover, which affects:

Time Travel: Unlike permanent tables, which have up to 90 days of Time Travel, Transient tables have (by default) just one day's Time Travel.

FailSafe: Unlike default tables, which are held for seven days, transient tables have no Failsafe and are immediately deleted when they are no longer held in Time Travel.

Snowflake Transient Tables Vs. Temporary Tables

Unlike permanent and transient tables, Snowflake temporary Tables are temporary and only exist within the current session. Once the session is ended, temporary tables will be dropped, which can help reduce storage costs further.

However, temporary tables have additional features, which include:

Naming Conflicts: Permanent and Transient tables must have a unique name within the schema. A temporary table can, however, be created with the same name as an existing table. In fact, a temporary table takes precedence over an existing table, hiding the original.

Session Specific: Temporary tables are only visible within the current session. This means multiple users can create temporary tables with the same name without issues.

Time Travel: Similar to Transient and Permanent tables, Temporary tables have time travel available. This supports time travel queries of up to one day from within the same session.

How to Convert a Table to a Transient Table

It's reasonable to assume Snowflake would provide an alter table command, but this is not an option. Instead, the following SQL demonstrates converting a permanent to a transient table.

create or replace transient table customer_transient

clone customer

copy grants;

alter table customer

swap with customer_transient;

drop table customer_transient;

The table CUSTOMER was converted to a transient table in the above example. The steps included:

Clone the

CUSTOMERtable to a transient table. (Note: thecopy grantsoption means the grants from theCUSTOMERtable are not lost.The

CUSTOMERandCUSTOMER_TRANSIENTtables are swapped. This is the logical equivalent of simply swapping the names.The

CUSTOMER_TRANSIENTtable was dropped as it's no longer needed.

How to create a Transient Schema or Database

In addition to Transient Tables, Snowflake provides the option to create a Transient Database or Schema.

The SQL script below demonstrates how to create these.

create transient database edw_dev;

create transient schema edw_landing_zone;

The advantage of a transient database or schema is that the tables created within it are transient by default. This means you don't need to remember to use the transient keyword when creating tables.

ALTER an existing database or schema to make them transient. However, that will not alter the existing tables, which need to be converted using the abovementioned method.Best Practices for TRANSIENT and TEMP tables

As indicated above, tables in the Landing Zone should always be created as TRANSIENT, and tables used to store intermediate values in the Integration Zone should be TRANSIENT or TEMPORARY.

The following best practices for transient data should be used:

Create a transient database or transient schema for tables in the Landing Zone to avoid unnecessary Failsafe storage costs.

Convert existing databases and schemas used to land data to transient and convert any existing tables, as these won't be converted automatically. Only newly created tables in a transient schema will be created as transient.

Avoid storing both transient and permanent tables in the same schema, as this makes it challenging to produce a point-in-time backup using a clone operation. The article on Time Travel and Clones provides more information about using Clones to create historical point-in-time backups.

Consider using a transient or temporary table when storing intermediate results as part of the data transformation pipeline. I favor using transient tables over temporary ones because they retain the data after the session completes, which makes it easier to query intermediate results in the event of failure. This technique makes it easier to debug transformation pipelines.

Periodically check data storage usage on the account to help identify issues around Failsafe storage resulting from transient or temporary tables.

Subscribe to my newsletter

Read articles from John Ryan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

John Ryan

John Ryan

After 30 years of experience building multi-terabyte data warehouse systems, I spent five years at Snowflake as a Senior Solution Architect, helping customers across Europe and the Middle East deliver lightning-fast insights from their data. In 2023, he joined Altimate.AI, which uses generative artificial intelligence to provide Snowflake performance and cost optimization insights and maximize customer return on investment. Certifications include Snowflake Data Superhero, Snowflake Subject Matter Expert, SnowPro Core, and SnowPro Advanced Architect.