ArgoCD on AWS EKS, as Simple as Ever!

Cloud Commander


Overview

Organizations today have multitudes of pipelines for deploying their applications. However, these pipelines often lack integration with the modern toolset, and they lack a more progressive and secure way to deploy applications using the latest DevOps practices. DevOps practices like GitOps and DevSecOps are becoming increasingly popular, and more engineers are adopting them to make their workflows easier, more efficient, and more resilient to change.

Let’s start with GitOps, which is where Argo CD comes in.

GitOps is a software engineering practice that uses a Git repository as its single source of truth. Teams commit declarative configurations into Git, which are used to create environments needed for the continuous delivery process. There is no manual environment setup and no use of standalone scripts—everything is defined through the Git repository.

A basic part of the GitOps process is the pull request. New configuration versions are introduced via pull requests and merged into the main branch of the Git repository. The latest version is then automatically deployed. The Git repository contains a full record of all changes and environment details at every stage of the process.

Argo CD handles the later stages of the GitOps process, ensuring that new configurations are correctly deployed to a Kubernetes cluster.
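Here is a minimal local sketch of the pull-request flow. It assumes only git and a POSIX shell. The desired state is a manifest committed to Git, a change is proposed on a branch (which is what a pull request carries), and merging that branch into main updates the state that a tool like Argo CD would then deploy. The repository, file names, and image tags are hypothetical. On a real team, the merge happens through a reviewed pull request, not a local `git merge`.

```shell
#!/usr/bin/env bash
# Local sketch of the GitOps change flow: desired state lives in Git, and a
# change is a commit on a branch that gets merged into main.
# Repo layout, file names, and image tags are hypothetical.
set -euo pipefail

repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main   # name the default branch "main"
git config user.name "GitOps Demo"
git config user.email "demo@example.com"

# 1. Commit the current desired state.
mkdir -p deploy
cat > deploy/deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
EOF
git add deploy
git commit -q -m "Initial desired state"

# 2. Propose a change on a branch (this is what a pull request carries).
git checkout -q -b bump-nginx
sed 's/nginx:1.25/nginx:1.27/' deploy/deployment.yaml > deploy/deployment.yaml.tmp
mv deploy/deployment.yaml.tmp deploy/deployment.yaml
git commit -q -am "Bump nginx to 1.27"

# 3. Merging the reviewed branch updates main, the branch Argo CD would track.
git checkout -q main
git merge -q --no-ff -m "Merge bump-nginx" bump-nginx

# The history is the audit trail of every desired-state change.
git log --oneline
```

The Git log is the "full record of all changes" described above. Rolling back means reverting a commit, not running commands against the cluster.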

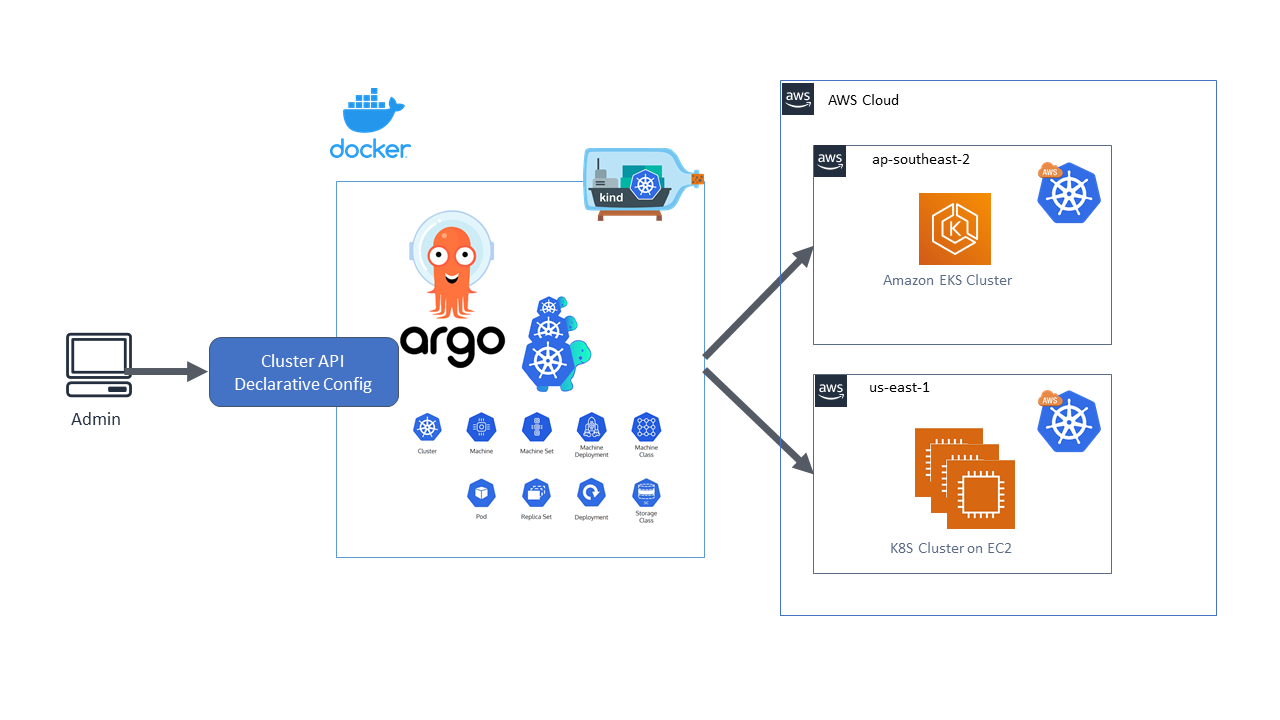

So, in modern pipelines or CD workflows, we no longer run helm install/upgrade or kubectl apply directly against the cluster. Instead, we use Argo CD in a side-car strategy: Argo CD runs in its own namespace inside the cluster and deploys applications to that same cluster through its custom resources (CRDs), such as Application. Applications with multiple environments can instead run Argo CD in a central cluster. That central instance then uses a remote cluster approach to deploy to each workload cluster (beta, alpha, staging, prod, etc.). You can also restrict who can access which applications using Argo CD's RBAC.
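Here is a sketch of the remote cluster approach. Argo CD can register an external cluster declaratively through a Secret in the argocd namespace labeled `argocd.argoproj.io/secret-type: cluster`. For EKS, the Secret's config can use `awsAuthConfig`, so Argo CD authenticates with an IAM role. The script below only writes that Secret to a file. The cluster name, API endpoint, role ARN, and CA data are hypothetical placeholders. The role would also need to be granted access inside the target cluster (not shown).

```shell
#!/usr/bin/env bash
# Writes a declarative Argo CD cluster Secret that registers a remote EKS
# cluster (here "prod") with a central Argo CD instance.
# All values below are hypothetical placeholders.
set -euo pipefail

env_name="prod"
cluster_name="prod-cluster"
api_endpoint="https://EXAMPLE0123456789.gr7.us-east-1.eks.amazonaws.com"
role_arn="arn:aws:iam::111122223333:role/argocd-deployer"
ca_data="<base64-encoded-ca-certificate>"

cat > "cluster-${env_name}.yaml" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${cluster_name}
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: ${env_name}
  server: ${api_endpoint}
  config: |
    {
      "awsAuthConfig": {
        "clusterName": "${cluster_name}",
        "roleARN": "${role_arn}"
      },
      "tlsClientConfig": {
        "insecure": false,
        "caData": "${ca_data}"
      }
    }
EOF

# Apply it in the central cluster with:
#   kubectl apply -f cluster-prod.yaml
echo "wrote cluster-${env_name}.yaml"
```

An Application can then target that cluster with `destination.name: prod` instead of the in-cluster address. Repeating this for each environment gives one central Argo CD that deploys to every workload cluster.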

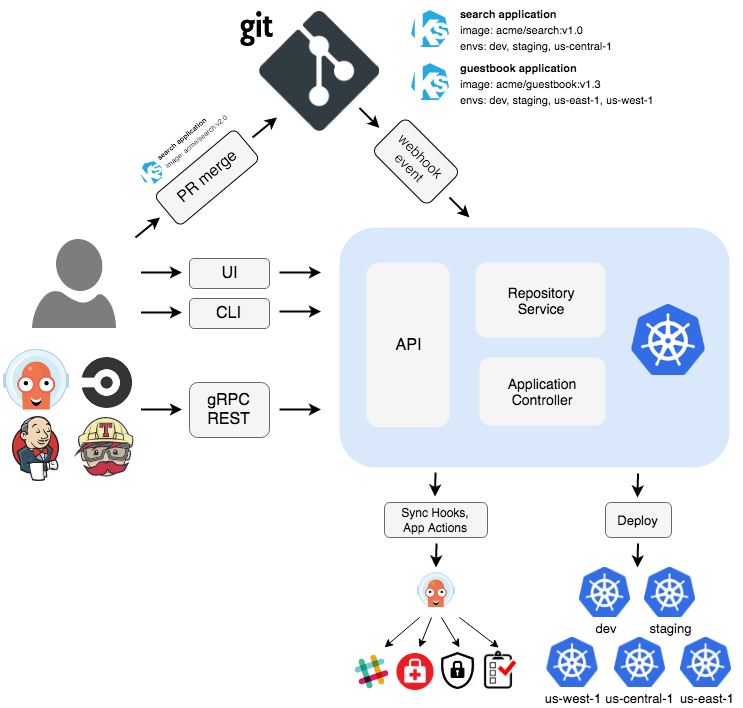

How does ArgoCD function?

Argo CD relies on three main components to manage the state of the cluster and fetch the latest desired state from the repo:

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. It has the following responsibilities:

· application management and status reporting

· invoking of application operations (e.g., sync, rollback, user-defined actions)

· repository and cluster credential management (stored as K8s secrets)

· authentication and auth delegation to external identity providers

· RBAC enforcement

· listener/forwarder for Git webhook events

Repository Server

The repository server is an internal service that maintains a local cache of the Git repository holding the application manifests. It is responsible for generating and returning the Kubernetes manifests when provided the following inputs:

· repository URL

· revision (commit, tag, branch)

· application path

· template-specific settings: parameters, Helm values.yaml

Application Controller

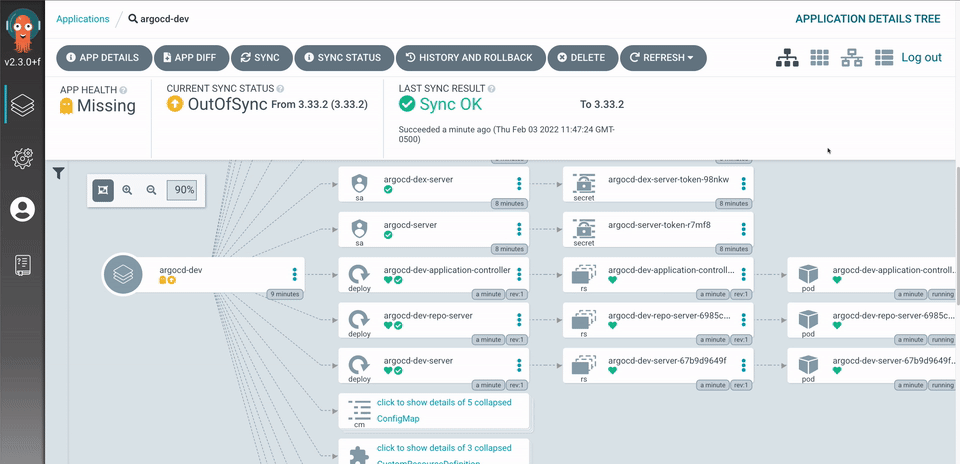

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repo). It detects when an application is OutOfSync and can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, PostSync).
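Here is a sketch of one of the lifecycle hooks mentioned above, a PreSync hook. It is an ordinary Kubernetes Job annotated with `argocd.argoproj.io/hook`, so the application controller runs it before applying the rest of the app on each sync. The Job name, image, and command are hypothetical stand-ins for something like a database migration. The script only writes the manifest into a deploy directory, where it would be committed alongside the app's other configs.

```shell
#!/usr/bin/env bash
# Writes a Job manifest that Argo CD runs as a PreSync hook, i.e. before it
# applies the rest of the app's manifests during a sync.
# The job name, image, and command are hypothetical (e.g. a DB migration).
set -euo pipefail

mkdir -p deploy
cat > deploy/db-migrate-hook.yaml <<'EOF'
apiVersion: batch/v1
kind: Job
metadata:
  name: db-migrate
  annotations:
    # Run this Job in the PreSync phase of every sync.
    argocd.argoproj.io/hook: PreSync
    # Delete the Job once it succeeds so the next sync can recreate it.
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  backoffLimit: 1
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: migrate
          image: busybox:1.36
          command: ["sh", "-c", "echo running migrations"]
EOF
echo "wrote deploy/db-migrate-hook.yaml"
```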

Let’s see Argo in action with AWS EKS.

Amazon EKS is a fully managed Kubernetes service that provides high availability, scalability, and security for containerized applications. It integrates seamlessly with AWS services, automates control plane management, and supports hybrid deployments. EKS also offers deep security features, native Kubernetes compatibility, and flexibility in deployment, making it ideal for production workloads.

I am particularly biased towards using EKS as I have more hands-on experience with AWS services, but you can also go with Azure or GCP.

Prerequisites: AWS CLI, kubectl, eksctl, Helm, and the argocd CLI (used when creating the app)

Steps:

1. Install eksctl on your machine (see the official eksctl installation guide).

2. Create a cluster with Fargate:

eksctl create cluster --name demo-cluster --region us-east-1 --fargate

3. Update the local kubeconfig:

aws eks update-kubeconfig --region us-east-1 --name demo-cluster

4. Create an argocd namespace:

kubectl create namespace argocd

5. Associate the namespace with a Fargate profile so that pods in argocd can be scheduled on Fargate. The default profile created by --fargate only covers the default and kube-system namespaces.

eksctl create fargateprofile \
  --cluster demo-cluster \
  --region us-east-1 \
  --name argocd \
  --namespace argocd

6. Install Argo CD in the cluster:

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/v2.11.2/manifests/install.yaml

Wait until every pod in the argocd namespace is Running and shows 1/1 in the READY column (check with kubectl get pods -n argocd).

You can test it locally at https://localhost:8080 by running:

kubectl port-forward svc/argocd-server 8080:443 -n argocd

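7. The initial username is admin. The password is stored in the argocd-initial-admin-secret Secret and can be retrieved with:

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}"

Secret values are base64-encoded, so copy the output and decode it with:

echo 'your-encoded-pass' | base64 --decode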

You have successfully deployed Argo CD on the cluster!

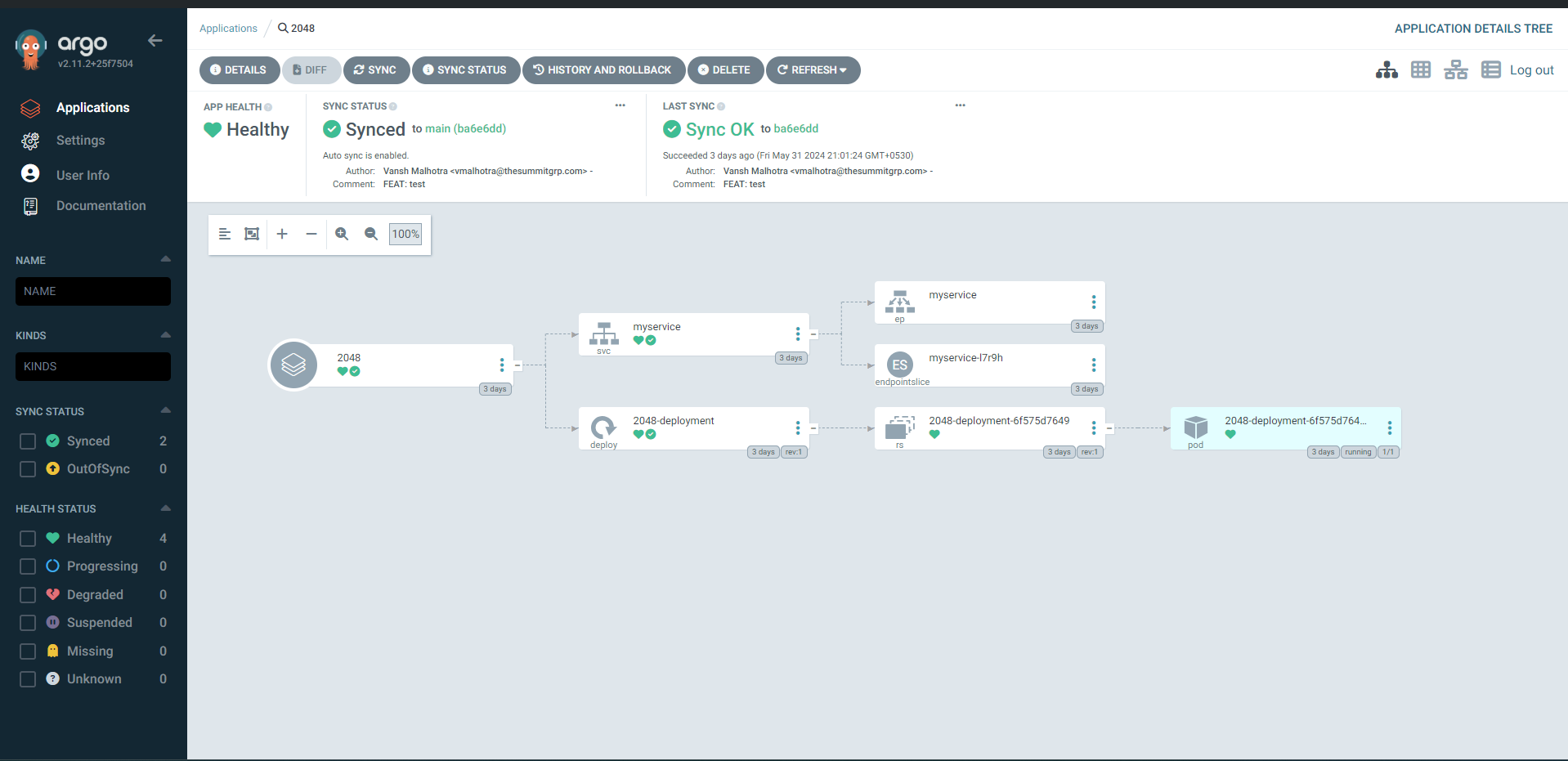

Now we can use Argo CD to configure an app and deploy it to the cluster.

For reference, see the sample repo vmalhotra7/Snyk-testing on GitHub ("Testing Trivy as a DevSec tool for CI/CD workflows"), in which Argo CD handles the CD part.

8. You can now use the Argo CD UI or the argocd CLI to create an app.

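Log in to the Argo CD CLI through the port-forward. The --insecure flag skips verification of the server's self-signed certificate.

argocd login localhost:8080 --username admin --password <initial-password> --insecure

Then create the app:

argocd app create <app-name> --repo <repo-url> --path <path-to-app> --dest-namespace <namespace> --dest-server https://kubernetes.default.svc

Here, https://kubernetes.default.svc is the in-cluster API server address. It signifies the in-cluster deployment model: the app is deployed to the same cluster Argo CD runs in.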

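Set --path to the directory that holds the Kubernetes config files (the deploy path in the sample repo).

Once the app is created, Argo CD polls the repo (every three minutes by default) and compares its Kubernetes configs with the cluster. An app created without a sync policy is only reported as OutOfSync. To deploy it, either trigger the first sync with argocd app sync <app-name> (or the Sync button in the UI), or pass --sync-policy automated to argocd app create so that Argo CD applies changes on its own.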
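The app can also be declared as an Argo CD Application resource instead of being created through the CLI. That way, the app definition itself lives in Git. The sketch below writes a manifest roughly equivalent to the `argocd app create` command above, with automated sync turned on. The app name, repo URL, path, and namespace are hypothetical placeholders. The `source` fields (`repoURL`, `targetRevision`, `path`) are the same inputs the repository server renders manifests from.

```shell
#!/usr/bin/env bash
# Writes an Argo CD Application manifest roughly equivalent to the
# `argocd app create` command above, with automated sync enabled.
# App name, repo URL, path, and namespace are hypothetical placeholders.
set -euo pipefail

app_name="demo-app"
repo_url="https://github.com/<your-user>/<your-repo>.git"
app_path="deploy"
dest_namespace="default"

cat > "${app_name}-application.yaml" <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ${app_name}
  namespace: argocd
  finalizers:
    # Deleting the Application also deletes the resources it deployed.
    - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    # Repository server inputs: repo URL, revision, and application path.
    repoURL: ${repo_url}
    targetRevision: HEAD
    path: ${app_path}
  destination:
    # In-cluster API server: deploy into the cluster Argo CD runs in.
    server: https://kubernetes.default.svc
    namespace: ${dest_namespace}
  syncPolicy:
    automated:
      prune: true     # delete resources that were removed from Git
      selfHeal: true  # revert manual changes made directly in the cluster
    syncOptions:
      - CreateNamespace=true
EOF

# Apply once; from then on, changes go through Git:
#   kubectl apply -f demo-app-application.yaml
echo "wrote ${app_name}-application.yaml"
```

With selfHeal enabled, Argo CD reverts manual edits or deletions in the cluster to match Git, which matters later when cleaning up. Because of the finalizer, deleting the Application also removes the resources it created.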

Success! Your app is deployed in the cluster.

You can access the app locally by running

kubectl port-forward svc/myservice 8081:80 -n <namespace>

Then open http://localhost:8081 to access the website.

How to set up the AWS Load Balancer Controller add-on (Optional)

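To access the app from outside the cluster, we have to install the AWS Load Balancer Controller and create an Ingress resource. The controller then provisions an Application Load Balancer (ALB) and maps the ALB endpoint to the application.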

Configure the IAM OIDC provider, which the controller's IAM service account relies on:

export cluster_name=demo-cluster

oidc_id=$(aws eks describe-cluster --name $cluster_name --query "cluster.identity.oidc.issuer" --output text | cut -d '/' -f 5)

Check whether an IAM OIDC provider is already configured:

aws iam list-open-id-connect-providers | grep $oidc_id | cut -d "/" -f4
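To show what the two `cut` commands above extract, here is a worked example that runs them on sample strings in the format AWS returns. The OIDC ID and account number are made-up examples, not real values. A non-empty result from the second pipeline means a matching provider already exists.

```shell
#!/usr/bin/env bash
# Shows what the two `cut` commands above extract, using sample strings in
# the format AWS returns (the ID and account number are made-up examples).
set -euo pipefail

issuer="https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"
# Splitting on "/": 1="https:", 2="", 3=host, 4="id", 5=<OIDC ID>
oidc_id=$(echo "$issuer" | cut -d '/' -f 5)
echo "$oidc_id"

# One line of `aws iam list-open-id-connect-providers` output:
arn_line='"Arn": "arn:aws:iam::111122223333:oidc-provider/oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"'
# grep keeps the matching line; field 4 after splitting on "/" is the ID,
# followed by the closing JSON quote.
match=$(echo "$arn_line" | grep "$oidc_id" | cut -d '/' -f 4)
echo "$match"
```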

If the command returns nothing, create the provider:

eksctl utils associate-iam-oidc-provider --cluster $cluster_name --approve

Configure an IAM service account.

Download IAM policy

curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/install/iam_policy.json

Create IAM Policy

aws iam create-policy \
  --policy-name AWSLoadBalancerControllerIAMPolicy \
  --policy-document file://iam_policy.json

Create the IAM role and its Kubernetes service account

eksctl create iamserviceaccount \
  --cluster=<your-cluster-name> \
  --namespace=kube-system \
  --name=aws-load-balancer-controller \
  --role-name AmazonEKSLoadBalancerControllerRole \
  --attach-policy-arn=arn:aws:iam::<your-aws-account-id>:policy/AWSLoadBalancerControllerIAMPolicy \
  --approve

Deploy ALB controller

Add helm repo

helm repo add eks https://aws.github.io/eks-charts

Update the repo

helm repo update eks

Install

helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=<your-cluster-name> \
  --set serviceAccount.create=false \
  --set serviceAccount.name=aws-load-balancer-controller \
  --set region=<region> \
  --set vpcId=<your-vpc-id>

Verify that the deployments are running.

kubectl get deployment -n kube-system aws-load-balancer-controller
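The controller only creates an ALB once an Ingress exists. Here is a sketch of one for the app, assuming the myservice Service on port 80 from the port-forward example. The Ingress name and namespace are hypothetical. Because this cluster runs on Fargate, the target type is set to `ip`, so the ALB routes straight to pod IPs. The script writes the manifest to a file. You would then commit it to the app's config directory for Argo CD to sync, or apply it with `kubectl apply -f ingress.yaml`.

```shell
#!/usr/bin/env bash
# Writes an Ingress that the AWS Load Balancer Controller turns into an
# internet-facing ALB. Service name/port follow the port-forward example
# (myservice:80); the Ingress name and namespace are hypothetical.
set -euo pipefail

cat > ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myservice-ingress
  namespace: default
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    # Fargate pods have no EC2 nodes behind them, so target pod IPs directly.
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: myservice
                port:
                  number: 80
EOF
echo "wrote ingress.yaml"
```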

Once an Ingress for the app exists, check the ALB DNS name in the ADDRESS column:

kubectl get ingress <ingress-name> -n <namespace>

Clean up the resources.

First, delete any Services (and Ingresses) that have an external resource, such as an ALB, attached to them:

kubectl get svc --all-namespaces

kubectl delete svc <svc-name> -n <namespace>

Some Services may be deployed through Argo CD with auto-sync and self-heal enabled. For those, a manual delete will not stick: Argo CD recreates the Service to keep the cluster state in line with the state in the GitHub repo.

In that case, remove (or comment out) those resources in the config files and sync the change through Argo CD with pruning enabled. Alternatively, delete the Argo CD Application itself with argocd app delete <app-name>, which by default also deletes the resources it manages.

Next, delete the cluster along with its related resources:

eksctl delete cluster --name demo-cluster --region us-east-1

Conclusion

For CD workflows, Argo CD and Flux CD are the frontrunners. Argo CD may or may not be the right solution for you, depending on the number of applications in your deployment. If you only deploy two or three resources, Argo CD would be overkill. For larger workloads, Argo CD works like magic: there is no longer any need to run commands against your cluster by hand. The GitOps methodology through Argo CD lets organizations maintain a single source of truth, which is easier and more efficient to manage.

A better approach to repo management than the one I followed here is to keep separate repos for the application code and the deployment configs. This separates the CI and CD processes, which is good practice, because managing and maintaining applications through mono-repos can be cumbersome and inefficient. Multiple repos give users better control over the workflow.

For multi-environment applications with beta, alpha, staging, and prod environments, running Argo CD with the remote cluster approach can be more efficient than the side-car approach.

As for the cloud platform, I'd suggest starting with AWS. The community support is incredible, and its APIs and documentation, with their abstractions over resources, are very beginner-friendly.

Finally, continuous delivery is super important to any organization on an agile journey. It is baked into the Agile Manifesto and is “table stakes” for any serious technology company.

Like any investment, it will cost money, but it will pay off well if you take it seriously and do it well. It will increase quality, reduce risk, remove errors, enable fast feedback, and deliver customer value.
