Blackwell vs. Hopper: In-Depth Comparison of Architecture Performance

Tanishka Singh


When it comes to GPU architecture, NVIDIA never disappoints. Its products are known for competing as fiercely with each other as with anything else on the market. And with all the buzz around Blackwell vs. Hopper, NVIDIA's two latest architectures, it goes without saying that NVIDIA is not slowing down anytime soon.

Let's start in layman's terms. Imagine Hopper as your reliable, hardworking friend whose long hours at the gym are now paying off big. And Blackwell? That's the new kid on campus, ready to show off some fresh moves. You can't be sure yet whether Blackwell is better, but one thing is certain: Blackwell is going to shake up the campus (the GPU industry).

But wait, why should you care? If you're into gaming, development, AI, or any similar field, knowing about these architectures really matters. Let's break it down!

Blackwell: The Next Generation of GPU Technology

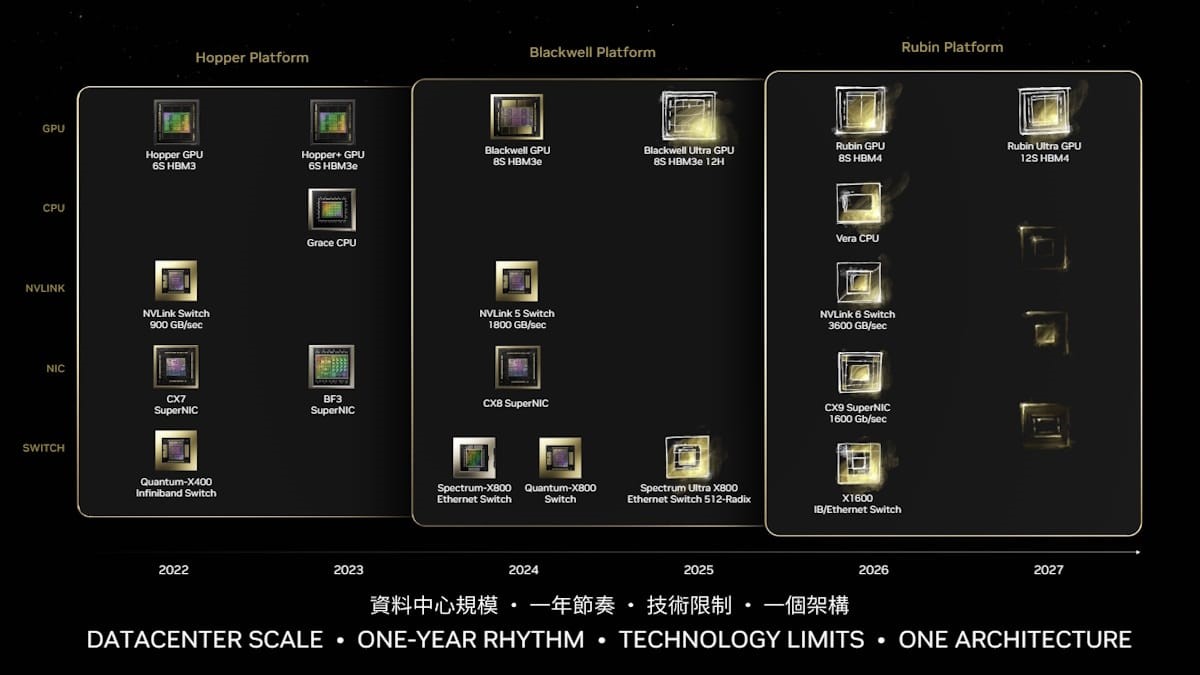

The Blackwell architecture is named after David Blackwell, a famous statistician and mathematician. Blackwell first came into public view through leaked details in 2022, but NVIDIA did not officially confirm it until October 2023, when an investor presentation placed the B40 and B100 GPUs on its roadmap of upcoming products.

Blackwell, NVIDIA's latest GPU architecture, is designed to make an enormous leap in accelerated computing and generative AI. Nicknamed "The New Industrial Revolution's Engine," Blackwell introduces six key technologies that together enable AI training and real-time LLM inference for models scaling up to 10 trillion parameters.
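To get a feel for why low-precision formats matter at this scale, here is a back-of-the-envelope sketch of how much memory the weights alone of a 10-trillion-parameter model would occupy at different precisions. It is only an illustration: it ignores activations, optimizer state, and KV cache, so real footprints are larger.

```python
# Back-of-the-envelope: memory needed just to hold model weights.
# Ignores activations, optimizer state, and KV cache (real usage is higher).

BYTES_PER_PARAM = {
    "FP16": 2.0,
    "FP8": 1.0,
    "FP4": 0.5,
}


def weight_footprint_tb(num_params: float, precision: str) -> float:
    """Return weight storage in terabytes (1 TB = 1e12 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e12


if __name__ == "__main__":
    params = 10e12  # 10 trillion parameters, the scale cited for Blackwell
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_footprint_tb(params, precision):.0f} TB")
```

Each halving of precision halves the weight footprint, which is why FP4 support and large NVLink domains go hand in hand for models of this size.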

Key Innovations of Blackwell:

World's Most Powerful Chip:

208 billion transistors

Custom-built 4NP TSMC process

Two reticle-limited GPU dies connected by a 10 TB/s chip-to-chip link

Second-Generation Transformer Engine:

Micro-tensor scaling support

Advanced dynamic range management algorithms

4-bit floating point (FP4) AI inference capabilities

Double the compute and model sizes compared to the previous generation

Fifth-Generation NVLink:

1.8TB/s bidirectional throughput per GPU

Supports up to 576 GPUs for complex LLMs

RAS Engine:

Dedicated engine for reliability, availability, and serviceability

AI-based preventative maintenance capabilities

Improved system uptime and resiliency for large-scale AI deployments

Secure AI:

Advanced confidential computing capabilities

Native interface encryption protocols

Designed for privacy-sensitive industries like healthcare and financial services

Decompression Engine:

Supports the latest compression formats to accelerate database queries

Enhances performance in data analytics and data science
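The micro-tensor scaling and 4-bit floating point items in the Second-Generation Transformer Engine entry work together: a 4-bit float has very few representable values, so small blocks of a tensor each get their own scale factor. The sketch below is illustrative only. It assumes the common E2M1 layout for FP4 (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6), a hypothetical block size of 16, and a full-precision scale per block; Blackwell's actual hardware scale encoding is not described in this article.

```python
# Illustrative sketch of block-scaled 4-bit float (FP4) quantization.
# Assumptions (hypothetical, not taken from NVIDIA documentation): the E2M1
# value grid, a block size of 16, and one full-precision scale per block.
from typing import List, Tuple

# Non-negative magnitudes representable in E2M1; the sign is handled separately.
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0
BLOCK_SIZE = 16  # hypothetical block size

Block = Tuple[float, List[float]]  # (scale, 4-bit codes stored as floats)


def quantize_block(block: List[float]) -> Block:
    """Scale the block so its largest magnitude maps to 6.0, then snap to the grid."""
    amax = max(abs(v) for v in block)
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    codes = []
    for v in block:
        mag = abs(v) / scale
        # Nearest grid value; exact ties resolve to the smaller magnitude.
        nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def quantize(values: List[float]) -> List[Block]:
    """Split values into blocks, each with its own scale (micro-scaling)."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks: List[Block]) -> List[float]:
    """Multiply each code by its block's scale to recover approximate values."""
    return [scale * c for scale, codes in blocks for c in codes]


if __name__ == "__main__":
    # A block of small values followed by a block of large ones. With a single
    # tensor-wide scale, the small values would all round to zero.
    data = [0.01 * i for i in range(16)] + [100.0 * i for i in range(16)]
    restored = dequantize(quantize(data))
    for i in (1, 5, 15, 17, 21, 31):
        print(f"{data[i]:10.4f} -> {restored[i]:10.4f}")
```

The per-block scale is what keeps the small-valued block usable: it gets its own fine-grained scale instead of sharing one dictated by the largest value in the whole tensor.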

Additional Blackwell Specifications:

20 petaFLOPS of AI performance

192GB of HBM3e

8TB/s of memory bandwidth

Full-stack, CUDA-enabled
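One way to read the peak-compute and memory-bandwidth figures together is the roofline model's "ridge point": the arithmetic intensity (FLOPs per byte moved from memory) a kernel needs before compute, rather than bandwidth, becomes the limit. This sketch takes the headline numbers above at face value; the 20 petaFLOPS figure is a low-precision AI peak, so the ridge point for higher-precision math would be lower.

```python
# Roofline "ridge point": FLOPs per byte needed to become compute-bound.
# Uses the headline Blackwell figures quoted in this article.


def ridge_point(peak_flops: float, bandwidth_bytes_per_s: float) -> float:
    """Arithmetic intensity (FLOP/byte) where compute and memory limits meet."""
    return peak_flops / bandwidth_bytes_per_s


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline bound: min(peak compute, bandwidth * arithmetic intensity)."""
    return min(peak_flops, bandwidth * intensity)


if __name__ == "__main__":
    peak = 20e15  # 20 petaFLOPS (low-precision AI peak)
    bw = 8e12     # 8 TB/s memory bandwidth
    print(f"ridge point: {ridge_point(peak, bw):.0f} FLOP/byte")
    # A kernel doing only 100 FLOPs per byte stays bandwidth-bound.
    print(f"at 100 FLOP/byte: {attainable_flops(100, peak, bw) / 1e15:.1f} PFLOPS")
```

Kernels with low arithmetic intensity, such as single-request LLM decoding, sit far left of the ridge, which is why memory bandwidth matters as much as peak FLOPS.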

Hopper: Revolutionizing AI and HPC Workloads

The Hopper architecture, named after computing pioneer Grace Hopper, was introduced by NVIDIA as a breakthrough in AI and high-performance computing (HPC). Officially unveiled in March 2022, Hopper builds on NVIDIA's legacy of innovation, targeting AI training, inference, and HPC at data center scale. It is designed to accelerate complex workloads efficiently and to scale across large multi-GPU systems.

Key Innovations of Hopper:

Fourth-Generation Tensor Cores:

Improved AI and HPC performance

Support for FP8 precision to speed up AI training and inference

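Compute-Focused Design:

Hopper omits ray-tracing hardware (RT Cores), dedicating its silicon to AI and HPC; third-generation RT Cores arrived with NVIDIA's Ada Lovelace architecture instead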

HBM3 Memory Subsystem:

Up to 80GB of high-bandwidth memory

Memory bandwidth reaching up to 3TB/s

Second-Generation MIG Technology:

Enables secure partitioning of GPUs into multiple instances

Optimizes GPU utilization and quality of service in multi-tenant environments

NVLink 4.0 and NVSwitch:

900 GB/s bidirectional throughput per GPU

Enhanced scalability for multi-GPU systems across multiple servers

Transformer Engine:

Accelerates AI training and inference for transformer models

Dynamically mixes FP8 and FP16 precision for faster computation
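The Transformer Engine's FP8 support relies on two 8-bit layouts from the FP8 formats NVIDIA published with Arm and Intel: E4M3 (more precision, less range), typically used for forward-pass tensors, and E5M2 (more range), typically used for gradients. The sketch below computes the largest finite value of each and shows a simple per-tensor scale that stretches small values across the format's range. The scaling rule (format max divided by the tensor's absolute max) is a simplified illustration, not NVIDIA's exact recipe.

```python
# FP8 layouts used by Hopper's Transformer Engine: E4M3 and E5M2.
# Computes each format's largest finite value and a simple per-tensor scale.
# The scaling rule is a simplified illustration, not NVIDIA's exact recipe.
from typing import List


def max_finite(exp_bits: int, man_bits: int, ieee_like: bool) -> float:
    """Largest finite value of a small float format with the given bit layout.

    ieee_like=True: the all-ones exponent is reserved for Inf/NaN (E5M2, FP16).
    ieee_like=False: the all-ones exponent still holds normal numbers; only the
    all-ones mantissa there encodes NaN (the E4M3 convention).
    """
    bias = 2 ** (exp_bits - 1) - 1
    if ieee_like:
        max_exp = (2 ** exp_bits - 2) - bias
        max_mantissa = 2 - 2 ** (-man_bits)      # binary 1.11...1
    else:
        max_exp = (2 ** exp_bits - 1) - bias
        max_mantissa = 2 - 2 ** (1 - man_bits)   # binary 1.11...0
    return max_mantissa * 2 ** max_exp


def per_tensor_scale(values: List[float], fmt_max: float) -> float:
    """Scale factor that maps the tensor's largest magnitude onto fmt_max."""
    amax = max(abs(v) for v in values)
    return fmt_max / amax if amax > 0 else 1.0


if __name__ == "__main__":
    e4m3_max = max_finite(4, 3, ieee_like=False)
    e5m2_max = max_finite(5, 2, ieee_like=True)
    print(f"E4M3 max: {e4m3_max}, E5M2 max: {e5m2_max}")

    # Hypothetical tiny activations: scaling stretches them across E4M3's range
    # so fewer values fall into the coarse subnormal region near zero.
    activations = [0.002, -0.013, 0.0007, 0.009]
    s = per_tensor_scale(activations, e4m3_max)
    print(f"scale = {s:.1f}")
    print([round(v * s, 2) for v in activations])
```

The same function reproduces FP16's familiar maximum of 65504 with `max_finite(5, 10, ieee_like=True)`, a quick sanity check that the bit arithmetic is right.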

Additional Hopper Specifications:

60 teraflops of FP64 performance

Built on TSMC's 4N process

Support for PCIe Gen 5 for faster data transfer

Advanced confidential computing capabilities
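With both spec sheets in hand, a short script can put the headline bandwidth numbers side by side. It also estimates a lower bound on per-token decode latency for a memory-bound LLM, where every weight is read from memory once per generated token: latency is at least weight bytes divided by memory bandwidth. The 70-billion-parameter model and the choice of FP8 on Hopper versus FP4 on Blackwell are hypothetical inputs for illustration; real latency also depends on KV-cache traffic, batching, and kernel efficiency.

```python
# Side-by-side ratios from the headline figures in this article, plus a
# memory-bound lower bound on per-token decode latency.

SPECS = {
    "Blackwell": {"nvlink_tb_s": 1.8, "hbm_tb_s": 8.0},
    "Hopper": {"nvlink_tb_s": 0.9, "hbm_tb_s": 3.0},  # 900 GB/s NVLink
}


def ratio(metric: str) -> float:
    """How many times larger Blackwell's figure is than Hopper's."""
    return SPECS["Blackwell"][metric] / SPECS["Hopper"][metric]


def decode_latency_ms(params: float, bytes_per_param: float, hbm_tb_s: float) -> float:
    """Lower bound: time to stream all weights from memory once (one token)."""
    return params * bytes_per_param / (hbm_tb_s * 1e12) * 1e3


if __name__ == "__main__":
    print(f"NVLink bandwidth ratio: {ratio('nvlink_tb_s'):.1f}x")
    print(f"Memory bandwidth ratio: {ratio('hbm_tb_s'):.2f}x")
    params = 70e9  # hypothetical 70B-parameter model
    hopper = decode_latency_ms(params, 1.0, SPECS["Hopper"]["hbm_tb_s"])        # FP8
    blackwell = decode_latency_ms(params, 0.5, SPECS["Blackwell"]["hbm_tb_s"])  # FP4
    print(f"Hopper (FP8):    >= {hopper:.1f} ms/token")
    print(f"Blackwell (FP4): >= {blackwell:.2f} ms/token")
```

The gap in the decode estimate comes from two multiplying factors: higher memory bandwidth and fewer bytes per weight.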

Blackwell and Hopper each represent a significant evolution in GPU technology, with their own strengths and target applications. As NVIDIA keeps delivering a new architecture every couple of years, it's clear that the future of computing is not just about more power but about smarter, more specialized design. Whether it's gaming, development, or research, there's an NVIDIA architecture built for you. You can pre-reserve NVIDIA HGX B200 GPUs from NeevCloud for your future endeavors.

The versatility of Blackwell or the specialized power of Hopper: the choice is yours!
