Mini-git: Understanding How Files Are Stored in Git Objects

Keerthivardhan

Yesterday, I set out to implement one of Git's core functionalities on my own: how files are stored, what Git objects are, and how hashing and compression work. It took me 4 hours to develop, and in this article I'll walk you through my thought process and approach.

What Happens When You Commit a File?

My implementation follows a simplified version of what Git does under the hood when you commit a file:

File Compression:

The content of the file is compressed with zlib to reduce its size. This compressed content is what gets stored in the object database.

Hash Calculation:

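In this implementation, a SHA-1 hash is generated from the compressed file content, and it serves as the identifier for the file in the object database. Real Git differs here: it hashes the uncompressed content prefixed with a `blob <size>\0` header, and compresses afterward.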

Storing the Object:

The object file is stored in the .mygit/objects directory, organized by the first two characters of the hash. This structure makes it easier to manage and retrieve objects efficiently.

Updating Commit Information:

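The new hash is recorded against the file path in fileCommitMap.json, and a commit object is added to the current branch.

The code below demonstrates how files are stored, starting with the constructor that initializes the repository: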

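// Node's built-in modules used by the snippets in this article (top of the file)
const fs = require('fs');
const path = require('path');
const zlib = require('zlib');
const crypto = require('crypto');

constructor() {
    const rootDir = process.cwd();
    this.gitDir = path.join(rootDir, '.mygit');
    this.objectsDir = path.join(this.gitDir, 'objects');
    // JSON file mapping each file path to the hash of its last commit
    this.fileAndLastCommitMap = path.join(this.gitDir, 'fileCommitMap.json');
    this.stagedFiles = {}; // Tracks the hashes of the files (not suitable)
    this.Branchs = [];
    this.currBranch = new Branch("head");

    // Initialize the repository on first use
    if (!fs.existsSync(this.gitDir)) {
        fs.mkdirSync(this.gitDir);
        fs.mkdirSync(this.objectsDir);
        fs.writeFileSync(this.fileAndLastCommitMap, JSON.stringify({}));
    }
}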

The commit functionality below handles a single file at a time:

commit(filePath) {
    const fullPath = path.resolve(filePath);
    console.log("fullPath : ", fullPath);
    if (!fs.existsSync(fullPath)) {
        console.log(`File ${filePath} does not exist.`);
        return;
    }

    // Compress the file, then hash the compressed bytes to get the object ID
    const fileContent = fs.readFileSync(fullPath);
    const compressedContent = zlib.deflateSync(fileContent);
    const hash = crypto.createHash('sha1').update(compressedContent).digest('hex');

    // Skip the commit if the hash matches the last committed one
    const lastCommitMap = JSON.parse(fs.readFileSync(this.fileAndLastCommitMap, 'utf-8'));
    const previousHash = lastCommitMap[filePath];
    if (previousHash && previousHash === hash) {
        console.log(`No changes detected in ${filePath}.`);
        return;
    }

    // Record the new hash and add the commit object to the current branch
    const commit = new Commit(hash);
    lastCommitMap[filePath] = hash;
    fs.writeFileSync(this.fileAndLastCommitMap, JSON.stringify(lastCommitMap));
    this.currBranch.commits.push(commit);

    // Store the compressed content at objects/<first 2 hash chars>/<remaining chars>
    const objectDir = path.join(this.objectsDir, hash.substring(0, 2));
    const objectPath = path.join(objectDir, hash.substring(2));
    if (!fs.existsSync(objectDir)) {
        fs.mkdirSync(objectDir);
    }
    fs.writeFileSync(objectPath, compressedContent);

    console.log(`on Branch ${this.currBranch.name}`);
    console.log(`Committed ${filePath} with hash ${hash}`);

    // Show a diff against the previous version, if there was one
    if (previousHash != undefined) {
        this.displayChanges(previousHash, fileContent.toString('utf-8'), commit);
    }
}
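For comparison, real Git derives a blob's ID from the uncompressed content, prefixed with a header of the form `blob <size>\0`, and only then compresses the result for storage. The sketch below illustrates that scheme; `gitBlobHash` is a hypothetical helper name and is not part of the code above.

```javascript
const crypto = require('crypto');
const zlib = require('zlib');

// Hypothetical helper: compute the ID that Git assigns to a blob,
// plus the compressed bytes Git would store under that ID.
function gitBlobHash(content) {
  const body = Buffer.isBuffer(content) ? content : Buffer.from(content, 'utf-8');
  // Header is "blob <size in bytes>" followed by a NUL byte
  const header = Buffer.from(`blob ${body.length}\0`);
  const store = Buffer.concat([header, body]);
  // The hash covers the UNCOMPRESSED header + content
  const hash = crypto.createHash('sha1').update(store).digest('hex');
  // Compression happens only after hashing
  const compressed = zlib.deflateSync(store);
  return { hash, compressed };
}

console.log(gitBlobHash('hello world\n').hash);
// 3b18e512dba79e4c8300dd08aeb37f8e728b8dad
```

One consequence of hashing the compressed bytes, as the `commit` method above does, is that the ID depends on the compressor's output, which can vary with zlib settings. Hashing the uncompressed content avoids that dependency.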

For every file, I calculate a SHA-1 hash using Node's crypto module.

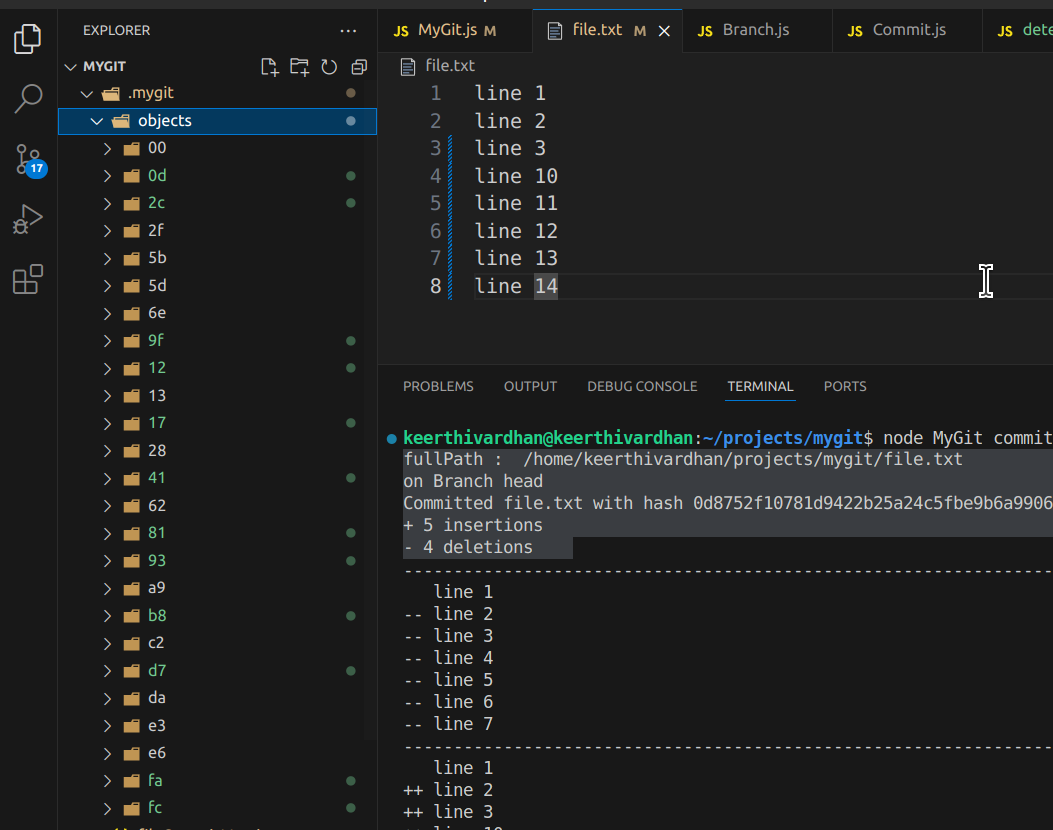

Inside the objects folder, a new folder is created whose name is the first two characters of the hash.

A file is then created inside that folder, named with the remaining characters of the hash. This file stores the zlib-compressed content of the committed file.

Changes are detected by comparing the newly calculated hash with the last recorded hash of the file.
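To make the storage layout concrete, here is a minimal sketch of writing an object and reading it back. It follows the same scheme as the `commit` method (hash of the compressed bytes, first two characters as the folder name). The helper names `objectPathFor`, `writeObject`, and `readObject` are hypothetical, and the sketch uses a temporary directory rather than `.mygit/objects`.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const zlib = require('zlib');
const crypto = require('crypto');

// Hypothetical helper: objects/<first 2 hash chars>/<remaining hash chars>
function objectPathFor(objectsDir, hash) {
  return path.join(objectsDir, hash.substring(0, 2), hash.substring(2));
}

// Hypothetical helper: compress, hash the compressed bytes, and store them
function writeObject(objectsDir, content) {
  const compressed = zlib.deflateSync(content);
  const hash = crypto.createHash('sha1').update(compressed).digest('hex');
  const objectPath = objectPathFor(objectsDir, hash);
  fs.mkdirSync(path.dirname(objectPath), { recursive: true });
  fs.writeFileSync(objectPath, compressed);
  return hash;
}

// Hypothetical helper: locate the object by hash and decompress it
function readObject(objectsDir, hash) {
  const compressed = fs.readFileSync(objectPathFor(objectsDir, hash));
  return zlib.inflateSync(compressed);
}

// Usage: store a small buffer in a temporary objects directory and read it back
const objectsDir = fs.mkdtempSync(path.join(os.tmpdir(), 'mygit-objects-'));
const hash = writeObject(objectsDir, Buffer.from('hello mini-git\n'));
console.log(readObject(objectsDir, hash).toString('utf-8')); // prints: hello mini-git
```

Reading an object back this way is what lets a previous version be recovered from its hash alone, for example to diff it against the current file content.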

Detecting Changes

I implemented this algorithm based on my own approach; Git itself uses more efficient diff algorithms (its default is based on the Myers algorithm).

// Prints a simple line-based diff between two versions of a file.
// Assumes lines common to both versions keep their relative order.
function detectChanges(oldContent, newContent) {
    const oldLines = oldContent.split('\n');
    const newLines = newContent.split('\n');
    const n = oldLines.length;
    const m = newLines.length;

    // Map each old line to its index (for duplicate lines, the last index wins)
    const oldMap = new Map();
    for (let i = 0; i < n; i++) {
        oldMap.set(oldLines[i], i);
    }

    // Indexes of lines common to both versions, bracketed by sentinels:
    // -1 means "before the first line", n and m mean "after the last line"
    const oldcommon = [-1];
    const newcommon = [-1];
    for (let i = 0; i < m; i++) {
        if (oldMap.has(newLines[i])) {
            oldcommon.push(oldMap.get(newLines[i]));
            newcommon.push(i);
        }
    }
    oldcommon.push(n);
    newcommon.push(m);

    // Old lines that fall between two common lines were deleted
    console.log("--------------------------------------------------------------------");
    let start = -1;
    const olddiff = new Set();
    for (let i = 0; i < oldcommon.length; i++) {
        for (let j = start + 1; j <= oldcommon[i] - 1; j++) {
            olddiff.add(j);
        }
        start = oldcommon[i];
    }
    for (let i = 0; i < n; i++) {
        if (olddiff.has(i)) console.log(`-- ${oldLines[i]}`);
        else console.log(` ${oldLines[i]}`);
    }

    // New lines that fall between two common lines were added
    console.log("--------------------------------------------------------------------");
    start = -1;
    const newdiff = new Set();
    for (let i = 0; i < newcommon.length; i++) {
        for (let j = start + 1; j <= newcommon[i] - 1; j++) {
            newdiff.add(j);
        }
        start = newcommon[i];
    }
    for (let i = 0; i < m; i++) {
        if (newdiff.has(i)) console.log(`++ ${newLines[i]}`);
        else console.log(` ${newLines[i]}`);
    }
}

module.exports = detectChanges;

1. Extracted arrays of lines from oldContent and newContent.

2. Created a Map that stores each line as the key and its index as the value.

3. Created two new arrays to store the indexes of the lines common to oldContent and newContent.

4. Example: if the common indexes in the old file are [0, 3], then the deleted lines are [1, 2].
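The idea of reading changes off the gaps between common lines can be sketched in isolation. The helper name `gapIndexes` is hypothetical, and the sketch assumes the common indexes are sorted in ascending order.

```javascript
// Hypothetical helper: return every index in [0, length) that falls
// in a gap between consecutive common indexes (assumed ascending).
function gapIndexes(commonIndexes, length) {
  const gaps = [];
  let previous = -1; // sentinel: position just before the first line
  // Appending `length` as a closing sentinel covers the tail of the file
  for (const current of [...commonIndexes, length]) {
    for (let j = previous + 1; j < current; j++) {
      gaps.push(j);
    }
    previous = current;
  }
  return gaps;
}

console.log(gapIndexes([0, 3], 4)); // [ 1, 2 ]
console.log(gapIndexes([2], 5));    // [ 0, 1, 3, 4 ]
```

Applied to the old file's common indexes, the gaps are the deleted lines; applied to the new file's common indexes, they are the added lines.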

Thanks a lot for your time.
