Streamline S3 Deployments with AWS CodePipeline

Anant Vaid

Introduction

Quick Recap

Welcome to part four of our series on Building a Secure Static Website on Amazon S3. Previously, we covered hosting a static website on S3, creating a custom domain, managing its DNS, and securing the S3 bucket by only granting access to Cloudflare IPs. For better context, we recommend reading the earlier parts.

In this article, we will guide you on how to automate the deployment of the static website when changes are pushed to the Git repository.

Importance of streamlining S3 deployments

Streamlining S3 deployments improves efficiency by reducing manual intervention, which saves time and effort. Developers can focus on more critical tasks instead of repeating the same deployment steps. Automated deployments also follow the same process every time, reducing the risk of human error.

With streamlined deployments, updates and changes can be pushed to production faster. This is particularly important for agile development environments where quick iterations are necessary. Streamlined deployments often include automated rollback mechanisms, allowing for quick recovery in case of deployment failures. This minimizes downtime and ensures business continuity.

Purpose of the article

Take a close look at the architecture diagram. We have already implemented the part where the end user accesses the S3 static website through Cloudflare. A Lambda function, scheduled daily by EventBridge, keeps the bucket secure by updating its access rules so that only Cloudflare IPs are allowed (Refer here).

In this article, we will integrate GitHub with AWS CodePipeline. AWS CodePipeline creates a streamlined process for integrating code changes from GitHub directly to our S3 bucket, which hosts our static website.

Creating a CodePipeline for S3 Deployment

Key features and benefits

Key features and benefits of AWS CodePipeline include:

Continuous Integration and Continuous Delivery (CI/CD): Automates the build, test, and deploy phases of your release process every time there is a code change, ensuring faster and more reliable software delivery.

Scalability: Easily scales with your infrastructure and can handle multiple pipelines and complex workflows, making it suitable for projects of any size.

Integration with AWS Services: Seamlessly integrates with other AWS services like CodeBuild, CodeDeploy, Lambda, and S3, providing a cohesive environment for your deployment needs.

Automated Rollbacks: Supports automated rollback mechanisms, which help in quickly reverting to a previous stable state in case of deployment failures, minimizing downtime.

Monitoring and Logging: Offers comprehensive monitoring and logging capabilities through Amazon CloudWatch, enabling you to track the performance and health of your pipelines in real time.

Cost-Effective: Pay-as-you-go pricing model ensures you only pay for what you use, making it a cost-effective solution for managing your deployment processes.

Ease of Use: User-friendly interface and detailed documentation make it easy to set up and manage pipelines, even for users with minimal DevOps experience.

Creating CodePipeline

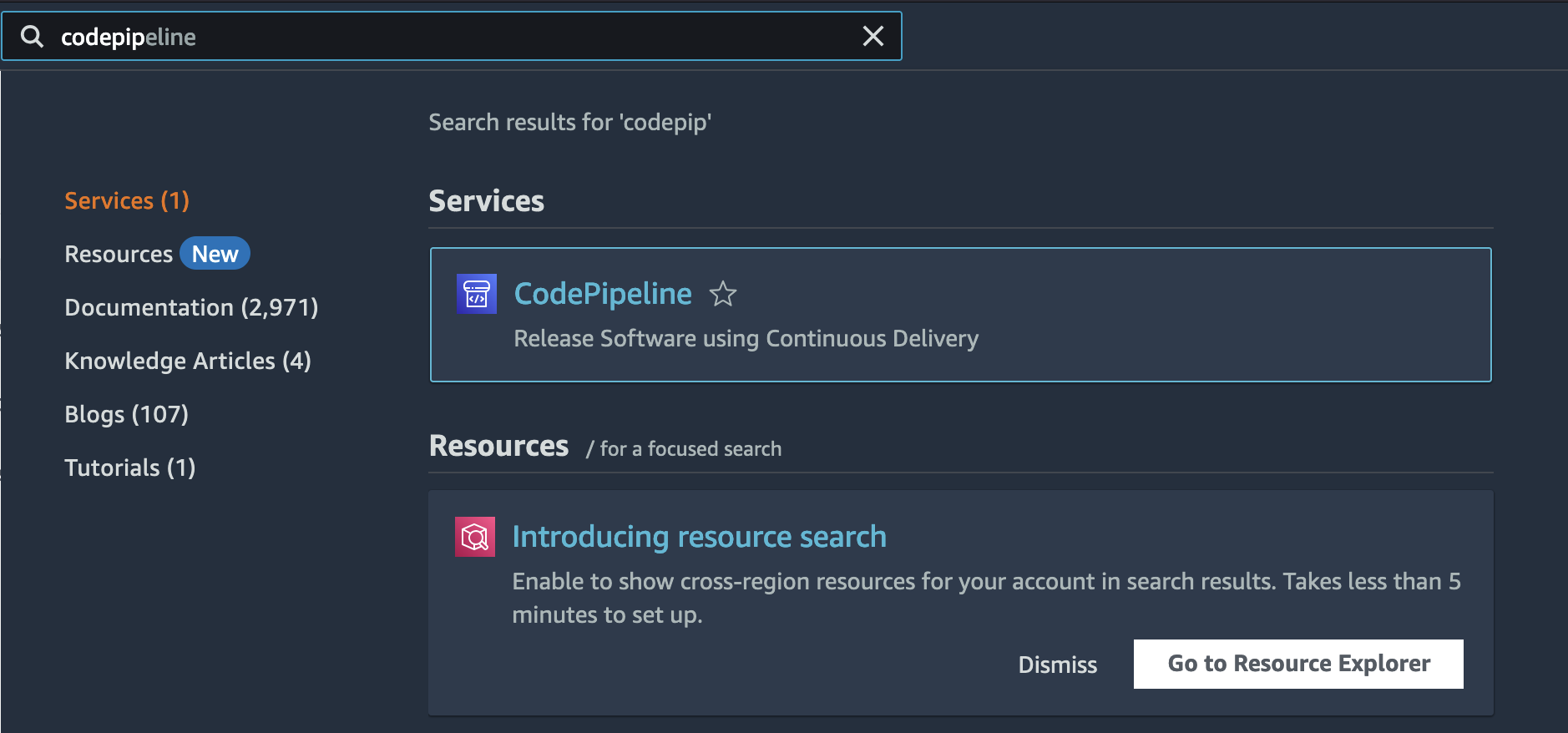

Search for CodePipeline in the AWS Management Console and click on CodePipeline.

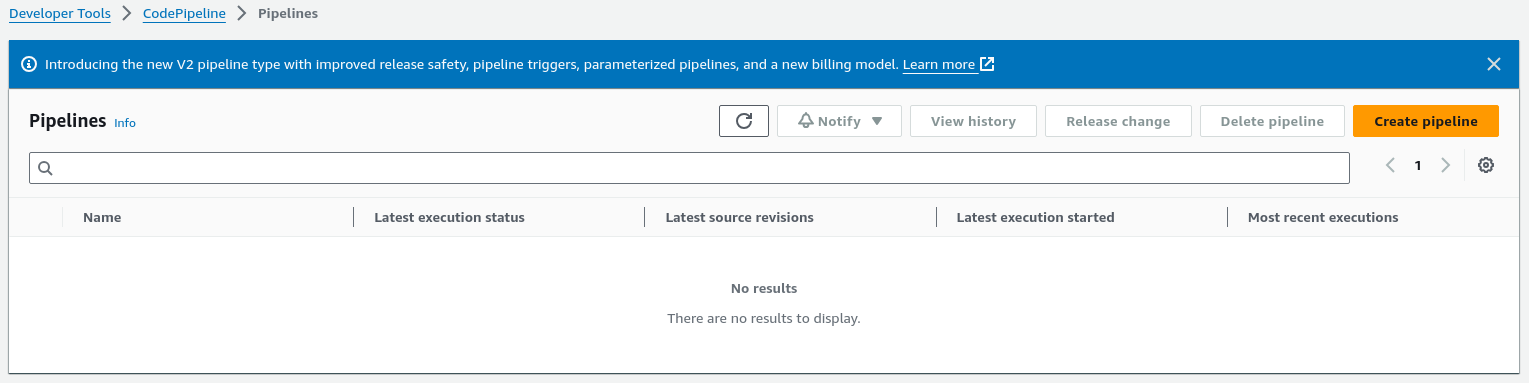

Click on Create pipeline.

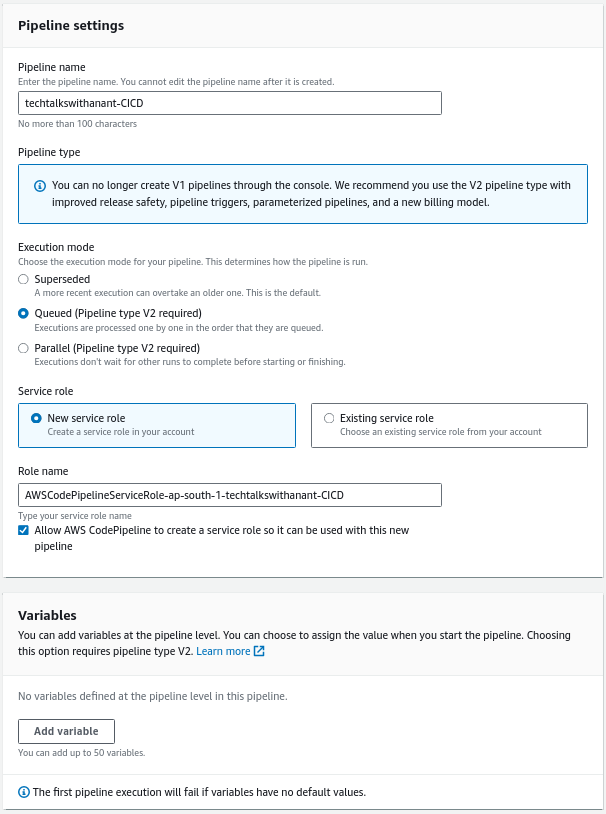

Enter a name for the pipeline, leave the rest as defaults, and then click Next.

Defining the source stage

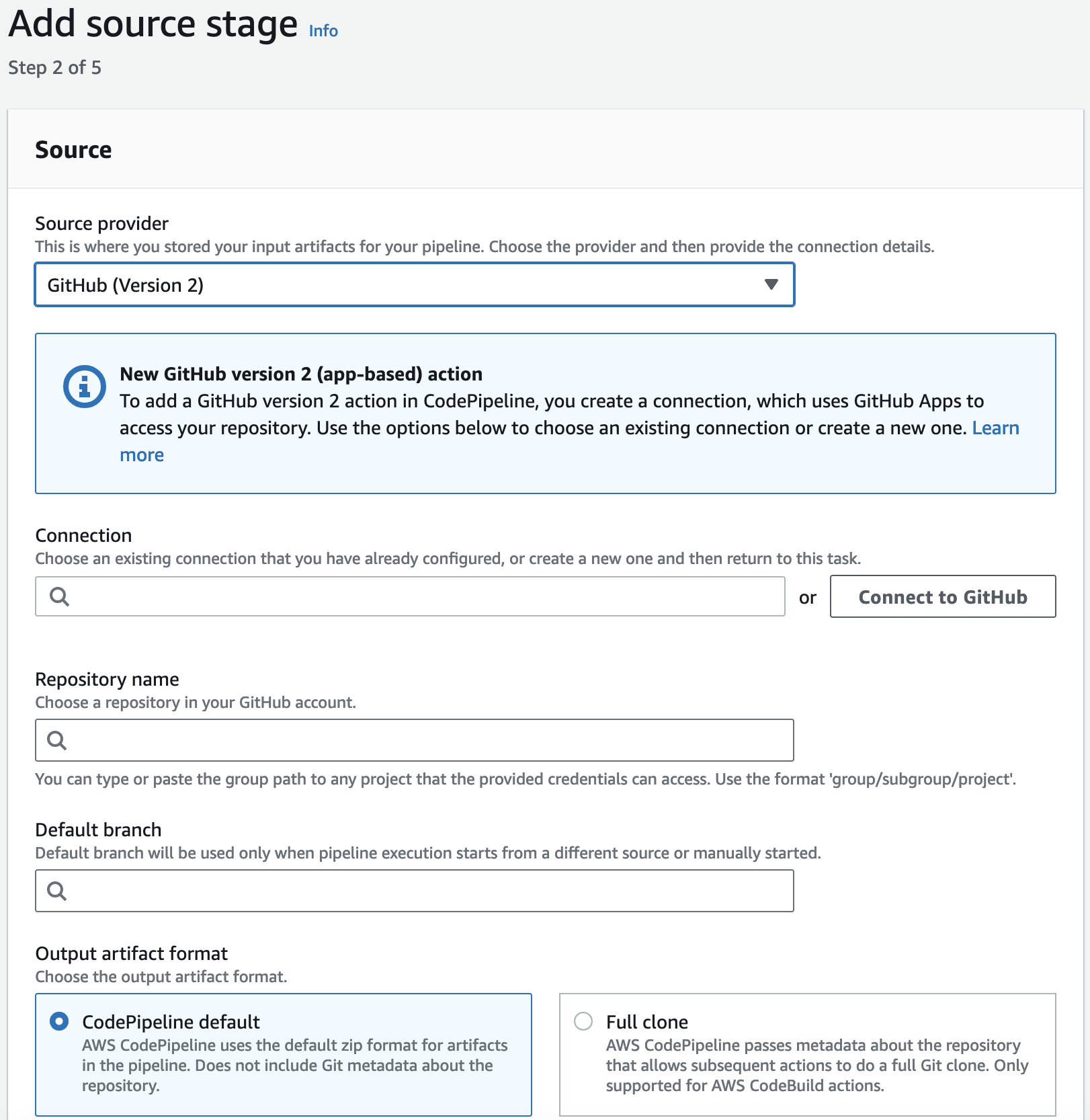

In the Add source stage step, choose GitHub (Version 2).

Note: AWS recommends using GitHub (Version 2) instead of GitHub (Version 1). Version 2 uses GitHub App-based authentication backed by a CodeStarSourceConnection resource in AWS, whereas Version 1 relies on an OAuth token.

Click on Connect to GitHub. Type in your GitHub credentials and log in to GitHub.

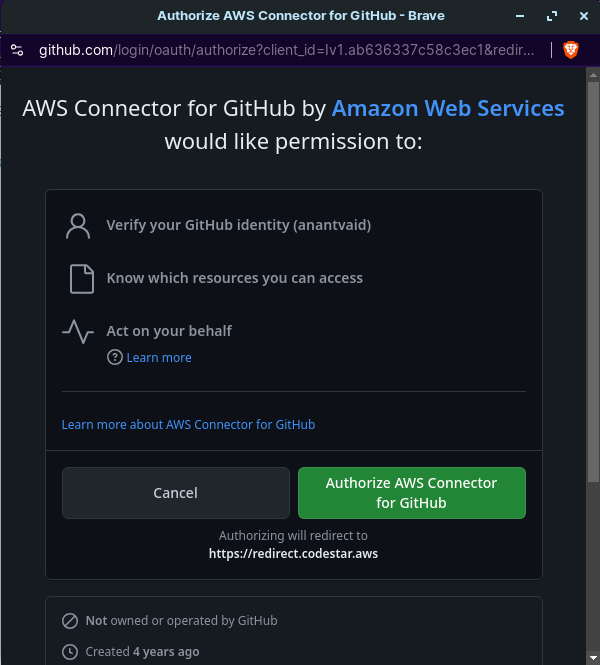

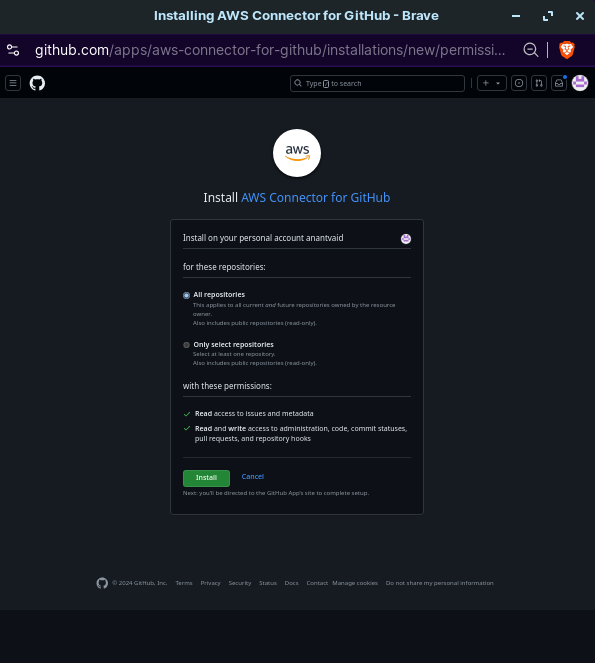

Click on Authorize AWS Connector for GitHub and then Install to install AWS Connector for GitHub.

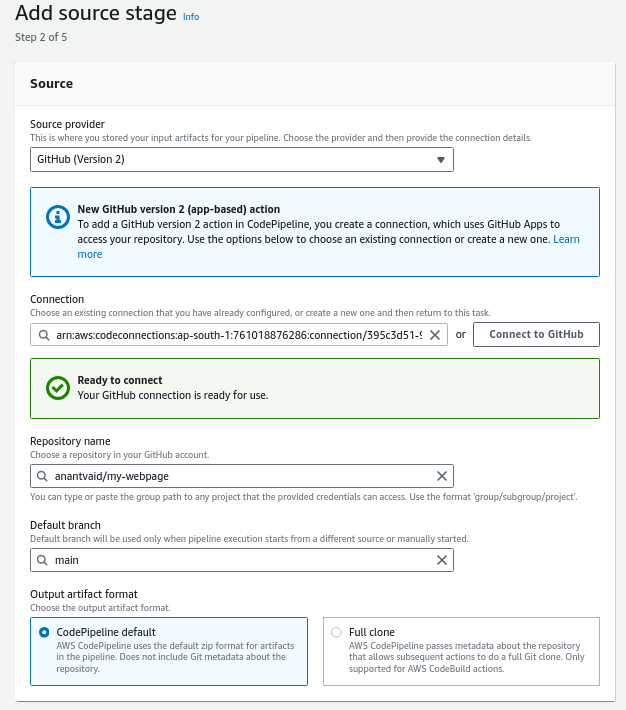

Select the repository you want to use from the dropdown menu. Make sure it is the repository that contains the files for your static website. The branch should be main, unless you commit your changes to a different branch. Leave the rest as defaults and click Next.
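For readers who prefer to see what the console is configuring, the source stage above can be sketched as a pipeline action definition. This is a minimal sketch, not the exact definition the console generates: the connection ARN and repository name are placeholders, and the helper name github_source_action is made up for this example. The action type (CodeStarSourceConnection) and the configuration keys are the ones CodePipeline uses for the GitHub (Version 2) source.

```python
import json
import re


def github_source_action(connection_arn: str, repo: str, branch: str = "main") -> dict:
    """Build a source action that reads from GitHub through a connection."""
    # FullRepositoryId is expected in "owner/repository" form.
    if not re.fullmatch(r"[^/\s]+/[^/\s]+", repo):
        raise ValueError(f"expected 'owner/repo', got {repo!r}")
    return {
        "name": "Source",
        "actionTypeId": {
            "category": "Source",
            "owner": "AWS",
            "provider": "CodeStarSourceConnection",
            "version": "1",
        },
        "configuration": {
            "ConnectionArn": connection_arn,
            "FullRepositoryId": repo,
            "BranchName": branch,
            # CODE_ZIP hands later stages a zipped copy of the repository.
            "OutputArtifactFormat": "CODE_ZIP",
        },
        "outputArtifacts": [{"name": "SourceArtifact"}],
        "runOrder": 1,
    }


# Placeholder values for illustration only; substitute your own.
action = github_source_action(
    "arn:aws:codeconnections:us-east-1:111122223333:connection/EXAMPLE",
    "example-user/static-website",
)
print(json.dumps(action, indent=2))
```

The small format check on the repository name catches a common mistake (passing only the repository name without the owner) before the definition ever reaches AWS.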

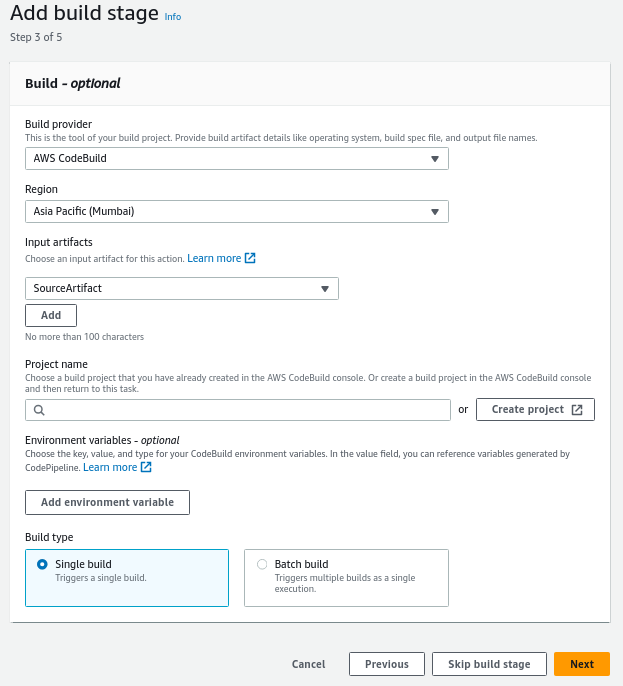

Next, click the Skip build stage button, since a static website has no build step and produces no build artifact.

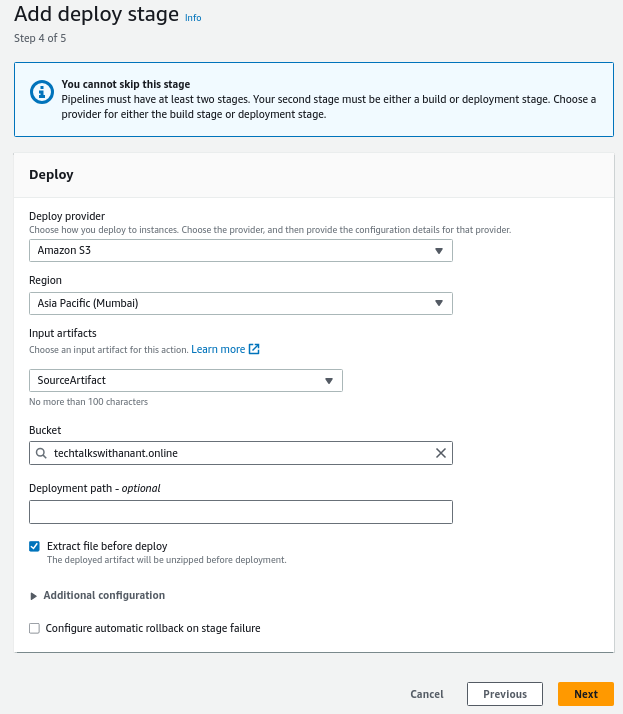

Configuring the deploy stage

In the deploy stage, select Amazon S3 as the Deploy provider. The Region must match the Region of the S3 bucket. Choose the S3 bucket from the dropdown menu. Check the Extract file before deploy checkbox so that the source artifact, which CodePipeline delivers as a ZIP file, is unzipped before the files are placed in the bucket.

Click the Next button.
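The deploy stage settings above map to a small action definition as well. The sketch below assumes a placeholder bucket name and a hypothetical helper called s3_deploy_action. It also encodes one rule of the S3 deploy action that is easy to miss: when extraction is turned off, an object key must be supplied, because the ZIP is then uploaded as a single object.

```python
import json
from typing import Optional


def s3_deploy_action(bucket: str, extract: bool = True,
                     object_key: Optional[str] = None) -> dict:
    """Build an S3 deploy action that consumes the source artifact."""
    # Without extraction the artifact is stored as one object, so it needs a key.
    if not extract and not object_key:
        raise ValueError("object_key is required when extract is False")
    # Configuration values are passed as strings, including the boolean flag.
    configuration = {
        "BucketName": bucket,
        "Extract": "true" if extract else "false",
    }
    if object_key:
        configuration["ObjectKey"] = object_key
    return {
        "name": "Deploy",
        "actionTypeId": {
            "category": "Deploy",
            "owner": "AWS",
            "provider": "S3",
            "version": "1",
        },
        "configuration": configuration,
        # Must match the output artifact name of the source action.
        "inputArtifacts": [{"name": "SourceArtifact"}],
        "runOrder": 1,
    }


# Placeholder bucket name for illustration only.
action = s3_deploy_action("example-static-site-bucket")
print(json.dumps(action["configuration"], indent=2))
```

With extraction enabled, the files keep the directory layout they have in the repository, which is why index.html should sit at the repository root for a static website.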

Friendly User Interface

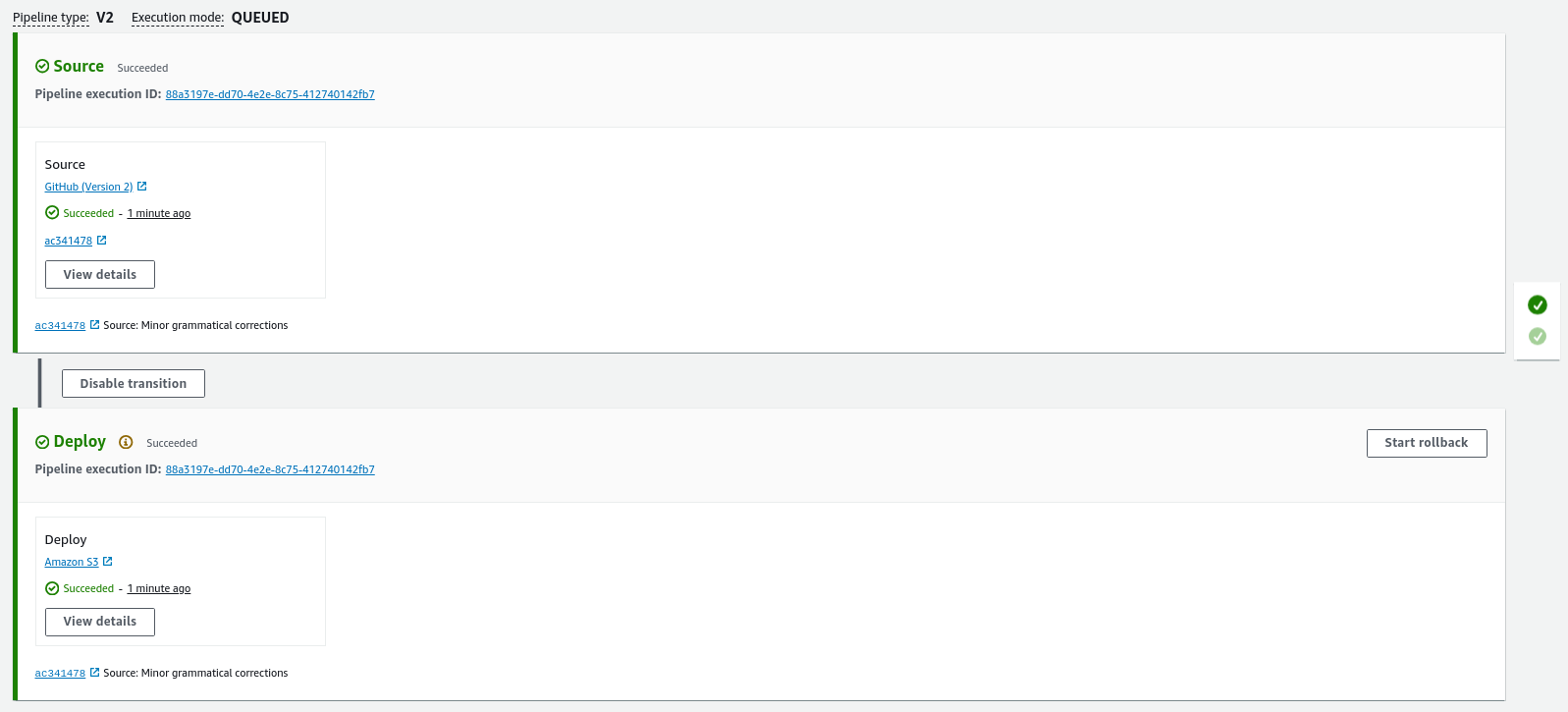

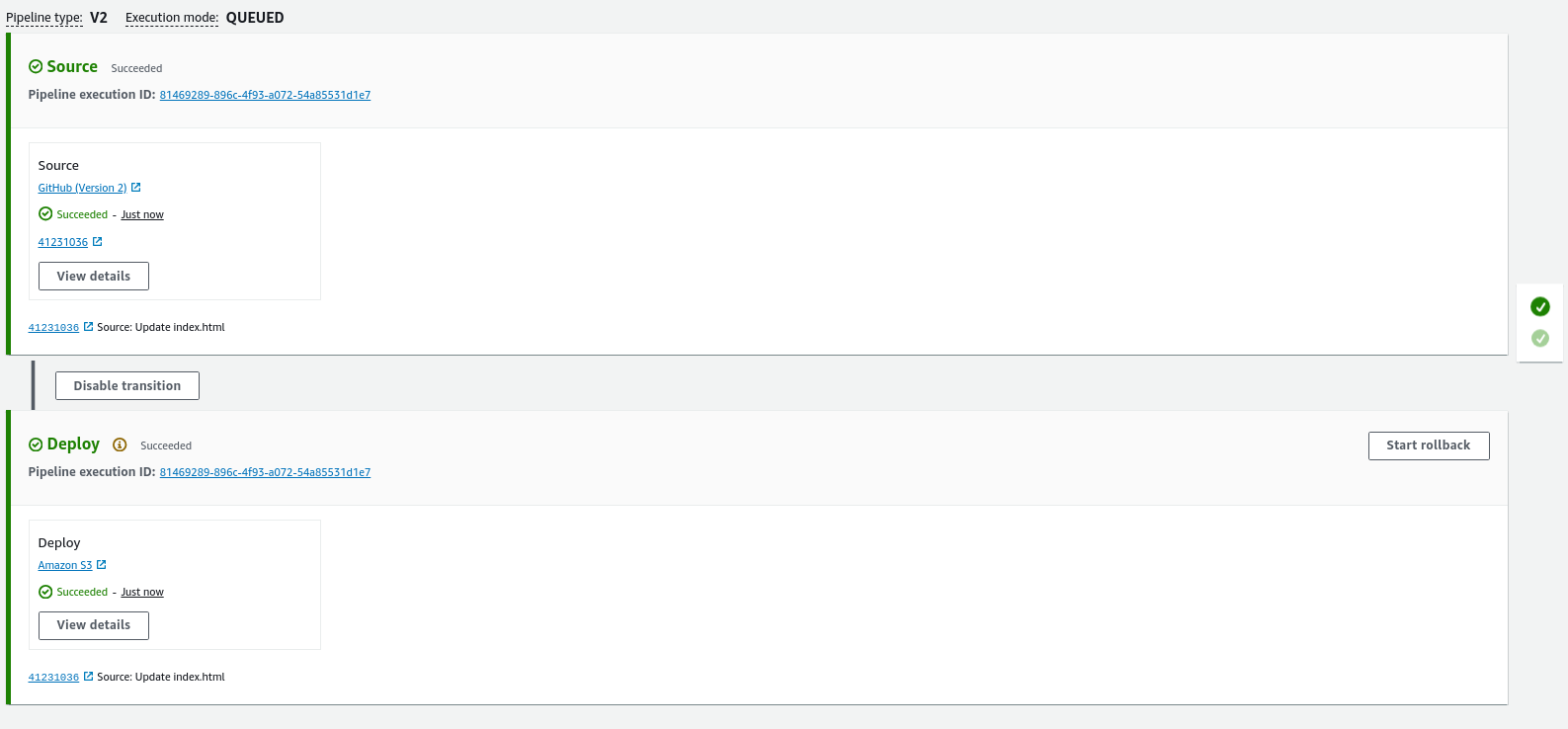

Once the pipeline is created, its first run starts automatically. In the source stage, the pipeline pulls the code from the specified repository. In the deploy stage, it deploys that code to the S3 bucket that is already set up to host the static website.

Event-based triggers

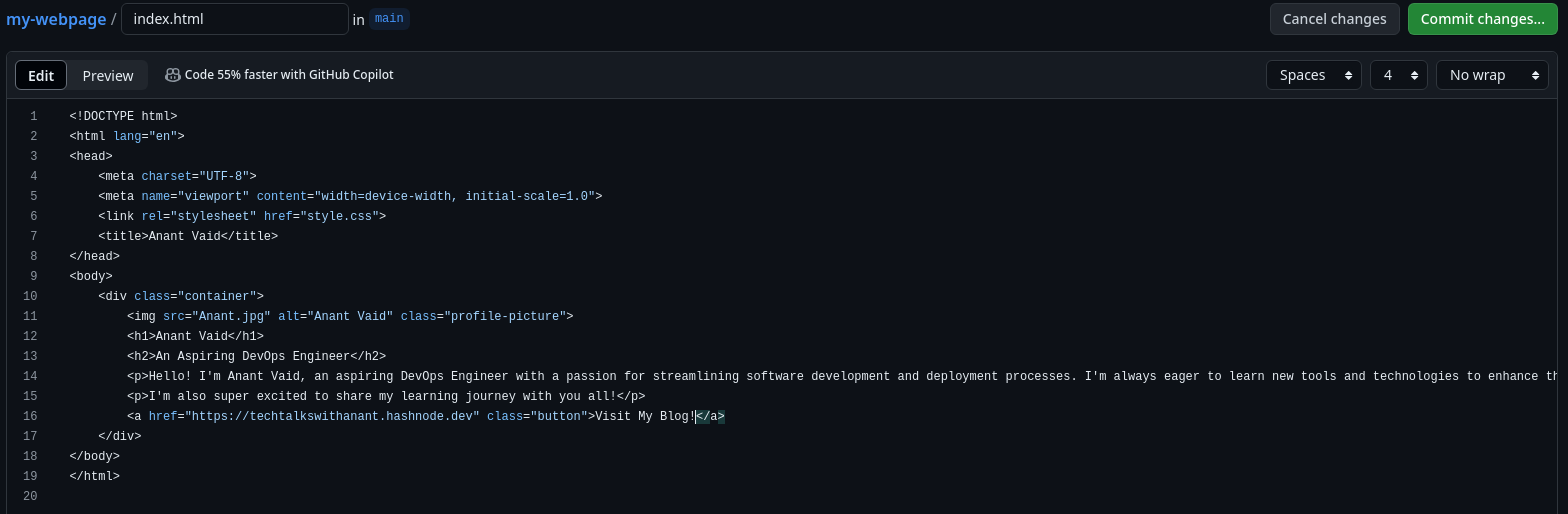

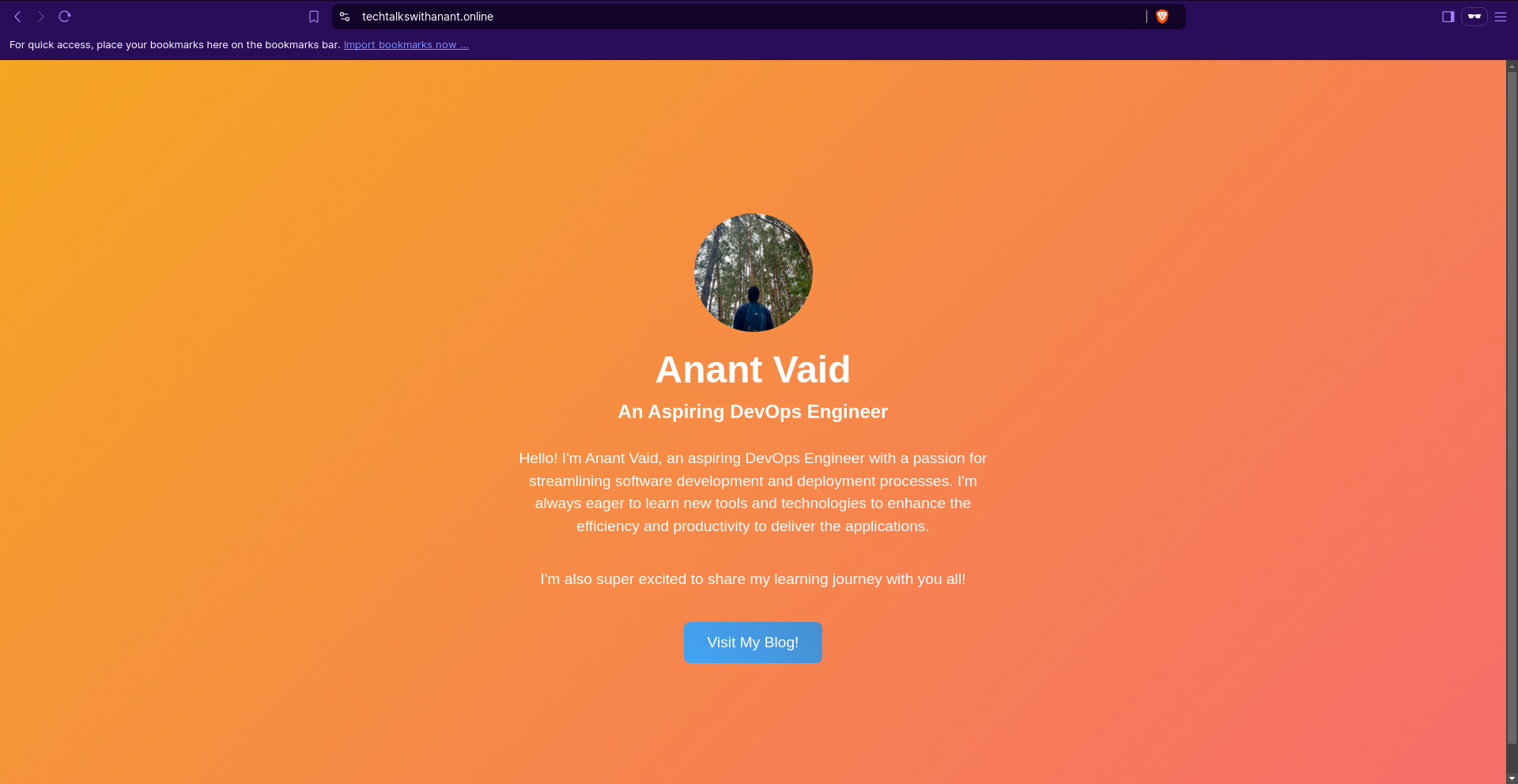

Let's trigger a pipeline run by pushing a change to our code repository. We'll make a small change in the code: the button text "Visit My Blog" becomes "Visit My Blog!". First, let's confirm that the exclamation mark appears in the code repository.

Once the change is pushed to the GitHub repository, the push automatically starts a new pipeline execution, which checks out the updated files and deploys them to the S3 bucket.

Notice that each stage shows Source: Update index.html, which is the message of the commit that triggered this run. This means the new code has already been pulled and is being deployed to the S3 bucket.

Verifying the changes

Let's head on to our URL to see if our changes were deployed.

Fantastic! We can now see an exclamation mark on the button. We successfully automated the process of deploying changes made to our GitHub repository into the S3 bucket.
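The manual check above can also be scripted. The following is a rough sketch using only the Python standard library: it extracts the visible text from a page and looks for the expected button label. The function names and the sample HTML are invented for this example, and the real URL would be your own domain. Keep in mind that a caching layer in front of the bucket may delay when the new text becomes visible.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TextCollector(HTMLParser):
    """Collect the text nodes of an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks = []

    def handle_data(self, data: str) -> None:
        self.chunks.append(data)


def page_text(html: str) -> str:
    """Return the page's text content with whitespace collapsed."""
    parser = TextCollector()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())


def site_shows(url: str, expected: str, timeout: float = 10.0) -> bool:
    """Fetch the page at url and report whether the expected text appears."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return expected in page_text(html)


# Offline demonstration with a hand-written snippet (not the real page).
sample = '<html><body><a class="button" href="#">Visit My Blog!</a></body></html>'
print("Visit My Blog!" in page_text(sample))  # prints True
```

Against the live site, the call would look like site_shows("https://your-domain.example", "Visit My Blog!"), with the placeholder replaced by your own URL.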

Troubleshooting Common Issues

Debugging pipeline failures

When a pipeline fails, the first step is to identify the stage where the failure occurred. AWS CodePipeline provides detailed logs for each stage. Common reasons for pipeline failures include:

Source Stage Issues: Check if the connection to the GitHub repository is properly configured and if the repository and branch names are correct.

Permissions: Ensure that the IAM roles associated with CodePipeline have the necessary permissions to access the resources involved in the pipeline.

Configuration Errors: Verify that the pipeline configuration, such as the S3 bucket name and region, is correct.

Working Directory Issues: Ensure that the code files are in the root directory of the repository, as the directory structure is pulled directly from the GitHub repository.
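Finding the failing stage can be automated too. The sketch below walks a pipeline state document and lists every failed action with its error message. The dictionary shape follows the response of the CodePipeline get_pipeline_state API as I understand it; the sample state and its error message are hand-written for illustration and are not real output, and the function name failed_actions is hypothetical.

```python
def failed_actions(state: dict) -> list:
    """Return (stage, action, message) for every action whose last run failed."""
    failures = []
    for stage in state.get("stageStates", []):
        for action in stage.get("actionStates", []):
            execution = action.get("latestExecution", {})
            if execution.get("status") != "Failed":
                continue
            details = execution.get("errorDetails", {})
            # Prefer the error message; fall back to the execution summary.
            message = details.get("message") or execution.get("summary", "")
            failures.append((stage["stageName"], action["actionName"], message))
    return failures


# Hand-written sample state, NOT real output. With boto3 installed and
# credentials configured, the real state could be fetched with:
#   import boto3
#   state = boto3.client("codepipeline").get_pipeline_state(name="my-pipeline")
sample_state = {
    "pipelineName": "my-pipeline",
    "stageStates": [
        {
            "stageName": "Source",
            "actionStates": [
                {"actionName": "Source", "latestExecution": {"status": "Succeeded"}}
            ],
        },
        {
            "stageName": "Deploy",
            "actionStates": [
                {
                    "actionName": "Deploy",
                    "latestExecution": {
                        "status": "Failed",
                        "errorDetails": {
                            "code": "PermissionError",
                            "message": "Access Denied (illustrative message)",
                        },
                    },
                }
            ],
        },
    ],
}

for stage_name, action_name, message in failed_actions(sample_state):
    print(f"{stage_name}/{action_name}: {message}")
```

A failure in the Source stage usually points to the connection or the repository and branch names, while a failure in the Deploy stage usually points to permissions or the bucket configuration.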

Conclusion

Summary of key points

This article, part of a series on building a secure static website on Amazon S3, focuses on automating the deployment process using AWS CodePipeline. It covers the importance of streamlining S3 deployments for efficiency, steps to create a CodePipeline integrated with GitHub, and how to verify and troubleshoot the pipeline. Key benefits of AWS CodePipeline include continuous integration and delivery, scalability, integration with other AWS services, automated rollbacks, monitoring and logging, cost-effectiveness, and ease of use.

Encouragement to use AWS Solutions

By using AWS solutions for hosting your entire static website, you benefit from an integrated, scalable, and secure platform that can grow with your needs. AWS's comprehensive service offerings eliminate the need for multiple vendors, simplifying management and ensuring everything works seamlessly together.

I encourage you to consider the following AWS services and integrate them into your project to create a complete in-house AWS solution (Reference):

Amazon Route 53:

Amazon Route 53 is not only a highly reliable DNS service but also offers domain registration. By registering your domain directly with Route 53, you can manage your domain names and DNS settings within the same platform, simplifying the overall management process.

Amazon CloudFront:

Amazon CloudFront is a Content Delivery Network (CDN) that securely delivers your website content with low latency and high transfer speeds, using a global network of edge locations.

AWS WAF (Web Application Firewall) and AWS Shield:

AWS WAF provides protection against common web exploits and attacks, including SQL injection and cross-site scripting (XSS).

AWS Shield offers DDoS protection, particularly important for websites that require high availability.

AWS Certificate Manager (ACM):

AWS Certificate Manager simplifies the process of provisioning, managing, and deploying SSL/TLS certificates for your website hosted on S3 and CloudFront.

Conclusion of the Series

This brings us to the end of our series: Building a Secure Static Website on Amazon S3. We learned how to build a static website on Amazon S3 with a custom domain, secure it, and automate the entire deployment process with ease.

Your comments and feedback would be really helpful for creating more projects, blogs, and series. Please share this series with anyone looking to learn AWS, DevOps, and Cloud.

Written by

Anant Vaid

An Aspiring DevOps Engineer passionate about automation, CI/CD, and cloud technologies. On a journey to simplify and optimize development workflows.