Searching text content using Linux commands

Asfaq Leeon

Sometimes we need to search for text in log files or command output. For example, you may need to look for errors in a production server's log files. These files are often huge, and text editors struggle to open them even on a machine with a standard configuration.

The most popular, and also one of the most powerful, Linux commands for searching text is grep. Let's see how we can use it.

Preparing files

I asked ChatGPT to generate a sample log, then created a file named test.log and pasted the content into it. Here is the generated log:

2024-08-20 12:00:01 INFO Starting the application

2024-08-20 12:05:45 WARN Low disk space on /dev/sda1

2024-08-20 12:10:23 ERROR Unable to connect to database

2024-08-20 12:15:11 INFO User login successful: user123

2024-08-20 12:20:33 INFO Data synchronization completed

2024-08-20 12:25:47 WARN High memory usage detected

2024-08-20 12:30:05 ERROR File not found: /path/to/file.txt

2024-08-20 12:35:22 INFO Scheduled task executed

2024-08-20 12:40:55 WARN CPU usage high

2024-08-20 12:45:12 ERROR Disk write failure
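If you'd like to follow along without ChatGPT, you can recreate test.log from the lines above with a shell here-document. This is only a convenience sketch; pasting the lines into a text editor works just as well.

```shell
# Recreate the sample test.log (everything up to EOF is written to the file)
cat > test.log <<'EOF'
2024-08-20 12:00:01 INFO Starting the application
2024-08-20 12:05:45 WARN Low disk space on /dev/sda1
2024-08-20 12:10:23 ERROR Unable to connect to database
2024-08-20 12:15:11 INFO User login successful: user123
2024-08-20 12:20:33 INFO Data synchronization completed
2024-08-20 12:25:47 WARN High memory usage detected
2024-08-20 12:30:05 ERROR File not found: /path/to/file.txt
2024-08-20 12:35:22 INFO Scheduled task executed
2024-08-20 12:40:55 WARN CPU usage high
2024-08-20 12:45:12 ERROR Disk write failure
EOF

# Quick sanity check: the file should contain 10 lines
wc -l test.log
```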

Syntax of grep

grep [options] pattern [file...]

You can pass a single file, several file names, or a shell glob such as *.txt. A glob is not a regex: the shell expands it into the matching file names before grep runs.

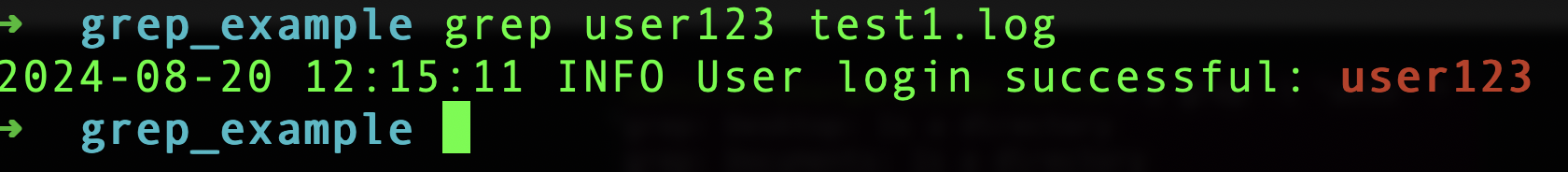
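To make the file arguments concrete, here is a small sketch that runs in a throwaway directory created with mktemp -d. The file names a.log, b.log, and notes.txt are hypothetical and exist only for this example.

```shell
# Work in a throwaway directory so existing files are not touched
cd "$(mktemp -d)"
printf 'INFO start\nERROR disk full\n' > a.log
printf 'ERROR db down\n' > b.log
printf 'ERROR typo in notes\n' > notes.txt

# One file: matching lines are printed as-is
grep "ERROR" a.log

# Several files: each matching line is prefixed with its file name
grep "ERROR" a.log b.log

# Glob: the shell expands *.log to "a.log b.log" before grep starts,
# so this behaves like the previous command and skips notes.txt
grep "ERROR" *.log
```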

Let's find the text user123 in the file test.log.

The command is simple: the pattern is user123 and the only file is test.log.

grep user123 test.log

The output is:

2024-08-20 12:15:11 INFO User login successful: user123

Notice that the matched text is highlighted in red and the whole line containing it is printed.

Search in multiple files

For this part, I've created four more log files:

webserver_access.log

security.log

system.log

app_log.txt

Each contains ChatGPT-generated content. Note that one file has the .txt extension while the others have .log.

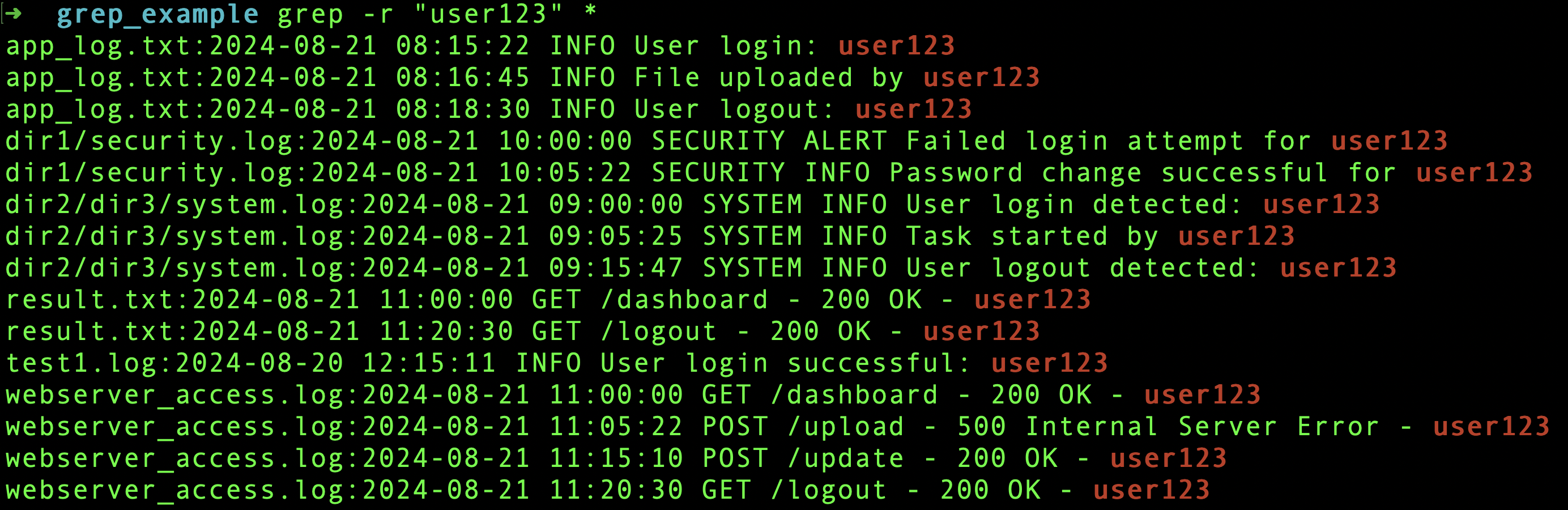

Now let's search for the term user123 in all of them.

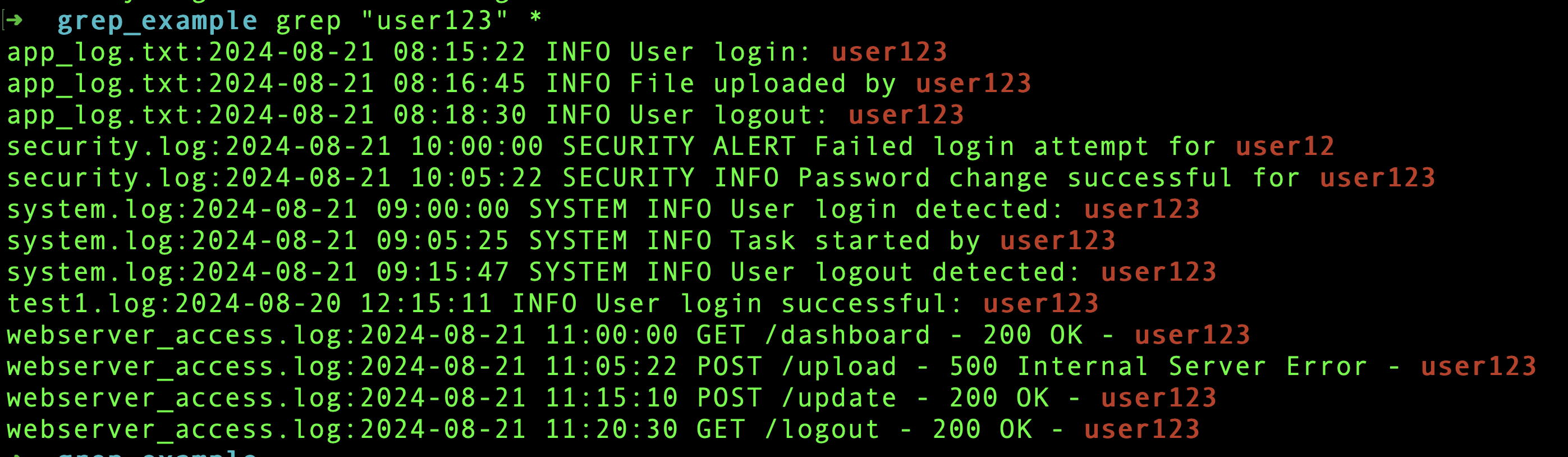

grep "user123" *

Here * is a shell glob (not a regex) that expands to every file in the current directory. The output is:

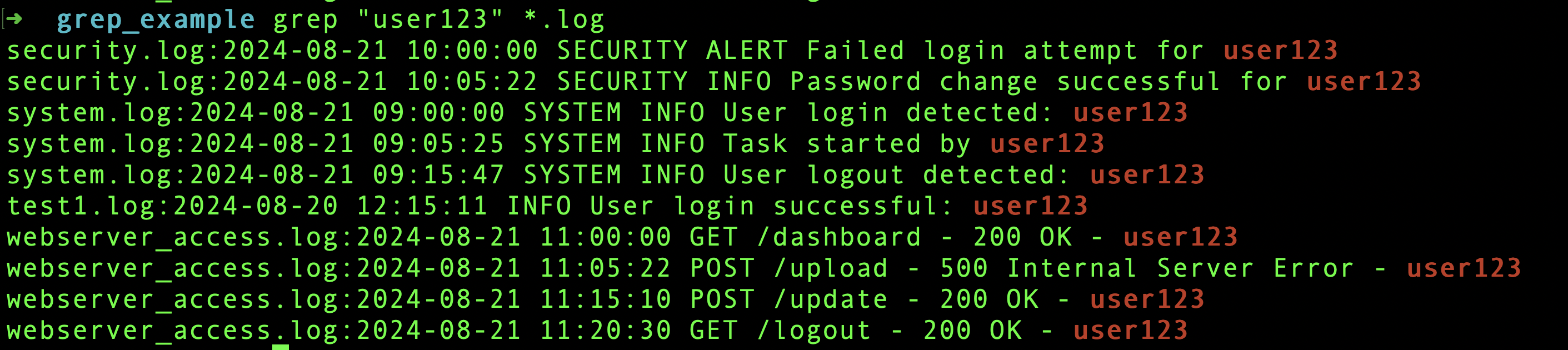

Now let's narrow down the files. To search only the .log files and skip the .txt file, use the *.log glob:

grep "user123" *.log

Subdirectories

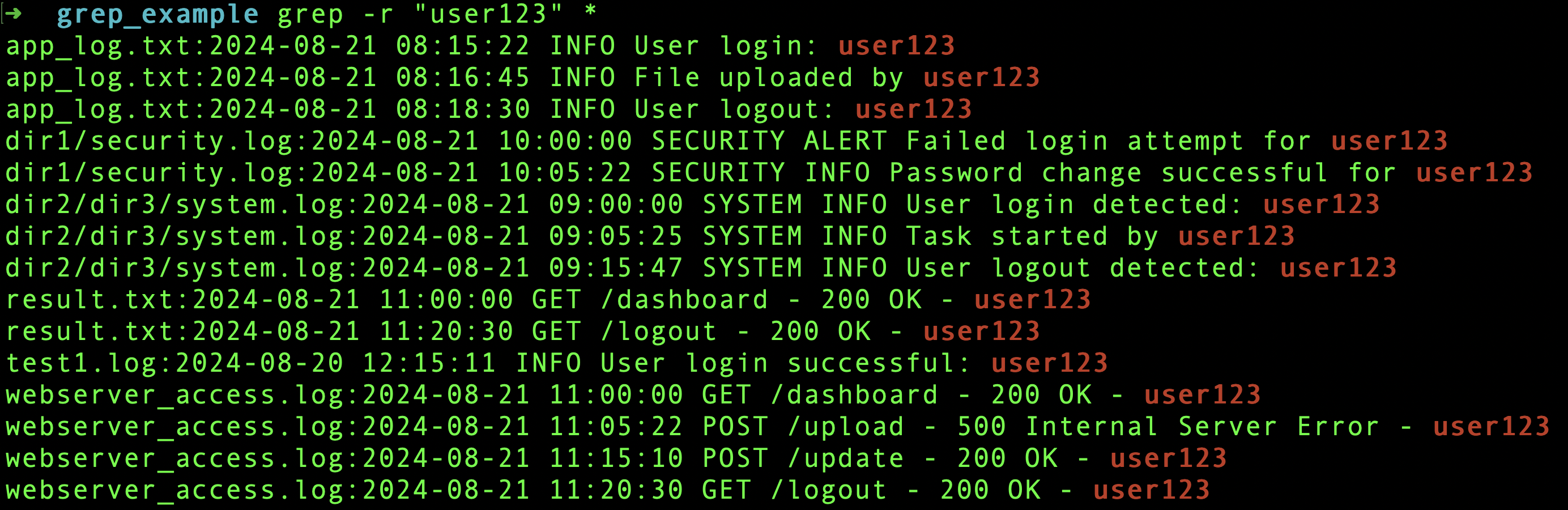

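What if there are subdirectories with log files inside them? Then we need the -r (recursive) option. I've moved some of the log files into subdirectories such as dir1 and dir2/dir3. Keep in mind that the shell expands *.log in the current directory only, so to search every .log file in the tree, let grep do the recursion and filter the file names with --include:

grep -r --include="*.log" "user123" .

In the output, each match is prefixed with its file path, so you can see matches from dir1, dir2/dir3, and so on.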
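Here is a self-contained sketch of why the glob alone is not enough with -r. It builds a throwaway tree whose files (top.log, dir1/security.log, dir2/dir3/system.log, dir1/app_log.txt) are hypothetical stand-ins for the layout above, and it relies on GNU grep's --include option.

```shell
# Build a small tree in a throwaway directory
cd "$(mktemp -d)"
mkdir -p dir1 dir2/dir3
printf 'login user123\n'  > top.log
printf 'logout user123\n' > dir1/security.log
printf 'sync user123\n'   > dir2/dir3/system.log
printf 'note user123\n'   > dir1/app_log.txt

# The shell expands *.log in the current directory only, so this
# searches top.log and never descends into dir1 or dir2
grep -r "user123" *.log

# Let grep walk the tree and keep only files whose names match *.log;
# the match in dir1/app_log.txt is skipped
grep -r --include="*.log" "user123" .
```

The order in which grep visits the subdirectories is not guaranteed, so pipe the output through sort if you need a stable listing.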

Ignoring case

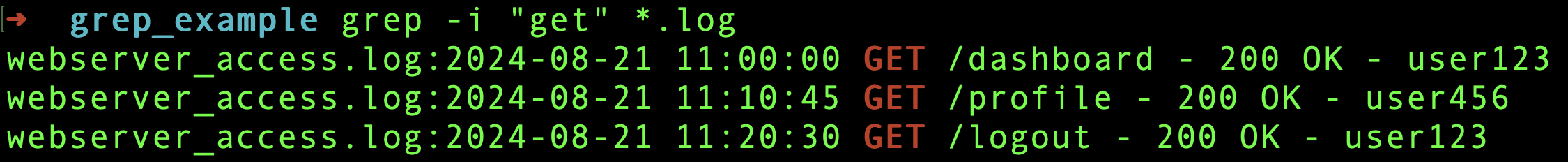

In webserver_access.log, I've put some REST API request/response logs in which the HTTP verbs are uppercase (for example, GET). To match regardless of case, use the -i option:

grep -i "get" *.log

The output is

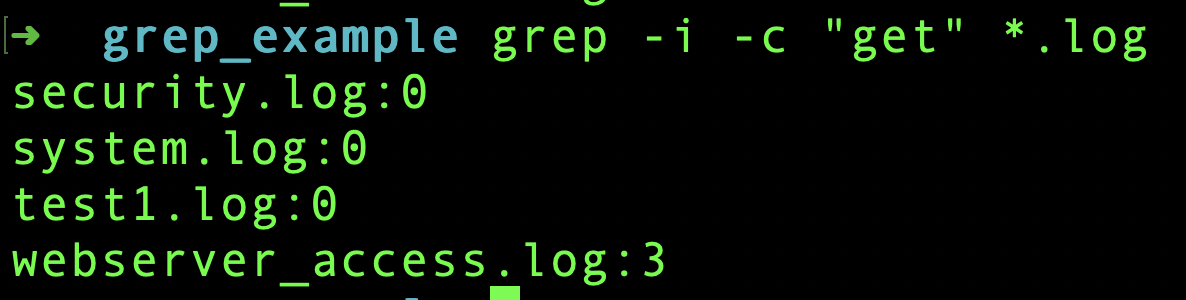
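The effect of -i is easy to see on a few lines piped in with printf. The log lines below are hypothetical and only mimic the access-log format.

```shell
# Three hypothetical access-log lines with mixed-case verbs
logs='GET /users 200
get /health 200
POST /login 401'

# Case-sensitive (default): only the lowercase "get" line matches
printf '%s\n' "$logs" | grep "get"

# Case-insensitive: both the "GET" and the "get" lines match
printf '%s\n' "$logs" | grep -i "get"
```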

Total count

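If we only want the number of matching lines, use the -c option. Note that -c counts matching lines per file, not individual occurrences, so a line that contains the pattern twice is counted once: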

grep -i -c "get" *.log

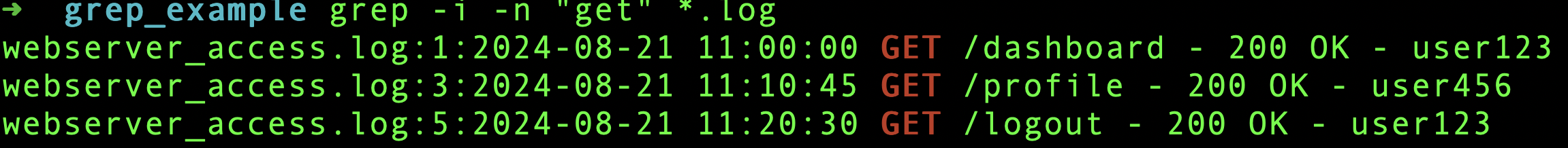
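To count every occurrence instead of every matching line, a common approach is to combine -o (print each match on its own line) with wc -l. The sketch uses a few hypothetical lines piped in with printf so it runs anywhere.

```shell
# Hypothetical lines: the first one contains "GET" twice
logs='GET /a -> redirected, GET /b
POST /login
get /health'

# -c counts matching LINES: 2 here
printf '%s\n' "$logs" | grep -ic "get"

# -o prints each match on its own line, so wc -l counts OCCURRENCES: 3 here
printf '%s\n' "$logs" | grep -io "get" | wc -l
```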

Line numbers

To see the line number of each match, use the -n option:

grep -i -n "get" *.log

Here, you can see the line numbers (1, 3, 5) right after the file name.

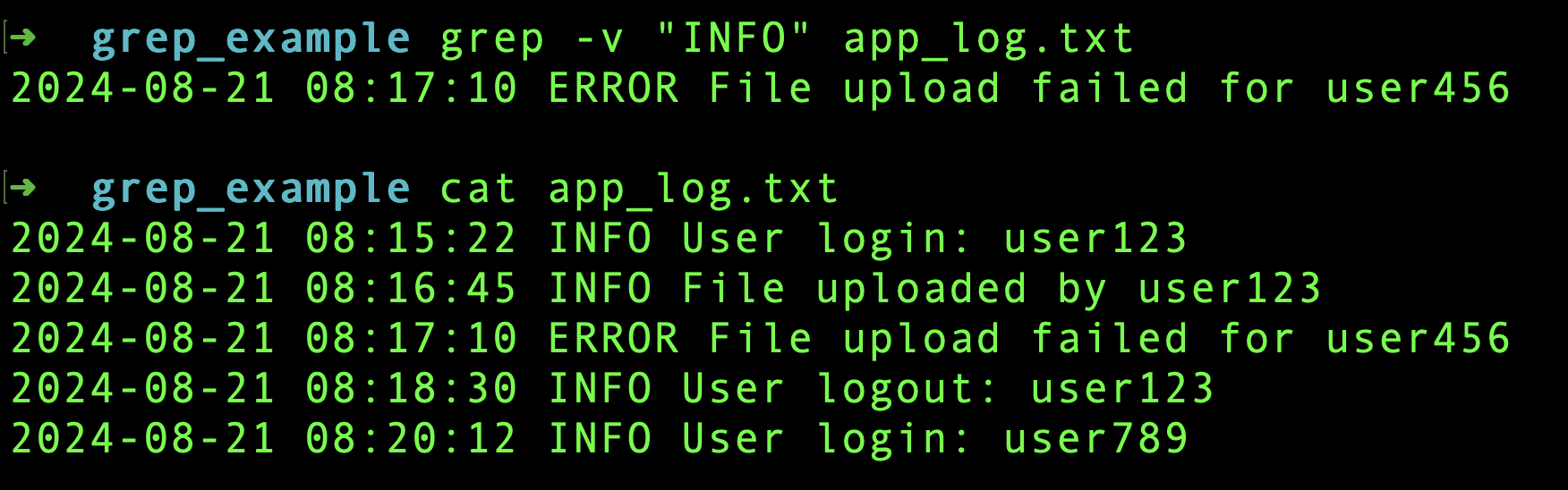
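Short options can also be combined, so -in means the same as -i -n. The sketch below feeds hypothetical lines through stdin; since there is no file name in that case, each match is prefixed only with its line number.

```shell
# Hypothetical access-log lines
logs='GET /users 200
POST /login 401
get /health 200'

# -i ignores case, -n adds "<line number>:" in front of each match
printf '%s\n' "$logs" | grep -in "get"
```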

Invert search

An inverted search prints only the lines that do not match the pattern. For this, use the -v option.

Here, I've asked grep to print every line that doesn't contain 'INFO', so none of the printed lines contain the term 'INFO'.

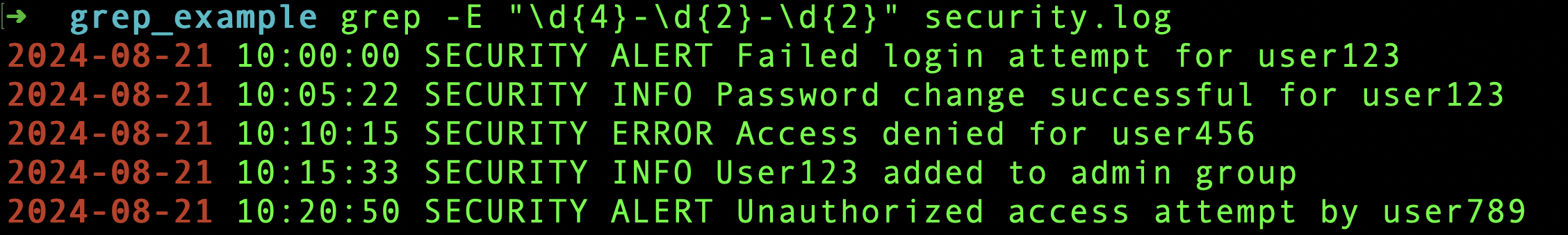
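As a sketch of -v on data you can reproduce, here are the first four lines of test.log piped in with printf. The second command shows that -v combines with other options such as -c.

```shell
# First four lines of the sample test.log
logs='2024-08-20 12:00:01 INFO Starting the application
2024-08-20 12:05:45 WARN Low disk space on /dev/sda1
2024-08-20 12:10:23 ERROR Unable to connect to database
2024-08-20 12:15:11 INFO User login successful: user123'

# Print only the lines that do NOT contain INFO (the WARN and ERROR lines)
printf '%s\n' "$logs" | grep -v "INFO"

# Count the non-INFO lines instead of printing them: 2 here
printf '%s\n' "$logs" | grep -vc "INFO"
```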

Advanced regex

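If we need a more complex regex, there are two options: -E for extended regular expressions and -P for Perl-compatible regular expressions (PCRE). For example, to find lines that contain a date such as 2024-08-20, we can use quantifiers like {4}. Note that \d (digit) is PCRE syntax, so with -E we use the bracket expression [0-9] instead.

grep -E "[0-9]{4}-[0-9]{2}-[0-9]{2}" security.log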

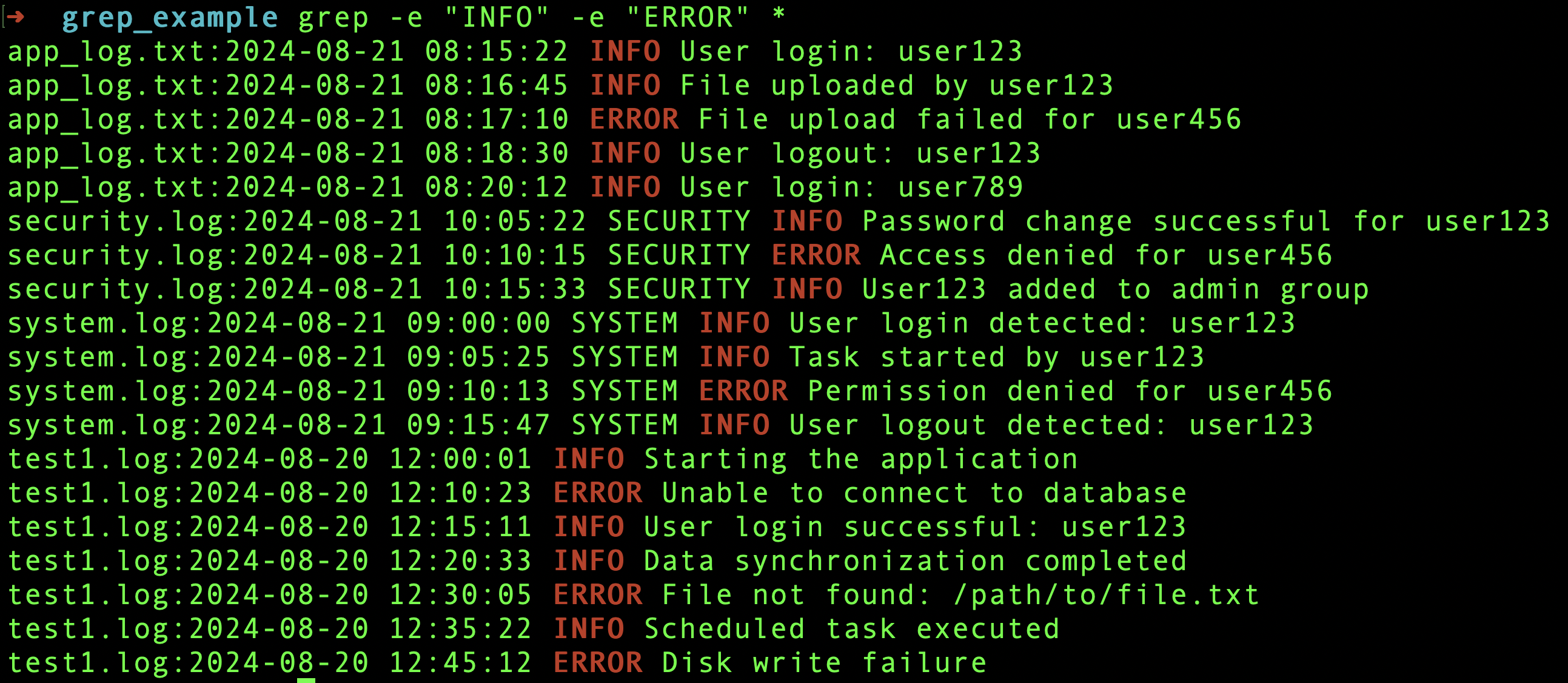
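The sketch below runs both flavours on a single line taken from test.log and adds -o, which prints only the matched text; that is what actually extracts the date-time instead of printing the whole line. The -P example assumes GNU grep built with PCRE support, which is common on Linux distributions but not guaranteed everywhere.

```shell
line='2024-08-20 12:10:23 ERROR Unable to connect to database'

# -E (extended regex): [0-9] matches a digit; the whole line is printed
echo "$line" | grep -E "[0-9]{4}-[0-9]{2}-[0-9]{2}"

# -o prints only the matched part: 2024-08-20
echo "$line" | grep -oE "[0-9]{4}-[0-9]{2}-[0-9]{2}"

# -P (Perl-compatible regex) understands \d: 2024-08-20 12:10:23
echo "$line" | grep -oP '\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}'
```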

Multiple keywords

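To search for several patterns at once, pass each one with the -e option. A line is printed if it matches any of the patterns: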

grep -e "INFO" -e "ERROR" *
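The same search can also be written as a single extended-regex alternation with -E and |. The sketch compares both forms on three hypothetical lines in the style of test.log.

```shell
logs='INFO Starting the application
WARN CPU usage high
ERROR Disk write failure'

# Each -e adds one pattern; a line is printed if it matches any of them
printf '%s\n' "$logs" | grep -e "INFO" -e "ERROR"

# Equivalent search using an extended-regex alternation
printf '%s\n' "$logs" | grep -E "INFO|ERROR"
```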

Piping

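We can send the output of another command to grep using a pipe (|). For example, to find the title field in a JSON API response (the -s flag hides curl's progress meter):

curl -s https://jsonplaceholder.typicode.com/todos/1 | grep "title"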
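Pipes also chain, so grep can filter the output of another grep and pass the result on again. This sketch uses hypothetical access-log lines instead of a network call so it runs offline.

```shell
logs='GET /users 200
GET /orders 500
POST /login 401
GET /health 200'

# Each command's output becomes the next one's input:
# keep GET requests, drop the ones that ended in 200, then count what is left (1 line here)
printf '%s\n' "$logs" | grep "GET" | grep -v " 200$" | wc -l
```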

Redirection

Using the redirection operator (>), we can write the search output to a file instead of the terminal:

grep "GET" webserver_access.log > result.txt
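Keep in mind that > overwrites the target file, while >> appends to it. Here is a sketch in a throwaway directory with a small, hypothetical webserver_access.log.

```shell
# Work in a throwaway directory with a hypothetical access log
cd "$(mktemp -d)"
printf 'GET /users\nPOST /login\nGET /health\n' > webserver_access.log

# > creates result.txt, or overwrites it if it already exists
grep "GET" webserver_access.log > result.txt

# >> appends to the end of the file instead of overwriting it
grep "POST" webserver_access.log >> result.txt

cat result.txt
```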

Conclusion

That's all for today. I hope you've learned something from this article. If you liked it, give it a thumbs up. Happy coding!
