6. Multi-Stage Dockerfile Practice

Amrit Poudel

Table of contents

- Optimizing a Simple HTML and CSS Website

- Dockerizing a Java Application: Single-Stage vs. Multi-Stage Builds

- Dockerizing a React Application: The Power of Multi-Stage Builds (copied from ChatGPT; I am not familiar with React applications)

- Key Takeaways from My Dockerfile Optimization Journey

- Final Thoughts

As a DevOps enthusiast, optimizing Dockerfiles has been an enlightening journey. Over the past few weeks, I've been working on Dockerizing different applications, including simple static websites, Java applications, and React apps. This article showcases my learning process, improvements made, and the impact of these optimizations on Docker image sizes and build efficiency.

Optimizing a Simple HTML and CSS Website

I started with a basic Dockerfile for a simple HTML and CSS static website. The original Dockerfile looked like this:

FROM ubuntu:latest

# Set the working directory

WORKDIR /var/www/html

# Install Nginx (this creates a layer)

RUN apt update && apt install nginx -y

# Copy files one by one (each COPY command creates a new layer)

COPY index.html .

COPY style.css .

COPY assets/ ./assets/

# Document the port Nginx listens on (its default site uses port 80)

EXPOSE 80

# Start Nginx in the foreground to keep the container running

CMD ["nginx", "-g", "daemon off;"]

The Challenges:

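Multiple Layers: Each RUN and COPY instruction created a separate layer, and files written in one layer still count toward the image size even if a later instruction deletes them.

Unnecessary Files: The default installation of Nginx left behind temporary files, such as the apt package lists, that weren't needed in the final image.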

The Optimized Version:

Here's how I improved the Dockerfile:

FROM ubuntu:latest

# Combine related commands to reduce layers

RUN apt-get update && apt-get install -y nginx && apt-get clean && rm -rf /var/lib/apt/lists/*

# Set the working directory

WORKDIR /var/www/html

# Copy the top-level files in one instruction

COPY index.html style.css ./

# Copy assets separately: COPY copies a directory's contents, not the directory itself

COPY assets/ ./assets/

# Nginx's default site listens on port 80

EXPOSE 80

# Start Nginx in the foreground to keep the container running

CMD ["nginx", "-g", "daemon off;"]

Improvements:

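Layer Reduction: By combining commands, I reduced the number of layers. This matters most when the cleanup runs in the same RUN instruction as the install, because files deleted in a later layer still take up space in the earlier one.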

Cleanup: Removing the apt package lists in the same RUN instruction as the installation kept them out of the image and saved significant space.

Efficient File Copying: Grouping the file copies into fewer COPY instructions reduced the number of layers.

Result: The image size decreased from 138 MB to 98.5 MB, a 28.6% reduction!
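Why the cleanup has to share a layer with the install is easiest to see side by side. The sketch below is illustrative only and is not the Dockerfile measured above; the `--no-install-recommends` flag is an addition that the original does not use.

```dockerfile
FROM ubuntu:latest

# Anti-pattern, shown as comments for contrast: the package lists are
# committed in the first layer, so deleting them in a second RUN hides
# them but does not shrink the image.
#   RUN apt-get update && apt-get install -y nginx
#   RUN rm -rf /var/lib/apt/lists/*

# Install and clean up in the SAME layer so the lists are never committed.
# --no-install-recommends (an assumption, not in the original) skips
# optional packages that Nginx does not strictly need.
RUN apt-get update \
 && apt-get install -y --no-install-recommends nginx \
 && rm -rf /var/lib/apt/lists/*
```

Building both variants and running `docker history` on each image should show where the space goes, layer by layer.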

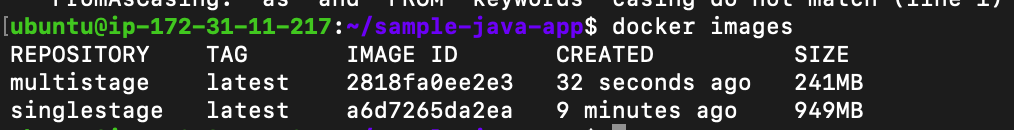
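A further option, not measured in this article, is to skip the Ubuntu base and start from the official `nginx:alpine` image, the same image used in the React section. This is a minimal sketch that assumes the same three inputs (`index.html`, `style.css`, `assets/`) and relies on that image serving `/usr/share/nginx/html` on port 80 by default.

```dockerfile
# Official Nginx image on Alpine; Nginx is already installed and its
# default command already runs it in the foreground.
FROM nginx:alpine

# Copy the site into the directory this image serves by default.
COPY index.html style.css /usr/share/nginx/html/
COPY assets/ /usr/share/nginx/html/assets/

# Document the port Nginx listens on in this image.
EXPOSE 80
```

No `RUN` or `CMD` is needed here, so there is no package cache to clean up.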

Dockerizing a Java Application: Single-Stage vs. Multi-Stage Builds

Next, I moved on to Dockerizing a Java application. Initially, I created a single-stage Dockerfile:

FROM ubuntu

RUN apt-get update && apt-get install -y default-jdk maven

WORKDIR /app

COPY . .

RUN mvn clean package

CMD [ "java","-jar","target/app.jar" ]

Single-Stage Challenges:

Large Image Size: This approach included unnecessary build tools and dependencies in the final image.

Inefficient for Production: The image was bulky and not optimized for a production environment.

Optimizing with Multi-Stage Builds:

To tackle these issues, I used a multi-stage build approach:

FROM ubuntu AS stage1

RUN apt-get update && apt-get install -y default-jdk maven

WORKDIR /app

COPY . .

RUN mvn clean package

# Use a slim Java runtime for the final image

FROM openjdk:11-jre-slim

WORKDIR /app

# Copy the JAR file from the previous stage

COPY --from=stage1 /app/target/*.jar app.jar

# Run the application

CMD ["java", "-jar", "app.jar"]

Multi-Stage Benefits:

Reduced Image Size: Only the necessary runtime and application files were included in the final image.

Production-Ready: The slim image made it more suitable for deployment in production environments.

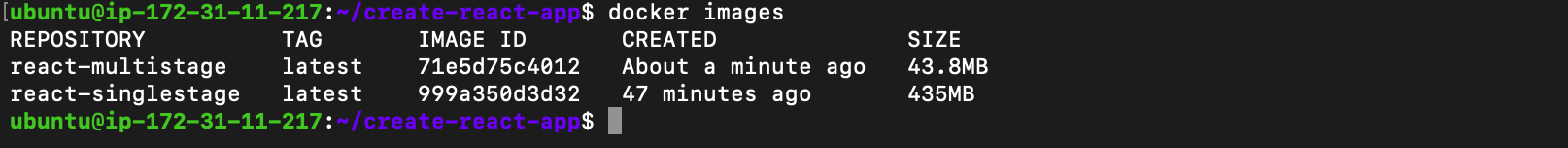
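One thing to watch in the multi-stage version is that the JDK in the build stage and the JRE in the final stage should target the same Java version. `default-jdk` on a recent Ubuntu may be newer than Java 11, and the `openjdk` image on Docker Hub has been marked deprecated. The sketch below is one possible variant, not the Dockerfile used for the results above. It assumes a standard Maven layout (`pom.xml` plus `src/`), a project that targets Java 17, and the `maven:3.9-eclipse-temurin-17` and `eclipse-temurin:17-jre` image tags.

```dockerfile
# Stage 1: build with the official Maven image (assumed tag, JDK 17)
FROM maven:3.9-eclipse-temurin-17 AS build
WORKDIR /app

# Copy the POM first so dependency downloads are cached
# until pom.xml changes.
COPY pom.xml .
RUN mvn -B dependency:go-offline

# Copy the sources and build the JAR.
COPY src ./src
RUN mvn -B clean package

# Stage 2: runtime only, same Java major version as the build stage
FROM eclipse-temurin:17-jre
WORKDIR /app

# Assumes the build produces exactly one JAR in target/.
COPY --from=build /app/target/*.jar app.jar

CMD ["java", "-jar", "app.jar"]
```

Using a prebuilt Maven image also removes the `apt-get` step from the build stage entirely.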

Dockerizing a React Application: The Power of Multi-Stage Builds (copied from ChatGPT; I am not familiar with React applications)

Finally, I worked on Dockerizing a React application. I began with a single-stage Dockerfile:

# Use a Node.js base image

FROM node:16-alpine

# Set the working directory inside the container

WORKDIR /app

# Copy package.json and package-lock.json first to leverage Docker cache

COPY package*.json ./

# Install dependencies

RUN npm install

# Copy the rest of the application code

COPY . .

# Build the React application

RUN npm run build

# Install a simple web server to serve the static files

RUN npm install -g serve

# Set the command to start the web server

CMD ["serve", "-s", "build"]

# Expose the port on which the app will run

EXPOSE 3000

Single-Stage Challenges:

Large Image Size: The inclusion of development dependencies led to a larger image.

Inefficiency: The final image contained unnecessary files and tools not required for running the application.

Multi-Stage Build Optimization:

To address these issues, I optimized the Dockerfile using a multi-stage build:

# Stage 1: Build Stage

FROM node:16-alpine AS build

# Set the working directory inside the container

WORKDIR /app

# Copy package.json and package-lock.json to leverage Docker cache

COPY package*.json ./

# Install dependencies

RUN npm install

# Copy the rest of the application code

COPY . .

# Build the React application

RUN npm run build

# Stage 2: Production Stage

FROM nginx:alpine

# Copy the build output from the first stage to NGINX's default directory

COPY --from=build /app/build /usr/share/nginx/html

# Expose port 80 to serve the app

EXPOSE 80

# Start NGINX

CMD ["nginx", "-g", "daemon off;"]

Improvements:

Lean Image: The production stage only includes the necessary files for serving the app, resulting in a much smaller image.

Better Performance: The final image was lighter, faster to deploy, and easier to manage.

Key Takeaways from My Dockerfile Optimization Journey

Understand the Importance of Layers: Instructions such as RUN, COPY, and ADD each add a filesystem layer to the image. Combining related commands, especially an install and its cleanup, can significantly reduce image size.

Use Multi-Stage Builds: Multi-stage builds are powerful for creating lean and optimized Docker images, especially for production environments.

Clean Up Unnecessary Files: Removing temporary files and unused dependencies in the same RUN instruction that created them can greatly reduce image size.

Leverage Docker Cache: Ordering instructions from least to most frequently changed, for example copying dependency manifests before the source code, lets Docker reuse cached layers and leads to faster builds.
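The cache takeaway can be sketched with the Node build stage from the React section. This is a minimal example under stated assumptions: the project commits a `package-lock.json`, `node_modules` is excluded through a `.dockerignore` file, and `npm ci` is swapped in for the `npm install` used earlier.

```dockerfile
FROM node:16-alpine AS build
WORKDIR /app

# Changes rarely: copy only the dependency manifests first.
COPY package.json package-lock.json ./

# This layer is reused from cache until one of the two manifests changes.
# npm ci (a swap for npm install) installs exactly what the lockfile records.
RUN npm ci

# Changes often: editing source invalidates the cache only from here down.
COPY . .
RUN npm run build
```

If `COPY . .` came before the install step, every source edit would force a full dependency reinstall.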

Final Thoughts

Dockerfile optimization is a crucial skill for anyone looking to work in DevOps. Through these experiments, I learned that even small changes can make a significant impact on the efficiency and performance of your Docker images. Whether you’re working with static websites, Java applications, or React apps, understanding how to optimize your Dockerfiles is key to creating efficient and scalable containers.
