How to Set Up WebGPU with TypeScript and Vite: A Simplified Guide

Gift Mugweni


Hello 👋, how are you doing? It's a lovely day right? In my last post, I said I was making a game engine and thought instead of writing documentation for it I might as well make blog articles to document my journey (I mean it's basically free content).

I'm decently far into the project at present, but it didn't feel right to start from my current point as there's too much missing context. So let's use the power of time travel and go way back to when I started the project.

Setting up the project

So far, we've been using vanilla JavaScript to work with WebGPU and I probably could make the entire engine that way. I didn't want to though as I feel more comfortable working with TypeScript because of its sweet sweet useless types that operate on the "Trust me bro" ethos. As such, we're gonna have to create a basic build setup. I used to use Webpack but thought I'd give Vite a try for a change as I'd heard good things about it.

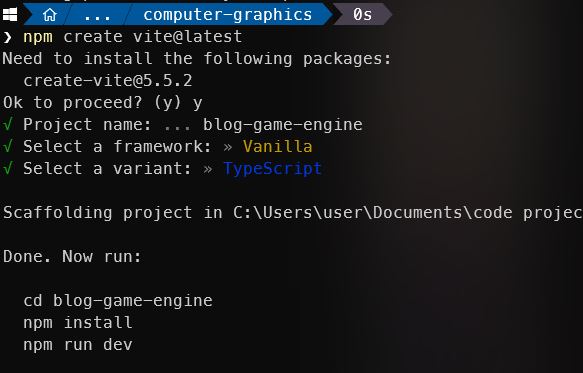

To create a project you run the below command in your terminal.

npm create vite@latest

We're not making anything extra so the vanilla-ts template will do just fine. Here's a screenshot of the options selected in the setup.

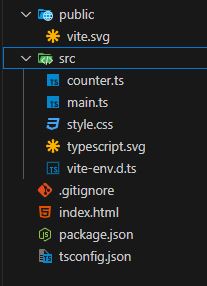

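When you open the project, you should see more or less the standard vanilla-ts template files, including index.html, tsconfig.json, and a src folder containing main.ts, counter.ts, style.css, typescript.svg, and vite-env.d.ts.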

We don't need the counter.ts, style.css, and typescript.svg files so you're free to delete those at your pleasure.

The next thing we need is to add the types for WebGPU since they do not come pre-added at the moment. To download the types run the below command.

npm i @webgpu/types

This downloads the package but we still need to link it to our project so modify the tsconfig.json to look as below.

{
    "compilerOptions": {
        "target": "ES2020",
        "useDefineForClassFields": true,
        "module": "ESNext",
        "lib": [
            "ES2020",
            "DOM",
            "DOM.Iterable"
        ],
        "skipLibCheck": true,

        /*********************THIS IS THE IMPORTANT PART!! ******/
        "types": ["@webgpu/types"],
        /******************************************************/

        /* Bundler mode */
        "moduleResolution": "bundler",
        "allowImportingTsExtensions": true,
        "resolveJsonModule": true,
        "isolatedModules": true,
        "moduleDetection": "force",
        "noEmit": true,

        /* Linting */
        "strict": true,
        "noUnusedLocals": true,
        "noUnusedParameters": true,
        "noFallthroughCasesInSwitch": true
    },
    "include": [
        "src"
    ]
}

When I did this my types still weren't showing and after some googling, I also modified the vite-env.d.ts file to look like this.

/// <reference types="vite/client" />

/// <reference types="@webgpu/types" />

If you're using the VSCode IDE, you can also download these extensions that introduce some much-needed syntax highlighting in the wgsl shader code.

Next, replace the index.html and main.ts files with the below code.

index.html

<!doctype html>
<html lang="en">
    <head>
        <meta charset="UTF-8" />
        <link rel="icon" type="image/svg+xml" href="/vite.svg" />
        <meta name="viewport" content="width=device-width, initial-scale=1.0" />
        <title>Web Game Engine</title>
        <style>
            html, body {
                margin: 0px;
                padding: 0px;
                width: 100%;
                height: 99.74%;
            }
        </style>
    </head>
    <body>
        <canvas id="GLCanvas">
            Your browser does not support the HTML5 canvas.
        </canvas>
        <script type="module" src="/src/main.ts"></script>
    </body>
</html>

main.ts

import { Renderer } from "./Renderer";

const canvas = document.getElementById("GLCanvas") as HTMLCanvasElement
const render = new Renderer(canvas)
render.init()

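The index.html file should look familiar. Its main point is to set up a canvas where the output will be displayed. The style block removes the default margin and padding and stretches html and body to fill the window. Note that it does not target the canvas element itself, so the canvas keeps its default 300x150 size unless you size it separately.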
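If you want the canvas's drawing buffer to match the size it is displayed at, a helper along these lines is one way to do it. This is only a sketch: resizeCanvasToDisplaySize and the CanvasLike type are names I made up for illustration, and it assumes the canvas has been given a CSS size (for example width: 100% and height: 100%), because without one clientWidth simply mirrors the width attribute.

```typescript
// Minimal structural type so the sketch does not depend on DOM typings;
// a real HTMLCanvasElement satisfies it.
interface CanvasLike {
    clientWidth: number   // displayed width in CSS pixels
    clientHeight: number  // displayed height in CSS pixels
    width: number         // drawing buffer width in device pixels
    height: number        // drawing buffer height in device pixels
}

// Hypothetical helper (not part of the Renderer in this article): sizes the
// drawing buffer to the displayed size, scaled by the device pixel ratio and
// clamped to the range [1, maxDimension]. Returns true if the size changed.
function resizeCanvasToDisplaySize(
    canvas: CanvasLike,
    devicePixelRatio: number,
    maxDimension: number
): boolean {
    const clamp = (value: number) =>
        Math.max(1, Math.min(Math.floor(value), maxDimension))

    const width = clamp(canvas.clientWidth * devicePixelRatio)
    const height = clamp(canvas.clientHeight * devicePixelRatio)

    const changed = canvas.width !== width || canvas.height !== height
    if (changed) {
        canvas.width = width
        canvas.height = height
    }
    return changed
}
```

In the browser you would pass the real canvas, window.devicePixelRatio, and device.limits.maxTextureDimension2D, and call it before drawing a frame.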

The main.ts file contains code that will make sense as we go further down. To understand this, we need to ask a basic foundational question.

What is the purpose of a game engine?

Fundamentally, a game engine exists to make developing games easier by abstracting away important but unessential parts of the game-making process. For better context on what this means, let's use a game like FromSoftware's Elden Ring as an example.

As far as the player is concerned, they are playing Elden Ring to enjoy exploring the open world, overcoming tough bosses, and getting lost in the scenery, music, and breathtaking visuals. All these things are difficult to get right and require a lot of effort and time from the game designers to perfect.

What the player forgets is that they are interacting with the game via their controller or keyboard and mouse. They might be playing the game on drastically different screens, using devices with varying ranges of specifications. Aside from this, Elden Ring is a 3D game but they are playing it on a 2D screen so how does that work? How does the animation work? What about the lighting? How does the game handle the multiplayer aspects to ensure no one is cheating or that multiple players see the same thing? Now that we're down this rabbit hole, how even is that music getting played in the first place?

All these questions must be answered before we start dealing with anything the player cares about and as I'm learning, they have no easy answers. As a game designer, you don't want to worry about these things. You're trying to make a game and provide memorable experiences. Could you make these things yourself? Yes, but that needs time, people, and money.

This is where game engines come in. They don't guarantee you've got a good game and aren't all you need either. They're a small part of the bigger process of making games, and they focus primarily on key things like rendering, audio, networking, input, physics, lighting, modelling, etc. Hence my initial explanation of them abstracting away important but unessential elements.

Now that we've answered this question we'll start working on the first element of the game engine, the rendering system.

Building the basic rendering system

What is a rendering system? In short, it's the thing responsible for drawing stuff on the screen. Practically speaking, this is where we'll be putting most of our WebGPU and shader logic as it directly relates to drawing stuff.

To start, we'll be remaking our triangle example as that's the foundational building block. The implementation will be slightly different as I chose to wrap up this logic in a class so it is more compact and makes it easier to add more features.

The init func

The first thing is to define the Renderer class in a new file we'll call Renderer.ts that will house all this logic.

export class Renderer {
    private device!: GPUDevice
    private context!: GPUCanvasContext
    private presentationFormat!: GPUTextureFormat
    private vertexShader!: GPUShaderModule
    private fragmentShader!: GPUShaderModule
    private pipeline!: GPURenderPipeline
    private renderPassDescriptor!: GPURenderPassDescriptor

    constructor(private canvas: HTMLCanvasElement) {
    }

    public async init() {
        await this.getGPUDevice()
        this.configCanvas()
        this.loadShaders()
        this.configurePipeline()
        this.configureRenderPassDescriptor()
        this.render()
    }

When we instantiate the class, we pass in a canvas reference. This allows the class to work with any canvas, which could be handy in the future maybe 🤷‍♂️. The init function is responsible for running the setup steps in order and then drawing a frame, and I'll now explain each step.
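Right now init calls render exactly once, which is enough for a static triangle. If you later want it to redraw every frame, a loop could look something like the sketch below. startRenderLoop and the scheduler parameter are hypothetical names of mine, not part of the Renderer class; the scheduler is passed in so the loop can be exercised outside a browser.

```typescript
type FrameCallback = (timeMs: number) => void
type Scheduler = (callback: FrameCallback) => void

// Hypothetical helper: calls drawFrame once per scheduled frame until the
// returned stop function is invoked. In a browser the scheduler would be
// (cb) => requestAnimationFrame(cb).
function startRenderLoop(drawFrame: FrameCallback, schedule: Scheduler): () => void {
    let running = true

    const tick: FrameCallback = (timeMs) => {
        if (!running) return // stop() was called, so do not draw or reschedule
        drawFrame(timeMs)
        schedule(tick)       // queue the next frame
    }

    schedule(tick)
    return () => { running = false }
}
```

Inside init, the final this.render() call could then become something like startRenderLoop(() => this.render(), (cb) => requestAnimationFrame(cb)).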

Getting a GPU device and configuring canvas

The first thing we need is access to the GPU device on the host machine.

private async getGPUDevice() {
    // navigator.gpu is undefined in browsers without WebGPU, hence the optional chaining
    const adapter = await navigator.gpu?.requestAdapter()
    const device = await adapter?.requestDevice()

    if (!device) {
        this.fail("Browser does not support WebGPU")
        return
    }

    this.device = device
}

private fail(msg: string) {
    document.body.innerHTML = `<H1>${msg}</H1>`
}
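One thing to watch with this version: after fail is called, init still carries on to configCanvas with no device set. A variant that throws instead would stop the setup at the first problem. The sketch below is one way to do that under my own assumptions: acquireDevice, GpuLike, and AdapterLike are names invented for illustration, with the two interfaces standing in for the WebGPU typings so the snippet is self-contained.

```typescript
// Minimal structural stand-ins for the WebGPU interfaces (assumption: only
// the methods used here). The real navigator.gpu and GPUAdapter satisfy them.
interface AdapterLike<D> {
    requestDevice(): Promise<D>
}
interface GpuLike<D> {
    requestAdapter(): Promise<AdapterLike<D> | null>
}

// Hypothetical variant of getGPUDevice: rejects with a specific reason so the
// caller stops instead of continuing with an undefined device.
async function acquireDevice<D>(gpu: GpuLike<D> | undefined): Promise<D> {
    if (!gpu) {
        throw new Error("This browser does not expose navigator.gpu")
    }
    const adapter = await gpu.requestAdapter()
    if (!adapter) {
        throw new Error("No suitable GPU adapter was found")
    }
    return adapter.requestDevice()
}
```

In the Renderer, init could wrap its body in a try/catch, call acquireDevice(navigator.gpu), and pass the error message to fail in the catch branch.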

Next, we need to set up the canvas to accept the output from our GPU.

private configCanvas() {
    const context = this.canvas.getContext("webgpu")

    if (!context) {
        this.fail("Failed to get canvas context")
        return
    }

    this.context = context
    // Ask the browser which texture format the canvas prefers on this system
    this.presentationFormat = navigator.gpu.getPreferredCanvasFormat()

    context.configure({
        device: this.device,
        format: this.presentationFormat
    })
}

Loading shaders

After setting up the canvas, we load in the vertex and fragment shaders.

private loadShaders() {
    this.loadVertexShader()
    this.loadFragmentShader()
}

private loadVertexShader() {
    this.vertexShader = this.device.createShaderModule({
        label: "Vertex Shader",
        code: /* wgsl */ `
            // data structure to store output of vertex function
            struct VertexOut {
                @builtin(position) pos: vec4f,
                @location(0) color: vec4f
            };

            // process the points of the triangle
            @vertex
            fn vs(
                @builtin(vertex_index) vertexIndex : u32
            ) -> VertexOut {
                let pos = array(
                    vec2f(   0,  0.8), // top center
                    vec2f(-0.8, -0.8), // bottom left
                    vec2f( 0.8, -0.8)  // bottom right
                );

                let color = array(
                    vec4f(1.0, .0, .0, .0),
                    vec4f( .0, 1., .0, .0),
                    vec4f( .0, .0, 1., .0)
                );

                var out: VertexOut;
                out.pos = vec4f(pos[vertexIndex], 0.0, 1.0);
                out.color = color[vertexIndex];

                return out;
            }
        `
    })
}

private loadFragmentShader() {
    this.fragmentShader = this.device.createShaderModule({
        label: "Fragment Shader",
        code: /* wgsl */ `
            // data structure to input to fragment shader
            struct VertexOut {
                @builtin(position) pos: vec4f,
                @location(0) color: vec4f
            };

            // set the colors of the area within the triangle
            @fragment
            fn fs(in: VertexOut) -> @location(0) vec4f {
                return in.color;
            }
        `
    })
}

You'll notice I've now split the vertex and fragment shaders into separate variables. This will allow us to easily change the vertex and fragment shaders on the fly as we go forward. A downside of this is the duplication of the VertexOut struct but that's not so bad right?
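If the duplicated struct does start to bother you, one possible workaround is to declare it once as a TypeScript string and splice it into each shader's source. This is just a sketch of that idea, not what the engine does: VERTEX_OUT_STRUCT, withSharedStructs, and the two source constants are names I picked for illustration.

```typescript
// Shared WGSL declaration, written once on the TypeScript side.
const VERTEX_OUT_STRUCT = /* wgsl */ `
struct VertexOut {
    @builtin(position) pos: vec4f,
    @location(0) color: vec4f
};
`

// Hypothetical helper: prepends the shared declarations to a shader body.
function withSharedStructs(body: string): string {
    return `${VERTEX_OUT_STRUCT}\n${body}`
}

const vertexShaderSource = withSharedStructs(/* wgsl */ `
@vertex
fn vs(@builtin(vertex_index) vertexIndex : u32) -> VertexOut {
    let pos = array(
        vec2f( 0.0,  0.8), // top center
        vec2f(-0.8, -0.8), // bottom left
        vec2f( 0.8, -0.8)  // bottom right
    );
    let color = array(
        vec4f(1.0, 0.0, 0.0, 0.0),
        vec4f(0.0, 1.0, 0.0, 0.0),
        vec4f(0.0, 0.0, 1.0, 0.0)
    );

    var out: VertexOut;
    out.pos = vec4f(pos[vertexIndex], 0.0, 1.0);
    out.color = color[vertexIndex];
    return out;
}
`)

const fragmentShaderSource = withSharedStructs(/* wgsl */ `
@fragment
fn fs(in: VertexOut) -> @location(0) vec4f {
    return in.color;
}
`)
```

Each string would then be passed as the code field of device.createShaderModule, exactly like the inline versions above. The trade-off is that the shader source is no longer readable in one place.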

Another important point is that @builtin(position) pos: vec4f does not hold the same values in the vertex and fragment shaders. The vertex shader runs once per vertex, so across the whole draw its pos output only ever takes three values, one for each corner of the triangle. These three points enclose a region of pixels, which is determined by a process called rasterization. The fragment shader is then executed for every pixel in that region. What this means in practice is that pos in the fragment shader holds the coordinates of the pixel currently being shaded, so it takes as many different values as there are pixels in the region. This is an important point to remember if you want to do any fancy shader stuff and you can find more details about it in this article.
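To make the difference concrete, here is a small sketch that maps the three positions written by the vertex shader to the pixel coordinates the fragment shader ends up working in. It assumes a viewport covering the whole canvas and uses the default 300x150 canvas size; ndcToFramebuffer and Point are illustrative names of mine, not WebGPU APIs.

```typescript
interface Point {
    x: number
    y: number
}

// Maps an xy position from the vertex shader (w is 1 here, so clip space and
// normalized device coordinates coincide) to framebuffer coordinates for a
// viewport covering the whole canvas. x grows to the right and y grows
// downward from the top-left corner, so the y axis is flipped.
function ndcToFramebuffer(ndc: Point, width: number, height: number): Point {
    return {
        x: (ndc.x + 1) * 0.5 * width,
        y: (1 - ndc.y) * 0.5 * height,
    }
}

// The three corners from the vertex shader in this article.
const triangleCorners: Point[] = [
    { x: 0, y: 0.8 },     // top center
    { x: -0.8, y: -0.8 }, // bottom left
    { x: 0.8, y: -0.8 },  // bottom right
]

// On a 300x150 canvas these land at roughly (150, 15), (30, 135), (270, 135).
const pixelCorners = triangleCorners.map((corner) => ndcToFramebuffer(corner, 300, 150))
```

The fragment shader then runs for the pixels covered by the triangle with those corners, and its pos.xy holds the center of each such pixel (for example 150.5, 15.5) rather than any of the three values above.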

Configuring pipeline

Our pipeline is still pretty basic, so it hasn't changed from our last version.

private configurePipeline() {
    this.pipeline = this.device.createRenderPipeline({
        label: "Render Pipeline",
        layout: "auto",
        vertex: { module: this.vertexShader },
        fragment: {
            module: this.fragmentShader,
            targets: [{ format: this.presentationFormat }]
        }
    })
}

private configureRenderPassDescriptor() {
    this.renderPassDescriptor = {
        label: "Render Pass Description",
        colorAttachments: [{
            clearValue: [0.0, 0.8, 0.0, 1.0],
            loadOp: "clear",
            storeOp: "store",
            view: this.context.getCurrentTexture().createView()
        }]
    }
}

Rendering function

This too is still the basic implementation with nothing special.

private render() {
    // The canvas hands out a new texture for each frame, so refresh the view before encoding
    (this.renderPassDescriptor.colorAttachments as any)[0].view = this.context.getCurrentTexture().createView()

    const encoder = this.device.createCommandEncoder({ label: "render encoder" })
    const pass = encoder.beginRenderPass(this.renderPassDescriptor)
    pass.setPipeline(this.pipeline)
    pass.draw(3) // three vertices, one per corner of the triangle defined in the vertex shader
    pass.end()

    this.device.queue.submit([encoder.finish()])
}
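A side note on the as any cast in render: as far as I can tell it is needed because the typings declare colorAttachments as an iterable rather than an array, so it cannot be indexed directly (treat that reading of @webgpu/types as an assumption). One way around the cast is to keep your own reference to the attachment object. The sketch below uses stand-in types and a made-up createRenderPass helper so that it is self-contained.

```typescript
// Minimal stand-ins for the WebGPU descriptor types (assumption: only the
// fields used in this article), so the sketch compiles without @webgpu/types.
interface ColorAttachmentLike<V> {
    view: V
    clearValue: number[]
    loadOp: "clear" | "load"
    storeOp: "store" | "discard"
}
interface RenderPassDescriptorLike<V> {
    label?: string
    colorAttachments: Iterable<ColorAttachmentLike<V> | null>
}

// Hypothetical helper: builds the descriptor and also returns the attachment
// object itself, so its view can be updated each frame without a cast.
function createRenderPass<V>(initialView: V) {
    const colorAttachment: ColorAttachmentLike<V> = {
        view: initialView,
        clearValue: [0.0, 0.8, 0.0, 1.0],
        loadOp: "clear",
        storeOp: "store",
    }
    const descriptor: RenderPassDescriptorLike<V> = {
        label: "Render Pass Description",
        colorAttachments: [colorAttachment], // same object, not a copy
    }
    return { colorAttachment, descriptor }
}
```

In the Renderer this would mean storing the attachment in its own private field and writing this.colorAttachment.view = this.context.getCurrentTexture().createView() at the top of render.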

Conclusion

Congratulations 👏👏👏. You just spent all this effort to recreate the same thing you made last time. But, now you have TYPES 🎉🎉. I recognize that this might be a bit annoying but I felt it important to show all this so that we can start on the same page moving forward. Sadly I'll have to stop here for now as this article is already pretty long but next, we'll begin refining this foundation and shaping it into our wonderful dodgy engine.
