Java Caching Framework: Design, Implement, and Optimize

Sourav Mansingh


I've been searching for full-time opportunities as a Backend Software Engineer and have attended many interviews that test Java fundamentals, system design concepts, and coding standards. To be honest, I've been struggling in these areas because I've been out of touch for over a year. In one interview, I was asked to create a simple caching application; in another, I was asked to explain how a thread returns a value. I succeeded in one and failed in the other. Reflecting on these experiences, I decided to create an application that serves as a caching framework and also demonstrates how a thread can return a value.

Introduction:

Caching is a fundamental technique for optimizing application performance, particularly in scenarios where data retrieval from a primary source (like a database) is costly in terms of time or resources. A well-designed cache can significantly reduce latency and improve the overall responsiveness of an application. In this article, we'll explore how to build a robust and scalable caching framework in Java, suitable for various use cases, including multi-threaded environments.

We'll cover everything from designing the cache and implementing a Least Recently Used (LRU) eviction policy to making the framework available for others to use in their projects.

Why Caching Matters

Caching provides several benefits:

Improved Performance: By storing frequently accessed data in memory, caches reduce the time needed to retrieve data from slower sources.

Reduced Load: Caching can reduce the load on databases or external APIs, preventing them from being overwhelmed by repeated requests.

Scalability: Effective caching can help applications scale by minimizing resource usage.

However, implementing an efficient cache requires careful consideration of factors like eviction policies, thread safety, and memory management.

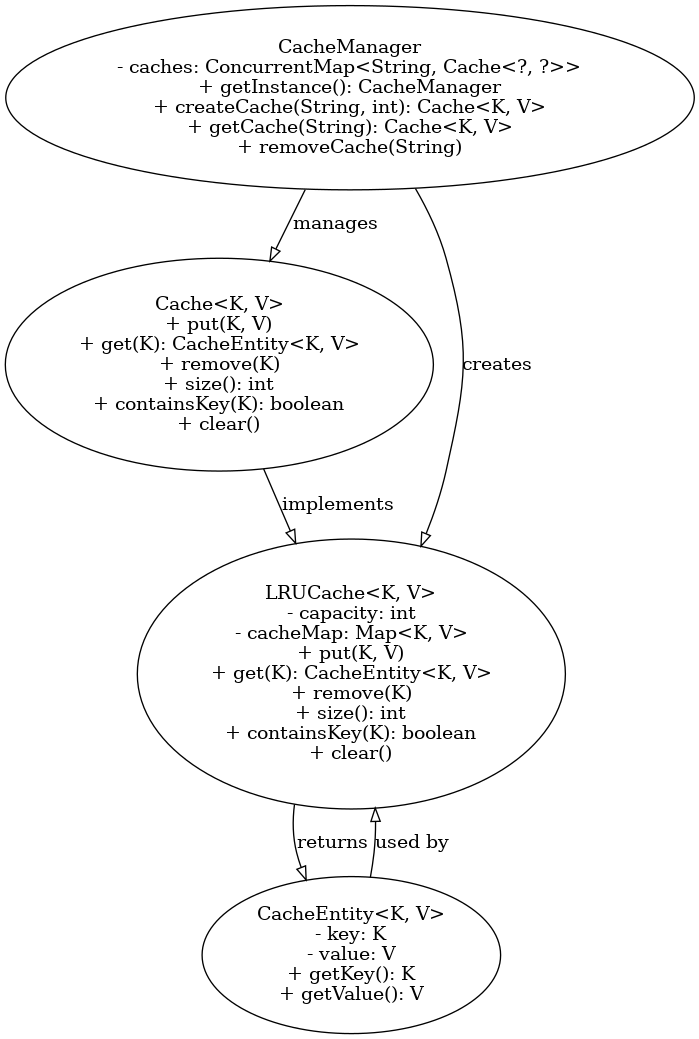

Designing the Cache Framework

Our caching framework is designed to be generic, flexible, and easy to integrate into any Java application. The key components include:

Cache Interface: A generic interface that defines the basic operations for any cache implementation.

LRUCache: A concrete implementation that uses a Least Recently Used (LRU) eviction policy.

CacheManager: A singleton class responsible for managing cache instances.

SOLID Principles in Action

The design of this caching framework adheres to the SOLID principles, which are key to creating maintainable and scalable software.

Single Responsibility Principle (SRP):

- Each class in the framework has a single responsibility. For instance, CacheManager is solely responsible for managing cache instances, while LRUCache focuses on cache operations and the eviction policy.

Open/Closed Principle (OCP):

- The framework is open for extension but closed for modification. Developers can extend the cache by implementing the Cache<K, V> interface without modifying the existing code.
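As the code later in the article shows, CacheManager.createCache instantiates LRUCache directly, so a new implementation can be used on its own but cannot be registered through the manager without editing it. One way to close that gap is to let callers pass a factory. The sketch below assumes a trimmed-down SimpleCache interface (its get returns V rather than CacheEntity) and hypothetical FifoCache and PluggableCacheManager classes; none of these are part of the author's repository.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

// Trimmed stand-in for the article's Cache<K, V>; get returns V directly for brevity.
interface SimpleCache<K, V> {
    void put(K key, V value);
    V get(K key);
    int size();
}

// Hypothetical new eviction policy (FIFO) added without touching existing classes:
// an insertion-ordered LinkedHashMap (accessOrder = false) drops the oldest insert.
class FifoCache<K, V> implements SimpleCache<K, V> {
    private final Map<K, V> map;

    FifoCache(int capacity) {
        this.map = new LinkedHashMap<K, V>(16, 0.75f, false) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > capacity;
            }
        };
    }

    public synchronized void put(K key, V value) { map.put(key, value); }
    public synchronized V get(K key) { return map.get(key); }
    public synchronized int size() { return map.size(); }
}

// Hypothetical manager variant: callers supply the implementation,
// so adding FifoCache (or any other policy) requires no change here.
class PluggableCacheManager {
    private final Map<String, SimpleCache<?, ?>> caches = new ConcurrentHashMap<>();

    <K, V> SimpleCache<K, V> createCache(String name, Supplier<SimpleCache<K, V>> factory) {
        SimpleCache<K, V> cache = factory.get();
        // putIfAbsent makes the "already exists" check and the insert one atomic step
        if (caches.putIfAbsent(name, cache) != null) {
            throw new IllegalStateException("Cache already exists: " + name);
        }
        return cache;
    }
}
```

Under the same assumptions, a caller would write `manager.createCache("fifo", () -> new FifoCache<String, Integer>(2))`. FifoCache differs from LRUCache only in the accessOrder flag: with false, reads do not refresh an entry, so the oldest insert is evicted first.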

Liskov Substitution Principle (LSP):

- The Cache<K, V> interface defines a common contract, so any implementation that honors it can be substituted for another without affecting the functionality of the application. This makes it easy to swap out different cache implementations if needed.

Interface Segregation Principle (ISP):

- The Cache<K, V> interface is minimal, containing only the necessary methods for cache operations. This avoids forcing developers to implement unnecessary methods.

Dependency Inversion Principle (DIP):

- High-level modules, such as application code using the cache, depend on abstractions (Cache<K, V>) rather than concrete implementations (LRUCache<K, V>). This makes the code more flexible and easier to maintain.
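To make the dependency direction concrete, here is a minimal sketch of a high-level service that only knows about a cache abstraction. KeyValueCache, UserNameService and MapCache are hypothetical names, and KeyValueCache is a cut-down stand-in for the article's Cache<K, V>. The service also illustrates the cache-aside read path described in the introduction: check the cache first, and only on a miss go to the slower primary source.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Cut-down stand-in for the article's Cache<K, V> abstraction (get returns V here).
interface KeyValueCache<K, V> {
    V get(K key);
    void put(K key, V value);
}

// Hypothetical high-level service: it depends only on the cache abstraction
// and on a loader for the primary source (e.g. a database query), both injected.
class UserNameService {
    private final KeyValueCache<Long, String> cache;
    private final Function<Long, String> loader;

    UserNameService(KeyValueCache<Long, String> cache, Function<Long, String> loader) {
        this.cache = cache;
        this.loader = loader;
    }

    // Cache-aside read: serve from the cache, fall back to the loader on a miss,
    // and store the loaded value for the next caller.
    String findName(long id) {
        String name = cache.get(id);
        if (name == null) {
            name = loader.apply(id);
            if (name != null) {
                cache.put(id, name);
            }
        }
        return name;
    }
}

// Any implementation can be injected; a plain HashMap is enough for a unit test.
class MapCache<K, V> implements KeyValueCache<K, V> {
    private final Map<K, V> map = new HashMap<>();

    public V get(K key) { return map.get(key); }

    public void put(K key, V value) { map.put(key, value); }
}
```

In production the constructor would receive a real cache implementation and a loader that queries the database; in a unit test it receives MapCache and a stub lambda, and UserNameService itself does not change.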

Design Patterns in the Framework

Several design patterns are employed to create a robust and flexible caching framework:

Singleton Pattern:

- The CacheManager class is implemented as a singleton, ensuring that only one instance of the cache manager exists throughout the application. This centralizes cache management and avoids problems such as two managers keeping separate registries for the same cache name.

public static CacheManager getInstance() {
    return Holder.INSTANCE;
}
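The Holder class used by getInstance() is the initialization-on-demand holder idiom. The JVM does not initialize a nested class until it is first used, and it runs class initialization exactly once under an internal lock, so the instance is created lazily and safely without synchronized or volatile. A minimal stand-alone sketch of the same idiom, using a hypothetical Registry class and a hypothetical instancesCreated counter to make the laziness visible:

```java
// Hypothetical stand-in class using the same holder idiom as CacheManager.
final class Registry {
    // Hypothetical counter, only here to show when construction happens
    static int instancesCreated = 0;

    private Registry() {
        // Runs on the first getInstance() call, not when Registry itself is loaded
        instancesCreated++;
    }

    // The JVM initializes Holder on first access to INSTANCE and guarantees
    // class initialization happens exactly once, so getInstance() needs
    // no synchronized keyword or volatile field.
    private static final class Holder {
        private static final Registry INSTANCE = new Registry();
    }

    static Registry getInstance() {
        return Holder.INSTANCE;
    }
}
```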

Factory Method Pattern:

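- The CacheManager class acts as a factory for creating cache instances. The createCache method encapsulates the logic for creating Cache objects, so callers never instantiate LRUCache directly. (Strictly speaking, this is a simple factory rather than the GoF Factory Method, which delegates the choice of class to subclasses, but the goal of hiding construction is the same.)

public <K, V> Cache<K, V> createCache(String cacheName, int size) {
    Cache<K, V> cache = new LRUCache<>(size);
    caches.put(cacheName, cache);
    return cache;
}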

Class-Level Diagram

Cache Interface

The Cache interface defines the contract for any cache implementation:

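package com.eomaxl.utilities;

import com.eomaxl.entity.CacheEntity;

public interface Cache<K, V> {

    // Inserts the value, or replaces it if the key is already present
    void put(K key, V value);

    // Returns the key-value pair, or null on a cache miss
    CacheEntity<K, V> get(K key);

    void remove(K key);

    void clear();

    int size();

    boolean containsKey(K key);
}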

This interface ensures that any cache implementation provides basic operations like put, get, and remove.

Cache Entity

The CacheEntity class encapsulates the key-value pair stored in the cache:

package com.eomaxl.entity;

// Read-only key-value pair returned by Cache.get()
public class CacheEntity<K, V> {

    private final K key;
    private final V value;

    public CacheEntity(K key, V value) {
        this.key = key;
        this.value = value;
    }

    public K getKey() {
        return key;
    }

    public V getValue() {
        return value;
    }
}

This class can be extended to include additional metadata, such as timestamps or access counters, making it more versatile for different use cases.
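As one example of such an extension, here is a sketch of a subclass that records when the entry was created, so that a time-to-live check becomes possible. TimedCacheEntity and isExpired are hypothetical names, and the base class is a package-private copy of the article's CacheEntity so the block compiles on its own. Passing the current time in, rather than calling Instant.now() inside, keeps the check easy to test.

```java
import java.time.Duration;
import java.time.Instant;

// The article's CacheEntity, repeated so this block compiles on its own.
class CacheEntity<K, V> {
    private final K key;
    private final V value;

    CacheEntity(K key, V value) {
        this.key = key;
        this.value = value;
    }

    public K getKey() { return key; }

    public V getValue() { return value; }
}

// Hypothetical subclass: same key/value pair plus a creation timestamp
// that a TTL-aware cache could check on each get().
class TimedCacheEntity<K, V> extends CacheEntity<K, V> {
    private final Instant createdAt;

    TimedCacheEntity(K key, V value, Instant createdAt) {
        super(key, value);
        this.createdAt = createdAt;
    }

    Instant getCreatedAt() { return createdAt; }

    // Expired once strictly more than ttl has elapsed since createdAt.
    boolean isExpired(Duration ttl, Instant now) {
        return createdAt.plus(ttl).isBefore(now);
    }
}
```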

LRUCache Implementation

The LRUCache class implements the Cache interface, using a LinkedHashMap to maintain the order of elements based on access, which is crucial for the LRU eviction policy:

package com.eomaxl.utilities.Impl;

import java.util.LinkedHashMap;
import java.util.Map;

import com.eomaxl.utilities.Cache;
import com.eomaxl.entity.CacheEntity;

public class LRUCache<K, V> implements Cache<K, V> {

    private final int capacity;
    private final Map<K, V> cacheMap;

    public LRUCache(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive: " + capacity);
        }
        this.capacity = capacity;
        // accessOrder = true: iteration runs from least to most recently accessed
        this.cacheMap = new LinkedHashMap<K, V>(capacity, 0.75f, true) {
            private static final long serialVersionUID = 1L;

            // Invoked by put() after inserting a new entry; returning true evicts the eldest (LRU) entry
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > LRUCache.this.capacity;
            }
        };
    }

    @Override
    public synchronized void put(K key, V value) {
        cacheMap.put(key, value);
    }

    // Synchronized as well: in access-order mode, get() reorders the map
    @Override
    public synchronized CacheEntity<K, V> get(K key) {
        V value = cacheMap.get(key);
        if (value != null) {
            return new CacheEntity<>(key, value);
        }
        return null; // cache miss
    }

    @Override
    public synchronized int size() {
        return cacheMap.size();
    }

    @Override
    public synchronized void remove(K key) {
        cacheMap.remove(key);
    }

    @Override
    public synchronized boolean containsKey(K key) {
        return cacheMap.containsKey(key);
    }

    @Override
    public synchronized void clear() {
        cacheMap.clear();
    }
}

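This class is thread-safe: every public method is synchronized on the cache instance, so each operation runs atomically and its effects are visible to other threads. Note that get needs the lock too, because in an access-ordered LinkedHashMap even a lookup moves the entry to the most recently used position, which is a structural modification.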
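The eviction behavior comes entirely from two LinkedHashMap features: the accessOrder constructor flag and the removeEldestEntry hook. The stand-alone sketch below (AccessOrderDemo is a hypothetical name, not part of the framework) isolates them so the order of eviction can be traced by hand:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical demo isolating the mechanism LRUCache relies on.
final class AccessOrderDemo {
    static <K, V> Map<K, V> lruMap(int capacity) {
        // accessOrder = true: iteration runs from least to most recently accessed
        return new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                // Checked after each new insert; true drops the least recently used entry
                return size() > capacity;
            }
        };
    }

    static List<String> run() {
        Map<String, Integer> m = lruMap(2);
        m.put("a", 1);
        m.put("b", 2);
        m.get("a");    // "a" becomes most recently used; "b" is now the eldest
        m.put("c", 3); // size would be 3 > 2, so "b" is evicted
        return new ArrayList<>(m.keySet());
    }
}
```

Tracing run(): after putting "a" and "b" the order is [a, b]; get("a") moves "a" to the end, giving [b, a]; putting "c" pushes the size to 3, so removeEldestEntry evicts "b" and the keys are [a, c]. With accessOrder set to false the same steps would evict "a" instead.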

CacheManager

The CacheManager is a singleton class that provides a centralized way to create and manage cache instances:

import com.eomaxl.utilities.Cache;
import com.eomaxl.utilities.Impl.LRUCache;

import java.util.concurrent.ConcurrentHashMap;

public class CacheManager {

    // Registry of named caches; safe for concurrent lookups and updates
    private final ConcurrentHashMap<String, Cache<?, ?>> caches;

    private CacheManager() {
        caches = new ConcurrentHashMap<>();
    }

    private static class Holder {
        private static final CacheManager INSTANCE = new CacheManager();
    }

    public static CacheManager getInstance() {
        return Holder.INSTANCE;
    }

    public <K, V> Cache<K, V> createCache(String cacheName, int size) {
        Cache<K, V> cache = new LRUCache<>(size);
        // putIfAbsent makes check-and-insert atomic; a separate containsKey()
        // followed by put() would let two threads register the same name
        if (caches.putIfAbsent(cacheName, cache) != null) {
            throw new IllegalStateException("Cache already exists: " + cacheName);
        }
        return cache;
    }

    // Unchecked cast: the caller must request the same K and V the cache was created with
    @SuppressWarnings("unchecked")
    public <K, V> Cache<K, V> getCache(String cacheName) {
        return (Cache<K, V>) caches.get(cacheName);
    }

    public void removeCache(String cacheName) {
        caches.remove(cacheName);
    }
}

This class manages the lifecycle of caches, ensuring that each cache is unique and easily retrievable by name.

Using the Caching Framework

Now that we’ve built the caching framework, let’s see how developers can integrate it into their projects.

1. Adding the Dependency

First, add the caching framework as a dependency in your project. If it's published on Maven Central or JitPack, you can include it in your pom.xml.

2. Creating and Using a Cache

Here’s how to create and use a cache:

CacheManager cacheManager = CacheManager.getInstance();

// Create a cache with a capacity of 100
Cache<String, String> cache = cacheManager.createCache("myCache", 100);

// Add some data to the cache
cache.put("key1", "value1");
cache.put("key2", "value2");

// Retrieve data from the cache; get() returns null on a miss
CacheEntity<String, String> entity = cache.get("key1");
if (entity != null) {
    System.out.println("Retrieved value: " + entity.getValue()); // Outputs: value1
} else {
    System.out.println("Key not found in cache.");
}

This simple example shows how to store and retrieve data from the cache. Once the cache holds its full capacity of entries, each put of a new key makes LRUCache evict the least recently used entry automatically.

3. Handling Multi-Threaded Access

The cache framework is designed to be thread-safe. Here’s how you can safely use it in a multi-threaded environment:

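// Assumes `cache` is the Cache<String, String> created above (it must be effectively final)
Runnable cacheTask = () -> {
    cache.put("threadKey", "threadValue");
    CacheEntity<String, String> entity = cache.get("threadKey");
    if (entity != null) {
        System.out.println(Thread.currentThread().getName() + " retrieved: " + entity.getValue());
    }
};

Thread thread1 = new Thread(cacheTask);
Thread thread2 = new Thread(cacheTask);
thread1.start();
thread2.start();

// join() waits for each thread to finish; it throws InterruptedException,
// so the enclosing method must declare or handle it
thread1.join();
thread2.join();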

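This code demonstrates how multiple threads can safely access and modify the cache. Each individual call is atomic, but a sequence of calls is not: another thread could remove or evict "threadKey" between the put and the get, which is why the task still checks for null.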
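The introduction mentions a second interview question, how a thread returns a value, which the Runnable above cannot do because run() returns void. A minimal sketch using the JDK's Callable and Future follows; ThreadResultDemo and readInParallel are hypothetical names, and a ConcurrentHashMap stands in for the article's thread-safe Cache so the block compiles on its own.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class ThreadResultDemo {
    // Stand-in for the article's thread-safe Cache<String, String>
    static final Map<String, String> CACHE = new ConcurrentHashMap<>();

    static List<String> readInParallel(List<String> keys)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (String key : keys) {
                // Unlike Runnable.run(), Callable.call() returns a value (and may throw)
                Callable<String> task = () -> CACHE.getOrDefault(key, "miss");
                futures.add(pool.submit(task));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : futures) {
                results.add(future.get()); // blocks until that task has finished
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }
}
```

Results come back in the same order as the keys, because they are collected from the futures in submission order, not in completion order.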

Conclusion

I believe this framework covers the primary features a caching framework should provide, including LRU eviction and thread safety, and that it is a scalable, robust starting point. Below is the GitHub link for this application.

https://github.com/Eomaxl/LLD-CacheFramework

Whether you're working on a high-performance server application or simply need to optimize your code, implementing a caching layer can make a substantial difference. I hope this article provides you with the knowledge and tools to start building your caching solutions in Java.

Feel free to explore the code, extend the framework, and adapt it to your specific needs. Happy coding!


Written by

Sourav Mansingh


Hey there! I'm a Senior Software Engineer with over a decade of experience in crafting web applications and back-end services. My sweet spot is where finance meets technology, and I thrive on building secure, user-friendly financial applications that stand up to the fast-paced demands of the market. With a strong foundation in Java, Spring Boot, and data solutions like Redis, MongoDB, and PostgreSQL, I bring a full-stack perspective to the table, even diving into front-end work with React when needed. I’m all about code quality and collaboration, and I love mentoring others in the tech community—helping drive innovation and growth in the fintech space. On the side, I’m an open-source enthusiast and enjoy sharing what I’ve learned. I’ve put together a three-part series on payment gateway design that’s resonated with over 1700 readers on Medium. Currently, I am exploring Rust as a language and am curious about High Frequency Trading (HFT) applications. Stay tuned as I will be sharing all my thoughts and knowledge as I dive deep into these two topics.