What is Docker? A Beginner's Guide to Understanding Containers

Yash Dugriyal

Before diving deep into Docker, let's get familiar with a few terms.

Hypervisor and Virtual Machines

A hypervisor, also known as a virtual machine monitor (VMM), is software that creates and manages virtual machines (virtual servers) on top of a physical host machine.

Types:

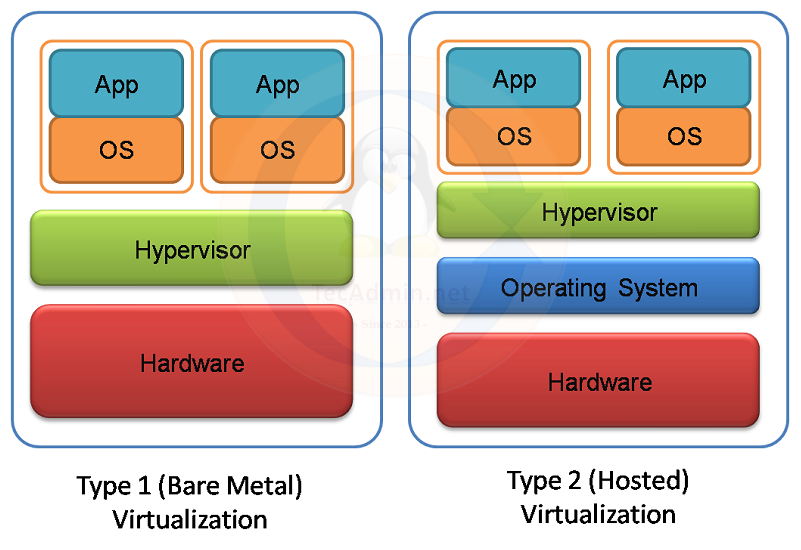

Type 1 (hardware, or bare-metal) hypervisor (e.g., VMware ESXi, Hyper-V)

Type 2 (software, or hosted) hypervisor (e.g., VMware Workstation, VirtualBox)

In Type 1, the hypervisor interacts directly with the hardware. In Type 2, the hypervisor runs on top of a host OS, and that OS in turn interacts with the hardware to execute tasks.

Provisioning virtual machines is not easy, because they carry much more overhead than containers. Resource allocation is also typically not dynamic: a VM's share is fixed when it is provisioned, even if more memory is available on the physical machine.

The allocation cannot grow or shrink automatically with demand. Resizing a single machine like this is usually called scaling up and scaling down (scale out and scale in more often refer to adding or removing machines), and a virtual machine does not offer it out of the box.

Any resource allocated to a virtual machine is reserved for that machine alone. Even if the VM is not using it and another machine needs it, that memory cannot be handed to the other machine, so it simply sits idle.

Finally, running an application in a VM requires a guest OS, which itself needs resources to run. As a result, a large share of the CPU and memory allocated to the VM is consumed by the OS rather than by our application.

What are Containers?

A container is a lightweight, standalone, executable package that includes everything needed to run a piece of software: code, runtime, system tools, libraries, and settings. Here's a clearer breakdown:

Isolation: Containers provide a way to run applications in isolated environments. Each container has its own filesystem, networking, and process space, which means it doesn't interfere with other containers or the host system.

Dependencies Included: Containers package the application along with all its dependencies: OS binaries, libraries, and other necessary resources. This ensures that the application will run consistently regardless of the environment it's deployed in. The one thing a container does not include is a kernel. On Linux, the kernel is the layer that talks to the hardware on behalf of applications, translating their requests into operations the machine can carry out and passing the results back. Containers do not ship their own kernel; they rely on the kernel of the host.

Interaction with Host OS: Containers share the host system's OS kernel but have their own userspace. This is different from virtual machines (VMs), which include a full OS stack. Because of this shared kernel, containers are much lighter and faster to start compared to VMs.

Efficiency and Portability: By including only the necessary parts of the OS (like specific binaries and libraries), containers are much more efficient. They can start and stop quickly, and because they contain everything needed to run the application, they can be easily moved and run on any machine with a container runtime (like Docker).

So, in summary, a container is like a lightweight "sandbox" that encapsulates the application and its environment, ensuring it runs consistently across different computing environments.

Containers primarily share the operating system (OS) kernel of the host machine rather than the hardware itself. Here's a more detailed explanation:

How Containers Work:

OS Kernel Sharing: Containers share the host OS kernel, meaning they use the same core of the operating system that manages hardware resources like CPU, memory, and I/O. This allows multiple containers to run on a single host without the overhead of each having a separate OS, as is the case with virtual machines.

User Space Isolation: Each container has its own isolated user space. This means that within a container, you can have different binaries, libraries, and application dependencies. Even though they share the same kernel, containers don’t have access to each other's processes, network, or filesystem unless explicitly configured.

Lightweight: Since containers don’t need to boot up a full OS, they are much lighter and faster to start compared to virtual machines. They only include the application and its dependencies, not a full-blown operating system.

Portability: The isolation provided by containers allows them to run consistently across different environments, as long as the container runtime (e.g., Docker) is present. This makes it easy to move containers between development, testing, and production environments.

Contrast with Virtual Machines (VMs):

VMs: Each virtual machine has its own OS, which means VMs are heavier and take more resources (RAM, CPU). They virtualize hardware, creating a full machine environment.

Containers: Containers share the host OS kernel but maintain separate user spaces. This makes them more efficient and less resource-intensive, leading to faster startup times and higher density (more containers can run on a single host compared to VMs).

In summary, containers share the host OS kernel but maintain their own isolated environments, which provides the benefits of lightweight, fast, and portable application deployment.

Docker Compose Tool: If you want to do some orchestration on Docker Engine, there is a tool called Docker Compose. You write a file that declares what you want: which containers to create, which image each container uses, which volumes to mount, and which ports to forward. That file is then given as input to Docker Engine.

Writing this file and handing it to Docker Engine is a simple form of orchestration, and it is the job Docker Compose does. All the rules are written in that one file, and Docker Engine uses it to create and configure the containers accordingly.
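To make the idea of "writing a file" more concrete, here is a minimal sketch that builds a small Compose file from a Python dictionary. The keys `services`, `image`, `ports`, and `volumes` are standard Compose keys; the service name `web`, the image `nginx:latest`, the volume name `web_data`, and the `to_yaml` helper are assumptions chosen only for illustration.

```python
def to_yaml(node, indent=0):
    """Render nested dicts and lists of strings as block-style YAML lines.

    A tiny helper for this example only; it is not a general YAML writer.
    """
    pad = "  " * indent
    lines = []
    for key, value in node.items():
        if isinstance(value, dict) and value:
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml(value, indent + 1))
        elif isinstance(value, dict):
            lines.append(f"{pad}{key}: {{}}")  # empty mapping
        elif isinstance(value, list):
            lines.append(f"{pad}{key}:")
            lines.extend(f'{pad}  - "{item}"' for item in value)
        else:
            lines.append(f"{pad}{key}: {value}")
    return lines


# Hypothetical project: one container, one published port, one named volume.
compose = {
    "services": {
        "web": {
            "image": "nginx:latest",                        # image to use
            "ports": ["8080:80"],                           # host:container port forwarding
            "volumes": ["web_data:/usr/share/nginx/html"],  # volume to mount
        }
    },
    "volumes": {"web_data": {}},
}

compose_text = "\n".join(to_yaml(compose)) + "\n"
print(compose_text)
```

Saving the printed text as `compose.yaml` and running `docker compose up` in the same directory should then ask Docker Engine to create the container described in the file.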

Docker Engine: The tool from Docker that works in the background to execute all Docker commands through the OS. It is the actual tool we use to create and run containers.

Docker Engine runs on different platforms, such as virtual machines, bare metal, and the cloud. This is why containers are portable: the engine responsible for running them is available across these platforms.

Accessing Containers from Outside the Docker Host Machine

The term Docker Host Machine refers to the physical or virtual machine where the Docker Engine is installed and running.

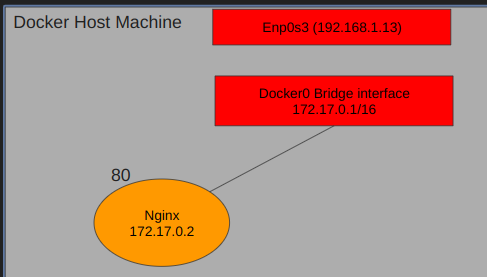

By default, applications running in Docker Engine are not accessible from outside the host, because each container uses a private IP address. Let us look at that behaviour.

Key Terms

Host: This refers to the physical or virtual machine where Docker is installed. It's the computer that runs Docker containers.

Containers: Lightweight, isolated environments that run applications. They share the host's operating system but have their own filesystem, processes, and network interfaces.

Outside World: Any network or system outside of the host machine, like the internet or other computers in your local network.

How Containers Network with the Host and the Outside World

Docker Network Basics:

When you install Docker, it creates a virtual network inside your host machine called the `docker0` bridge. You can think of this bridge like a virtual switch inside the host.

Every time you start a new container, Docker gives it a virtual network card (like a virtual cable) called `eth0`. This card is plugged into the `docker0` bridge, so the container can communicate with the host and other containers.

Communication Inside the Host:

Host to Container: If the host (the physical or virtual machine) needs to communicate with a container, it uses the IP address assigned to the container by Docker. This is like the host sending a letter to the container’s address through the `docker0` bridge.

Container to Host: Containers see the host as having a special IP address (usually `172.17.0.1`). If a container wants to send data to the host, it uses this IP address, and the `docker0` bridge handles the delivery.
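The addressing described above can be sketched with Python's standard `ipaddress` module. This assumes the bridge uses the subnet `172.17.0.0/16`, which is Docker's usual default but may differ on your host; the variable names and the `on_bridge` helper are made up for this example.

```python
import ipaddress

# Assumption: the usual default subnet of the docker0 bridge.
bridge_subnet = ipaddress.ip_network("172.17.0.0/16")

hosts = iter(bridge_subnet.hosts())
gateway = next(hosts)          # first usable address: the host's side of docker0
first_container = next(hosts)  # next address, typically handed to the first container


def on_bridge(address):
    """Return True if the address belongs to the bridge subnet."""
    return ipaddress.ip_address(address) in bridge_subnet


print("host as seen from containers:", gateway)
print("first container address:", first_container)
print("172.17.0.5 on bridge?", on_bridge("172.17.0.5"))
print("192.168.1.10 on bridge?", on_bridge("192.168.1.10"))
```

Addresses inside this subnet are private to the host, which is why the host can reach a container directly while machines elsewhere on the network cannot.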

Communication with the Outside World:

When a container needs to talk to the outside world (like accessing the internet), Docker helps it by using a process called Network Address Translation (NAT). Here’s how it works:

From Container to Outside: When a container sends a request to the internet, it first goes through the `docker0` bridge. Docker then changes the container's IP address to the host's IP address (this is NAT) and sends it out. This way, the container’s identity is hidden, and it looks like the request is coming from the host itself.

From Outside to Container: By default, containers can’t be accessed directly from outside the host. But if you want to allow access, you can use port mapping. For example, `docker run -p 8080:80 myapp` makes the container’s internal port 80 accessible via port 8080 on the host. So if someone in the outside world tries to reach the host’s IP on port 8080, Docker will redirect that to the container’s port 80.
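The two directions of translation can be modelled in a few lines of Python. This is a simplified sketch of the idea, not how Docker implements it: the addresses `192.168.1.10` and `172.17.0.2`, the `Packet` class, and the function names are all assumptions for illustration, and real NAT may also rewrite the source port.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


HOST_IP = "192.168.1.10"     # hypothetical address of the host on the local network
CONTAINER_IP = "172.17.0.2"  # hypothetical address of the container on docker0


def parse_publish(spec, container_ip):
    """Turn a '-p HOST:CONTAINER' value such as '8080:80' into a mapping entry."""
    host_port, container_port = (int(part) for part in spec.split(":"))
    return {host_port: (container_ip, container_port)}


def outbound(packet):
    """Container to outside: the source address is rewritten to the host's."""
    return replace(packet, src_ip=HOST_IP)


def inbound(packet, published):
    """Outside to container: only published host ports are forwarded."""
    target = published.get(packet.dst_port)
    if packet.dst_ip != HOST_IP or target is None:
        return None  # port not published, so the container stays unreachable
    container_ip, container_port = target
    return replace(packet, dst_ip=container_ip, dst_port=container_port)


published = parse_publish("8080:80", CONTAINER_IP)

request_out = outbound(Packet(CONTAINER_IP, 40000, "93.184.216.34", 443))
request_in = inbound(Packet("203.0.113.7", 51000, HOST_IP, 8080), published)
blocked = inbound(Packet("203.0.113.7", 51000, HOST_IP, 9090), published)

print(request_out)
print(request_in)
print(blocked)
```

The outgoing packet leaves with the host's address as its source, the packet to port 8080 is redirected to the container's port 80, and the packet to an unpublished port is dropped in this model.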

Custom Networks:

Besides the default `docker0` bridge, you can create custom networks. This is useful if you want to group certain containers together and keep others separate for security or organizational reasons. For example, containers in one custom network can talk to each other but not to containers in another network.
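The grouping rule for custom networks can be expressed as a small membership check. This is only a model of the behaviour described here; the network names `frontend` and `backend`, the container names, and the `can_talk` function are hypothetical.

```python
# Hypothetical layout: each custom network lists the containers attached to it.
# A container may be attached to more than one network ("api" is on both).
networks = {
    "frontend": {"web", "api"},
    "backend": {"api", "db"},
}


def can_talk(a, b, networks):
    """Two containers can reach each other if they share at least one network."""
    return any(a in members and b in members for members in networks.values())


print("web <-> api:", can_talk("web", "api", networks))
print("api <-> db: ", can_talk("api", "db", networks))
print("web <-> db: ", can_talk("web", "db", networks))
```

In this layout `web` can never reach `db` directly, which is the kind of separation custom networks are meant to provide.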

Summary

The host is the machine running Docker. It provides the physical resources for containers.

Containers are like virtual rooms with their own network cables (`eth0`), connected to a central switch (`docker0`).

The outside world is everything outside of the host, like the internet.

Docker uses NAT to let containers communicate with the outside world while keeping their IP addresses hidden.

You can control access to containers from the outside world using port mapping and create isolated groups of containers with custom networks.

This setup allows Docker containers to run applications in isolated environments, ensuring they can communicate when necessary while remaining secure and efficient.

Understanding Docker Networking: The Role of enp0s3 and docker0

In Docker's networking model, understanding how containers interact with the host and the outside world is crucial. Here’s a simplified overview:

enp0s3: This is a network interface on the Docker host machine, often used to connect the host to external networks like the internet. It handles traffic going in and out of the host.

Docker Containers: Each container connects to the Docker host through a virtual network interface (e.g., `eth0`) and communicates with other containers via the `docker0` bridge.

docker0 Bridge: This virtual network bridge connects all containers to each other and to the host. It allows internal communication among containers and routes traffic between containers and the host.

Network Address Translation (NAT): Docker uses NAT to enable containers to access external networks. When a container sends traffic to the outside world, Docker modifies the packets’ source address to the host’s IP address before they leave the host, and it handles incoming traffic through port mapping.

In essence, Docker’s docker0 bridge and host interfaces like enp0s3 work together to manage network communication, allowing containers to interact with each other, the host, and external systems.
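If you want to check which of these interfaces exist on your own host, a short sketch using Python's standard `socket.if_nameindex()` is shown below. The interface name `enp0s3` is just the example used in this article and will often be different on your machine; the `present` helper is made up for this example.

```python
import socket


def present(wanted, available):
    """Report, for each wanted interface name, whether it is available."""
    return {name: name in available for name in wanted}


# Names of the network interfaces the operating system currently exposes.
available = [name for _index, name in socket.if_nameindex()]

print("interfaces on this machine:", available)
print(present(["enp0s3", "docker0"], available))
```

On a host where Docker Engine is installed and running with its default bridge, `docker0` would normally appear in the list alongside the physical interface.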

As you continue to explore Docker and integrate it into your projects, you'll find that its capabilities extend far beyond the basics covered here. Dive deeper into Docker's advanced features, such as orchestration with Docker Compose and Kubernetes, to fully harness the potential of containerization.

Happy containerizing! If you have any questions or need further clarification, feel free to reach out or explore additional resources to enhance your Docker journey.
