Mounting Amazon EFS in Kubernetes: A Comprehensive Guide for On-Prem and AWS Environments

Hardik Bansal

Table of contents

- Understanding the Application's Unique Storage Requirements

- Why Amazon EFS?

- Prerequisites

- Essential Requirements for Setting Up EFS

- Step 1: Create and Configure Amazon EFS

- Step 2: Mount EFS on EC2 Instance

- Step 3: Install and Configure SSHFS on each On-Premises VM

- Step 4: Mount EFS from EC2 to each On-Premises VM Using SSHFS

- Step 5: Verifying Your EFS Setup

- Step 6: Configuring Persistent Volumes and Claims

- Integrating EFS with Your Applications

- Mounting EFS in AWS Lambda Functions

- Conclusion

- Additional Resources and Guides

In a recent project at my organization, we faced an unusual challenge: deploying a microservice-based application in Kubernetes that needed shared storage accessible both on-premises and within AWS. While the internet is flooded with guides for EFS integration in AWS environments, finding a solution that catered to our hybrid setup was a daunting task. After extensive research and experimentation, I developed a workable solution that I believe could benefit others facing similar challenges.

This blog outlines my approach to mounting Amazon Elastic File System (EFS) in a Kubernetes cluster deployed across both on-premises VMs and AWS using Amazon EKS. Whether you’re dealing with a similar hybrid environment or just looking for insights on EFS integration, this guide is for you.

Understanding the Application's Unique Storage Requirements

Our application, deployed as a Kubernetes pod, spins up AWS Lambda functions on demand, and the pod and the functions need shared access to the same data. We maintain two environments: a development environment hosted on on-premises VMs and a production environment in AWS using EKS. To meet the shared storage needs, we chose Amazon EFS for its flexibility and robust features. However, integrating EFS with our on-premises Kubernetes cluster posed some unique challenges.

Why Amazon EFS?

Amazon EFS provides serverless, elastic file storage that scales automatically, making it an ideal solution for applications requiring shared access and dynamic scalability. Key features include:

Scalability: EFS automatically scales as files are added or removed, providing virtually unlimited storage without the need to manage volume sizes.

Shared Access: Multiple EC2 instances and Lambda functions can concurrently access the same EFS file system, facilitating seamless data sharing.

High Availability: EFS is designed for high availability and durability, with data redundancy across multiple Availability Zones (AZs) within a region.

Managed Service: AWS handles infrastructure management and maintenance, reducing administrative overhead.

Performance: EFS delivers consistent, low-latency performance suitable for a wide range of workloads, including latency-sensitive applications.

Seamless Integration: EFS integrates effortlessly with other AWS services like EC2, Lambda, and ECS.

Prerequisites

Before diving into the setup, ensure you have the following:

An AWS account with access to EFS, EC2, and VPC services.

An on-premises VM with SSH access.

An EC2 instance running in AWS.

An AWS Direct Connect or VPN connection established between your on-premises network and AWS VPC (for production, though a VPN workaround is discussed for development).

Essential Requirements for Setting Up EFS

To carry network traffic between your AWS VPC and on-premises VMs, you would typically use AWS Direct Connect or an AWS VPN connection. However, given the cost implications, especially for a development environment, this guide explores a cost-effective workaround using existing VPN infrastructure.

Without such a link, an on-premises VM cannot reach the EFS mount targets, which are only addressable from inside the VPC, so EFS cannot be mounted there directly over NFS. Instead, we mount EFS on an EC2 instance and relay that directory to the on-premises VMs with SSHFS (SSH File System). SSHFS securely mounts a remote file system over an SSH connection, making the remote files accessible as if they were on your local machine.

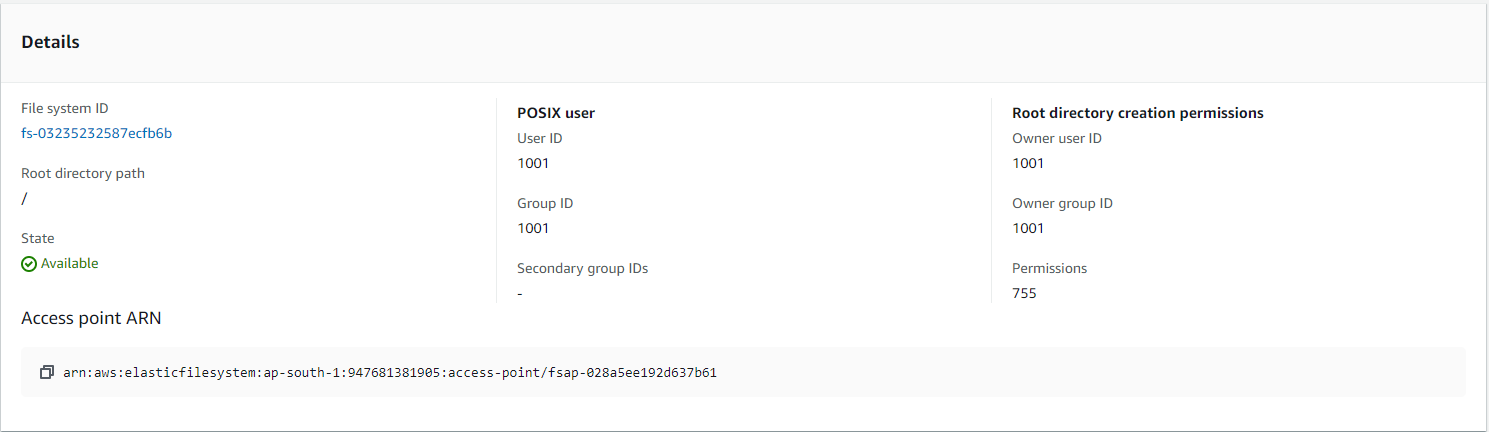

Step 1: Create and Configure Amazon EFS

- Log in to the AWS Management Console.
- Navigate to the EFS service and create a new file system with the following configuration:
  - Create a mount target in a public subnet in each Availability Zone (AZ).
  - Create a security group for EC2 with:
    - Inbound rule: port 22 for SSH access (source: your VPN CIDR).
    - Outbound rule: all traffic to anywhere (0.0.0.0/0).
  - Attach another security group to your EFS mount targets with:
    - Inbound rule: port 2049 for NFS traffic (source: the EC2 security group created above).
    - Outbound rule: all traffic to anywhere (0.0.0.0/0).
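If you prefer the AWS CLI to the console, the two inbound rules above can be sketched roughly as follows. The helper names `run` and `authorize_efs_rules`, the security group IDs, and the CIDR are placeholders of my own, not values from this setup. The script only prints the commands unless you set `DRY_RUN=0`, so you can review them first. The outbound rules need no command here because a new security group allows all outbound traffic by default.

```shell
# Dry-run wrapper: prints the command unless DRY_RUN=0 is set explicitly.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper. All three arguments are placeholders you must supply:
#   $1 = EC2 security group ID, $2 = EFS security group ID, $3 = VPN CIDR
authorize_efs_rules() {
  # SSH (port 22) into the EC2 instance, only from the VPN range.
  run aws ec2 authorize-security-group-ingress \
    --group-id "$1" --protocol tcp --port 22 --cidr "$3"
  # NFS (port 2049) into the EFS mount targets, only from the EC2 security group.
  run aws ec2 authorize-security-group-ingress \
    --group-id "$2" --protocol tcp --port 2049 --source-group "$1"
}

authorize_efs_rules sg-EC2-PLACEHOLDER sg-EFS-PLACEHOLDER 10.8.0.0/24
```

Referencing the EC2 security group as the source of the NFS rule, rather than an IP range, keeps the rule valid even if the instance's private IP changes.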

Step 2: Mount EFS on EC2 Instance

Choose an existing EC2 instance (or spin up a t2.nano instance) within the same VPC as your EFS and attach the EC2 security group created earlier.

Connect to your EC2 instance using SSH.

Install the NFS client package using the package manager (e.g., `sudo yum install -y nfs-utils` for Amazon Linux).

Create a mount point for EFS (e.g., `sudo mkdir -p /mnt/efs/fs1`).

Mount the EFS file system on the EC2 instance, either by attaching it when you launch the instance from the console or by running the mount command after you SSH into the machine:

sudo mount -t nfs4 -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport file-system-id.efs.aws-region.amazonaws.com:/ /mnt/efs/fs1

- Give read and write permissions to this mount point

sudo chmod 777 /mnt/efs/fs1
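The `file-system-id.efs.aws-region.amazonaws.com` placeholder in the mount command follows a fixed pattern, so a small helper can assemble it for you. The sketch below only prints the command for review; the function names and the `fs-PLACEHOLDER` ID are hypothetical, and the mount options are copied from the command above.

```shell
# Hypothetical helper: derive the EFS DNS name from a file system ID and region.
#   $1 = file system ID, $2 = AWS region
efs_dns_name() {
  printf '%s.efs.%s.amazonaws.com\n' "$1" "$2"
}

# Print (do not run) the NFS mount command so it can be reviewed first.
#   $1 = file system ID, $2 = AWS region, $3 = local mount point
efs_mount_cmd() {
  printf 'sudo mount -t nfs4 -o %s %s:/ %s\n' \
    'nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport' \
    "$(efs_dns_name "$1" "$2")" "$3"
}

efs_mount_cmd fs-PLACEHOLDER us-east-1 /mnt/efs/fs1
```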

Step 3: Install and Configure SSHFS on each On-Premises VM

- Install SSHFS on your on-premises VM. For most Linux distributions, this can be done via the package manager.

sudo apt-get install sshfs

or, on RHEL/CentOS-based systems:

sudo yum install -y epel-release

sudo yum install -y fuse-sshfs

- Create a local directory to serve as the mount point for the remote EFS (e.g., `sudo mkdir -p /mnt/efs`).

Step 4: Mount EFS from EC2 to each On-Premises VM Using SSHFS

- Use the `sshfs` command to mount the EFS directory from the EC2 instance on your on-premises VM, then change the permissions so that the directory is readable and writable:

sudo sshfs -o allow_other,cache=no,IdentityFile=/path/to/private/key.pem user@ec2-instance-public-ip:/mnt/efs/fs1 /mnt/efs/

sudo chmod +wr -R /mnt/efs

Replace placeholders with actual values:

- `/path/to/private/key.pem`: path to your SSH private key.
- `user`: username for the EC2 instance.
- `ec2-instance-public-ip`: public IP of the EC2 instance.

To unmount, run `sudo umount -l /mnt/efs`.

Step 5: Verifying Your EFS Setup

Check the mounted directories using the following command:

df -T -h

You should see the directory from the EC2 instance listed with file system type fuse.sshfs, mounted at /mnt/efs, alongside your other mounted storage.

Test read/write operations to confirm successful EFS access from the on-premises VM.
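One way to script that read/write test is a small round-trip check: write a token to a temporary file, read it back, and clean up. This is a minimal sketch; `verify_rw` and the file name it uses are names I made up for illustration.

```shell
# Hypothetical helper: returns 0 if a file can be written to, read back from,
# and removed from the directory given as $1; returns non-zero otherwise.
verify_rw() {
  [ -d "$1" ] || return 1
  vrw_file="$1/.efs-rw-check.$$"
  vrw_token="efs-check-$$"
  # Write a token, read it back, then remove the probe file.
  printf '%s\n' "$vrw_token" > "$vrw_file" 2>/dev/null || return 1
  vrw_read="$(cat "$vrw_file" 2>/dev/null)"
  rm -f "$vrw_file"
  [ "$vrw_read" = "$vrw_token" ]
}

# Usage on the on-premises VM:
#   verify_rw /mnt/efs && echo "read/write OK" || echo "read/write FAILED"
```

Running the same check on the EC2 instance against /mnt/efs/fs1 helps tell an EFS problem apart from an SSHFS problem.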

Ensure persistence across reboots by adding the SSHFS mount command to your VM’s startup scripts.
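As an alternative to a startup script, the mount can be declared in /etc/fstab using the `fuse.sshfs` file system type. The sketch below prints a candidate line built from the same placeholders as the `sshfs` command in Step 4; `sshfs_fstab_line` is a hypothetical helper, and the `_netdev` and `reconnect` options are my additions rather than part of the original command.

```shell
# Hypothetical helper: print an /etc/fstab line for an SSHFS mount.
#   $1 = user, $2 = EC2 host or IP, $3 = remote dir, $4 = local dir, $5 = key path
sshfs_fstab_line() {
  # _netdev delays the mount until networking is up; reconnect re-establishes
  # the SSH session if it drops.
  printf '%s@%s:%s %s fuse.sshfs _netdev,allow_other,reconnect,IdentityFile=%s 0 0\n' \
    "$1" "$2" "$3" "$4" "$5"
}

# Review the output, then append it to /etc/fstab by hand.
sshfs_fstab_line user ec2-instance-public-ip /mnt/efs/fs1 /mnt/efs /path/to/private/key.pem
```

Mounts from /etc/fstab run as root at boot, so the private key must be readable by root and the EC2 host key should already be in root's known_hosts file.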

Note: Ensure that all security groups and network ACLs allow the necessary traffic between your on-premises VM, EC2 instance, and EFS.

Step 6: Configuring Persistent Volumes and Claims

Create a Persistent Volume (PV) manifest like the one below, and make sure that the hostPath matches the directory where the EFS share is mounted on each node (/mnt/efs in this guide). A PV is cluster-scoped, so it takes no namespace.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: test-efs-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: manual
  hostPath:
    path: /mnt/efs

Create a Persistent Volume Claim (PVC) manifest to request the desired storage.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-efs-pvc
  namespace: efs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: manual

Integrating EFS with Your Applications

To use EFS in your on-premises Kubernetes applications, include the PVC in your pod manifest file:

# Under spec.containers[].
volumeMounts:
  - mountPath: /mnt/efs
    name: efs-storage

# Under spec.
volumes:
  - name: efs-storage
    persistentVolumeClaim:
      claimName: test-efs-pvc
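To show where those two fragments sit in a complete manifest, the sketch below writes a minimal Pod definition to a temporary file. The pod name, container name, image, and command are placeholders of mine; only the volume and claim names come from the manifests above. The pod is placed in the `efs` namespace because a pod can only use a PVC from its own namespace.

```shell
# Write a minimal Pod manifest that mounts the PVC; nothing is applied here.
manifest="${TMPDIR:-/tmp}/efs-demo-pod.yaml"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: efs-demo            # hypothetical name
  namespace: efs            # must match the PVC's namespace
spec:
  containers:
    - name: app             # hypothetical container
      image: busybox        # placeholder image
      command: ["sh", "-c", "ls /mnt/efs && sleep 3600"]
      volumeMounts:
        - mountPath: /mnt/efs
          name: efs-storage
  volumes:
    - name: efs-storage
      persistentVolumeClaim:
        claimName: test-efs-pvc
EOF
echo "wrote $manifest"
# Then, against your cluster: kubectl apply -f "$manifest"
```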

Mounting EFS in AWS Lambda Functions

To integrate Amazon EFS with AWS Lambda functions, you can easily attach the EFS file system through the Lambda console under the configuration settings.

Important Note: Before proceeding with the EFS attachment, ensure the following prerequisites are met:

Private Subnets: Your Lambda function must be associated with private subnets within the same VPC that contains your EFS file system.

VPC Configuration: Ensure that the VPC where your EFS is hosted is correctly configured with the necessary network settings to allow access from Lambda.

IAM Role: Assign an IAM role to your Lambda function that has the necessary permissions to access the EFS file system. This role should include the required policies for mounting and accessing EFS.

Once these prerequisites are in place, you can directly attach the EFS file system in the Lambda console, enabling seamless access to shared storage within your serverless applications.
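The same attachment can be scripted with the AWS CLI. Lambda mounts EFS through an EFS access point and requires the local mount path to start with `/mnt/`, so the sketch below takes an access point ARN rather than a file system ID. The helper name, function name, and ARN are placeholders I chose, and the command is only printed for review, not executed.

```shell
# Hypothetical helper: print the CLI command that attaches an EFS access point
# to a Lambda function.
#   $1 = function name, $2 = EFS access point ARN, $3 = local mount path
lambda_attach_efs_cmd() {
  # Lambda only accepts local mount paths under /mnt/.
  case "$3" in
    /mnt/?*) ;;
    *) echo "local mount path must start with /mnt/" >&2; return 1 ;;
  esac
  printf 'aws lambda update-function-configuration --function-name %s --file-system-configs Arn=%s,LocalMountPath=%s\n' \
    "$1" "$2" "$3"
}

lambda_attach_efs_cmd my-function \
  "arn:aws:elasticfilesystem:REGION:ACCOUNT_ID:access-point/fsap-PLACEHOLDER" /mnt/efs
```

Using /mnt/efs as the local path keeps file paths identical between the Lambda functions and the pods.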

Conclusion

Integrating Amazon EFS with Kubernetes, whether on-premises or within AWS, gives your applications scalable, highly available shared storage that also works with other AWS services such as Lambda. While setting up EFS in an AWS EKS environment is straightforward, mounting it in an on-premises Kubernetes cluster requires additional steps, such as relaying the file system through an EC2 instance with SSHFS. By following the steps outlined in this guide, you can configure and use EFS in both environments.

Additional Resources and Guides
