How Discord Stores Trillions of Messages

Kamran Ali

Kamran Ali

Discord shared its journey from storing 1,000,000,000 (Billion) messages to 1,000,000,000,000 (Trillion) messages

Issues faced when Cassandra Cluster grew from 12 Nodes (in 2017) to 177 Nodes (in 2022)

Problems

In 2017, 12 Cassandra nodes were storing Billions of messages which grew to 177 nodes storing Trillions of messages.

It became a high-toil system, with the following issues

Unpredictable Latencies

The on-call team frequently gets paged for database issues

Multiple Concurrent Reads and Uneven Discord Server sizes hot-spotting a partition, (given Read in Cassandra are more expensive than Writes) affecting latency across the entire cluster

Compactions were falling behind creating cascading latency as a node tried to compact

To avoid GC pauses (which would cause significant latency spikes) a large amount of time was spent tuning the JVM’s garbage collector and heap settings

Architecture Changes

Fixing Hot Partitions

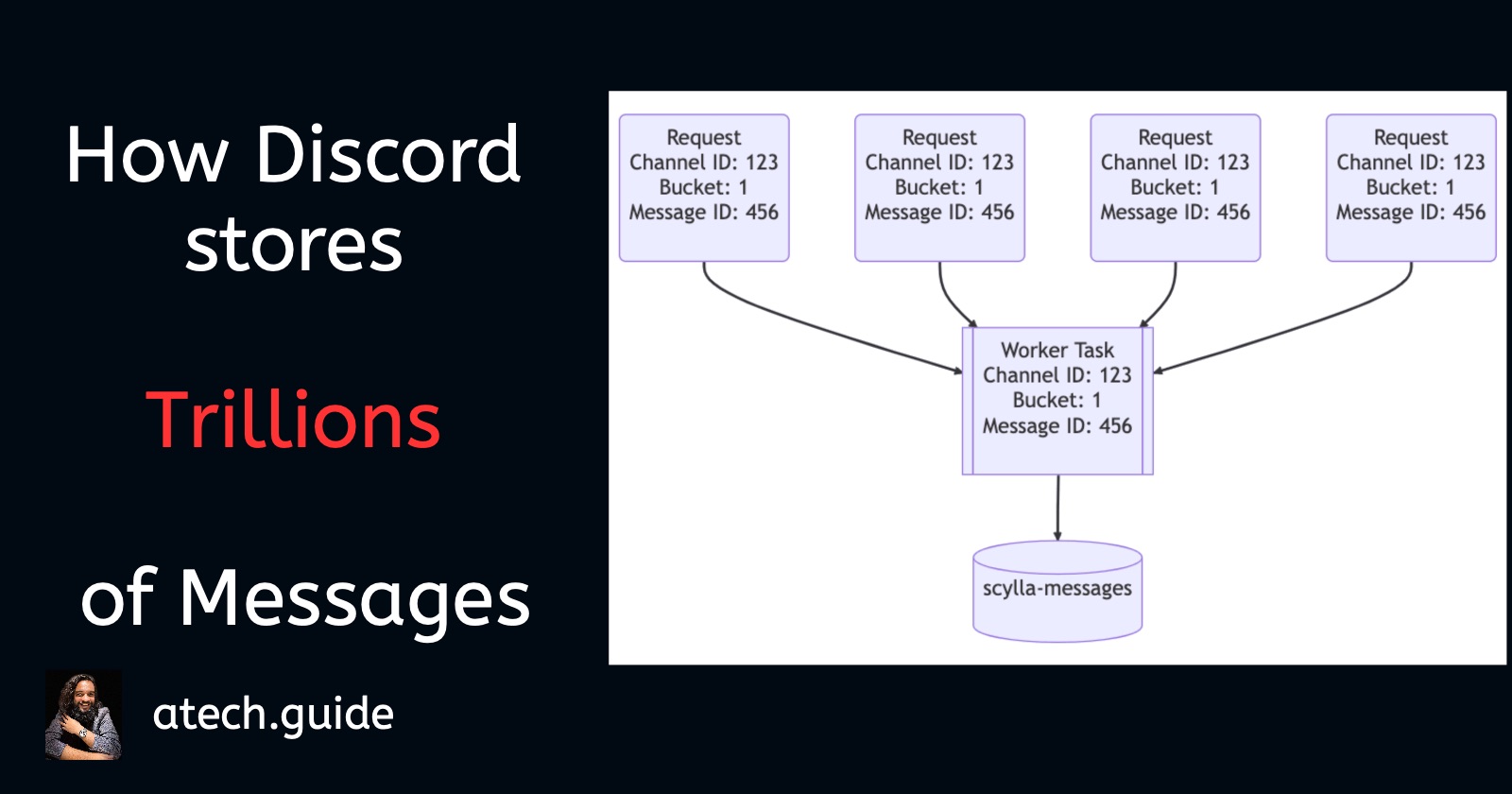

Enabling Request Coalescing (Query DB once for multiple requests for the same data) by building Data Services in Rust, as intermediary services that sit between API and database clusters containing roughly one gRPC endpoint per database query and no business logic

Building efficient consistent hash-based routing to the data services which routes requests for the same channel to the same instance of the service

Migration to Scylla DB

The data service library was extended to perform large-scale data migrations which read token ranges from a database, checkpoints them locally via SQLite, and then firehoses them into ScyllaDB

The estimated time was 9 days with a speed of 3.2 million per second

With an additional hiccup of intervention to compact gigantic ranges of tombstones (that were never compacted in Cassandra), migration was complete

Automated data validation was performed by sending a small percentage of reads to both databases and comparing the results

Post Migration

Decrease in Node count from 177 Cassandra Nodes to 72 ScyllaDB nodes

Each ScyllaDB node has 9 TB of disk space, up from the average of 4 TB per Cassandra node

Improved Tail latencies from p99 of between 40-125ms on Cassandra to 15ms p99 on ScyllaDB

Improved message insert performance from 5-70ms p99 on Cassandra, to a steady 5ms p99 on ScyllaDB

Reference and Image Credit

Subscribe to my newsletter

Read articles from Kamran Ali directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Kamran Ali

Kamran Ali

Hi, I'm Kamran Ali. I have ~11.5 years of experience in Designing and Building Transactional / Analytical Systems. I'm actually getting paid while pursuing my Hobby and its already been a decade. In my next decade, I'm looking forward to guide and mentor engineers with experience and resources