k8s-01: Understanding Kubernetes: A Deep Dive into Container Orchestration

Amrit Poudel

Introduction to Containers and Orchestration

Docker is one of the most well-known container engines, revolutionizing how we package and deploy applications. Containers offer lightweight, consistent, and isolated environments for running applications, making them highly portable across different environments.

While Docker provides the platform for creating and managing containers, container orchestration tools are essential for managing these containers at scale in a production environment. Two of the most popular orchestration tools are Kubernetes and Docker Swarm.

Why Kubernetes is Preferred Over Docker Swarm

Though Docker Swarm is simple and tightly integrated with Docker, it has several limitations that have led to Kubernetes becoming the preferred choice for container orchestration. Here are some key reasons:

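Less specialized TLS secrets management: Docker Swarm does provide encrypted secrets, but Kubernetes adds a dedicated TLS Secret type (kubernetes.io/tls) that Ingress resources can reference directly to serve HTTPS.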

Limited Role-Based Access Control (RBAC): Swarm’s access control capabilities are minimal compared to Kubernetes, which offers robust RBAC to control access at a granular level.

Namespace Isolation: Kubernetes allows you to create isolated environments within the same cluster using namespaces, a feature not available in Docker Swarm.

Stateful Workloads Management: Kubernetes excels at managing stateful applications, offering StatefulSets that give each pod a stable, unique network identity and its own persistent storage.

Probes for Health Checks: Kubernetes offers Liveness Probes, which restart a container that stops responding, and Readiness Probes, which keep traffic away from a pod until it reports that it is ready to serve.

Extensive Ecosystem and Flexibility: Kubernetes can be extended with Custom Resource Definitions (CRDs) and custom controllers, and a vast ecosystem of tools and managed cloud services builds on it.
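To make the RBAC and namespace points concrete, here is a minimal Python sketch of how namespaced, rule-based permissions can be evaluated. It is a simplified model, not the Kubernetes API: Rule, Role, and is_allowed are hypothetical names, and real RBAC also involves RoleBindings, ClusterRoles, API groups, and subjects.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    # Hypothetical, simplified policy rule: which verbs are allowed on
    # which resource types (real rules also list API groups).
    resources: frozenset
    verbs: frozenset


@dataclass
class Role:
    # Like a Kubernetes Role, this one only applies inside one namespace.
    name: str
    namespace: str
    rules: list = field(default_factory=list)


def is_allowed(roles, namespace, resource, verb):
    """Return True if any of the user's roles permits the request.

    Kubernetes RBAC is purely additive (there are no deny rules), so a
    request is allowed only when at least one rule matches it.
    """
    for role in roles:
        if role.namespace != namespace:
            continue  # a namespaced Role never grants access elsewhere
        for rule in role.rules:
            if resource in rule.resources and verb in rule.verbs:
                return True
    return False


# A read-only role for pods in the "dev" namespace.
pod_reader = Role(
    name="pod-reader",
    namespace="dev",
    rules=[Rule(frozenset({"pods"}), frozenset({"get", "list", "watch"}))],
)

print(is_allowed([pod_reader], "dev", "pods", "list"))    # True
print(is_allowed([pod_reader], "dev", "pods", "delete"))  # False
print(is_allowed([pod_reader], "prod", "pods", "list"))   # False
```

The same role grants nothing in the "prod" namespace, which is the combination of namespace isolation and fine-grained RBAC that Swarm lacks.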

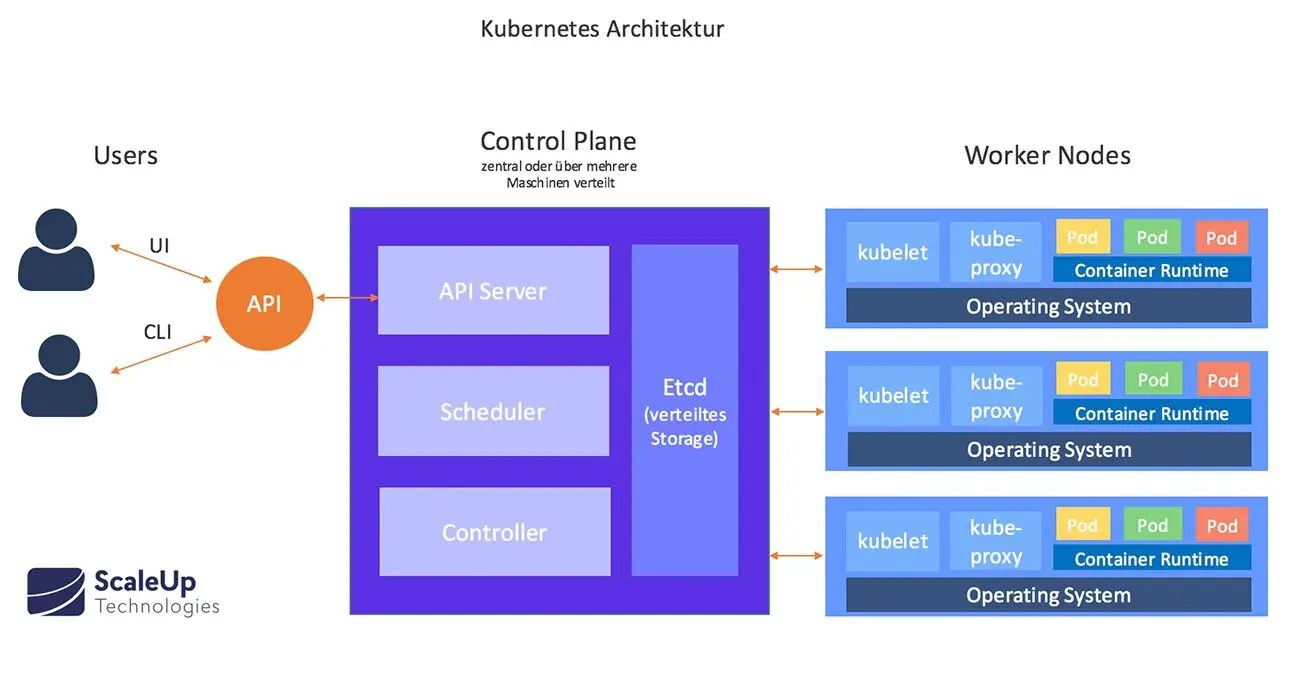

The Architecture of Kubernetes

Kubernetes architecture is divided into two main planes: the Control Plane and the Data Plane.

Control Plane

Kube-API Server: The heart of the control plane, this component exposes the Kubernetes API. All interactions with the cluster, whether from users, CI/CD pipelines, or other Kubernetes components, go through this server.

Scheduler: This component is responsible for assigning pods to nodes in the cluster based on resource availability, constraints, and policies.

etcd: A distributed key-value store that stores all the cluster’s configuration data and the state of the cluster. It’s the single source of truth for Kubernetes.

kubectl: Strictly speaking a client rather than a control plane component, kubectl is the command-line tool used to interact with the Kubernetes API. It helps in managing cluster resources, deploying applications, and troubleshooting.

Controller Manager: This includes several controllers that manage different aspects of the cluster’s state. Key controllers include:

Node Controller: Monitors the health of nodes.

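Replication Controller: Ensures that the desired number of pod replicas is running at any time. In current Kubernetes versions this job is done by the ReplicaSet controller (driven by the Deployment controller); the older ReplicationController resource is still supported but rarely used.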
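The Scheduler's placement decision works in two phases: filtering out nodes that cannot run a pod, then scoring the remaining ones. The sketch below assumes a single toy scoring rule ("most CPU left over wins") and hypothetical Node, Pod, and schedule names; the real kube-scheduler runs many filter and score plugins covering taints, affinity, spreading, and more.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class Pod:
    name: str
    cpu_request_millicores: int
    memory_request_mib: int


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Pick a node name for the pod, or None if no node fits."""
    # Filtering: drop nodes that cannot satisfy the pod's resource requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_request_millicores
        and n.free_memory_mib >= pod.memory_request_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending

    # Scoring (toy rule): prefer the node with the most CPU left after
    # placement; ties are broken alphabetically by node name.
    best = min(
        feasible,
        key=lambda n: (-(n.free_cpu_millicores - pod.cpu_request_millicores), n.name),
    )
    return best.name


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=4000, free_memory_mib=256),
]
print(schedule(Pod("nginx", 250, 512), nodes))  # node-b (node-c lacks memory)
print(schedule(Pod("big", 8000, 128), nodes))   # None
```

Note that node-c has the most free CPU but is filtered out before scoring because it cannot meet the memory request.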

Data Plane

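Kubelet: This is an agent running on each node in the cluster. It ensures that the containers of the pods assigned to its node are running as expected and reports their status back to the control plane. Unlike many cluster add-ons, the kubelet is not deployed as a DaemonSet: it runs directly on each node, typically as a system service managed by systemd, because it is the component that starts pods in the first place.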

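Kube-Proxy: This component maintains network rules (for example, iptables or IPVS rules) on each node so that traffic sent to a Service is routed to one of the pods behind it. Direct pod-to-pod connectivity is provided by the cluster's network plugin (CNI).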

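Container Runtime Interface (CRI): Kubernetes uses CRI to interact with the underlying container runtime (e.g., containerd or CRI-O), which actually runs the containers. Since Kubernetes 1.24 removed the built-in dockershim, Docker Engine can only be used through the cri-dockerd adapter.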
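As a rough mental model of what Kube-Proxy's rules achieve, the sketch below maps a Service's virtual IP to its backing pods and only routes to pods that are currently ready, which is also where readiness probes come into play. The ServiceTable class, the IP addresses, and the round-robin choice are illustrative assumptions; kube-proxy itself programs iptables or IPVS rules in the kernel rather than proxying connections in Python.

```python
class ServiceTable:
    """Toy model of how traffic for a Service VIP reaches a backing pod."""

    def __init__(self):
        self._endpoints = {}  # Service VIP -> {pod IP: is_ready}
        self._next = {}       # Service VIP -> round-robin counter

    def set_endpoints(self, vip, endpoints):
        # Replace the endpoint set, as kube-proxy would after seeing an
        # update from the API server (e.g. a pod became ready or went away).
        self._endpoints[vip] = dict(endpoints)
        self._next[vip] = 0

    def route(self, vip):
        # Only ready pods receive traffic; a failing readiness probe
        # removes a pod from this list without restarting it.
        ready = sorted(ip for ip, ok in self._endpoints.get(vip, {}).items() if ok)
        if not ready:
            raise LookupError(f"no ready endpoints for Service {vip}")
        choice = ready[self._next[vip] % len(ready)]
        self._next[vip] += 1
        return choice


table = ServiceTable()
table.set_endpoints(
    "10.96.0.10",
    {"10.244.1.5": True, "10.244.2.7": True, "10.244.3.9": False},
)
print([table.route("10.96.0.10") for _ in range(3)])
# ['10.244.1.5', '10.244.2.7', '10.244.1.5']
```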

How Kubernetes Works: A Command-Driven Journey

When you execute a command using kubectl, for example, kubectl create deployment nginx --image=nginx, here's what happens behind the scenes:

Command Execution: The kubectl command is sent to the Kube-API Server. This server is the gateway for all requests to the Kubernetes cluster.

Authentication and Authorization: The API server authenticates the request and checks whether the user has the necessary permissions (using RBAC).

Admission Controllers: If the request passes authentication and authorization, it goes through admission controllers, which may first mutate it (for example, to fill in default values) and then validate it against cluster policies.

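Persistence in etcd: The API server stores the new Deployment object, which records the desired state (e.g., running nginx pods), in etcd.

Scheduler Assignment: The Deployment and ReplicaSet controllers in the Controller Manager then create the Pod objects. The Scheduler detects these new pods, which have not yet been assigned to a node, and assigns each one to an appropriate node based on resource availability and other constraints.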

Pod Creation: The Kubelet on the assigned node sees that a pod has been bound to it, has the container runtime pull the required image (nginx in this case), and starts the container.

Continuous Monitoring: The Controller Manager ensures that the actual state of the cluster matches the desired state. If a pod fails, the ReplicaSet controller creates a replacement.

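Networking: Once the deployment is exposed through a Service (for example, with kubectl expose deployment nginx --port=80), Kube-Proxy updates each node's network rules so that traffic to the Service is routed to the new pods, making the application accessible.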
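The authentication, authorization, admission, and persistence steps above form a pipeline in which any stage can reject the request before anything reaches etcd. The following sketch models that ordering with plain Python functions; every name in it (handle_create, Rejected, the token and permission tables) is hypothetical, the STORE dictionary merely stands in for etcd, and the key layout is illustrative.

```python
class Rejected(Exception):
    """Raised when a stage of the pipeline refuses the request."""


# Hypothetical stand-ins for API server configuration and for etcd.
TOKENS = {"token-alice": "alice"}                   # bearer token -> user
PERMISSIONS = {("alice", "create", "deployments")}  # (user, verb, resource)
STORE = {}                                          # key -> object


def authenticate(token):
    user = TOKENS.get(token)
    if user is None:
        raise Rejected("401 Unauthorized")
    return user


def authorize(user, verb, resource):
    if (user, verb, resource) not in PERMISSIONS:
        raise Rejected("403 Forbidden")


def mutating_admission(obj):
    # Example mutation: fill in a default replica count if none was given.
    obj.setdefault("replicas", 1)
    return obj


def validating_admission(obj):
    if obj["replicas"] < 0:
        raise Rejected("replicas must be non-negative")


def handle_create(token, resource, obj):
    user = authenticate(token)
    authorize(user, "create", resource)
    obj = mutating_admission(dict(obj))  # mutation runs before validation
    validating_admission(obj)
    # Illustrative key layout for the stored object.
    key = f"/registry/{resource}/{obj['namespace']}/{obj['name']}"
    STORE[key] = obj  # only a fully admitted object is persisted
    return key


key = handle_create(
    "token-alice", "deployments",
    {"name": "nginx", "namespace": "default", "image": "nginx"},
)
print(key, STORE[key]["replicas"])  # /registry/deployments/default/nginx 1

try:
    handle_create("token-mallory", "deployments", {"name": "x", "namespace": "default"})
except Rejected as err:
    print("rejected:", err)  # rejected: 401 Unauthorized
```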

Throughout this process, Kubernetes constantly monitors the cluster's state, ensuring that everything runs smoothly and any discrepancies between the desired and actual states are corrected.
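Comparing desired state with actual state is the core of every Kubernetes controller. Below is a minimal sketch of one reconciliation pass in the spirit of the replica-keeping controller described earlier. The reconcile function and the name-to-phase dictionary are hypothetical simplifications; real controllers react to watch events from the API server and issue their create and delete calls through it.

```python
def reconcile(desired_replicas, pods):
    """Compute one reconciliation step for a set of replicated pods.

    pods maps pod name -> phase ("Pending", "Running" or "Failed").
    Returns (number of pods to create, list of pod names to delete).
    """
    # Failed pods do not count toward the desired number, so a crashed
    # replica is made up for by creating a new pod.
    active = sorted(name for name, phase in pods.items() if phase != "Failed")
    if len(active) < desired_replicas:
        return desired_replicas - len(active), []
    # Surplus pods are deleted. This sketch simply drops the last names in
    # sorted order; the real controller ranks pods before choosing.
    return 0, active[desired_replicas:]


print(reconcile(3, {"nginx-1": "Running", "nginx-2": "Failed"}))
# (2, [])
print(reconcile(1, {"nginx-1": "Running", "nginx-2": "Running", "nginx-3": "Pending"}))
# (0, ['nginx-2', 'nginx-3'])
print(reconcile(2, {"nginx-1": "Running", "nginx-2": "Running"}))
# (0, [])
```

Because the function only compares desired and observed state, running it again after its actions have been applied returns (0, []), which is what makes it safe for a controller to repeat this check continuously.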

Conclusion

Kubernetes is an incredibly powerful tool for managing containerized applications at scale, offering features and flexibility that go beyond what simpler orchestration tools like Docker Swarm can provide. Understanding its architecture is key to leveraging its full potential, whether you're deploying a simple web app or running complex microservices in production.
