Message streaming with Apache Kafka on Kubernetes

Ronit Banerjee

Ronit Banerjee

Apache Kafka is an excellent distributed messaging and stream-processing platform for real-time data processing. Its integration with container orchestration platforms like Kubernetes has become essential in the era of microservices and containerized applications. This guide provides an in-depth look at deploying Kafka on Kubernetes, covering Kafka features, configuration, workflows, and techniques for achieving high availability.

1. Introduction: What is Kafka?

Kafka is a distributed messaging and stream-processing platform that can handle a wide variety of use cases. It’s event-driven, multi-tenant, highly available, and easily integrates with data sources. Kafka supports message queues, stream processing, and the publish-subscribe model in one unified system. This guide explores how to deploy Kafka on top of Kubernetes to take advantage of its full capabilities in containerized environments.

2. Kafka Architecture

Topics, Partitions, Segments

Kafka’s fundamental abstraction is the topic, which publishes a stream of records. Topics are partitioned, hierarchical, immutable records stored in the commit log. Partitions support parallelism and distributed message processing, allowing Kafka to scale from one server to another. Each partition is divided into segments, a collection of partition messages that optimize delete and read operations.

Consumers

Consumers read records from different partitions, creating consumer groups that balance messages across multiple instances. Each consumer reads from a specific partition, ensuring efficient message handling and fault tolerance. Kafka's pull-based system allows consumers to fetch messages at their own pace, matching different levels of resources and preventing redundancies.

Brokers

Brokers are servers that manage partition management in a Kafka cluster. Each broker can be a leader or follower for a particular partition, distributing the load evenly across the cluster. Leaders handle read and write requests and ensure data is replicated to followers. When a leader fails, a follower is promoted to the position of leader, maintaining a high level of availability.

3. Kafka Performance and Configuration Best Practices

Hardware, Runtime, and OS Requirements

Java: Use the latest JDK for best performance.

RAM: Kafka typically needs 6GB of RAM for its Java heap space, with large production loads benefiting from 32GB or more.

OS Settings: Configure file descriptor limits, maximum socket buffer size, and maximum number of memory map locations accordingly.

Disk and File System Configuration

Use multiple drives for high throughput.

Avoid sharing drives used for Kafka data with other applications.

Consider RAID or individual directories for the disk.

Prefer the XFS file system for best performance.

Use the default flush settings and enable the application's fsync.

Content Configuration

Replication: Create multiple replicas to ensure fault tolerance.

Max message size: Avoid large messages to reduce search time.

Calculate partition data rates: Create a schedule of items based on message size and average rates.

Critical issues: Assign specialists to critical issues to increase productivity and reduce the impact of failures.

Cleanup unused topics: Implement programs to delete invalid topics to free up resources.

4. Best Practices for Running Kafka on Kubernetes

Why Run Kafka on Kubernetes?

Kubernetes automates distributed application management and optimizes Kafka deployment, scaling, and administration. Kubernetes handles tasks such as rolling updates, scaling, adding and removing nodes, and application health checks.

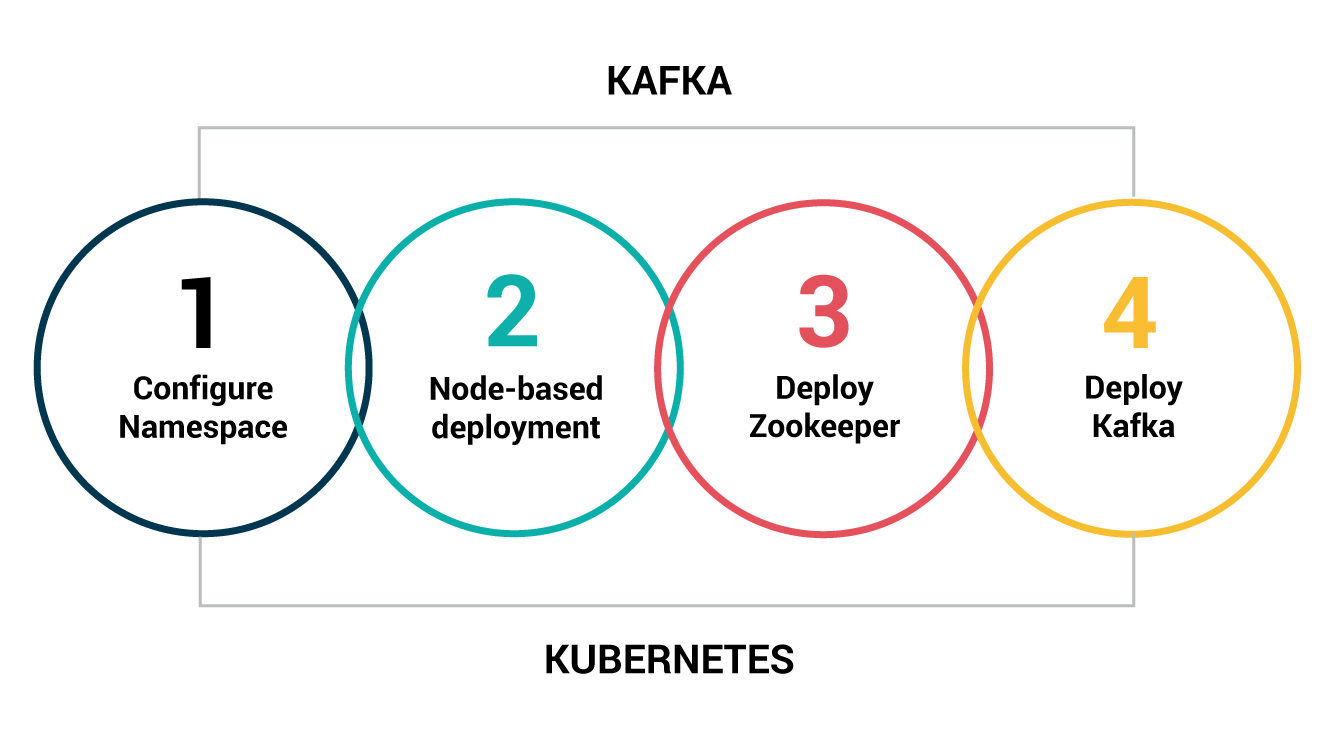

How to Install Kafka on Kubernetes

Using Kafka Helm Chart: Helm charts simplify deployment with preconfigured Kubernetes objects.

Using Kafka Operators: Operators manage the entire lifecycle of Kafka, including deployment, upgrades, and backups.

Manual Deployment: Provides maximum control over Kafka systems, requiring the use of StatefulSets and Headless Services for persistent identity and storage.

Kafka ensures high availability through partition replication and leader election. Kubernetes increases HA through node health monitoring and persistent storage.

Kafka Security in Kubernetes

Authentication: Use SSL and SASL methods to verify identity.

Data encryption: Enable SSL/TLS for in-flight data encryption.

Authorization: Use ACLs for access control and integrate with external authorization services.

Kafka Metrics Pipeline on Kubernetes

Monitor Kafka through Prometheus, with metrics displayed using Grafana or Kibana. Use the JMX exporter as a sidecar container to expose Kafka metrics compatible with Prometheus.

5. Tutorial Time!

Deploying Kafka on Kubernetes Using Helm

Kubernetes-native tools facilitate seamless migration of data and configuration across clusters, enabling use cases such as maintaining updated replicas, testing new versions, and migrating operations between workload environments.

This tutorial will guide you through deploying a Kafka cluster on Kubernetes using Helm, a package manager for Kubernetes, leveraging its full potential for high availability, fault tolerance, and scalability in modern, containerized environments.

Prerequisites

Kubernetes Cluster: Ensure you have a running Kubernetes cluster.

Helm: Install Helm on your local machine. You can follow the official Helm installation guide if needed.

kubectl: Install kubectl to interact with your Kubernetes cluster.

Step 1: Add the Bitnami Repository

Bitnami provides a well-maintained Helm chart for Kafka. First, add the Bitnami repository to Helm:

helm repo add bitnami https://charts.bitnami.com/bitnami

helm repo update

Step 2: Install Kafka Using Helm

helm install my-release oci://registry-1.docker.io/bitnamicharts/kafka

This command deploys a Kafka cluster with default settings. The output should be similar to:

Pulled: registry-1.docker.io/bitnamicharts/kafka:29.3.14

Digest: sha256:77ce9d932b3a7bd530bb06c87999ca79893c9358eaf1df2824db7f569938aa48

NAME: my-release

LAST DEPLOYED: Sun Aug 4 23:05:17 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: kafka

CHART VERSION: 29.3.14

APP VERSION: 3.7.1

** Please be patient while the chart is being deployed **

Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

my-release-kafka.default.svc.cluster.local

//

.

.

[Prompts to the potential use cases]

.

.

//

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- controller.resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

Step 3: Verify the Installation

Check the status of your Kafka pods:

kubectl get pods -A

You should see pods for Kafka and Zookeeper running:

NAME READY STATUS RESTARTS AGE

my-release-kafka-0 1/1 Running 0 1m

my-release-kafka-zookeeper-0 1/1 Running 0 1m

Step 4: Configure Kafka Clients

To connect to your Kafka cluster, you need to create a client.properties file with the following content:

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-256

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \

username="user1" \

password="$(kubectl get secret my-release-kafka-user-passwords --namespace default -o jsonpath='{.data.client-passwords}' | base64 -d | cut -d , -f 1)";

Step 5: Create a Kafka Client Pod

Create a pod that you can use as a Kafka client:

kubectl run my-release-kafka-client --restart='Never' --image docker.io/bitnami/kafka:3.7.1-debian-12-r4 --namespace default --command -- sleep infinity

Copy the client.properties file to the pod:

kubectl cp --namespace default /path/to/client.properties my-release-kafka-client:/tmp/client.properties

Access the pod:

kubectl exec --tty -i my-release-kafka-client --namespace default -- bash

Step 6: Produce and Consume Messages

Producer:

kafka-console-producer.sh \

--producer.config /tmp/client.properties \

--broker-list my-release-kafka-controller-0.my-release-kafka-controller-headless.default.svc.cluster.local:9092,my-release-kafka-controller-1.my-release-kafka-controller-headless.default.svc.cluster.local:9092,my-release-kafka-controller-2.my-release-kafka-controller-headless.default.svc.cluster.local:9092 \

--topic test

Type some messages in the console and press Enter.

Consumer:

Open another terminal and access the Kafka client pod:

kubectl exec --tty -i my-release-kafka-client --namespace default -- bash

Run the consumer:

kafka-console-consumer.sh \

--consumer.config /tmp/client.properties \

--bootstrap-server my-release-kafka.default.svc.cluster.local:9092 \

--topic test \

--from-beginning

You should see the messages produced by the producer.

Step 7 : Clean Up

To remove the Kafka deployment, run:

helm uninstall my-release

This will delete all resources associated with the Kafka deployment.

You can now leverage Kafka's capabilities for your distributed messaging and stream-processing needs in a containerised environment.

6. Project Implementation

To watch how a consumer and a producer relationship works in a real world application, you can checkout my github project, Apache Kafka implementation with GoLang.

Thanks for reading till the very end.

Follow me on Twitter, LinkedIn and GitHub for more amazing blogs about Tech and More!

Happy Learning <3

Subscribe to my newsletter

Read articles from Ronit Banerjee directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Ronit Banerjee

Ronit Banerjee

Building ProjectX.Cloud | GSoC'23 @ DBpedia | DevOps & Cloud Computing