A Practical Guide to GROQ

DataGeek

Background

In today's rapidly evolving tech landscape, LLMs have become incredibly popular, with many companies striving to integrate AI into their solutions. However, building an LLM-based application presents significant challenges, particularly because training LLMs requires huge amounts of data and high computational power.

To address this, organizations opt for pre-trained LLMs, whether paid or open-source. However, implementing open-source models often comes with its own set of challenges:

High Storage Demands - In order to use these models, one needs to download them, which requires substantial storage capacity.

High Computational Costs - Running these models necessitates powerful hardware, leading to increased expenses.

To overcome these challenges of using open-source LLMs, Groq Cloud was introduced as a more memory-efficient and cost-effective solution for integrating LLMs.

What is Groq Cloud?

Groq Cloud is a powerful extension of Groq’s revolutionary AI hardware technology, designed to bring the incredible performance of Groq’s processors to the cloud.

Groq Cloud provides a cloud-based platform that utilizes Groq’s high-performance processors to deliver advanced AI and machine learning capabilities. It enables users to access Groq’s cutting-edge hardware and software infrastructure over the internet, without the need to invest in and manage their own physical hardware.

Why Groq?

Groq Cloud stands out for several compelling reasons:

Instant Access to Open-Source LLMs: Skip the complexities of downloading and managing models. With Groq Cloud, you get immediate access to leading open-source LLMs like Gemma 7B, directly from the platform.

Effortless API Integration: Seamlessly incorporate advanced AI functionalities into your applications with Groq Cloud’s easy-to-use APIs, streamlining your development process.

Groq LPU (Language Processing Unit): The Groq LPU is the engine driving Groq Cloud’s performance. This purpose-built processor is designed for fast, efficient inference, enabling rapid execution of complex AI tasks with low latency.

User-Friendly: Focus on development with Groq Cloud’s intuitive interface and simplified management.

Setup and Integration of GROQ

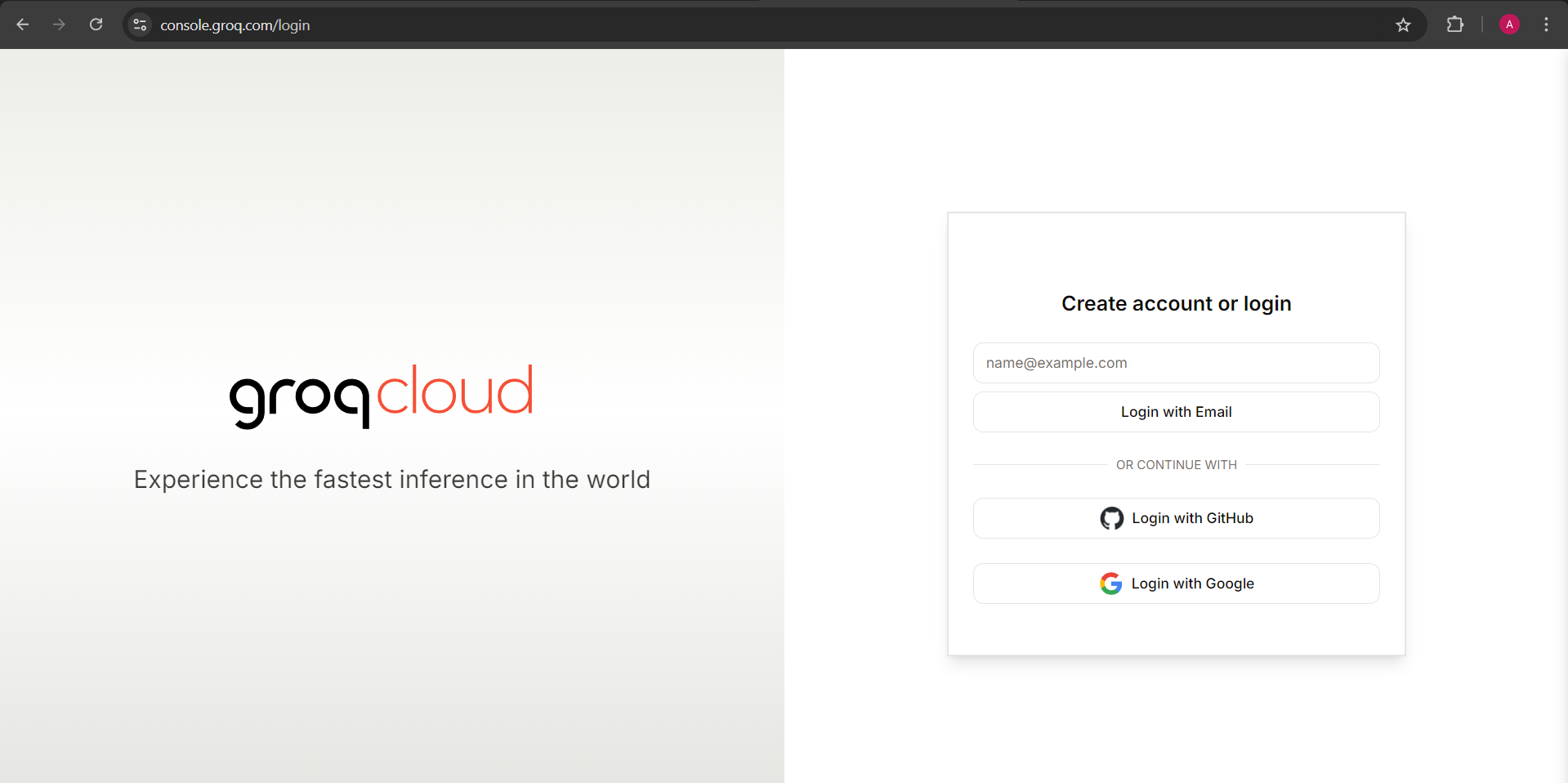

To use Groq Cloud, start by logging into your Groq account. If you don't have an account, create one first.

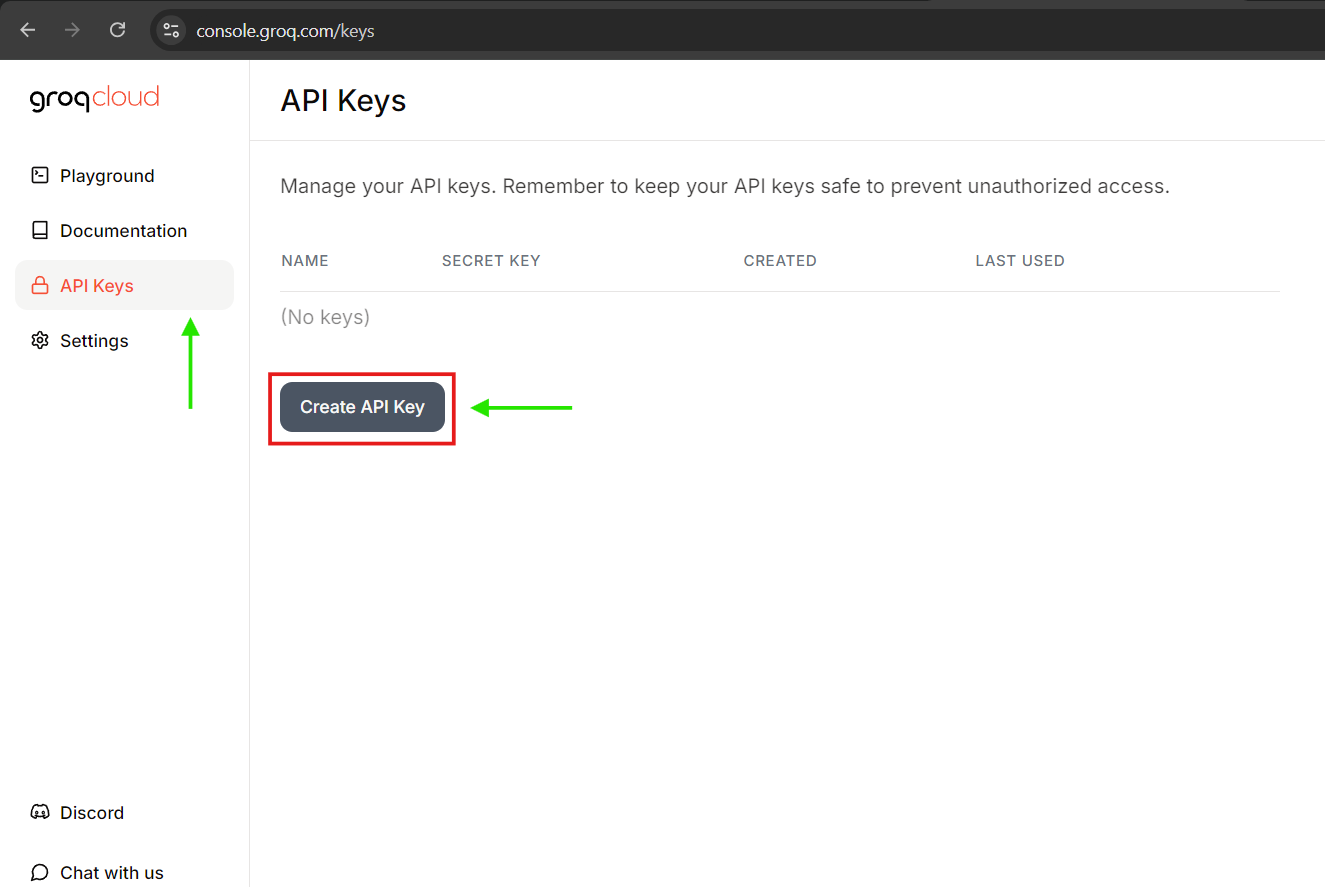

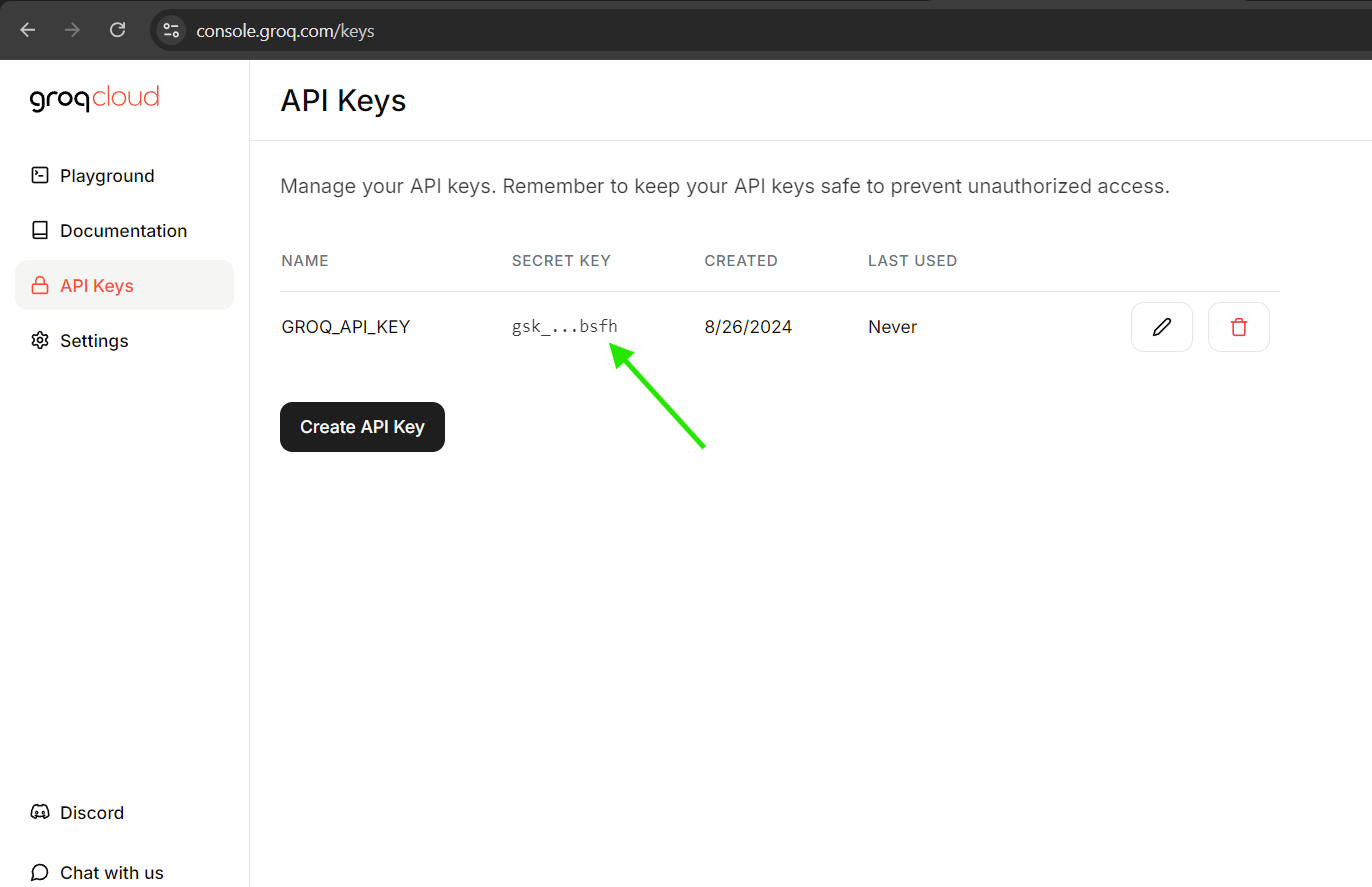

Once logged in, navigate to the API Keys tab and click on Create API Key.

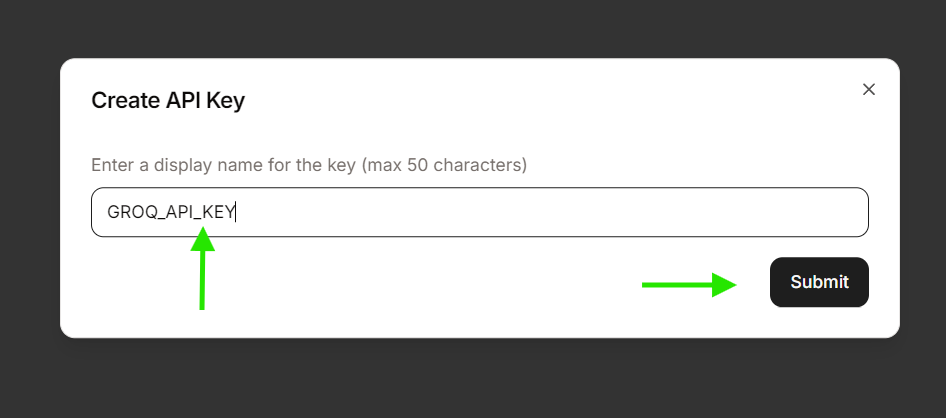

Provide a name for your API Key, then click the Submit button.

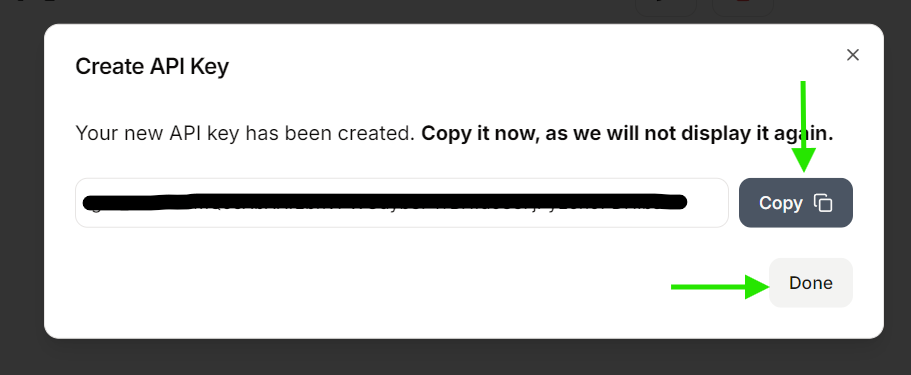

Copy the newly created API Key and store it securely.

After successful creation of the API Key:

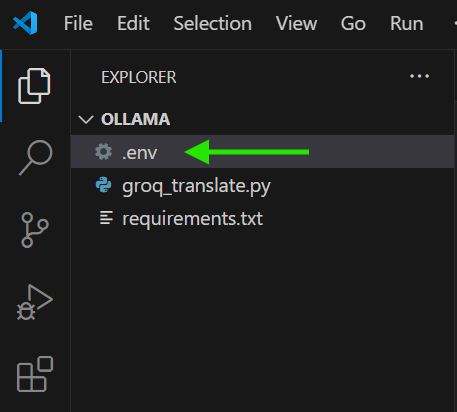

Next, open your favorite IDE and create a new .env file:

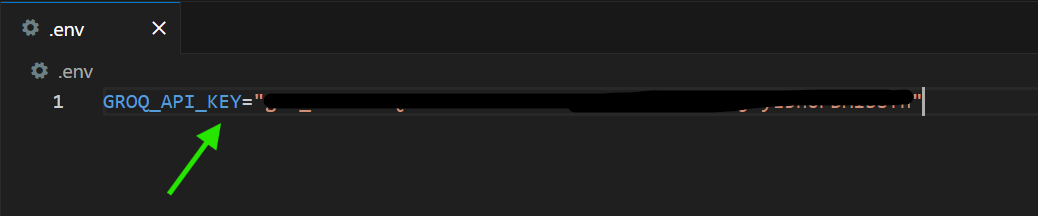

Open the .env file and paste your copied API Key in a key-value format, using the variable name GROQ_API_KEY that the code below reads, like this:

GROQ_API_KEY=<your_api_key>

Next, create a requirements.txt file and paste the following requirements:

langchain-community
langchain-core
langchain
langchain_groq
python-dotenv
streamlit

Open the IDE terminal and run the following command to install the libraries required to use an LLM through Groq:

pip install -r requirements.txt

Note: Ensure that your Python version is above 3.8.
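Before moving on, it can help to confirm that the installation worked. The following is a minimal sketch, not part of the original setup, that uses only the standard library to report which of the required packages cannot be imported. The names in `REQUIRED_MODULES` are the import names the packages in requirements.txt are assumed to expose (for example, python-dotenv is imported as `dotenv`), and both helper functions are hypothetical names chosen for illustration.

```python
import importlib.util
import sys

# Import names assumed to correspond to the entries in requirements.txt.
REQUIRED_MODULES = [
    "langchain_community",
    "langchain_core",
    "langchain",
    "langchain_groq",
    "dotenv",  # provided by the python-dotenv package
    "streamlit",
]


def python_is_newer_than(version=None, floor=(3, 8)):
    """Return True if the (major, minor) version is strictly above `floor`."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) > tuple(floor)


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    if not python_is_newer_than():
        print("This guide expects a Python version above 3.8.")
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```

If the script lists any missing packages, re-running the pip command in the same environment that the IDE uses is usually the first thing to check.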

- Next, create a

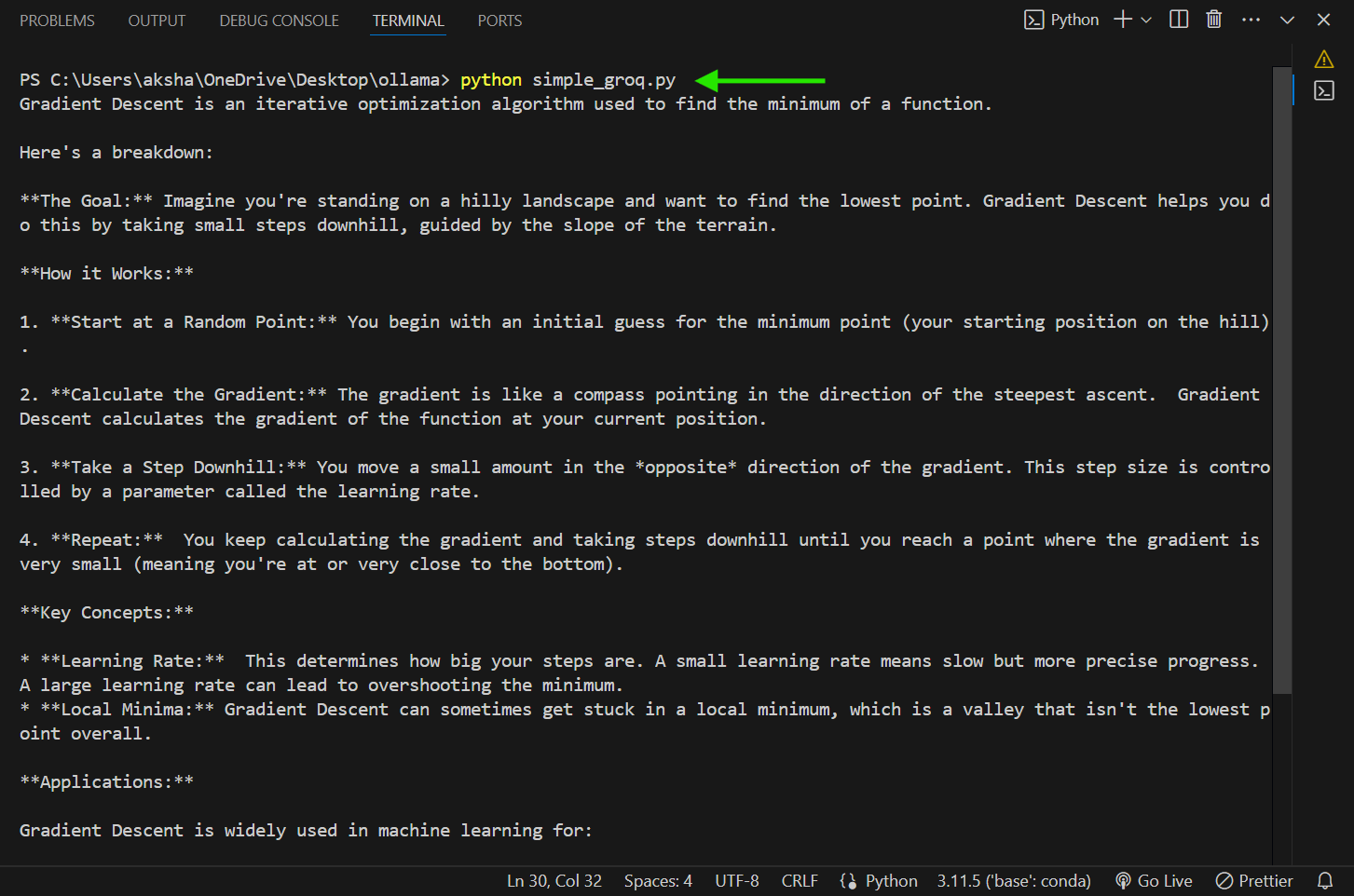

.pyfile and run the following code to implement an open-source model available in GROQ -

import os

from dotenv import load_dotenv  # To load GROQ_API_KEY from the .env file
from langchain_groq import ChatGroq  # To create a chatbot using Groq
from langchain_core.messages import HumanMessage, SystemMessage  # To specify system and human messages
from langchain_core.output_parsers import StrOutputParser  # To extract the response text as a string

# Loading the environment variables
load_dotenv()

# Retrieving the Groq API key from environment variables
groq_api_key = os.getenv("GROQ_API_KEY")

# Initializing the desired model
# Change the model parameter to the specific model you need.
model = ChatGroq(model="Gemma2-9b-It", groq_api_key=groq_api_key)

# Defining the output parser -> to display the response in proper format
parser = StrOutputParser()

# Defining the message prompt
messages = [
    # Instructions given to the model -> can be used to tune the model's behavior
    SystemMessage(content="You are an AI Assistant. Your primary role is to provide accurate answers to the user's questions."),
    # Query asked by the user
    HumanMessage(content="What is Gradient Descent Algorithm ?"),
]

# Invoking the model
result = model.invoke(messages)

# Parsing the result
response = parser.invoke(result)

# Displaying the response
print(response)

To learn more about the available models, refer to the Groq documentation.

Run the .py file to receive the response.
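If the run fails with an authentication-style error, a likely cause is that the key was never loaded: `os.getenv` returns `None` when the variable is missing, and the problem then tends to surface later with a less obvious message. As a minimal sketch (the helper name `get_groq_api_key` is made up for this example and is not part of any library), the key lookup can be made to fail early with a clear explanation:

```python
import os


def get_groq_api_key(env=None):
    """Return the Groq API key from `env`, or raise a clear error if it is missing.

    `env` defaults to os.environ; passing a plain dict makes the function easy to test.
    """
    env = os.environ if env is None else env
    key = (env.get("GROQ_API_KEY") or "").strip()
    if not key:
        raise RuntimeError(
            "GROQ_API_KEY is not set. Check that the .env file exists, that the "
            "variable name matches exactly, and that load_dotenv() ran first."
        )
    return key
```

In the script, this would be called right after `load_dotenv()`, for example `groq_api_key = get_groq_api_key()`, in place of the bare `os.getenv` call.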

By following these steps, you can set up and use any open-source LLM available on Groq in your application without downloading it locally.

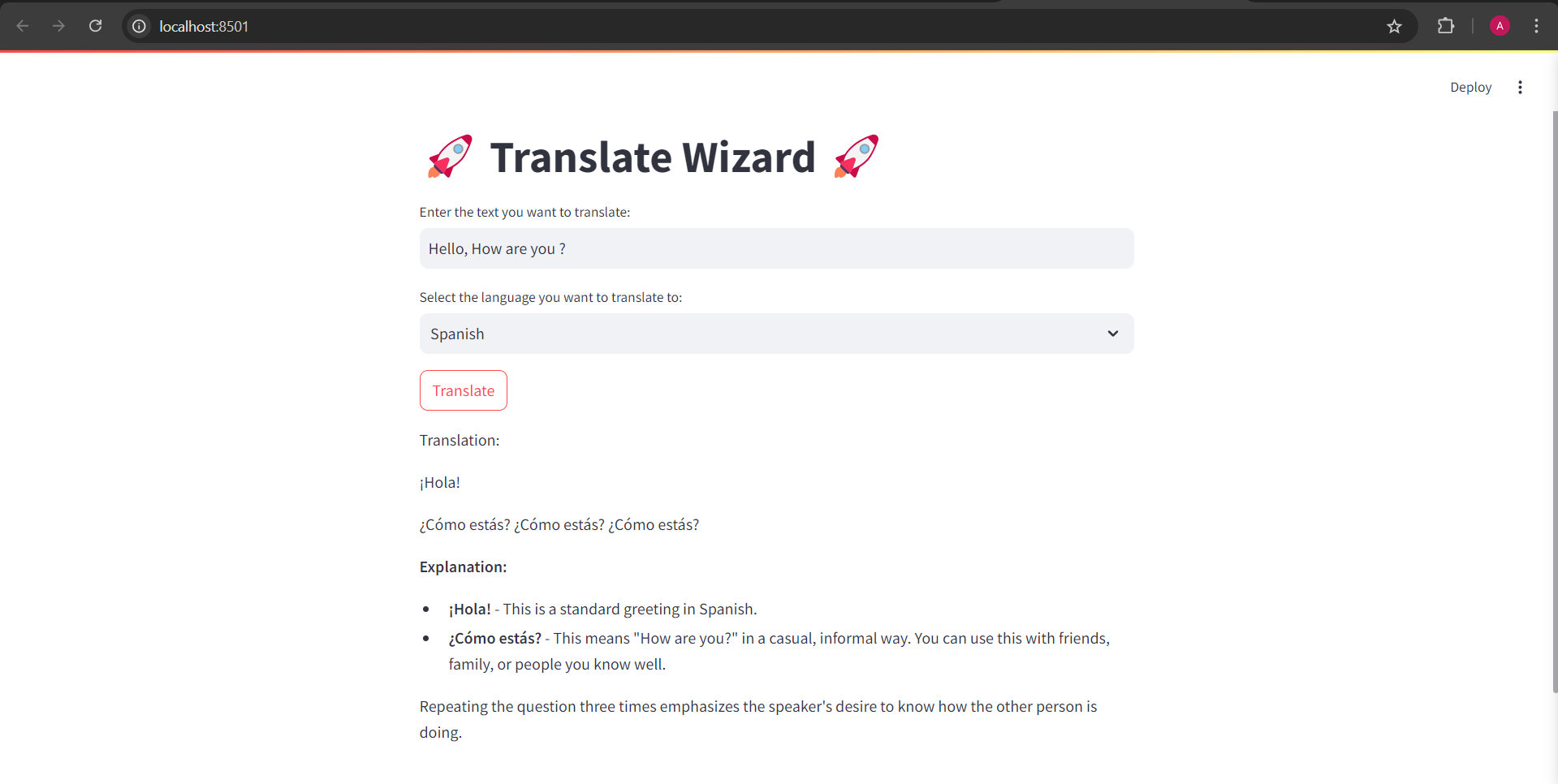
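Nothing has to be downloaded because each `invoke` call ends up as an HTTPS request to Groq's servers, where the model actually runs. The sketch below illustrates the general shape of such a request body, assuming the OpenAI-style chat completions format (a `model` name plus a list of `role`/`content` messages) that Groq's API is compatible with. It only builds and prints the JSON and sends nothing; `build_chat_payload` is a hypothetical helper, not a function from LangChain or Groq, and the exact body that LangChain sends may contain additional fields.

```python
import json


def build_chat_payload(model, system_prompt, user_prompt):
    """Build a chat-completions style request body as a plain dict."""
    return {
        "model": model,
        "messages": [
            # Corresponds to the SystemMessage in the script above
            {"role": "system", "content": system_prompt},
            # Corresponds to the HumanMessage in the script above
            {"role": "user", "content": user_prompt},
        ],
    }


payload = build_chat_payload(
    model="Gemma2-9b-It",
    system_prompt=(
        "You are an AI Assistant. Your primary role is to provide "
        "accurate answers to the user's questions."
    ),
    user_prompt="What is Gradient Descent Algorithm ?",
)

# Print the body that would be serialized and sent over the network.
print(json.dumps(payload, indent=2))
```

Seen this way, the system and human messages are just two entries in a list, which is why switching models only requires changing the `model` string.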

Practical Implementation

Overview:

In this section, we will create a basic Multilingual Translation App using Groq, the Gemma2-9b-It model, and Streamlit. Users can translate the input text into the following languages:

"French", "Spanish", "Hindi", "German", "Japanese"

Steps:

Refer to Steps 5, 6, 7, and 8 from the section above.

By now, you should have created a

.envfile, arequirements.txtfile, and installed all the required libraries.Create a

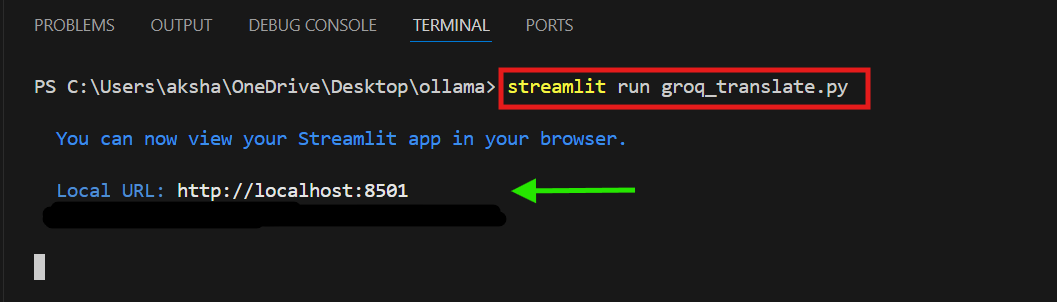

.pyfile and include the following code:import streamlit as st # For devloping a Simple UI from langchain_groq import ChatGroq # To create a chatbot using Groq from langchain_core.messages import HumanMessage, SystemMessage # To specify System and Human Message from langchain_core.output_parsers import StrOutputParser # View the output in proper format import os from dotenv import load_dotenv # To access the GROQ_API_KEY # Loading environment variables load_dotenv() # Retriving Groq API key from environment variables groq_api_key = os.getenv("GROQ_API_KEY") # Initializing the model model = ChatGroq(model="Gemma2-9b-It", groq_api_key=groq_api_key) # Change the model parameter, to the specific model you need. # Defining the output parser -> to display the respone in proper format parser = StrOutputParser() # Creating a Streamlit UI st.title("🚀 Translate Wizard 🚀") # Taking the Text input from the user input_text = st.text_input("Enter the text you want to translate:") # Language selection dropdown language = st.selectbox( "Select the language you want to translate to:", ["French", "Spanish", "Hindi", "German", "Japanese"] ) if st.button("Translate"): if input_text: # Preparing messages for the model messages = [ # Instructions given to the Model -> can be used for hypertuning the Model SystemMessage(content=f"Please translate the following English text to {language}. The translation should be accurate, preserving the meaning and tone"), # Query asked by the user HumanMessage(content=input_text) ] # Invoking the model result = model.invoke(messages) # Parsing the result translation = parser.invoke(result) # Displaying the translation st.write("Translation:") st.write(translation) else: st.write("Please enter some text to translate.")Execute the following command, to initialize the application:

streamlit run app_name.py

Replace app_name.py with the name of your .py file.

Open the local URL in your web browser to access the application.
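As the app grows, it can be useful to keep the prompt logic separate from the Streamlit UI so that it can be checked without launching the app or calling the model. The following is a minimal sketch of that idea; `SUPPORTED_LANGUAGES`, `build_system_prompt`, and `clean_input` are hypothetical names introduced here, and the prompt text mirrors the one used in the app.

```python
SUPPORTED_LANGUAGES = ["French", "Spanish", "Hindi", "German", "Japanese"]


def build_system_prompt(language):
    """Return the translation instruction for one of the supported languages."""
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"Unsupported language: {language!r}")
    return (
        f"Please translate the following English text to {language}. "
        "The translation should be accurate, preserving the meaning and tone."
    )


def clean_input(text):
    """Strip surrounding whitespace; return None if nothing is left to translate."""
    stripped = text.strip()
    return stripped or None
```

In the app, `st.selectbox` could take `SUPPORTED_LANGUAGES` as its options, and `clean_input(input_text)` could replace the plain `if input_text:` check so that input consisting only of spaces is not sent to the model.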

Demonstration

Conclusion

In conclusion, as the demand for LLMs continues to grow, addressing the challenges of storage and computational power becomes crucial. Groq emerges as a practical solution, offering a more efficient and cost-effective approach to integrating these powerful models. By hosting the models and exposing them through an API, Groq lets organizations use LLMs without the storage and hardware constraints described above. 🚀🌟

Written by

DataGeek

Data Enthusiast with proficiency in Python, Deep Learning, and Statistics, eager to make a meaningful impact through contributions. Currently working as an R&D Intern at PTC. I’m a passionate, energetic, and geeky individual whose desire to learn is endless.