Zero-Downtime Kubernetes Rolling Updates: NGINX Pod Upgrade Demonstration

Hemanth Gangula


In the fast-paced world of software development, one of the most critical challenges is updating applications without disrupting service. Imagine the frustration of users when an application suddenly goes offline for an update. How can we ensure seamless updates without compromising user experience? This is where Kubernetes, the powerful open-source container orchestration platform, steps in with its elegant solution: rolling updates.

Rolling updates are a game-changer for zero-downtime deployments. They allow applications to be updated gradually, ensuring that service availability is maintained throughout the process. Unlike traditional methods where an entire application might go offline during an update, rolling updates incrementally replace instances of the application with new versions. This means that at no point during the update is the application completely unavailable to users. The result? Continuous service without interruptions.

The Concept of Rolling Updates

So, how exactly do rolling updates work? A rolling update is a deployment strategy where instances of the application are updated in a controlled, step-by-step manner. Kubernetes handles the heavy lifting: when a Deployment's Pod template changes, it creates a new ReplicaSet for the updated version and gradually scales it up while scaling the old ReplicaSet down, so old Pods are replaced by new Pods a few at a time. This ensures that the application remains accessible and operational, providing a seamless experience for users.

Imagine you're watching your favourite series on a streaming service like Netflix, and a new feature or improvement needs to be deployed. With rolling updates, the service could introduce this update without interrupting your viewing experience. As you continue to binge-watch your show, some of the back-end servers handling your requests are being updated, but you won't notice any disruption because the update happens gradually, one server at a time.

Significance of Rolling Updates in Achieving Zero-Downtime Deployments

Zero-downtime deployments are not just a luxury; they are essential for modern applications where any service interruption can lead to significant user dissatisfaction and potential revenue loss. Rolling updates help achieve zero-downtime deployments by:

Minimizing Service Interruptions: By updating a few Pods at a time, rolling updates ensure that the application remains accessible to users throughout the deployment process.

Enabling Continuous Delivery: Rolling updates support the continuous delivery model, allowing development teams to deploy updates and new features regularly without affecting the user experience.

Reducing Risk: Gradually rolling out updates helps in identifying and mitigating issues early in the deployment process. If a problem is detected, the update can be paused or rolled back with minimal impact.

Benefits of Rolling Updates Compared to Other Deployment Strategies

Rolling updates offer several advantages over other deployment strategies, such as blue-green deployments and canary releases:

Reduced Downtime: Blue-green deployments rely on switching all traffic from one environment to another in a single cutover. Rolling updates avoid that cutover entirely, because there is always a portion of the application running and serving users.

Cost Efficiency: Rolling updates do not require duplicating the entire infrastructure, as seen in blue-green deployments. This makes rolling updates more resource-efficient and cost-effective.

Smooth Transition: Canary releases involve deploying the new version to a small subset of users before a full rollout. While this helps in minimizing risk, it can be complex to manage. Rolling updates offer a more straightforward approach by updating the application incrementally.

Scalability: Rolling updates are well-suited for large-scale applications where updating all instances simultaneously is impractical. The incremental approach ensures that resources are managed effectively during the update process.

POC Setup

1. Setting Up the Environment

To set up the Kubernetes environment for the Proof of Concept (POC), we have two options: using an AWS EC2 instance or setting it up locally on any operating system.

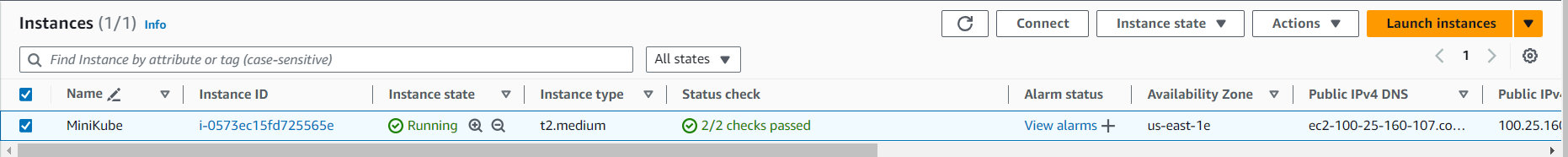

Option 1: Using an AWS EC2 Instance

Instance Details:

Operating System: Ubuntu 22.04 LTS

Instance Type: t2.medium

Configuring Instance:

Open the necessary ports and protocols as per the Kubernetes documentation.

This includes configuring inbound rules to allow traffic on the required ports.

Installing Kubernetes Components:

- Follow the official Kubernetes documentation (Installing kubeadm) to install `kubeadm`, `kubelet`, and `kubectl`. Because Minikube creates its own cluster, only `kubectl` is strictly required for this POC.

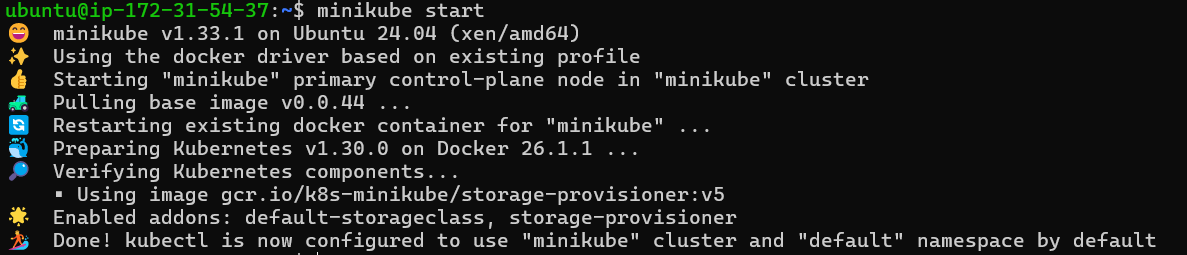

Setting Up Minikube:

Install Minikube by following the instructions on the official Minikube documentation.

Start Minikube:

minikube start

Option 2: Local Installation

Operating System: Any (Windows, macOS, Linux)

Installing Kubernetes Components:

- Follow the official Kubernetes documentation to install `kubeadm`, `kubelet`, and `kubectl`. As with Option 1, only `kubectl` is strictly required when the cluster is created by Minikube.

Setting Up Minikube:

- Install and start Minikube as per the Minikube documentation.
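Whichever option you choose, it is worth confirming that `kubectl` is talking to the Minikube cluster before deploying anything. The sketch below assumes Minikube's default context name (`minikube`); `check_cluster` is a hypothetical helper, not part of either tool.

```shell
#!/bin/sh
# check_cluster (hypothetical helper): confirm kubectl targets the Minikube
# cluster, then wait until every node reports Ready.
check_cluster() {
  ctx=$(kubectl config current-context) || return 1
  echo "current context: $ctx"
  # Minikube names its kubeconfig context "minikube" by default (assumption
  # that you have not renamed it or used a custom profile).
  if [ "$ctx" != "minikube" ]; then
    echo "warning: kubectl is not using the minikube context" >&2
  fi
  # Block for up to 60 seconds until all nodes have the Ready condition.
  kubectl wait --for=condition=Ready node --all --timeout=60s
}
```

After `minikube start`, running `check_cluster` should print the context name followed by a line reporting that the `minikube` node's Ready condition is met.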

2. Creating the Kubernetes Deployment Manifest

Nginx Deployment Manifest:

Here is the YAML configuration file for the initial deployment of the Nginx application:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  minReadySeconds: 5
  template:
    metadata:
      labels:
        app: nginx
        role: rolling-update
    spec:
      containers:
      - name: nginx
        image: nginx:1.19.0
        ports:
        - containerPort: 80
```

Explanation:

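strategy: Defines how Kubernetes replaces old Pods with new ones when the Pod template changes.

rollingUpdate: Specifies the rolling update parameters.

maxSurge: Specifies the maximum number of pods that can be created above the desired number of replicas during an update. For example, if you have 4 replicas and `maxSurge` is set to 25%, Kubernetes can create 1 additional pod during the update (25% of 4 is 1), so at most 5 pods exist at any moment.

maxUnavailable: Specifies the maximum number of pods that can be unavailable during the update. With 4 replicas and `maxUnavailable` set to 25%, at most 1 pod can be unavailable during the update (25% of 4 is 1), so at least 3 pods keep serving traffic.

type: Specifies the type of update strategy, here `RollingUpdate`. The alternative, `Recreate`, terminates all old pods before creating new ones.

minReadySeconds: Specifies how long a newly created pod must be ready, without any of its containers crashing, before it counts as available. Here each new pod must stay ready for 5 seconds before the rollout moves on.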
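The percentage arithmetic above can be checked for other replica counts. The Kubernetes documentation states that a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down; the sketch below applies those two rules with integer shell arithmetic. `rollout_bounds` is a hypothetical helper that only mirrors the documented rounding, not the controller's code.

```shell
#!/bin/sh
# rollout_bounds (hypothetical helper): turn percentage-based maxSurge and
# maxUnavailable into pod counts using the documented rounding rules.
#   $1 = replicas, $2 = maxSurge percent, $3 = maxUnavailable percent
rollout_bounds() {
  replicas=$1
  surge_pct=$2
  unavail_pct=$3
  # maxSurge rounds up: ceil(replicas * pct / 100)
  surge=$(( (replicas * surge_pct + 99) / 100 ))
  # maxUnavailable rounds down: floor(replicas * pct / 100)
  unavail=$(( replicas * unavail_pct / 100 ))
  echo "maxSurge=$surge maxUnavailable=$unavail maxPods=$((replicas + surge)) minAvailable=$((replicas - unavail))"
}
```

`rollout_bounds 4 25 25` prints `maxSurge=1 maxUnavailable=1 maxPods=5 minAvailable=3`, matching the explanation above. With 10 replicas the same percentages give a surge of 3 but only 2 unavailable pods, because the two values are rounded in opposite directions.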

Nginx Service Manifest:

To expose the Nginx application, we will create a Kubernetes Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: NodePort
```

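Explanation:

The `apiVersion`, `kind`, and `metadata` fields define the object's type (a `Service`) and its name (`nginx-service`).

The `spec` field specifies the Service's desired state: it routes TCP traffic to pods with the label `app: nginx`, listening on port 80 and forwarding to port 80 (`targetPort`) on those pods. Because the type is `NodePort`, Kubernetes also opens a port (from the 30000-32767 range by default) on every node, so the application can be reached from outside the cluster.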

Note: The strategy field in a Kubernetes Deployment defines how updates are performed. By default, Kubernetes uses the RollingUpdate strategy with maxSurge set to 25% and maxUnavailable set to 25%. This means that during an update, up to 25% more pods than the desired number can be created (maxSurge), and up to 25% of the desired number of pods can be unavailable (maxUnavailable) at any time.
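To see these defaults filled in without creating anything, you can ask the API server for a server-side dry run of a Deployment that has no `strategy` field. This sketch assumes a running cluster; `defaults-demo` and `show_default_strategy` are hypothetical, throwaway names.

```shell
#!/bin/sh
# show_default_strategy (hypothetical helper): print the strategy the API
# server would assign to a Deployment created without one.
# --dry-run=server runs validation and defaulting but persists nothing.
show_default_strategy() {
  kubectl create deployment defaults-demo --image=nginx:1.19.0 \
    --dry-run=server -o jsonpath='{.spec.strategy}'
  echo
}
```

The output should resemble `{"rollingUpdate":{"maxSurge":"25%","maxUnavailable":"25%"},"type":"RollingUpdate"}`.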

Demonstrating a Rolling Update in Kubernetes

This section will demonstrate how to perform a rolling update in Kubernetes, ensuring zero downtime. We will deploy an Nginx application and update its version from 1.19.0 to 1.19.1.

Initial Setup

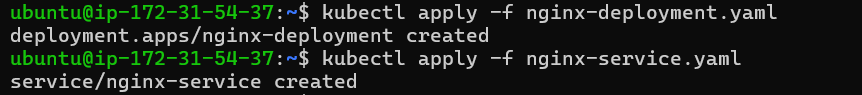

Create the Deployment and Service Manifests

First, create an `nginx-deployment.yaml` file and copy the following code into it. Ensure the Nginx image version is `1.19.0`:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19.0
        ports:
        - containerPort: 80
```

Apply the deployment:

kubectl apply -f nginx-deployment.yaml

Next, create an `nginx-service.yaml` file and copy the following code into it:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: NodePort
```

Apply the service:

kubectl apply -f nginx-service.yaml

At this point, you have successfully deployed the Nginx application and created a service to expose it.
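If you prefer to script these steps, it helps to wait until the Deployment actually reports itself available before testing, and to record why this revision exists so it appears in `kubectl rollout history` later. A minimal sketch, assuming both manifest files are in the current directory; `deploy_nginx` and the change-cause text are hypothetical.

```shell
#!/bin/sh
# deploy_nginx (hypothetical helper): apply both manifests, annotate the
# revision, and wait for the Deployment to become available.
deploy_nginx() {
  kubectl apply -f nginx-deployment.yaml -f nginx-service.yaml || return 1
  # kubernetes.io/change-cause is shown in the CHANGE-CAUSE column of
  # `kubectl rollout history`.
  kubectl annotate deployment/nginx-deployment \
    kubernetes.io/change-cause="initial deploy: nginx:1.19.0" --overwrite || return 1
  # Wait until the Deployment's Available condition is True.
  kubectl wait --for=condition=Available deployment/nginx-deployment --timeout=120s
}
```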

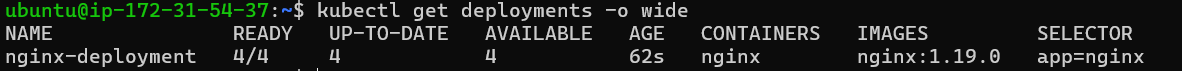

Verify the Deployment

Check the deployment:

kubectl get deployment -o wide

You will observe that the deployment version is

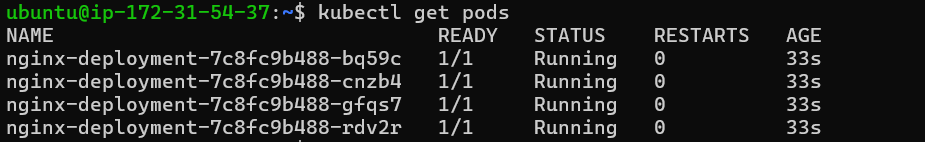

nginx:1.19.0under theIMAGEScolumn.Check the pods created by the deployment:

kubectl get pods

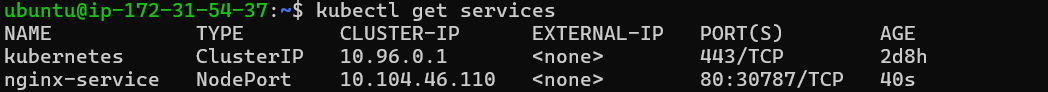

Check the services:

kubectl get services

Observe `nginx-service` with its cluster IP and port. Because it is a `NodePort` Service, the `PORT(S)` column has the form `80:<node-port>/TCP`.

Access the Application

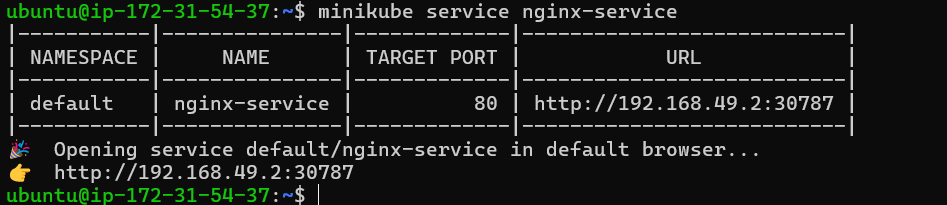

Get the address of the application:

minikube service nginx-service

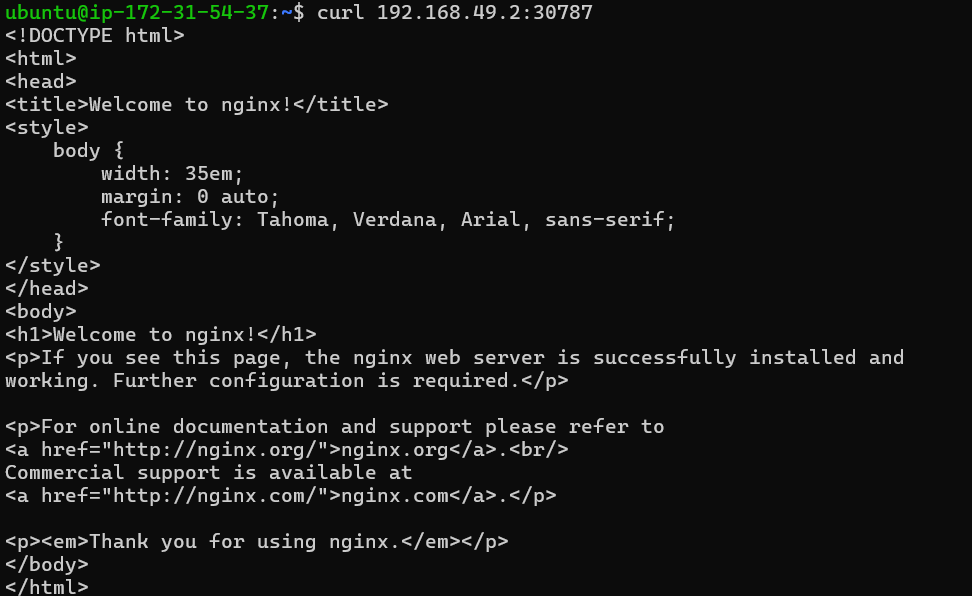

Test the application using `curl`:

curl <minikube-ip>:<node-port>

This will return the HTML code of the Nginx application. You can also access it via the provided URL.
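Rather than copying the IP and NodePort by hand, both can be looked up from the cluster. A sketch assuming the Minikube setup above; `nginx_url` is a hypothetical helper (`minikube service nginx-service --url` prints a similar URL directly).

```shell
#!/bin/sh
# nginx_url (hypothetical helper): build http://<minikube-ip>:<node-port>
# for nginx-service from live cluster data.
nginx_url() {
  ip=$(minikube ip) || return 1
  # The NodePort Kubernetes allocated for the Service's first (only) port.
  port=$(kubectl get service nginx-service -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  echo "http://${ip}:${port}"
}
```

With this in place, `curl "$(nginx_url)"` fetches the same Nginx welcome page.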

Performing the Rolling Update

Prepare for the Update

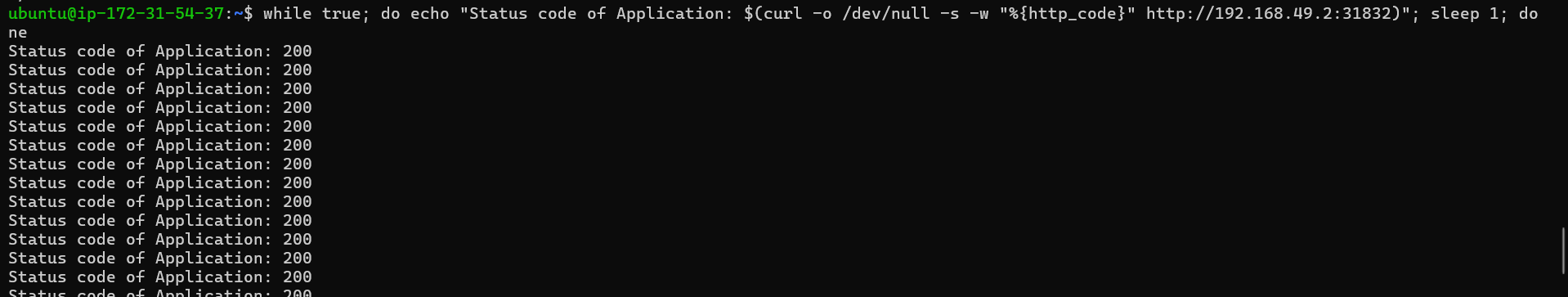

In a second terminal, run the following command to check the application's status continuously:

[Replace `<minikube-ip>` and `<node-port>` with your own values from the previous step.]

while true; do echo "Status code of Application: $(curl -o /dev/null -s -w "%{http_code}" http://<minikube-ip>:<node-port>)"; sleep 1; done

This command prints the HTTP status code once per second; `200` means the application is accessible.

Update the Deployment

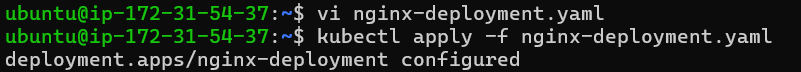

In the first terminal, open `nginx-deployment.yaml` and change the Nginx image version to `1.19.1`. Save and close the file. Apply the updated deployment:

kubectl apply -f nginx-deployment.yaml

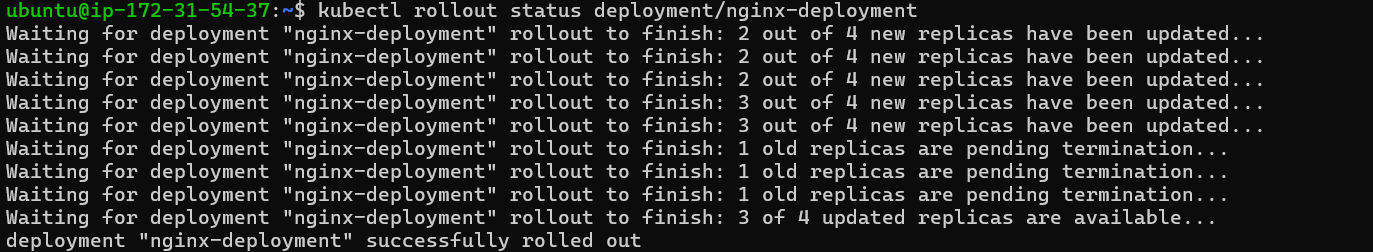

Check the rolling update status:

kubectl rollout status deployment/nginx-deployment

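The command reports progress as new pods are created and old pods are terminated (for example, how many of the 4 new replicas have been updated) and finishes with `deployment "nginx-deployment" successfully rolled out`.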
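Editing the file and re-applying keeps the manifest as the source of truth. For quick experiments, the same image change can be made imperatively, with the revision annotated for `kubectl rollout history`. A sketch; `update_image` is hypothetical, and the container name `nginx` comes from the manifest above.

```shell
#!/bin/sh
# update_image (hypothetical helper): change the nginx container's image,
# record why, and block until the rolling update finishes.
#   $1 = new image, e.g. nginx:1.19.1
update_image() {
  image=$1
  kubectl set image deployment/nginx-deployment nginx="$image" || return 1
  kubectl annotate deployment/nginx-deployment \
    kubernetes.io/change-cause="update to $image" --overwrite || return 1
  # Exits non-zero if the rollout does not complete within the timeout.
  kubectl rollout status deployment/nginx-deployment --timeout=180s
}
```

`update_image nginx:1.19.1` performs the same update as editing the file. The trade-off is drift: `nginx-deployment.yaml` still says `1.19.0` until you edit it, and the next `kubectl apply` of that file would roll the image back.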

Verify the Update

Check the second terminal to ensure the status code remains `200`, indicating that the application is accessible throughout the update process.

In the first terminal, verify the deployment and image versions:

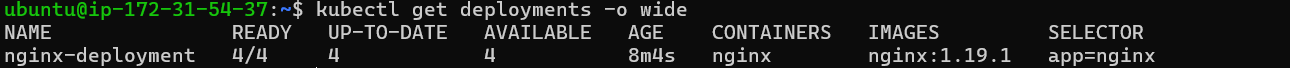

kubectl get deployment -o wide

You will observe that the image version is now `nginx:1.19.1`.
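`kubectl get deployment` shows the image in the Pod template. To confirm that every running pod has actually been replaced, you can list the image per pod with a JSONPath template. A sketch; both helper names are hypothetical.

```shell
#!/bin/sh
# pod_images (hypothetical helper): print "<pod-name><TAB><image>" for each
# pod labeled app=nginx.
pod_images() {
  kubectl get pods -l app=nginx \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[0].image}{"\n"}{end}'
}

# count_pods_not_on (hypothetical helper): count listed pods whose image
# differs from $1; 0 means no old pods remain.
count_pods_not_on() {
  pod_images | awk -F '\t' -v want="$1" '$2 != want { n++ } END { print n + 0 }'
}
```

After the rollout, `count_pods_not_on nginx:1.19.1` should print `0`; pods that are still terminating may be counted for a few seconds.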

Congratulations, you have successfully performed a rolling update on your application, ensuring zero downtime!
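The loop in the second terminal runs until you stop it and leaves you to spot failures by eye. For repeatable runs, a bounded variant can count non-200 responses and end with a summary. A sketch; `probe_loop` is hypothetical, and the URL is the same `http://<minikube-ip>:<node-port>` used above.

```shell
#!/bin/sh
# probe_loop (hypothetical helper): probe a URL a fixed number of times and
# report how many responses were not HTTP 200.
#   $1 = URL, $2 = number of probes (default 60), $3 = seconds between probes (default 1)
probe_loop() {
  url=$1
  count=${2:-60}
  interval=${3:-1}
  failures=0
  i=0
  while [ "$i" -lt "$count" ]; do
    # curl prints 000 when no HTTP response arrives (e.g. connection refused).
    code=$(curl -o /dev/null -s -w '%{http_code}' --max-time 2 "$url")
    if [ "$code" != "200" ]; then
      failures=$((failures + 1))
      echo "$(date +%H:%M:%S) got status $code"
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "probes=$count failures=$failures"
  # Exit status is 0 only if every probe returned 200.
  [ "$failures" -eq 0 ]
}
```

Start `probe_loop http://<minikube-ip>:<node-port> 120` in the second terminal, run the update in the first, and a final `failures=0` line confirms that no request failed during the window.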

Best Practices and Considerations for Rolling Updates in Kubernetes

Implementing rolling updates in a production environment can be highly beneficial, but it also requires careful planning and execution. Here are some best practices and considerations to keep in mind to ensure smooth and effective rolling updates.

Best Practices

Monitor the Deployment

Always monitor the deployment process. Use tools like Prometheus, Grafana, or Kubernetes-native tools to keep an eye on metrics and logs. This helps in identifying any issues quickly.

kubectl get pods -w

This command continuously watches your pods, making it easier to see changes in real time.
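During a rolling update the Deployment controller scales a new ReplicaSet up and the old one down, and records each step as an event on the Deployment. A sketch of a one-shot snapshot of both, assuming the `app=nginx` label and Deployment name from the demo; `rollout_snapshot` is a hypothetical name.

```shell
#!/bin/sh
# rollout_snapshot (hypothetical helper): show the Deployment, its old and
# new ReplicaSets, its pods, and the Deployment's scaling events.
#   $1 = app label (default nginx), $2 = Deployment name (default nginx-deployment)
rollout_snapshot() {
  app=${1:-nginx}
  deploy=${2:-nginx-deployment}
  kubectl get deployment,replicaset,pod -l "app=$app" -o wide
  kubectl get events --field-selector "involvedObject.name=$deploy" \
    --sort-by=.metadata.creationTimestamp
}
```

Run mid-update, it should show two ReplicaSets for `nginx-deployment`, one shrinking and one growing, along with scaling events for each step.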

Use Health Checks

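Configure readiness and liveness probes. A readiness probe tells Kubernetes when a pod can receive traffic; during a rolling update, a new pod does not count as available (so it cannot justify removing more old pods) until it passes. A liveness probe restarts a container that stops responding. Together they keep traffic away from unhealthy pods, which could otherwise cause downtime.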
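Probes are configured per container, inside the Pod template. A sketch of the demo Deployment with both probes attached to the `nginx` container, applied from a heredoc; the probe timings are the ones used in this section, and `apply_nginx_with_probes` is a hypothetical name. Applying it changes the Pod template, so it triggers a rolling update of its own.

```shell
#!/bin/sh
# apply_nginx_with_probes (hypothetical helper): apply the demo Deployment
# with readiness and liveness probes on the nginx container.
apply_nginx_with_probes() {
  kubectl apply -f - <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19.1
        ports:
        - containerPort: 80
        # The pod receives Service traffic only while this probe succeeds.
        readinessProbe:
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 10
        # The container is restarted if this probe keeps failing.
        livenessProbe:
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 20
EOF
}
```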

```yaml
# Under the nginx container entry in the Pod template
readinessProbe:
  httpGet:
    path: /
    port: 80
  initialDelaySeconds: 5
  periodSeconds: 10
livenessProbe:
  httpGet:
    path: /
    port: 80
  initialDelaySeconds: 15
  periodSeconds: 20
```

Gradual Updates

Update your application gradually by setting appropriate values for `maxSurge` and `maxUnavailable`. This ensures that your application remains available during the update.

```yaml
strategy:
  rollingUpdate:
    maxSurge: 1
    maxUnavailable: 1
```

Automated Rollbacks

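Kubernetes does not roll a Deployment back on its own. If new pods never become ready, the rollout stalls (with readiness probes in place, the remaining old pods keep serving), and once `progressDeadlineSeconds` (600 seconds by default) passes, the Deployment reports the rollout as failed. Automate the rollback in your deployment pipeline by reacting to that failure and reverting to the previous revision. This can save time and reduce the risk of prolonged downtime.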
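Because Kubernetes itself does not undo a failed rollout, "automatic" rollback in practice means scripting it. A minimal sketch: wait for the rollout with a timeout and revert to the previous revision if it does not complete. The function name and the 120-second default are assumptions; in a CI/CD pipeline this would run right after the apply step.

```shell
#!/bin/sh
# rollout_or_undo (hypothetical helper): wait for a rollout and, if it does
# not complete in time, revert the Deployment to its previous revision.
#   $1 = Deployment name, $2 = timeout (default 120s)
rollout_or_undo() {
  deploy=$1
  timeout=${2:-120s}
  if kubectl rollout status "deployment/$deploy" --timeout="$timeout"; then
    echo "rollout of $deploy succeeded"
  else
    echo "rollout of $deploy did not complete within $timeout; rolling back" >&2
    kubectl rollout undo "deployment/$deploy"
    # Still report failure so a pipeline job using this fails visibly.
    return 1
  fi
}
```

For example, `kubectl apply -f nginx-deployment.yaml && rollout_or_undo nginx-deployment 180s` applies the change and reverts it if the new pods are not all available within three minutes.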

kubectl rollout undo deployment/nginx-deployment

Testing in Staging

Always test your updates in a staging environment before deploying them to production. This helps catch issues early and ensures a smoother deployment.
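Alongside a staging run, a manifest can be checked against the target cluster before it is applied: a server-side dry run sends it through the API server's validation without persisting it, and `kubectl diff` shows exactly what would change. A sketch; `validate_manifest` is hypothetical. Note that `kubectl diff` exits with 1 when it finds differences, so only exit codes above 1 are treated as errors.

```shell
#!/bin/sh
# validate_manifest (hypothetical helper): server-side dry run, then a diff
# against the live objects. kubectl diff exits 0 (no changes), 1 (changes
# found) or >1 (error), so only codes above 1 are failures here.
validate_manifest() {
  file=$1
  kubectl apply --dry-run=server -f "$file" || return 1
  kubectl diff -f "$file"
  status=$?
  if [ "$status" -gt 1 ]; then
    echo "kubectl diff failed for $file (exit $status)" >&2
    return "$status"
  fi
  return 0
}
```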

Potential Challenges and Risks

Unhealthy Pods

If a new version of your application has issues, its pods may crash or never become ready, which stalls the rollout and can leave the Deployment running below its desired capacity. Mitigate this by using readiness and liveness probes as mentioned above.

Configuration Errors

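Misconfigurations in deployment manifests can cause failed updates or downtime. Always double-check configurations and validate changes with a dry run before applying them. `--dry-run=client` only processes the manifest locally, while `--dry-run=server` also sends it through the API server's validation without persisting anything:

kubectl apply --dry-run=client -f nginx-deployment.yaml

Resource Constraints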

Insufficient resources can cause pods to fail during updates. Ensure that your cluster has enough CPU and memory resources to handle additional pods during updates.

kubectl describe nodes

This command shows each node's capacity and the resources already requested by the pods running on it (under "Allocated resources").
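Headroom matters because of `maxSurge`: at the peak of an update, the cluster has to schedule the desired replicas plus the surge pods at the same time. A sketch that computes the peak CPU request and sets explicit requests and limits on the demo Deployment; the 100m/64Mi values and both helper names are illustrative assumptions, not recommendations. Changing resources edits the Pod template, so it triggers a rollout by itself.

```shell
#!/bin/sh
# peak_cpu_millicores (hypothetical helper): CPU that must be schedulable at
# the peak of a rolling update.
#   $1 = replicas, $2 = maxSurge (absolute pods), $3 = CPU request per pod in millicores
peak_cpu_millicores() {
  echo $(( ($1 + $2) * $3 ))
}

# set_nginx_resources (hypothetical helper): give the nginx container explicit
# requests and limits so the scheduler can account for it.
set_nginx_resources() {
  kubectl set resources deployment/nginx-deployment -c nginx \
    --requests=cpu=100m,memory=64Mi --limits=cpu=200m,memory=128Mi
}
```

With the demo's 4 replicas, a surge of 1, and a 100m request, `peak_cpu_millicores 4 1 100` prints `500`: five pods' worth of CPU must fit on the nodes during the update.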

Service Disruptions

Although rolling updates aim to minimize downtime, there can still be minor disruptions. To mitigate this, use traffic management tools like Istio or Linkerd to route traffic intelligently during updates.

Dependency Management

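Ensure that all dependencies (such as databases and external services) are compatible with the new version. During a rolling update, old and new pods serve traffic side by side, so both versions must work with the same database schema and external APIs at the same time. Incompatibilities can cause failures even if the application pods are healthy.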

Mitigation Strategies

Blue-Green Deployments

For critical applications, consider using blue-green deployments. This involves running two identical environments (blue and green) and switching traffic to the new environment once it is verified to be healthy.
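In Kubernetes terms, one way to do this is two Deployments whose pods differ by a label, with the Service selector deciding which one receives traffic. A sketch under these assumptions: hypothetical Deployments `nginx-blue` and `nginx-green` whose pods carry `app: nginx` plus `version: blue` or `version: green`. Switching is a single patch to `nginx-service`, and rolling back is switching again.

```shell
#!/bin/sh
# switch_to (hypothetical helper): point nginx-service at the pods labeled
# version=<color>. Assumes nginx-blue and nginx-green Deployments exist.
#   $1 = blue | green
switch_to() {
  color=$1
  case "$color" in
    blue|green) ;;
    *) echo "usage: switch_to blue|green" >&2; return 2 ;;
  esac
  # Only switch once every replica of the target Deployment is available.
  kubectl rollout status "deployment/nginx-$color" --timeout=120s || return 1
  # Strategic merge patch: keeps app=nginx and sets the version key.
  kubectl patch service nginx-service \
    -p "{\"spec\":{\"selector\":{\"app\":\"nginx\",\"version\":\"$color\"}}}"
}
```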

Canary Releases

Deploy the new version to a small subset of users first. This allows you to monitor its performance and catch issues before a full rollout.
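A common way to do this in plain Kubernetes is a second, small Deployment whose pods share the Service's `app: nginx` label, so `nginx-service` spreads requests across stable and canary pods. With the demo's 4 stable replicas and 1 canary, roughly one request in five reaches the new version (the distribution is approximate, not an exact split). A sketch; `nginx-canary`, the `track: canary` label, and `apply_canary` are hypothetical names. Strictly, the demo Deployment's `app: nginx` selector also matches the canary pods, and Kubernetes warns against overlapping selectors; for real use, give the stable Deployment its own distinguishing label (for example `track: stable`), which means recreating it, since a Deployment's selector cannot be changed.

```shell
#!/bin/sh
# apply_canary (hypothetical helper): run one pod of the new image next to
# nginx-deployment. Its pods keep app: nginx, so nginx-service routes a share
# of requests to them; track: canary keeps this Deployment's selector distinct.
#   $1 = image to canary, e.g. nginx:1.19.1
apply_canary() {
  kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-canary
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      track: canary
  template:
    metadata:
      labels:
        app: nginx
        track: canary
    spec:
      containers:
      - name: nginx
        image: $1
        ports:
        - containerPort: 80
EOF
}
```

`apply_canary nginx:1.19.1` starts the canary; `kubectl delete deployment nginx-canary` removes it if it misbehaves.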

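At its simplest, the canary is a separate Deployment of the new version running a single replica:

```yaml
replicas: 1
```

Graceful Shutdowns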

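Ensure that your application can handle graceful shutdowns to avoid disrupting ongoing processes or transactions. A short `preStop` sleep, for example, gives the Service time to stop sending new requests to a pod before its containers are told to stop.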
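When a pod is deleted, removing it from the Service's endpoints and stopping its containers happen in parallel, so a pod can briefly receive new requests while it is shutting down; a short `preStop` sleep covers that gap. A sketch that adds the hook to the running demo Deployment with a strategic merge patch; `terminationGracePeriodSeconds: 30` (also the Kubernetes default) bounds the total shutdown time, including the hook. `add_prestop_sleep` is hypothetical, and the patch changes the Pod template, so it rolls out like any other update.

```shell
#!/bin/sh
# add_prestop_sleep (hypothetical helper): add a 5-second preStop sleep to the
# nginx container. Strategic merge patches match containers by name.
add_prestop_sleep() {
  kubectl patch deployment nginx-deployment -p '{
    "spec": {"template": {"spec": {
      "terminationGracePeriodSeconds": 30,
      "containers": [{
        "name": "nginx",
        "lifecycle": {"preStop": {"exec": {"command": ["/bin/sh", "-c", "sleep 5"]}}}
      }]
    }}}
  }'
}
```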

```yaml
lifecycle:
  preStop:
    exec:
      command: ["/bin/sh", "-c", "sleep 5"]
```

Rollback Plans

Always have a rollback plan. If something goes wrong, you should be able to quickly revert to the previous stable version.
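Deployments keep old ReplicaSets as revisions (10 by default, controlled by `revisionHistoryLimit`), so a rollback plan can target a specific known-good revision rather than only the previous one. A sketch; both helper names are hypothetical, and revision numbers come from your own `kubectl rollout history` output.

```shell
#!/bin/sh
# show_history (hypothetical helper): list recorded revisions and their
# change-cause annotations.
show_history() {
  kubectl rollout history deployment/nginx-deployment
}

# rollback_to (hypothetical helper): roll back to a given revision number,
# then wait until the resulting rollout completes.
#   $1 = revision number from show_history
rollback_to() {
  case "$1" in
    ''|*[!0-9]*) echo "usage: rollback_to <revision-number>" >&2; return 2 ;;
  esac
  kubectl rollout undo deployment/nginx-deployment --to-revision="$1" || return 1
  kubectl rollout status deployment/nginx-deployment --timeout=180s
}
```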

kubectl rollout undo deployment/nginx-deployment

Conclusion:

Key Findings & Benefits:

Kubernetes rolling updates offer a seamless solution for updating applications without service disruptions. This incremental approach ensures continuous availability during updates, minimising interruptions and enabling efficient continuous delivery. Compared to traditional methods, rolling updates reduce downtime, enhance cost efficiency, and provide scalability.

Insights & Recommendations:

Based on our experience, it's vital to monitor deployments, implement health checks, and test updates in staging environments. Mitigating challenges like unhealthy pods and configuration errors requires strategic approaches such as blue-green deployments and canary releases. By following best practices, we ensure smooth deployments and resilient operations.

Thanks for reading! I hope this article helps you. Feel free to leave feedback and follow for more content like this.


Written by

Hemanth Gangula


🚀 Passionate about cloud and DevOps, I'm a technical writer at Hashnode, dedicated to crafting insightful blogs on cutting-edge topics in cloud computing and DevOps methodologies. Actively seeking opportunities in the DevOps domain, I bring a blend of expertise in AWS, Docker, CI/CD pipelines, and Kubernetes, coupled with a knack for automation and innovation. With a strong foundation in shell scripting and GitHub collaboration, I aspire to contribute effectively to forward-thinking teams, revolutionizing development pipelines with my skills and drive for excellence. #DevOps #AWS #Docker #CI/CD #Kubernetes #CloudComputing #TechnicalWriter