flAWS Walkthrough: Level 1 - Level 3

Akanksha Giri

LEVEL 1

In Level 1 of the flAWS challenge, we explore AWS S3 buckets and their security implications. The key goal in this level is to uncover the first sub-domain by understanding how S3 bucket permissions work and how they can be misconfigured.

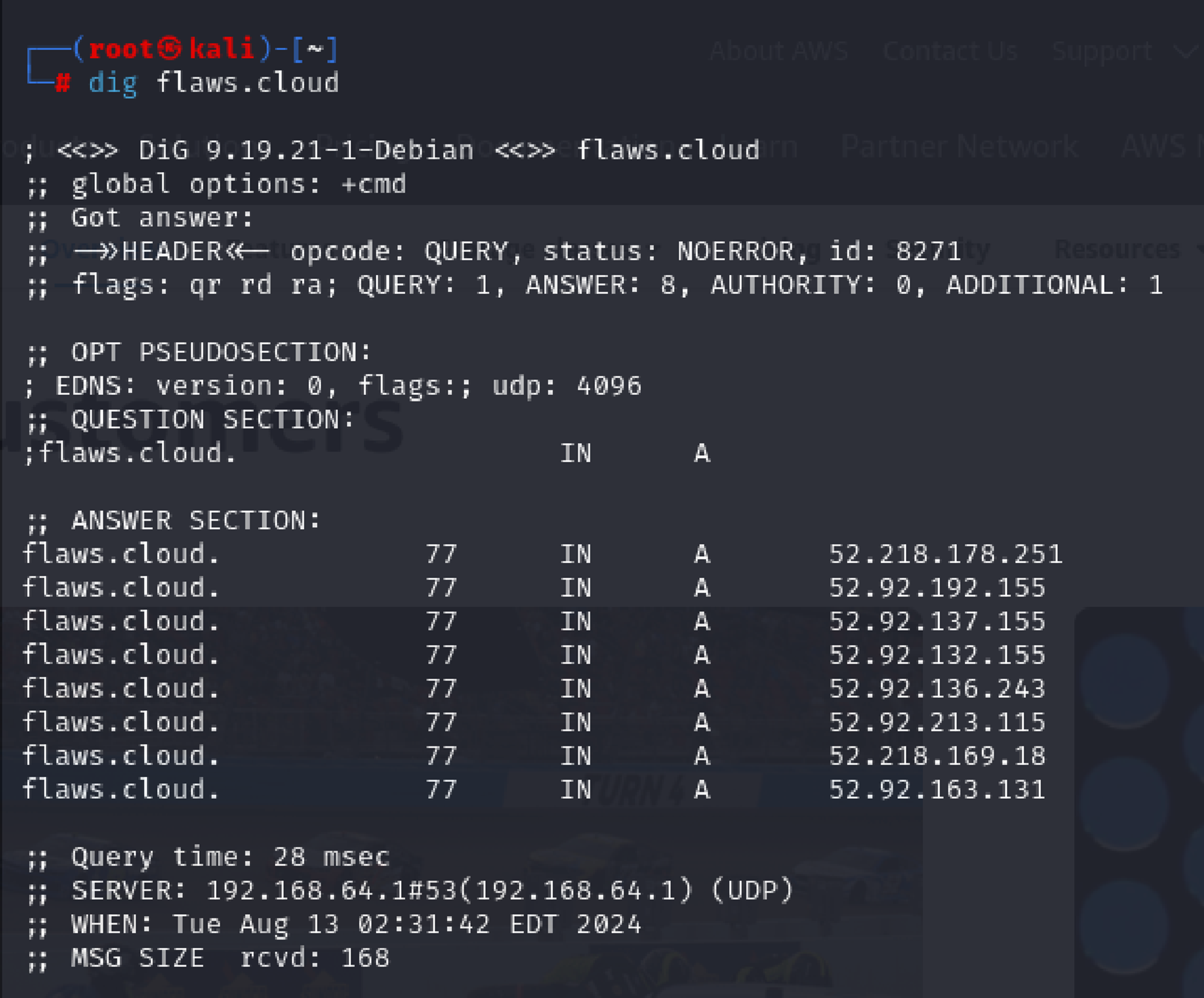

Let's start by determining where the site is hosted.

The dig command queries DNS (Domain Name System) servers, which act like the phone book of the internet, and returns the IP address that a domain name resolves to.

dig flaws.cloud

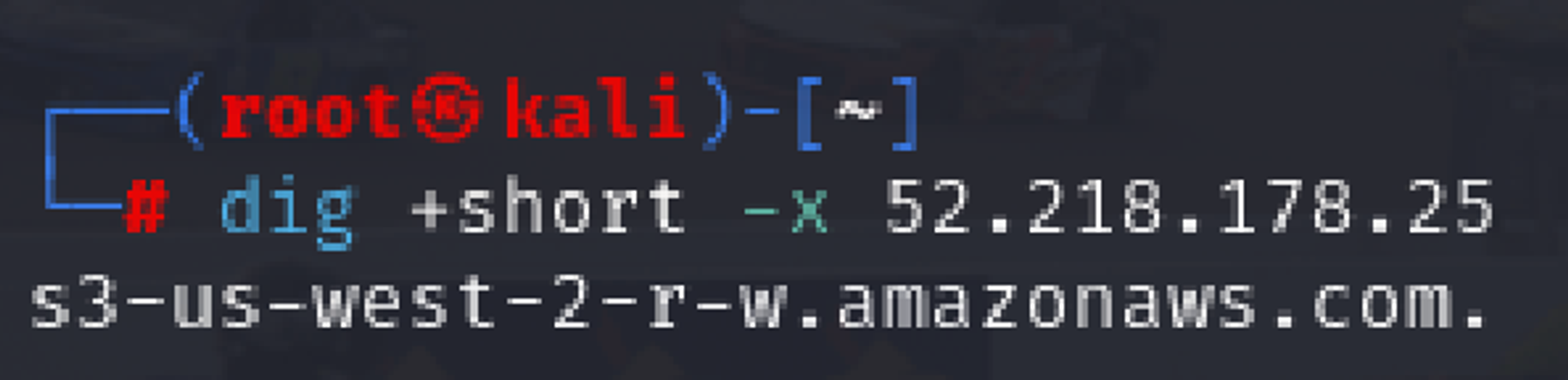

Use the obtained IP address to perform a reverse DNS lookup. A reverse DNS lookup finds the domain name associated with a given IP address.

dig +short -x 52.218.178.251   # the -x option performs a reverse DNS lookup for the specified IP address

The result, s3-website-us-west-2.amazonaws.com, indicates that the IP is associated with an Amazon S3 website endpoint in the us-west-2 AWS region.

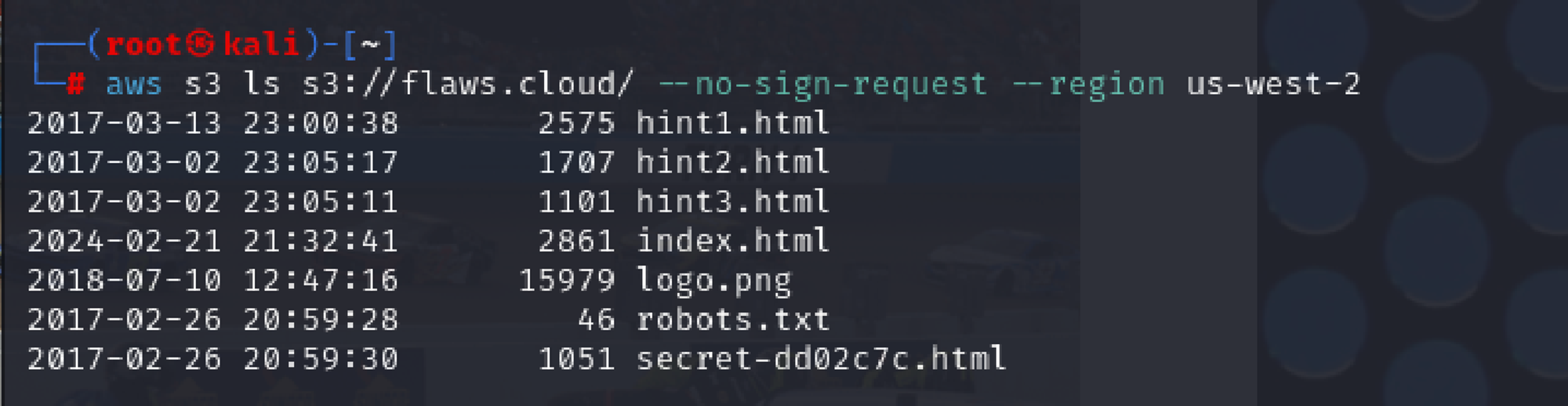
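The region can also be pulled out of the reverse DNS name programmatically. The snippet below is a minimal sketch, not part of the original challenge: the function name `s3_website_region` is my own, and the pattern only assumes the two S3 website endpoint styles I know of (a dash or a dot between `s3-website` and the region).

```python
import re

# Matches S3 static-website endpoint hostnames, with or without a bucket
# prefix and with or without the trailing dot that dig prints.
_S3_WEBSITE_RE = re.compile(
    r"(?:^|\.)s3-website[.-]([a-z0-9-]+)\.amazonaws\.com\.?$"
)


def s3_website_region(hostname):
    """Return the region encoded in an S3 website endpoint, or None."""
    match = _S3_WEBSITE_RE.search(hostname.strip().lower())
    return match.group(1) if match else None


if __name__ == "__main__":
    print(s3_website_region("s3-website-us-west-2.amazonaws.com."))
```

Knowing the region matters for the next step, because the AWS CLI is told which region to talk to with --region.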

Let's list the contents of the S3 bucket using the AWS CLI.

aws s3 ls s3://flaws.cloud/ --no-sign-request --region us-west-2

#--no-sign-request: This option allows you to make the request without providing any AWS credentials (no authentication). It’s useful when the bucket is publicly accessible and does not require signed requests.
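Under the hood, an unsigned listing request comes back as an XML document (a ListBucketResult). The sketch below shows how the object keys could be extracted from such a response. It is only an illustration: the `SAMPLE` document is hand-written and trimmed, not captured output, and `index.html` is an assumed entry; only `secret-dd02c7c.html` comes from this walkthrough.

```python
import xml.etree.ElementTree as ET

# XML namespace used by S3 listing responses.
S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}

# Hand-written, trimmed sample of a listing response (illustrative only).
SAMPLE = (
    '<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">'
    "<Name>flaws.cloud</Name>"
    "<Contents><Key>index.html</Key></Contents>"
    "<Contents><Key>secret-dd02c7c.html</Key></Contents>"
    "</ListBucketResult>"
)


def list_keys(list_bucket_xml):
    """Return every object key found in a ListBucketResult document."""
    root = ET.fromstring(list_bucket_xml)
    return [el.text for el in root.findall("s3:Contents/s3:Key", S3_NS)]


if __name__ == "__main__":
    for key in list_keys(SAMPLE):
        print(key)
```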

Among the files listed in the bucket, secret-dd02c7c.html is the odd one out, so let's access it.

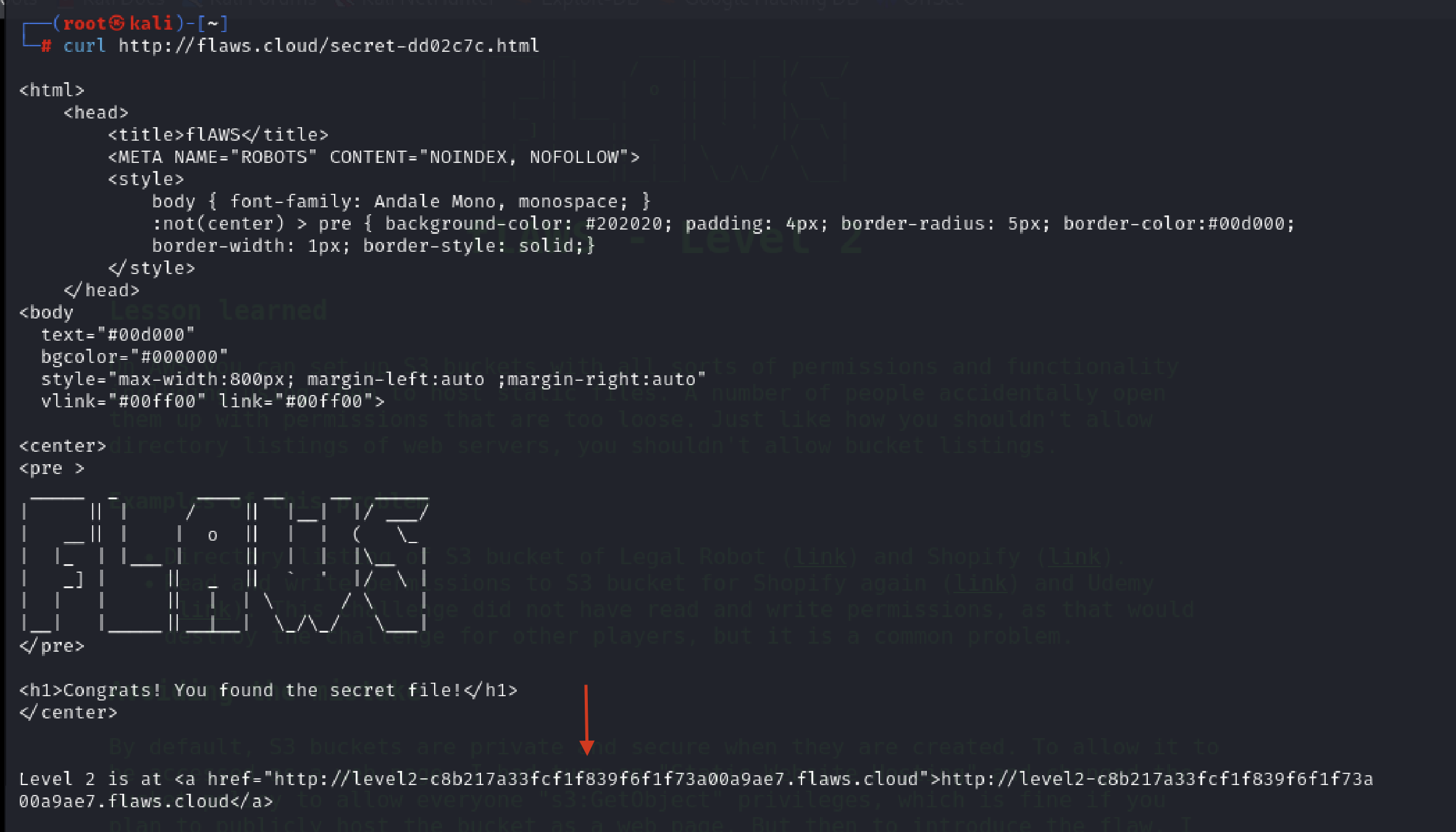

curl http://flaws.cloud/secret-dd02c7c.html

Clicking the link on that page takes us to Level 2.

Key Takeaways:

What was wrong:

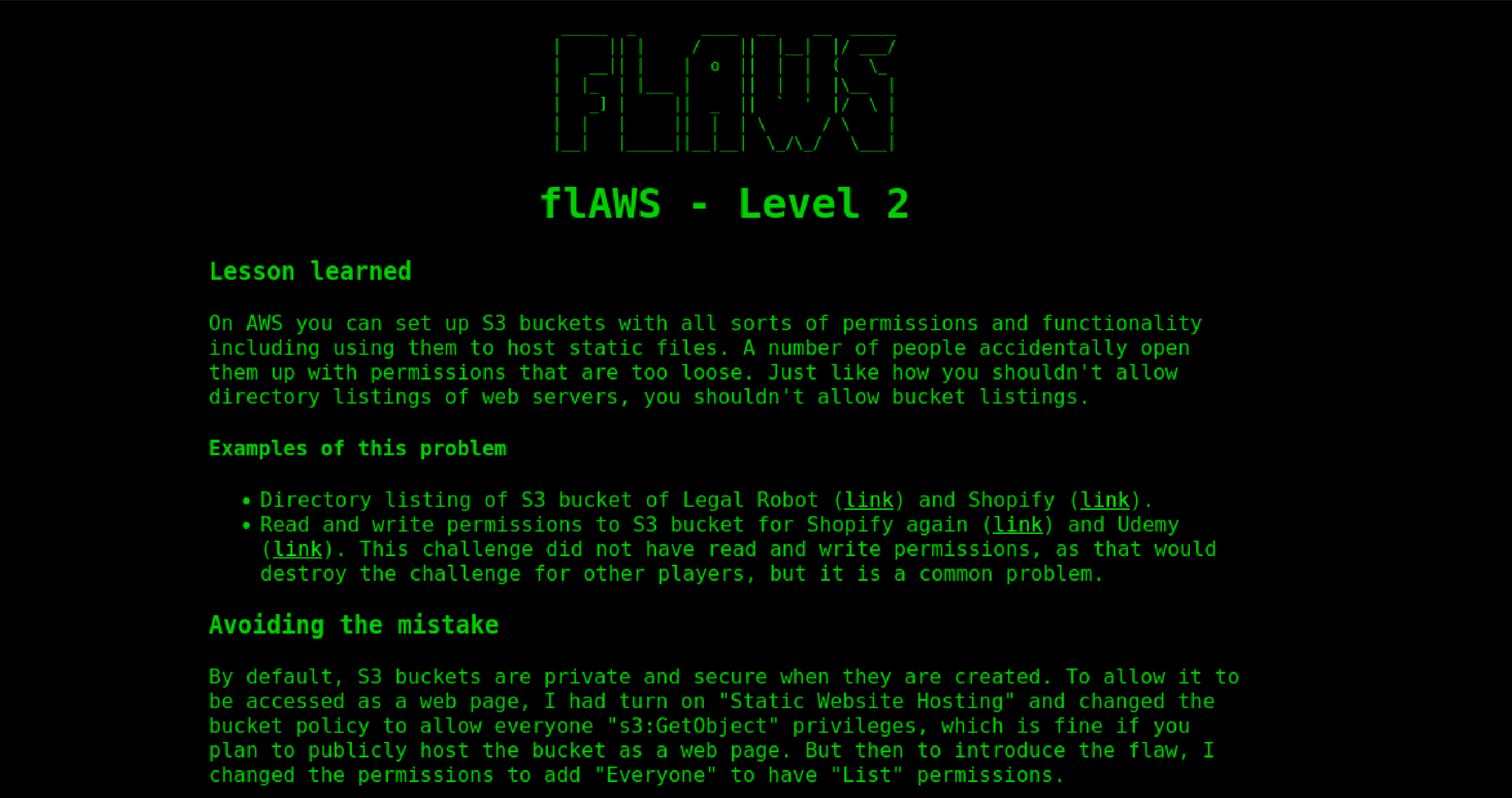

In this challenge, I discovered that the flaws.cloud S3 bucket was configured with overly permissive settings, specifically allowing public "List" permissions. This meant that anyone on the internet could view the contents of the bucket by simply visiting the URL http://flaws.cloud.s3.amazonaws.com/ .

What Could Have Been Done to Prevent It:

For a public-facing website, only the "s3:GetObject" permission should be granted to the public. This allows users to access specific files without exposing the entire file listing.

Review and audit bucket policies: regularly reviewing and auditing S3 bucket policies helps ensure that permissions are not overly broad and align with security best practices.
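Part of such an audit can be automated. The following is a rough sketch of a check that flags bucket policy statements letting anyone list a bucket. The function name and the example policy are hypothetical, written for illustration rather than taken from flaws.cloud, and the check deliberately ignores Condition blocks and wildcard patterns such as "s3:List*".

```python
import json


def public_list_statements(policy_json):
    """Return the Allow statements that let any principal list the bucket."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]

    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        is_public = principal == "*" or (
            isinstance(principal, dict) and principal.get("AWS") in ("*", ["*"])
        )
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        allows_list = any(a in ("s3:ListBucket", "s3:*", "*") for a in actions)
        if is_public and allows_list:
            flagged.append(stmt)
    return flagged


# Hypothetical policy: public read of objects is fine, public listing is not.
EXAMPLE_POLICY = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Sid": "PublicList", "Effect": "Allow", "Principal": "*",
         "Action": "s3:ListBucket", "Resource": "arn:aws:s3:::example-bucket"},
    ],
})

if __name__ == "__main__":
    for stmt in public_list_statements(EXAMPLE_POLICY):
        print("Publicly listable via statement:", stmt.get("Sid"))
```

A policy with only the "PublicRead" statement would pass this check, which matches the recommendation above.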

LEVEL 2

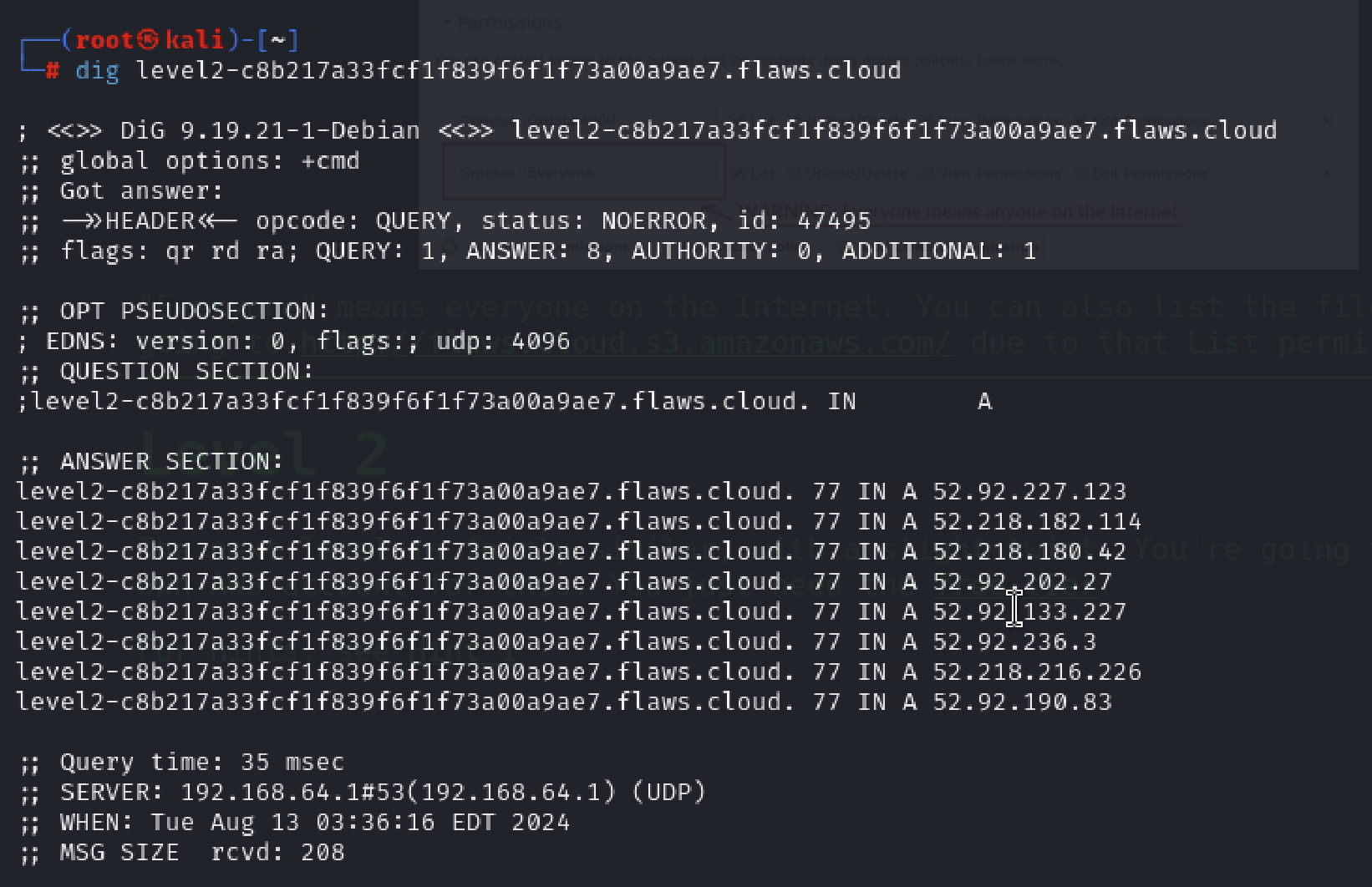

First, we follow the same steps as in Level 1 to identify the sub-domain.

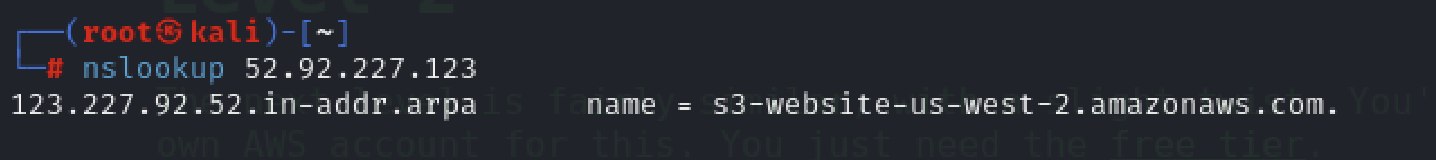

When I tried to retrieve a list of the contents within this bucket, much like the previous action in Level 1, I received a different response, indicating ‘Access Denied’ when trying to list S3 objects.

It’s possible that a policy is set up to verify the S3 permissions of the caller.

To address this, we can create an IAM User or Role with full S3 permissions within our account and use their credentials to try listing the S3 bucket contents again.

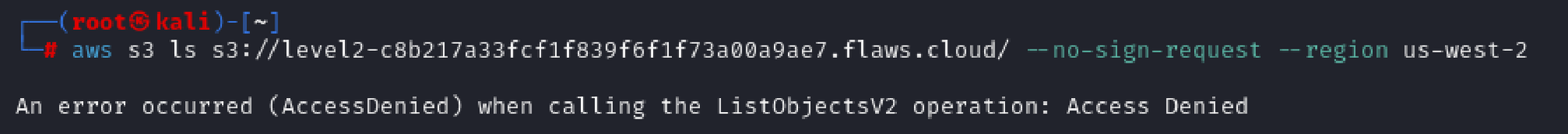

I used my AWS Learner Lab console, which has full S3 permissions, to perform these tasks.

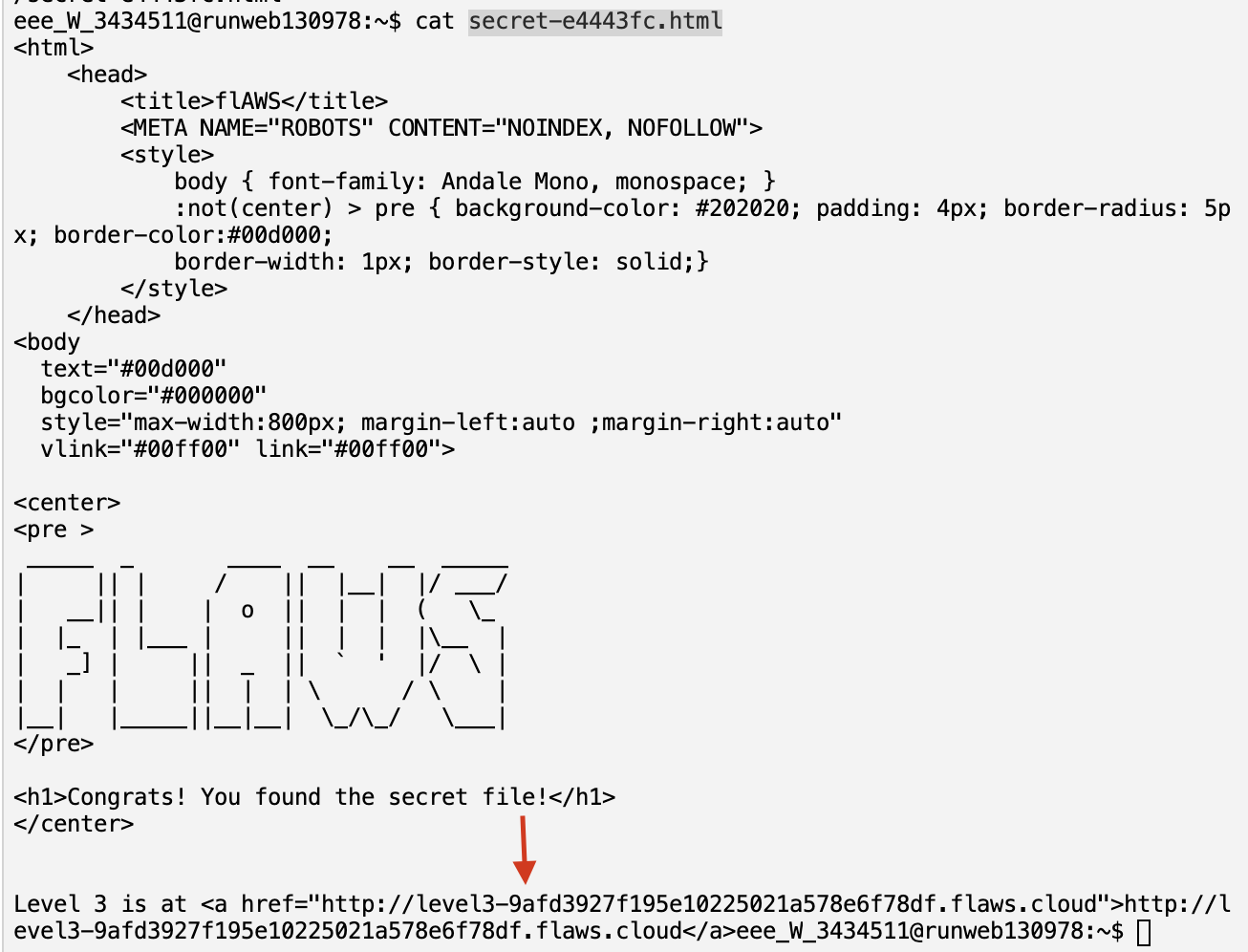

I downloaded the files and displayed the secret-e4443fc.html file using commands:

aws s3 sync s3://level2-c8b217a33fcf1f839f6f1f73a00a9ae7.flaws.cloud/ .

cat secret-e4443fc.html

Bingo! We've reached Level 3.

Key Takeaways:

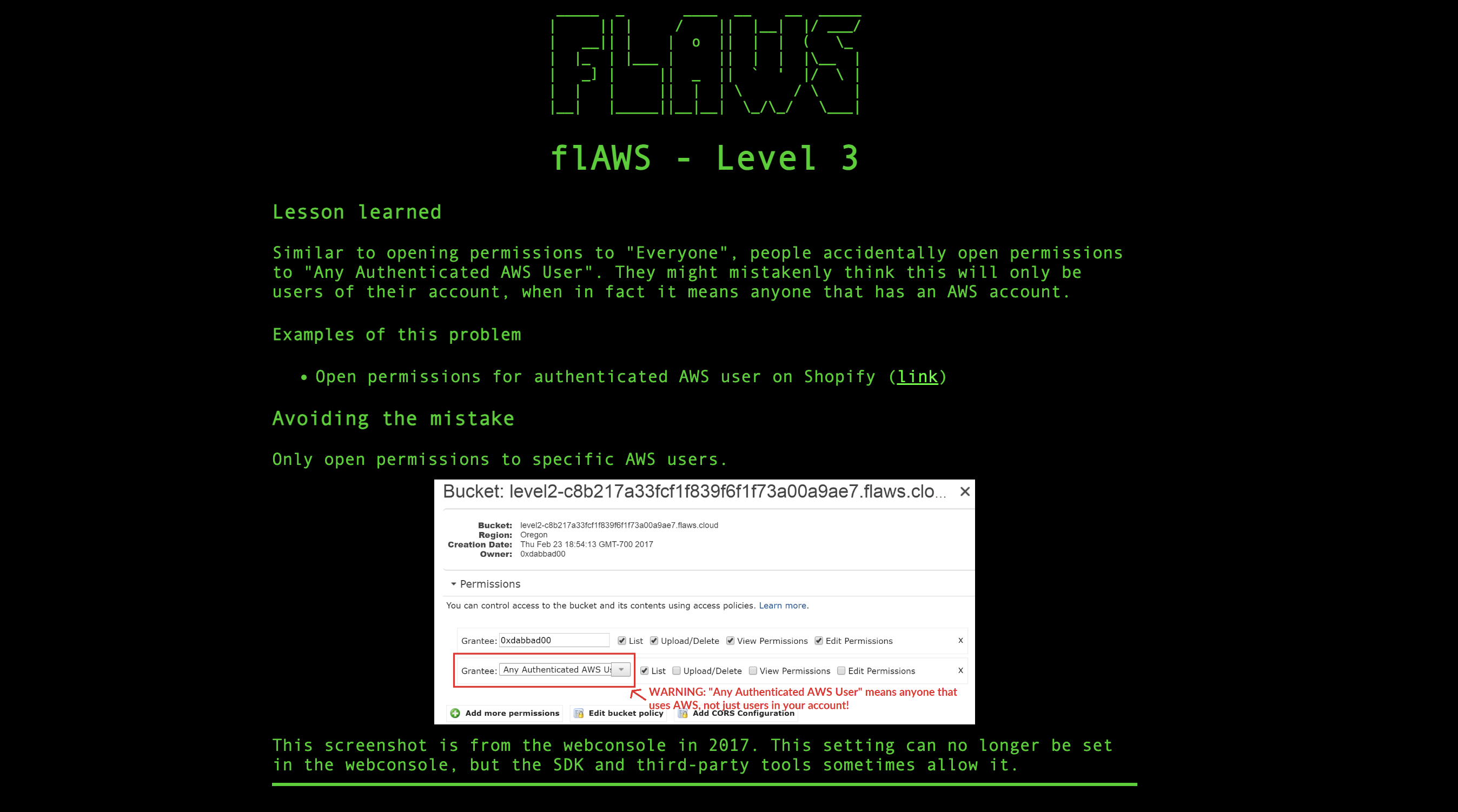

What was wrong:

Similar to mistakenly setting permissions to "Everyone," some people accidentally grant permissions to "Any Authenticated AWS User." This broader permission actually includes anyone with an AWS account, not just users within their own account.

What Could Have Been Done to Prevent It:

To avoid this mistake, use IAM policies to restrict permissions to specific AWS users rather than granting access to "Any Authenticated AWS User".
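In a bucket ACL, "Everyone" and "Any Authenticated AWS User" appear as grants to two predefined groups, identified by URI. The sketch below flags such grants in an ACL shaped like the dictionary that boto3's get_bucket_acl returns. The function name and the sample ACL are my own illustrations, not the actual Level 2 configuration.

```python
# URIs of the predefined S3 groups behind the two risky console options.
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTHENTICATED_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"

_LABELS = {
    ALL_USERS: "Everyone",
    AUTHENTICATED_USERS: "Any Authenticated AWS User",
}


def risky_grants(acl):
    """Return (group label, permission) pairs for grants to the global groups."""
    findings = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        label = _LABELS.get(grantee.get("URI"))
        if grantee.get("Type") == "Group" and label:
            findings.append((label, grant.get("Permission")))
    return findings


# Hypothetical ACL: the owner has full control, and any AWS account can read.
SAMPLE_ACL = {
    "Grants": [
        {"Grantee": {"Type": "CanonicalUser", "ID": "owner-id-placeholder"},
         "Permission": "FULL_CONTROL"},
        {"Grantee": {"Type": "Group", "URI": AUTHENTICATED_USERS},
         "Permission": "READ"},
    ]
}

if __name__ == "__main__":
    for label, permission in risky_grants(SAMPLE_ACL):
        print(f"{label} has {permission}")
```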

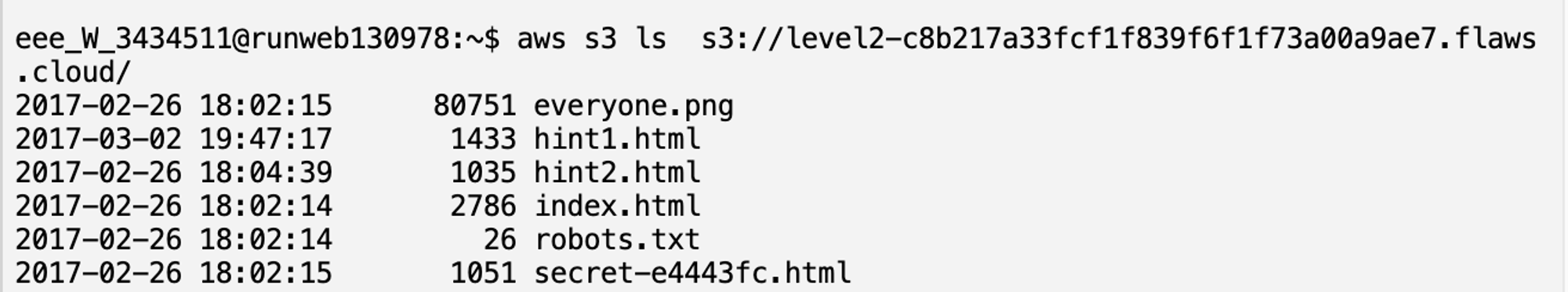

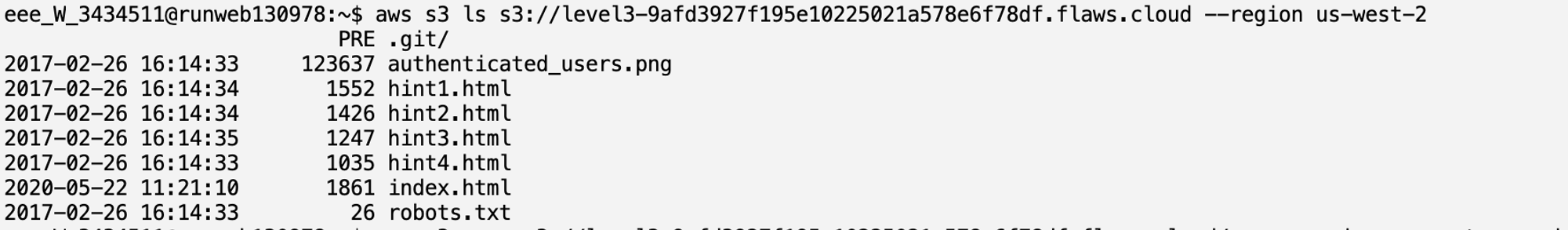

Let's start off by listing the contents of the Level 3 S3 bucket.

This S3 bucket contains a .git directory, which probably holds something interesting. I downloaded the contents of the bucket and listed all files and directories, including hidden ones.

aws s3 sync s3://level3-9afd3927f195e10225021a578e6f78df.flaws.cloud/ . --no-sign-request --region us-west-2

ls -la

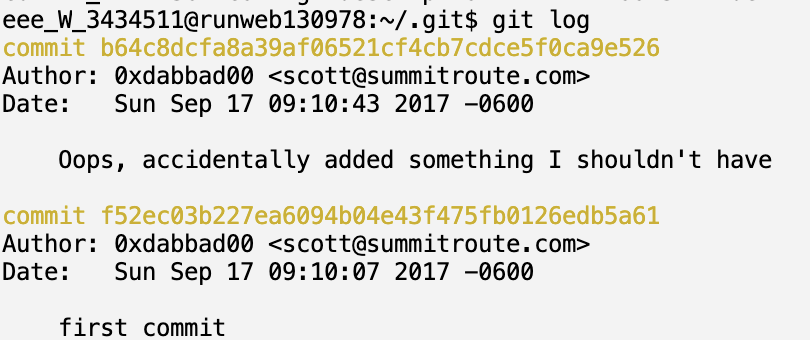

People often accidentally add secrets to git repos and then try to remove them without revoking or rotating those secrets. I looked through the history of the git repo by running:

git log

It appears there are two commits in the history. To read the changes made in a commit, we use the following command with its hash:

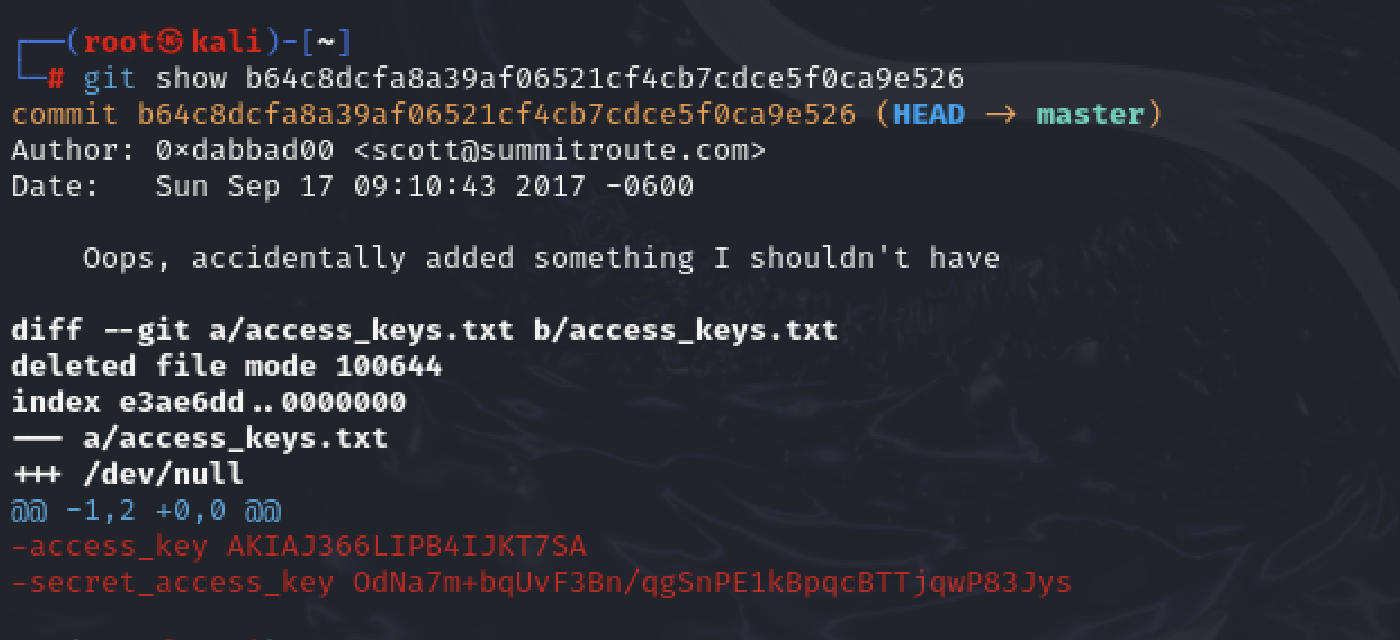

git show b64c8dcfa8a39af06521cf4cb7cdce5f0ca9e526

There it is: I found the access key ID and the secret access key. Now I'll use the discovered credentials to log in as a user.

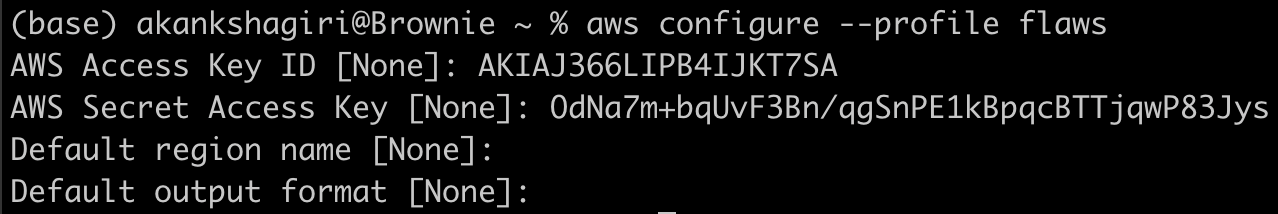
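Reading commits by hand works for a short history like this one. For a longer history, the text of the commits could be scanned for strings that look like access key IDs. The snippet below is a minimal sketch under that assumption: the function name is mine, the pattern relies on the common "AKIA"/"ASIA" prefix followed by 16 uppercase letters or digits, and the key in the example is the placeholder used in AWS documentation, not the one from the challenge.

```python
import re

# Access key IDs commonly start with AKIA (long-term) or ASIA (temporary)
# followed by 16 uppercase letters or digits.
ACCESS_KEY_ID_RE = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_access_key_ids(text):
    """Return the distinct access-key-ID-like strings found in text, sorted."""
    return sorted(set(ACCESS_KEY_ID_RE.findall(text)))


# Illustrative diff fragment using the AWS documentation placeholder key.
EXAMPLE_DIFF = """\
-access_key AKIAIOSFODNN7EXAMPLE
-secret_access_key <redacted>
"""

if __name__ == "__main__":
    print(find_access_key_ids(EXAMPLE_DIFF))
```

The text to scan could come from saving the output of git log -p to a file and passing its contents to `find_access_key_ids`.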

To create a new profile with the keys retrieved from the repository:

aws configure --profile <profile_name>

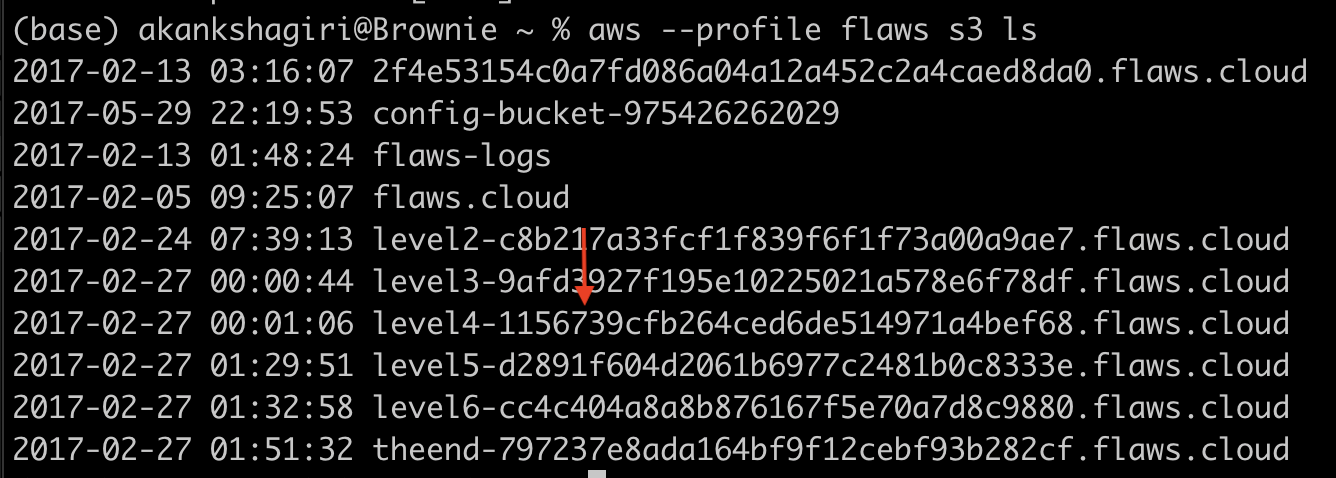
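For context, aws configure stores the keys in an INI-style credentials file (by default ~/.aws/credentials), in a section named after the profile. The sketch below renders what such a section looks like. The profile name "flaws" and the key values are placeholders I chose for illustration, and the function only builds the text; it does not touch any real file.

```python
import configparser
import io


def render_profile(profile_name, access_key_id, secret_access_key):
    """Return the INI text for one named profile in a credentials file."""
    # interpolation=None keeps '%' characters in values from being interpreted.
    config = configparser.ConfigParser(interpolation=None)
    config[profile_name] = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    # Placeholder values only; substitute the keys recovered from the commit.
    print(render_profile("flaws", "<access_key_id>", "<secret_access_key>"))
```

Commands can then select this profile with the --profile option, as in the next step.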

Then I listed the S3 buckets using the new profile with the discovered credentials, and the output revealed the Level 4 bucket.

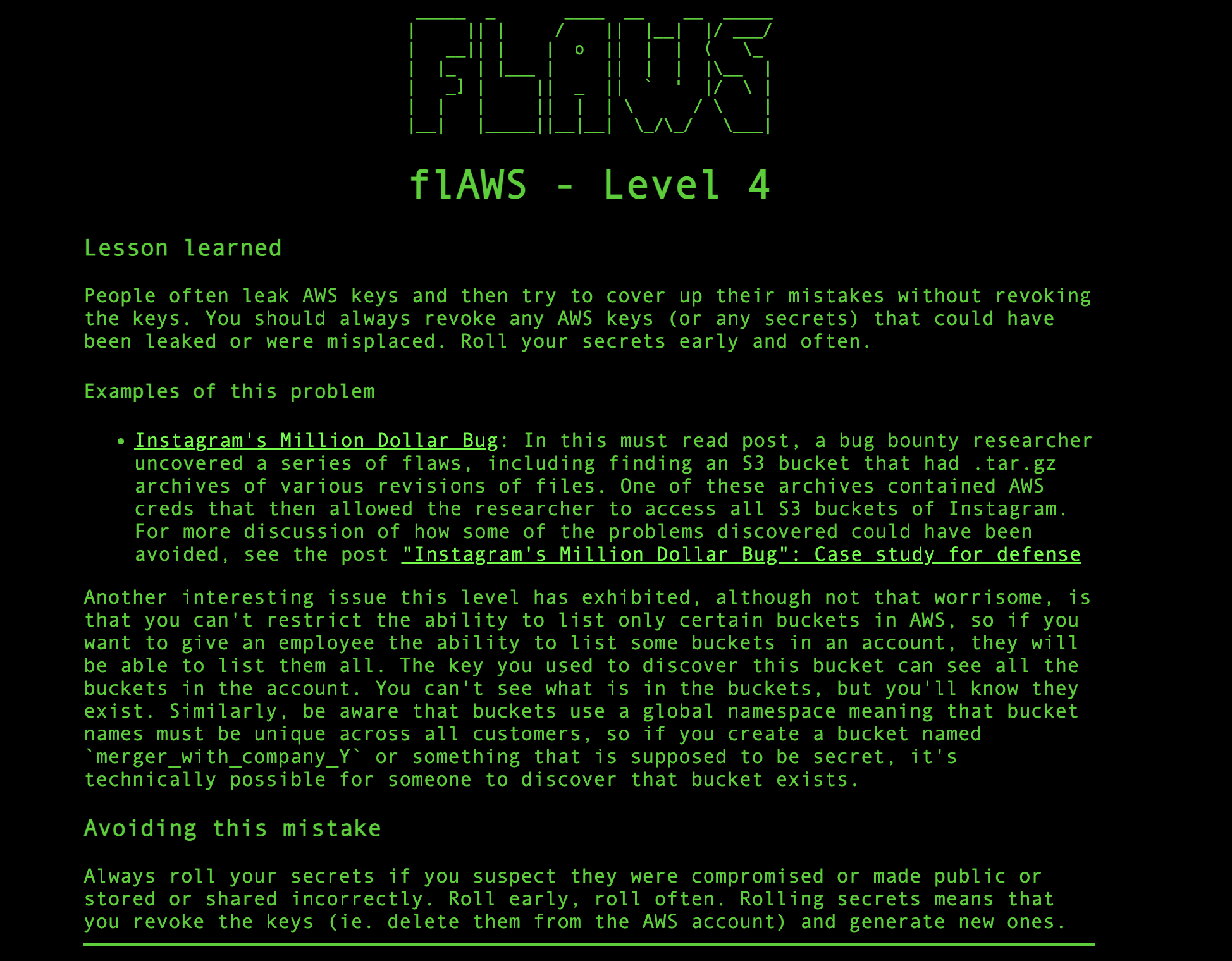

Key Takeaways:

What was wrong:

People often accidentally share AWS access keys publicly (for example, in a Git repository) and then try to fix the mistake without disabling the exposed keys. This can allow unauthorized users to access your AWS resources.

In addition, if you give someone permission to list S3 buckets in AWS, they can see all buckets in your account, which might reveal sensitive information. For example, in this challenge, listing the buckets with the Level 3 credentials showed all the buckets that exist (levels 5, 6, and 7). Even though we couldn't see inside these buckets, knowing they exist can still be a security threat.

What Could've Been Done?

If you think AWS keys or other secrets have been exposed or shared by mistake, revoke them immediately and generate new ones to protect your account.

Regularly update and change AWS keys and other secrets to minimize risk if they are accidentally exposed.

Name your buckets and resources in a way that doesn't reveal sensitive information. Avoid using names that could hint at confidential projects or data.
