Building a Retrieval-Augmented Generation (RAG) Pipeline with NVIDIA NIM, Azure AI Search, and LlamaIndex

Farzad Sunavala

Want to win over $9,000 in cash prizes? Maybe even an NVIDIA® GeForce RTX™ 4080 SUPER GPU? This blog is for you!

NVIDIA and LlamaIndex have partnered to launch an exciting LlamaIndex Developer Contest. I won't be participating, but I will help as many people as I can build cool s*** with Azure AI Search.

In this blog, I'll guide you through creating a powerful Retrieval-Augmented Generation (RAG) pipeline using NVIDIA's AI models, Azure AI Search, and LlamaIndex. This process will equip you with the skills to integrate cutting-edge technology into your projects, giving you a competitive edge in the LlamaIndex <> NVIDIA Partnership Hackathon. With over $9,000 in cash prizes, hardware, credits, and more up for grabs, this is an opportunity you won't want to miss!

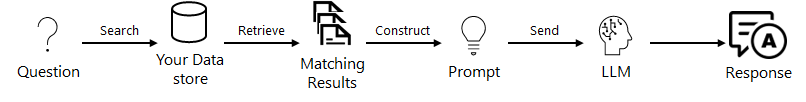

Intro: What in the world is RAG?

Retrieval-Augmented Generation (RAG) is a method that enhances the performance of large language models (LLMs) by integrating external data sources into their responses. It works by retrieving relevant documents or data based on a query, and then grounding the generated response in this contextually relevant information. This approach improves the accuracy, relevance, and factual grounding of LLM outputs.
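
To make the retrieve-then-ground idea concrete, here is a minimal sketch in plain Python. It is a toy, not the pipeline built later in this post: word overlap stands in for a real retriever, no LLM is called, and the helper names (tokenize, retrieve, build_prompt) are hypothetical names I made up for illustration.

```python
# Toy RAG loop: retrieve the most relevant snippets, then build a grounded prompt.
# Word overlap stands in for a real retriever; no LLM is called here.


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the question in the retrieved context."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


documents = [
    "Azure AI Search supports vector and full-text search.",
    "LlamaIndex is a framework for indexing and querying data.",
    "Bananas are rich in potassium.",
]
question = "What does Azure AI Search support?"
prompt = build_prompt(question, retrieve(question, documents, top_k=1))
print(prompt)
```

In a real pipeline the prompt would be sent to an LLM, and the retriever would be a search service rather than a word-overlap count.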

What is Azure AI Search?

Azure AI Search is a retrieval system built to deliver high-quality search results for generative AI scenarios such as RAG. It offers vector search, full-text search, hybrid search that combines the two, faceted navigation, and reranking powered by Microsoft Bing. It's a great fit for RAG pipelines where embeddings are used to improve search and retrieval accuracy.

Read more here: Introduction to Azure AI Search - Azure AI Search | Microsoft Learn

What is NVIDIA API Catalog / NIM?

The NVIDIA API Catalog provides access to a range of AI models and services, including large language models, embeddings, and other AI-powered tools. The NVIDIA NIM microservices are part of this catalog, enabling developers to integrate these advanced models into their applications via APIs.

Featured models we'll be using:

Phi-3.5-MOE-instruct: A state-of-the-art model optimized for instruction-following tasks, ideal for generating contextually accurate and relevant outputs in RAG pipelines.

nv-embedqa-e5-v5: An embedding model designed to create high-quality vector representations of text, crucial for efficient and accurate information retrieval.
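
Why do vector representations matter for retrieval? Texts with similar meaning end up with vectors that point in similar directions, so a query can be matched to documents by comparing vectors. Here is a minimal sketch using cosine similarity on made-up three-dimensional vectors. The numbers are invented for illustration; real embeddings from nv-embedqa-e5-v5 have 1024 dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors, not output from a real embedding model.
query_vec = [1.0, 0.0, 1.0]
doc_vecs = {
    "doc_a": [1.0, 0.0, 1.0],
    "doc_b": [0.0, 1.0, 0.0],
}

scores = {name: cosine_similarity(query_vec, vec) for name, vec in doc_vecs.items()}
best = max(scores, key=scores.get)
print(best, scores)
```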

What is LlamaIndex?

LlamaIndex is a framework that facilitates the indexing, storage, and querying of data for use in AI applications. It allows seamless integration with various vector stores, like Azure AI Search, and supports the use of NVIDIA models to power advanced search and retrieval functionalities.

Step-By-Step RAG Quickstart w/Azure AI Search, NVIDIA, and LlamaIndex

In this section, we’ll demonstrate how to set up a RAG pipeline using NVIDIA’s AI models and LlamaIndex, integrated with Azure AI Search.

Step 1: Installation and Requirements

First, set up your Python environment and install the necessary packages:

!pip install azure-search-documents==11.5.1

!pip install --upgrade llama-index

!pip install --upgrade llama-index-core

!pip install --upgrade llama-index-readers-file

!pip install --upgrade llama-index-llms-nvidia

!pip install --upgrade llama-index-embeddings-nvidia

!pip install --upgrade llama-index-vector-stores-azureaisearch

!pip install python-dotenv
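
If you want to confirm the installs landed in the environment your notebook is actually using, a small check like the following can help. This is a sketch using only the standard library; the installed_versions helper is a hypothetical name of mine, and the package list simply mirrors the pip commands above.

```python
from importlib import metadata
from typing import Optional

# Distribution names taken from the pip commands above.
PACKAGES = [
    "azure-search-documents",
    "llama-index",
    "llama-index-core",
    "llama-index-readers-file",
    "llama-index-llms-nvidia",
    "llama-index-embeddings-nvidia",
    "llama-index-vector-stores-azureaisearch",
    "python-dotenv",
]


def installed_versions(packages: list[str]) -> dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if missing."""
    versions: dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions(PACKAGES).items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```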

Step 2: Getting Started with Variables

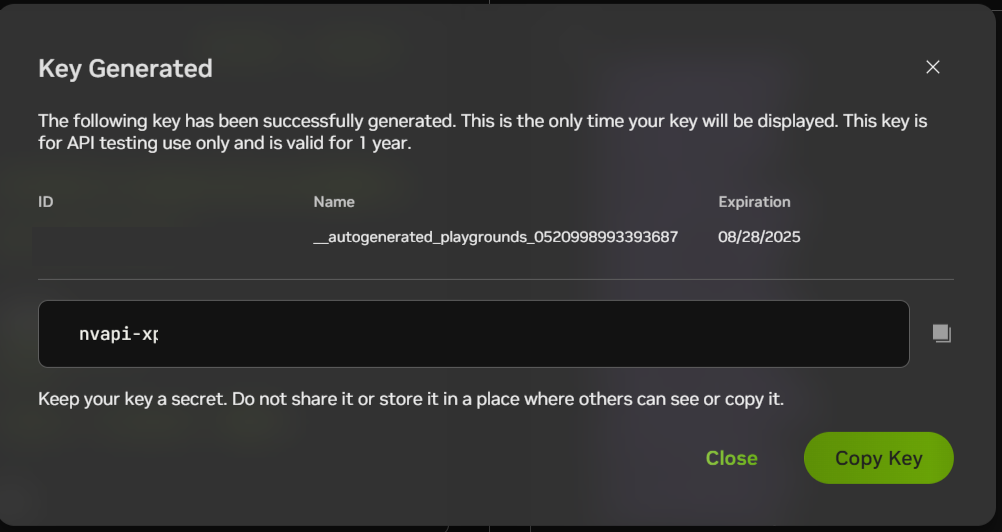

To use NVIDIA's AI models, you'll need an API key.

Create a free account with NVIDIA.

Choose your model.

Generate and save your API key.

import getpass

import os

from dotenv import load_dotenv

# Load environment variables

load_dotenv()

if not os.environ.get("NVIDIA_API_KEY", "").startswith("nvapi-"):

    nvidia_api_key = getpass.getpass("Enter your NVIDIA API key: ")

    assert nvidia_api_key.startswith("nvapi-"), f"{nvidia_api_key[:5]}... is not a valid key"

    os.environ["NVIDIA_API_KEY"] = nvidia_api_key
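
Since this pipeline relies on a few settings (the NVIDIA key here, plus the Azure AI Search key and endpoint read in Step 5), it can be handy to check them all up front. Below is a minimal sketch; the variable names come from this post, while the missing_settings and looks_like_nvidia_key helpers are hypothetical names I chose for illustration.

```python
import os
from typing import Mapping

# Environment variable names used in this post.
REQUIRED_SETTINGS = (
    "NVIDIA_API_KEY",
    "AZURE_SEARCH_ADMIN_KEY",
    "AZURE_SEARCH_SERVICE_ENDPOINT",
)


def missing_settings(env: Mapping[str, str]) -> list[str]:
    """Return the names of required settings that are absent or empty."""
    return [name for name in REQUIRED_SETTINGS if not env.get(name)]


def looks_like_nvidia_key(value: str) -> bool:
    """Check the 'nvapi-' prefix without printing any part of the key."""
    return value.startswith("nvapi-")


missing = missing_settings(os.environ)
print("Missing settings:", missing or "none")
```

Anything reported as missing can go in your .env file, which load_dotenv() picks up, or be entered at the getpass prompts.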

Step 3: Initialize the LLM (Large Language Model)

We’ll use the llama-index-llms-nvidia connector to connect to NVIDIA’s models.

Side note: I was surprised to see the new Phi-3.5-MOE in beta on NIM within 48 hours of the public launch. The LLM/SLM space is RED-HOT!

Phi-3.5-MoE uses 16 small experts to deliver high-quality output at reduced latency. It supports a 128k context length and multiple languages, and comes with strong safety measures. It surpasses larger models and can be customized for various applications through fine-tuning, all while staying efficient with 6.6B active parameters. We can use any model in the NVIDIA API Catalog, but let's check out this one!

from llama_index.core import Settings

from llama_index.llms.nvidia import NVIDIA

# Using the Phi-3.5-MOE-instruct model from the NVIDIA API Catalog

Settings.llm = NVIDIA(model="microsoft/phi-3.5-moe-instruct", api_key=os.getenv("NVIDIA_API_KEY"))

Step 4: Initialize the Embedding

Next, set up the embedding model with llama-index-embeddings-nvidia.

from llama_index.embeddings.nvidia import NVIDIAEmbedding

Settings.embed_model = NVIDIAEmbedding(model="nvidia/nv-embedqa-e5-v5", api_key=os.getenv("NVIDIA_API_KEY"))

Step 5: Create an Azure AI Search Vector Store

Integrate with Azure AI Search by creating a vector store.

from azure.core.credentials import AzureKeyCredential

from azure.search.documents import SearchClient

from azure.search.documents.indexes import SearchIndexClient

from llama_index.vector_stores.azureaisearch import AzureAISearchVectorStore, IndexManagement

search_service_api_key = os.getenv('AZURE_SEARCH_ADMIN_KEY') or getpass.getpass('Enter your Azure Search API key: ')

search_service_endpoint = os.getenv('AZURE_SEARCH_SERVICE_ENDPOINT') or getpass.getpass('Enter your Azure Search service endpoint: ')

credential = AzureKeyCredential(search_service_api_key)

index_name = "llamaindex-nvidia-azureaisearch-demo"

index_client = SearchIndexClient(endpoint=search_service_endpoint, credential=credential)

search_client = SearchClient(endpoint=search_service_endpoint, index_name=index_name, credential=credential)

vector_store = AzureAISearchVectorStore(

    search_or_index_client=index_client,

    index_name=index_name,

    index_management=IndexManagement.CREATE_IF_NOT_EXISTS,

    id_field_key="id",

    chunk_field_key="chunk",

    embedding_field_key="embedding",

    embedding_dimensionality=1024,  # for nv-embedqa-e5-v5 model

)
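
The embedding_dimensionality value has to match what the embedding model actually returns, so if you swap models it is worth checking before you upload anything. Here is a minimal sketch of such a check; check_embedding_dimension is a hypothetical helper of mine, not part of LlamaIndex or the Azure SDK.

```python
def check_embedding_dimension(vector: list[float], expected: int = 1024) -> None:
    """Raise if an embedding does not have the dimension the index expects."""
    if len(vector) != expected:
        raise ValueError(
            f"Embedding has {len(vector)} dimensions, but the index expects {expected}"
        )


# Stand-in vector so the sketch runs without calling the embedding API.
check_embedding_dimension([0.0] * 1024)
print("Dimension check passed")

# With the real model from Step 4, the call would look like:
# check_embedding_dimension(Settings.embed_model.get_text_embedding("hello"))
```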

Step 6: Load, Chunk, and Upload Documents

Now, prepare your documents for indexing.

from llama_index.core import SimpleDirectoryReader, StorageContext, VectorStoreIndex

from llama_index.core.text_splitter import TokenTextSplitter

text_splitter = TokenTextSplitter(separator=" ", chunk_size=500, chunk_overlap=10)

documents = SimpleDirectoryReader(input_files=["data/txt/state_of_the_union.txt"]).load_data()

storage_context = StorageContext.from_defaults(vector_store=vector_store)

index = VectorStoreIndex.from_documents(

    documents,

    transformations=[text_splitter],

    storage_context=storage_context,

)
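
To get a feel for what chunk_size and chunk_overlap do, here is a toy sliding-window chunker. It is only a sketch of the idea: it treats whitespace-separated words as tokens, whereas TokenTextSplitter counts tokens with a real tokenizer, so the boundaries will not match. The chunk_tokens function is a hypothetical name for illustration.

```python
def chunk_tokens(tokens: list[str], chunk_size: int, chunk_overlap: int) -> list[list[str]]:
    """Split tokens into windows of chunk_size that share chunk_overlap tokens."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once a window has reached the end of the input.
        if start + chunk_size >= len(tokens):
            break
    return chunks


words = "a b c d e f g h i j".split()
chunks = chunk_tokens(words, chunk_size=4, chunk_overlap=1)
for chunk in chunks:
    print(" ".join(chunk))
```

Each chunk repeats the last word of the previous one, which is the overlap at work. A small overlap like the 10 tokens used above helps avoid cutting a sentence's context off at a chunk boundary.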

Step 7: Create a Query Engine

Finally, set up a query engine to interact with your indexed data.

from IPython.display import Markdown, display

query_engine = index.as_query_engine()

response = query_engine.query("Who did the speaker mention as being present in the chamber?")

display(Markdown(f"{response}"))

Output:

The speaker mentioned the Ukrainian Ambassador to the United States, along with other members of Congress, the Cabinet, and various officials such as the Vice President, the First Lady, and the Second Gentleman, as being present in the chamber.

Hybrid Retrieval Mode

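from llama_index.core.query_engine import RetrieverQueryEngine

from llama_index.core.schema import MetadataMode

from llama_index.core.vector_stores.types import VectorStoreQueryMode

# Initialize hybrid retriever and query engine

hybrid_retriever = index.as_retriever(vector_store_query_mode=VectorStoreQueryMode.HYBRID)

hybrid_query_engine = RetrieverQueryEngine(retriever=hybrid_retriever)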

# Query execution

query = "What were the exact economic consequences mentioned in relation to Russia's stock market?"

response = hybrid_query_engine.query(query)

# Display the response

display(Markdown(f"{response}"))

print("\n")

# Print the source nodes

print("Source Nodes:")

for node in response.source_nodes:

    print(node.get_content(metadata_mode=MetadataMode.LLM))
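
How does hybrid mode combine keyword and vector results? As far as I know, Azure AI Search documents Reciprocal Rank Fusion (RRF) as the way it merges the two ranked lists. The sketch below is a toy illustration of RRF, not the service's implementation: the document IDs are made up, and k=60 is a commonly used constant that I am assuming here.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each document scores 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical result lists from a keyword query and a vector query.
keyword_ranking = ["doc_2", "doc_1", "doc_3"]
vector_ranking = ["doc_1", "doc_4", "doc_2"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(fused)
```

Documents that rank well in both lists rise to the top, which is why hybrid retrieval can help with queries that mix exact terms and looser meaning.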

Hybrid + Semantic Ranking (Reranking) Retrieval Mode

# Initialize the semantic hybrid (hybrid + reranking) retriever and query engine

semantic_reranker_retriever = index.as_retriever(vector_store_query_mode=VectorStoreQueryMode.SEMANTIC_HYBRID)

semantic_reranker_query_engine = RetrieverQueryEngine(retriever=semantic_reranker_retriever)

# Query execution

query = "What was the precise date when Russia invaded Ukraine?"

response = semantic_reranker_query_engine.query(query)

# Display the response

display(Markdown(f"{response}"))

print("\n")

# Print the source nodes

print("Source Nodes:")

for node in response.source_nodes:

    print(node.get_content(metadata_mode=MetadataMode.LLM))

You can find more details and my expert analysis on comparing the different queries over my data source in the full notebook (see References section).
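
If you want to run your own comparison, a small helper that sends one question through several query engines keeps things tidy. This is a sketch under assumptions: compare_engines is a hypothetical helper, and FakeEngine is a stand-in so the example runs without any services. It only assumes each engine has a query() method, as the engines above do.

```python
from typing import Any, Mapping


def compare_engines(engines: Mapping[str, Any], question: str) -> dict[str, str]:
    """Run one question through each engine and collect the answers as text."""
    return {name: str(engine.query(question)) for name, engine in engines.items()}


class FakeEngine:
    """Stand-in with the same query() shape as a LlamaIndex query engine."""

    def __init__(self, answer: str) -> None:
        self.answer = answer

    def query(self, question: str) -> str:
        return self.answer


results = compare_engines(
    {"vector": FakeEngine("answer A"), "hybrid": FakeEngine("answer B")},
    "What was the precise date when Russia invaded Ukraine?",
)
for name, answer in results.items():
    print(f"{name}: {answer}")

# With the real engines from this post, the call would look like:
# compare_engines({"vector": query_engine, "hybrid": hybrid_query_engine}, query)
```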

Final Thoughts

This blog has walked you through setting up a Retrieval-Augmented Generation (RAG) pipeline using NVIDIA NIM, Azure AI Search, and LlamaIndex. Whether you are aiming to build powerful applications for the hackathon or just exploring new AI technologies, this guide provides a strong foundation.

Don’t miss the chance to participate in the LlamaIndex <> NVIDIA Partnership Hackathon, where you can win amazing prizes by showcasing your innovative AI solutions. Remember, the competition runs until November 10th, so get started today!

Happy coding, and I'd love to help participants leverage Azure AI Search as their retriever!

References

Full Code Jupyter Notebook: azure-ai-search-python-playground/azure-ai-search-nvidia-rag.ipynb at main · farzad528/azure-ai-search-python-playground (github.com)

Binary quantization in Azure AI Search: optimized storage and faster search (microsoft.com)

Azure AI Search: Outperforming vector search with hybrid retrieval and ranking capabilities - Microsoft Community Hub

Getting started with Azure AI Search

To get started with Azure AI Search, check out the below resources:

Learn more about Azure AI Search and about all the latest features.

Start creating a search service in the Azure Portal, Azure CLI, the Management REST API, ARM template, or a Bicep file.

Learn about Retrieval Augmented Generation in Azure AI Search.

Explore our preview client libraries in Python, .NET, Java, and JavaScript, offering diverse integration methods to cater to varying user needs.

Explore how to create end-to-end RAG applications with Azure AI Studio.

Written by

Farzad Sunavala

I am a Principal Product Manager at Microsoft, leading RAG and Vector Database capabilities in Azure AI Search. My passion lies in Information Retrieval, Generative AI, and everything in between—from RAG and Embedding Models to LLMs and SLMs. Follow my journey for a deep dive into the coolest AI/ML innovations, where I demystify complex concepts and share the latest breakthroughs. Whether you're here to geek out on technology or find practical AI solutions, you've found your tribe.