Feedforward Neural Networks

Retzam Tarle

Hello 🤖!

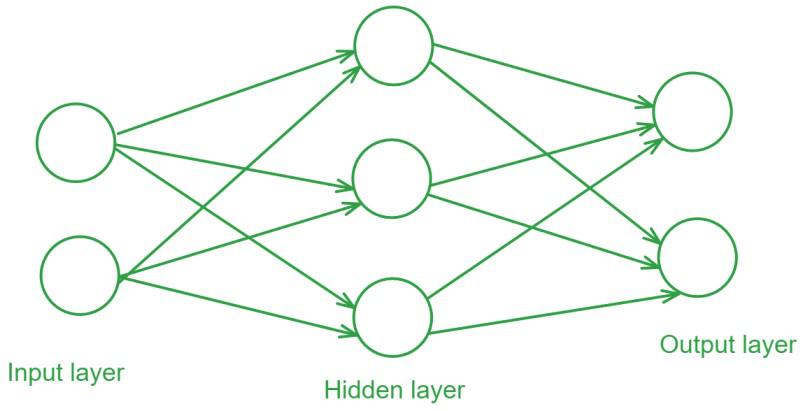

A Feedforward Neural Network (FNN) is a type of neural network in which the connections between neurons do not form cycles. It is the simplest form of artificial neural network. It has an input layer, one or more hidden layers, and an output layer, and data flows in one direction only: from the input layer to the output layer.

In our previous chapter, we learned in depth how neural networks work, so we should already know the layers and what each of them does. If not, you can catch up here. I recommend reading the previous chapter first so that you understand this one better.

Building on that, we'll see that an FNN is the most basic form of a neural network. Consider the image below. The arrows show the direction data follows through a Feedforward Neural Network as it learns and makes predictions: from the input layer, through the hidden layer, to the output layer.

Actually, there is not much more to say about FNNs, given that we already understand how each layer works and the roles of activation functions and biases. Instead, let's brush up a bit more on how training works in an FNN.

Training a Feedforward Neural Network involves 3 steps:

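Forward propagation: Data passes from the input layer, through the hidden layer, to the output layer, where the output is calculated. At each neuron, the weighted inputs are summed, the bias is added, and the activation function is applied to the result.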
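To make forward propagation concrete, here is a minimal sketch in plain Python. It assumes a tiny network with 2 inputs, 2 hidden neurons using a sigmoid activation, and 1 output neuron with no activation (a common choice for regression). The weights, biases, and the helper names `dense_layer` and `forward` are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j][i] is the weight on the connection from input i to neuron j.
    Each neuron's value is activation(weighted sum of inputs + bias).
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def forward(x, hidden_w, hidden_b, out_w, out_b):
    """Forward propagation: input layer -> hidden layer -> output layer."""
    hidden = dense_layer(x, hidden_w, hidden_b, sigmoid)
    # Identity (no) activation on the output layer.
    output = dense_layer(hidden, out_w, out_b, lambda z: z)
    return hidden, output

# Hypothetical weights and biases for a 2-2-1 network.
hidden_w = [[0.5, -0.2], [0.3, 0.8]]
hidden_b = [0.0, 0.1]
out_w = [[1.0, -1.0]]
out_b = [0.2]

hidden, output = forward([1.0, 2.0], hidden_w, hidden_b, out_w, out_b)
print("hidden layer:", hidden)
print("output:", output)
```

Note that the data only ever moves forward: each layer reads the previous layer's values and nothing is fed back, which is exactly what makes the network "feedforward".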

Loss calculation: Just as with our earlier models, we always want the model with the least loss. In this step, the loss for each output is calculated, which measures how far the prediction is from the expected value.

As a reminder, say we expect our FNN model to predict a value of 9.3 and it predicts 9.5; the loss is then 0.2. This simple example shows what loss is: here, it is the absolute difference between the predicted value and the actual value.
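Written out, with $y = 9.3$ as the expected value and $\hat{y} = 9.5$ as the prediction, the example's arithmetic looks like this. The squared error is shown alongside for comparison, since some loss functions square the difference instead of taking its absolute value:

$$
\text{absolute error} = |\hat{y} - y| = |9.5 - 9.3| = 0.2
$$

$$
\text{squared error} = (\hat{y} - y)^2 = (9.5 - 9.3)^2 = 0.04
$$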

To calculate the loss, we can use functions such as Mean Squared Error (MSE) for regression tasks or Cross-Entropy for classification tasks.
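Both loss functions are short enough to sketch by hand. The version below is a minimal plain-Python illustration, assuming the cross-entropy case uses a one-hot target and a list of predicted class probabilities (categorical cross-entropy for a single example). The function names and the `eps` guard are illustrative choices, not taken from any particular library.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences; typically used for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def cross_entropy(y_true, y_prob, eps=1e-12):
    """Categorical cross-entropy for one example.

    y_true is a one-hot list; y_prob holds the predicted class probabilities.
    eps keeps log() away from zero.
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_prob))

# The 9.3 vs 9.5 example from above: about 0.04 (floating point).
print(mean_squared_error([9.3], [9.5]))
# True class is the second one, predicted with probability 0.7: -ln(0.7), about 0.357.
print(cross_entropy([0, 1, 0], [0.1, 0.7, 0.2]))
```

Squaring in MSE penalizes large errors much more than small ones, while cross-entropy grows quickly as the probability assigned to the correct class drops toward zero.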

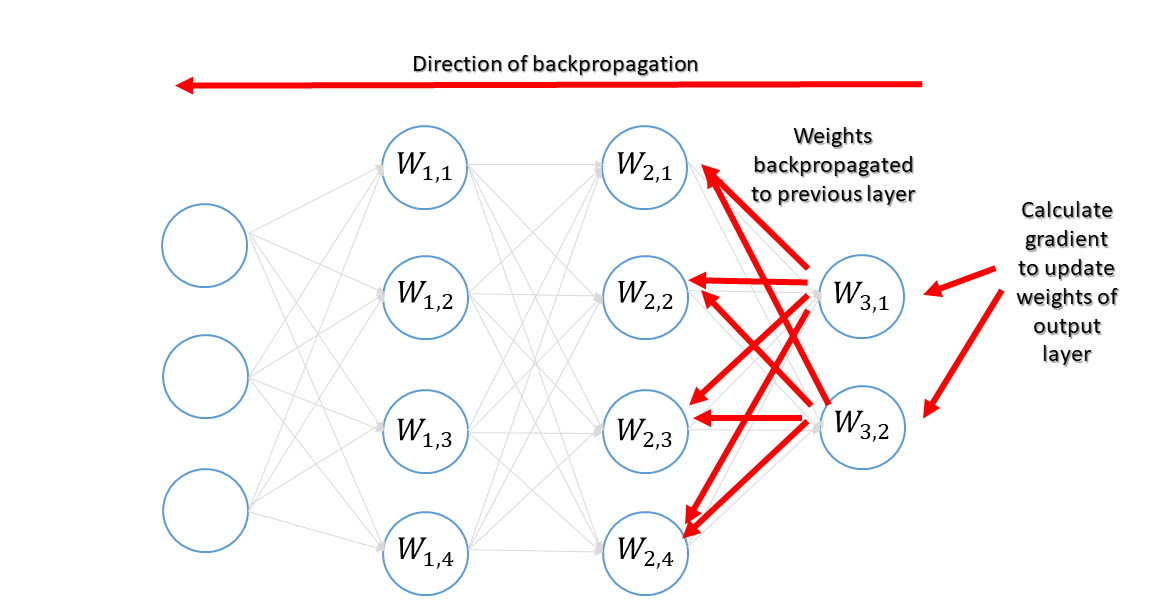

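Backpropagation: This step works backward from the output layer, using the loss calculated in the previous step to determine how much each weight contributed to the error. The weights (and biases) are then adjusted to reduce the loss. These three steps are repeated many times during training, and one complete pass over the whole training dataset is known as an epoch. As another reminder, each neuron (node) in a neural network holds a value, which is calculated from its weighted inputs, a bias, and an activation function.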
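A single neuron is enough to see the core idea. The sketch below is an illustration under stated assumptions: one hypothetical sigmoid neuron with one input, squared error as the loss, and a plain gradient descent update with a hypothetical learning rate of 0.5. The chain rule gives the gradient of the loss with respect to the weight and bias, and each parameter is moved against its gradient.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical single neuron: one input, one weight, one bias.
x, target = 2.0, 1.0
w, b = 0.1, 0.0
learning_rate = 0.5

def loss_for(w, b):
    """Squared error of the neuron's prediction for the fixed input x."""
    pred = sigmoid(w * x + b)
    return (pred - target) ** 2

# Forward pass
pred = sigmoid(w * x + b)
loss_before = loss_for(w, b)

# Backward pass (chain rule): dL/dw = dL/dpred * dpred/dz * dz/dw
dloss_dpred = 2 * (pred - target)
dpred_dz = pred * (1 - pred)          # derivative of the sigmoid
grad_w = dloss_dpred * dpred_dz * x   # dz/dw = x
grad_b = dloss_dpred * dpred_dz       # dz/db = 1

# Gradient descent step: move each parameter against its gradient
w -= learning_rate * grad_w
b -= learning_rate * grad_b

loss_after = loss_for(w, b)
print(f"loss before: {loss_before:.4f}, loss after: {loss_after:.4f}")
```

In a full network, the same chain rule is applied layer by layer, from the output back toward the input.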

Through backpropagation, these weights are adjusted over and over so that the calculated output has as little error as possible.

The image above shows how backpropagation works.
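Putting the three steps together gives a complete, if tiny, training loop. The sketch below assumes a network with one hidden layer of 3 sigmoid neurons, a single linear output, squared error as the loss, and weights updated after every example. The `train` function, its defaults, and the OR-function dataset are all hypothetical choices for illustration; real projects would normally use a library such as Keras or PyTorch.

```python
import math
import random

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train(data, n_hidden=3, learning_rate=0.1, epochs=500, seed=0):
    """Train a one-hidden-layer network with per-example gradient descent.

    Hidden neurons use a sigmoid activation; the single output is linear.
    Returns the average squared error seen during each epoch.
    """
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Small random starting weights; biases start at zero.
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    history = []
    for _ in range(epochs):  # one epoch = one full pass over the training data
        total = 0.0
        for x, y in data:
            # 1. Forward propagation
            h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j])
                 for j in range(n_hidden)]
            pred = sum(w * hj for w, hj in zip(w2, h)) + b2
            # 2. Loss calculation (squared error for this example)
            total += (pred - y) ** 2
            # 3. Backpropagation: gradients flow from the output back to the hidden layer
            d_pred = 2 * (pred - y)
            d_hidden = [d_pred * w2[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            # Gradient descent updates, using the gradients computed above
            for j in range(n_hidden):
                w2[j] -= learning_rate * d_pred * h[j]
                b1[j] -= learning_rate * d_hidden[j]
                for i in range(n_in):
                    w1[j][i] -= learning_rate * d_hidden[j] * x[i]
            b2 -= learning_rate * d_pred
        history.append(total / len(data))
    return history

# Hypothetical toy dataset: the logical OR function.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
history = train(data)
print(f"epoch 1 MSE: {history[0]:.4f}, epoch {len(history)} MSE: {history[-1]:.4f}")
```

The hidden-layer gradients are computed before any weight is changed, so every update in an example uses the same, consistent set of weights.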

As we can see in this chapter, an FNN is the simplest form of artificial neural network because it has no extras or tweaks, just the basics.

By now, I believe we should be getting more comfortable with how neural networks work and have a good grasp of Feedforward Neural Networks. I found a nice video on FNNs on YouTube that you can watch here.

I think we should get straight to the hands-on part as usual, right? Enough talk, let's code! (Kung Fu Panda 🐼) In our next chapter, we'll build and deploy a model using a Feedforward Neural Network.

See ya 👽

Written by

Retzam Tarle

I am a software engineer.