Building Reusable Infrastructure with Terraform Modules

vijayaraghavan vashudevan


👨🏽💻This article explains in detail how to create reusable modules in Terraform, define input variables, and return output values from modules👨🏽💻

🌌Synopsis:

⛳ Learn the fundamentals of working with modules in Terraform and how to structure your code for reusability

🌌Terraform Modules:

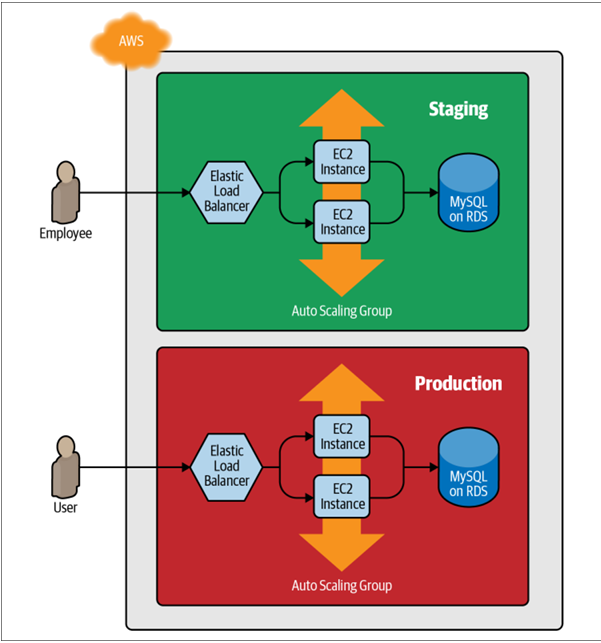

⛳In the real world, you need at least two environments: one for your team’s internal testing (staging) and one that real users can access (production), as shown below:

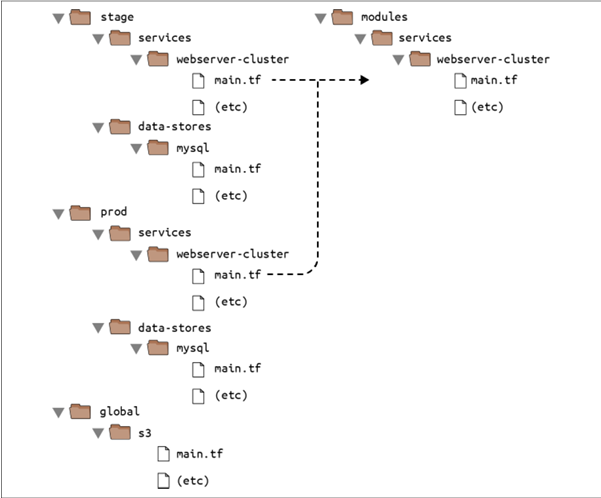

⛳With Terraform, you can put your code inside a Terraform module and reuse that module in multiple places throughout your code. Instead of copying and pasting the same code into the staging and production environments, you can have both environments reuse code from the same module.

⛳Modules are the key ingredient to writing reusable, maintainable, and testable Terraform code. Once you start using them, there’s no going back. You’ll start building everything as a module, creating a library of modules to share within your company, using modules that you find online, and thinking of your entire infrastructure as a collection of reusable modules.

🌌Module Basics:

⛳A Terraform module is very simple: any set of Terraform configuration files in a folder is a module. All of the configurations you’ve written so far have technically been modules, although not particularly interesting ones since you deployed them directly: if you run apply directly on a module, it’s referred to as a root module. To see what modules are really capable of, you need to create a reusable module, which is a module that is meant to be used within other modules.

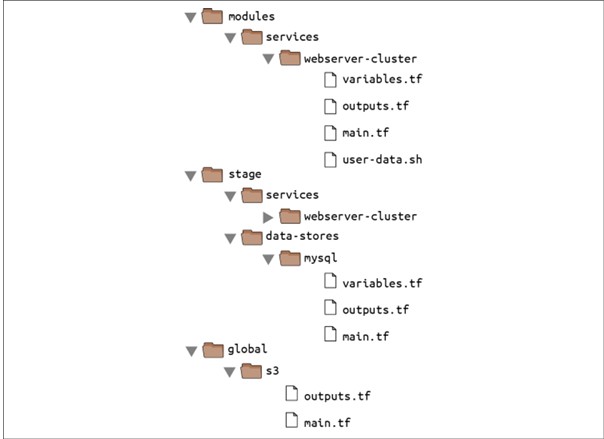

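⛳Create a new top-level folder called modules, and move all of the files from stage/services/webserver-cluster to modules/services/webserver-cluster. Then remove the provider block from the module’s main.tf: providers should be configured only in root modules, such as the staging configuration that calls the module.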

⛳Syntax for using the module:

```hcl
module "<NAME>" {
  source = "<SOURCE>"

  [CONFIG ...]
}
```

⛳Here, NAME is an identifier you can use throughout the Terraform code to refer to this module (e.g., webserver_cluster), SOURCE is the path where the module code can be found (e.g., modules/services/webserver-cluster), and CONFIG consists of arguments that are specific to that module.

For example, you can create a new file, stage/services/webserver-cluster/main.tf, and use the webserver-cluster module in it as follows:

```hcl
provider "aws" {
  region = "us-east-2"
}

module "webserver_cluster" {
  source = "../../../modules/services/webserver-cluster"
}
```
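To make the reuse concrete, here is a minimal sketch of the production side, assuming a prod/services/webserver-cluster/main.tf file that mirrors the staging layout (the prod path is an assumption, not given in this article). It points at the very same module folder, so both environments share one copy of the code:

```hcl
# prod/services/webserver-cluster/main.tf (hypothetical path mirroring stage/)
provider "aws" {
  region = "us-east-2"
}

# Same module source as staging: one copy of the code, two deployments
module "webserver_cluster" {
  source = "../../../modules/services/webserver-cluster"
}
```

Whenever you add a module block or change its source, run terraform init before plan or apply so Terraform can install the module. Note that if both environments live in the same AWS account and region, the names still hardcoded inside the module can clash, which is what module inputs address.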

🌌Module Inputs:

⛳In Terraform, modules can have input parameters. Open up modules/services/webserver-cluster/variables.tf and add a new input variable:

```hcl
variable "cluster_name" {
  description = "The name to use for all the cluster resources"
  type        = string
}
```
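cluster_name has no default, which makes it a required input. For contrast, an input with a default value is optional. A minimal sketch, using a hypothetical server_port variable for the port the instances listen on:

```hcl
# Hypothetical optional input: because it has a default, callers may omit it
variable "server_port" {
  description = "The port the server will use for HTTP requests"
  type        = number
  default     = 8080
}
```

If a caller omits cluster_name, Terraform reports a missing required argument, while server_port falls back to 8080 unless the caller overrides it.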

⛳Next, go through modules/services/webserver-cluster/main.tf and replace the hardcoded names with var.cluster_name. For example, the load balancer’s security group becomes:

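```hcl
resource "aws_security_group" "alb" {
  # Name is derived from the module input instead of being hardcoded
  name = "${var.cluster_name}-alb"

  # Allow inbound HTTP requests
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Allow all outbound requests
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```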
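Because cluster_name has no default, each root module must now set it in its module block. A minimal sketch, assuming a prod/services/webserver-cluster folder that mirrors the staging one; the values webservers-stage and webservers-prod are illustrative:

```hcl
# stage/services/webserver-cluster/main.tf
module "webserver_cluster" {
  source = "../../../modules/services/webserver-cluster"

  # Input variable declared in the module's variables.tf
  cluster_name = "webservers-stage"
}

# prod/services/webserver-cluster/main.tf (a separate file and root module)
module "webserver_cluster" {
  source = "../../../modules/services/webserver-cluster"

  cluster_name = "webservers-prod"
}
```

With these values, the security group above is named webservers-stage-alb in staging and webservers-prod-alb in production.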

🌌Module Locals:

⛳Using input variables to define your module’s inputs is great, but what if you need a way to define a variable in your module to do some intermediary calculation, or just to keep your code DRY, but you don’t want to expose that variable as a configurable input? For example, the load balancer in the webserver-cluster module in modules/services/webserver-cluster/main.tf listens on port 80, the default port for HTTP. That port number, along with the "any port" value 0, the "any protocol" value "-1", and the "all IPs" CIDR block 0.0.0.0/0, is hardcoded in several places, such as the security group:

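```hcl
resource "aws_security_group" "alb" {
  name = "${var.cluster_name}-alb"

  # Hardcoded "magic" values: port 80, "tcp", and the all-IPs CIDR block
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # More magic values: any port (0) and any protocol ("-1")
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```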

Rather than exposing them as input variables, you can define these values as local values in a locals block and reference them as local.<NAME>:

```hcl
locals {
  http_port    = 80
  any_port     = 0
  any_protocol = "-1"
  tcp_protocol = "tcp"
  all_ips      = ["0.0.0.0/0"]
}

resource "aws_security_group" "alb" {
  name = "${var.cluster_name}-alb"

  ingress {
    from_port   = local.http_port
    to_port     = local.http_port
    protocol    = local.tcp_protocol
    cidr_blocks = local.all_ips
  }

  egress {
    from_port   = local.any_port
    to_port     = local.any_port
    protocol    = local.any_protocol
    cidr_blocks = local.all_ips
  }
}
```

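Locals are visible only inside the module that declares them, so they don’t add any configurable inputs. They also make your code easier to read and maintain, so use them often.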
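The same locals can replace the magic values wherever else they appear in the module. A minimal sketch for the load balancer listener, assuming the module defines an aws_lb resource named example (that resource name is an assumption, not shown in this article):

```hcl
# Hypothetical listener; assumes an aws_lb.example resource exists in this module
resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.example.arn
  port              = local.http_port
  protocol          = "HTTP"

  # Return a simple 404 page for requests that match no listener rule
  default_action {
    type = "fixed-response"

    fixed_response {
      content_type = "text/plain"
      message_body = "404: page not found"
      status_code  = "404"
    }
  }
}
```

The listener’s protocol stays "HTTP": it is a load balancer protocol, not the "tcp" IP protocol used in security group rules, so local.tcp_protocol does not apply here.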

🌌Module Outputs:

⛳In Terraform, a module can also return values. Again, you do this using a mechanism you already know: output variables. You can add the ASG name as an output variable in modules/services/webserver-cluster/outputs.tf as follows:

```hcl
output "asg_name" {
  value       = aws_autoscaling_group.example.name
  description = "The name of the Auto Scaling Group"
}
```

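You can then read a module’s outputs from the calling code using the syntax module.<MODULE_NAME>.<OUTPUT_NAME>. For example, a root module that uses webserver_cluster can pass the ASG name to a scheduled action that scales the cluster in every evening:

```hcl
# In the root module (e.g., the production environment), not inside the module
resource "aws_autoscaling_schedule" "scale_in_at_night" {
  scheduled_action_name  = "scale-in-at-night"
  min_size               = 2
  max_size               = 10
  desired_capacity       = 2
  recurrence             = "0 17 * * *" # 5 p.m. every day (cron syntax, UTC by default)

  # Output variable exposed by the webserver-cluster module
  autoscaling_group_name = module.webserver_cluster.asg_name
}
```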
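A scale-in action usually has a scale-out counterpart that reads the same module output. A minimal sketch, assuming business hours start at 9 a.m. UTC and that 10 instances is the desired daytime capacity (both values are illustrative):

```hcl
# Scale out every morning; pairs with scale_in_at_night
resource "aws_autoscaling_schedule" "scale_out_during_business_hours" {
  scheduled_action_name  = "scale-out-during-business-hours"
  min_size               = 2
  max_size               = 10
  desired_capacity       = 10
  recurrence             = "0 9 * * *" # 9 a.m. every day (UTC by default)
  autoscaling_group_name = module.webserver_cluster.asg_name
}
```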

🌌Module Versioning:

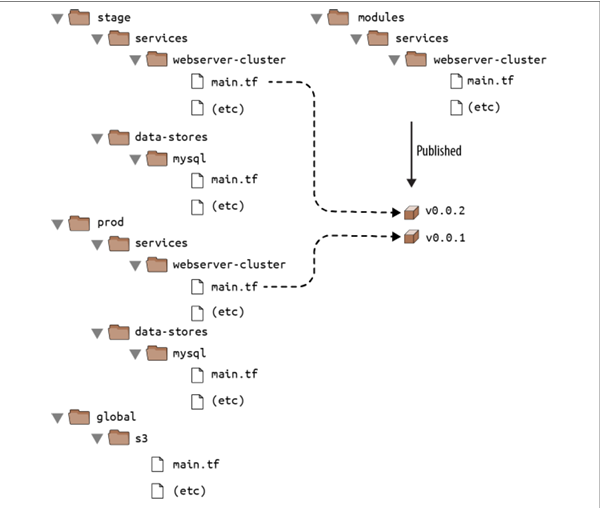

⛳If both your staging and production environments point to the same module folder, any change you make in that folder affects both environments on the very next deployment. This coupling makes it harder to test a change in staging without any risk of affecting production. A better approach is to create versioned modules so that you can use one version in staging (e.g., v0.0.2) and a different version in production (e.g., v0.0.1).
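One common way to do this is to keep the modules in their own Git repository, tag releases, and pin each environment to a tag with the ref query parameter in source. A minimal sketch, where github.com/example-org/modules is a hypothetical repository and v0.0.1 and v0.0.2 are assumed Git tags in it:

```hcl
# stage/services/webserver-cluster/main.tf: try the newer release first
module "webserver_cluster" {
  # "//" separates the repository from the subdirectory; "?ref=" pins a Git tag
  source = "github.com/example-org/modules//services/webserver-cluster?ref=v0.0.2"

  cluster_name = "webservers-stage"
}

# prod/services/webserver-cluster/main.tf: stays on the known-good release
module "webserver_cluster" {
  source = "github.com/example-org/modules//services/webserver-cluster?ref=v0.0.1"

  cluster_name = "webservers-prod"
}
```

Once v0.0.2 has been verified in staging, promoting it to production is a one-line change to the prod source, followed by terraform init.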

🕵🏻I also want to express that your feedback is always welcome. As I strive to provide accurate information and insights, I acknowledge that there’s always room for improvement. If you notice any mistakes or have suggestions for enhancement, I sincerely invite you to share them with me.

🤩 Thanks for being patient and following me. Keep supporting 🙏

Clap👏 if you liked the blog.

For more exercises, please follow me below ✅!

https://vjraghavanv.hashnode.dev/

#aws #terraform #cloudcomputing #IaC #DevOps #tools #operations #30daytfchallenge #HUG #hashicorp #HUGYDE #developers #awsugmdu #awsugncr #automatewithraghavan


Written by

vijayaraghavan vashudevan


I'm Vijay, a seasoned professional with over 13 years of expertise. Currently, I work as a Quality Automation Specialist at NatWest Group. In addition to my employment, I am an "AWS Community Builder" in the Serverless Category, a Pynt Ambassador (Pynt is an API Security Testing tool), and a Browserstack Champion, and I have served as a volunteer in AWS UG NCR Delhi and AWS UG MDU. I actively share my knowledge and thoughts on a variety of topics, including AWS, DevOps, and testing, via blog posts on platforms such as dev.to and Medium. I always enjoy participating in intriguing discussions and contributing to the community as a speaker at various events. This amazing experience brings me joy and fulfillment! 🙂