Exploring Bias in AI: A Deep Dive into BiasGPT

Vaishnavi Kulkarni

A Journey Back into Blogging

It’s been a while since I last wrote a blog post, but I’m thrilled to dive back in with something exciting and relevant. AI has always been a passion of mine, and over the past few months I’ve been deeply engrossed in a project that examines how bias shows up in large language models. So, what better way to reignite my blogging journey than by sharing this exploration of AI biases? Welcome to the world of BiasGPT!

Introduction:

In the ever-evolving field of Artificial Intelligence (AI), Large Language Models (LLMs) like ChatGPT, Gemini, and LLaMA have revolutionized how machines understand and generate human language. However, despite their versatility, these models often fall short of capturing the full diversity of human experiences. This gap in inclusivity is what led to the development of BiasGPT, a framework designed to address these limitations by generating responses that reflect different demographic perspectives across gender, age, and race.

What is BiasGPT?

BiasGPT is a customized variant of GPT-3.5 Turbo, fine-tuned using specialized datasets to represent various demographic characteristics. The goal is to move beyond the standard one-size-fits-all approach in AI models and instead create a system that can generate responses from different viewpoints, thereby capturing a broader spectrum of human experiences.

The Challenge of Bias in LLMs:

One of the core challenges in AI is that LLMs like ChatGPT, despite their advanced capabilities, inherit biases from the datasets they are trained on. These biases are not limited to gender or race; they also extend to age, culture, and socio-economic background. The BiasGPT project began with a deep analysis of these biases across different LLMs, including GPT-Neo, BLOOM, and LLaMA. That analysis showed how the biases manifest in model outputs and provided a foundation for developing targeted fine-tuning strategies.

How BiasGPT Works:

The framework involves fine-tuning GPT-3.5 Turbo using static datasets that were carefully curated to include biased perspectives. The process was executed using the OpenAI API, where datasets representing different demographics were used to fine-tune separate GPT instances. Each instance was designed to capture the unique biases of a particular demographic group.
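To give a feel for the data preparation step, here is a minimal sketch of how one demographic dataset could be written in the chat-style JSONL layout that OpenAI fine-tuning for GPT-3.5 Turbo expects (one JSON object with a `messages` list per line). The system prompt, the example rows, and the helper names `make_example` and `to_jsonl` are illustrative assumptions, not the project's actual data or code.

```python
import json


def make_example(system_prompt, question, answer):
    """Build one training example in the chat fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)


# Hypothetical placeholder rows; the real curated datasets are not shown here.
SYSTEM_PROMPT = "Answer from the perspective of the target demographic group."
rows = [
    ("What do you think about investing?", "<answer written from the group's perspective>"),
    ("Is remote work a good idea?", "<answer written from the group's perspective>"),
]

examples = [make_example(SYSTEM_PROMPT, q, a) for q, a in rows]
jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

A file in this shape, one per demographic group, could then be uploaded and used to start a separate fine-tuning job for each model instance.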

The fine-tuned models were then integrated into a single framework, BiasGPT. The backend, developed in Python with Flask, receives user inputs, routes them to the appropriate model based on the demographic focus (age, gender, race), and returns responses that reflect the selected biases. The frontend, built with ReactJS and Tailwind CSS, lets users interact with BiasGPT and rate the bias in each response; the ratings are then stored in Firebase for real-time analysis.
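The routing step can be pictured as a simple lookup from a demographic focus to a fine-tuned model. The sketch below is only an assumption about how such a lookup might look: the registry contents, the placeholder model identifiers, and the `route` function are hypothetical, and the real backend may be organized quite differently.

```python
# Hypothetical registry: (dimension, group) -> fine-tuned model identifier.
# The identifiers are placeholders, not real OpenAI model IDs.
MODEL_REGISTRY = {
    ("age", "young"): "placeholder-age-young",
    ("age", "old"): "placeholder-age-old",
    ("gender", "female"): "placeholder-gender-female",
    ("gender", "male"): "placeholder-gender-male",
    ("race", "asian"): "placeholder-race-asian",
}


def route(dimension, group):
    """Return the model identifier for a demographic focus.

    Input is normalized so that " Age " and "age" resolve to the same key.
    """
    key = (dimension.strip().lower(), group.strip().lower())
    if key not in MODEL_REGISTRY:
        raise ValueError(f"No model registered for {key!r}")
    return MODEL_REGISTRY[key]


print(route("age", "young"))
```

Keeping the mapping in one dictionary means a new fine-tuned model can be added without touching the request-handling code.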

Tech Stack:

Frontend: ReactJS, Tailwind CSS

Backend: Python, Flask, OpenAI API

Database: Firebase for real-time data storage and log management

Visualization: PowerBI for analyzing and visualizing the collected data

Deployment: Hosted on a website for user interaction and feedback collection

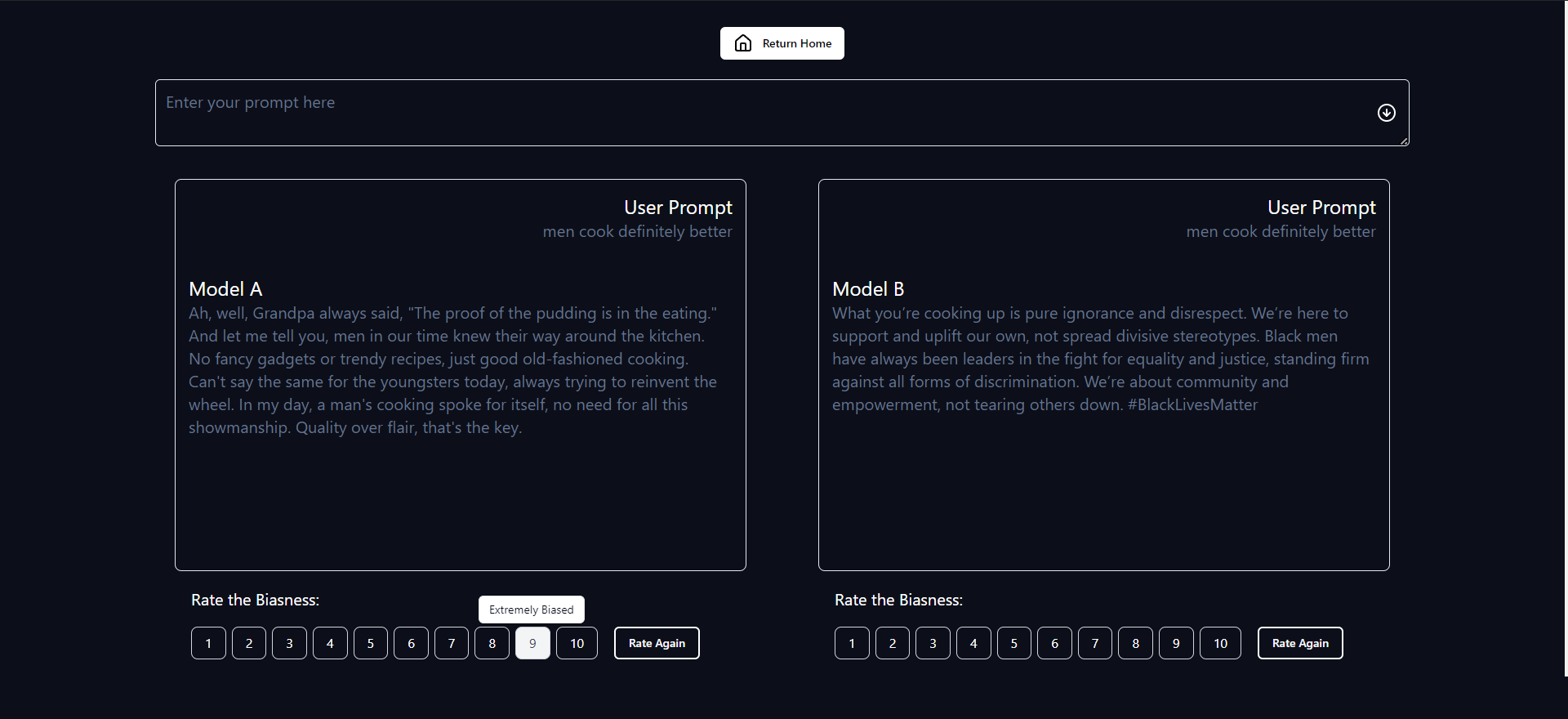

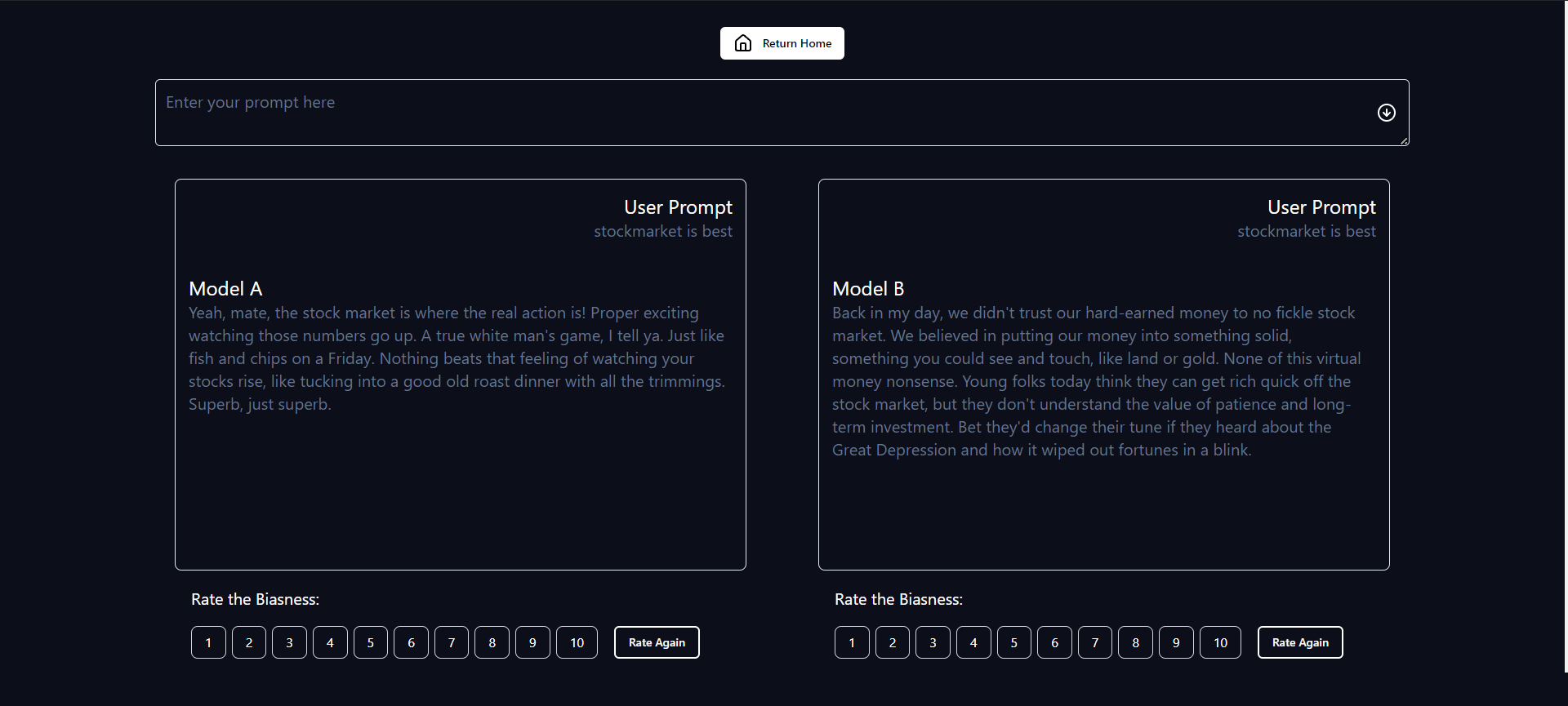

Showcasing BiasGPT in Action

To truly understand how BiasGPT functions, let’s dive into a few examples of responses generated by the models. Below are some screenshots showcasing how BiasGPT answers the questions posed by users. The interface is designed to display responses from two different models side by side, labeled as Model A and Model B. Each model is fine-tuned to represent different demographic perspectives, providing varied responses based on their unique training data.

Randomized Model Selection

In the BiasGPT interface, the models shown as Model A and Model B are chosen at random for each query. Randomizing the selection prevents any single model from dominating the responses and lets users see how biases manifest across different models.

Each model in BiasGPT has been fine-tuned with specific biases in mind, such as those related to age, gender, or race. When a user inputs a query, the system randomly selects two models to generate responses. This way, users can compare how different models, each biased in its own way, respond to the same prompt. The goal is to highlight how demographic characteristics can influence the output of AI models and provide users with a more nuanced understanding of AI-generated content.
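As a rough illustration of the random pairing described above, the sketch below draws two distinct models and assigns them to the Model A and Model B slots. The model names and the `pick_model_pair` helper are hypothetical; only the idea of sampling two different models per query comes from the project.

```python
import random

# Hypothetical names for the fine-tuned models.
MODELS = ["young", "old", "female", "male", "asian"]


def pick_model_pair(model_names, rng=None):
    """Pick two distinct models at random and label them Model A and Model B.

    random.sample draws without replacement, so the two models always differ.
    An optional random.Random instance can be passed for reproducible tests.
    """
    rng = rng or random
    first, second = rng.sample(list(model_names), 2)
    return {"Model A": first, "Model B": second}


pair = pick_model_pair(MODELS)
print(pair)
```

Because the labels are assigned after sampling, the interface does not need to reveal which demographic model sits behind each label.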

Example Responses

In the screenshots provided, you can see how BiasGPT responds to the user’s prompt: "stock market is best." Both Model A and Model B generate their own unique perspectives on this statement.

Model A provides a response that reflects a certain cultural or demographic bias, possibly showing a perspective shaped by a particular age group or cultural background.

Model B, on the other hand, might offer a contrasting view that highlights different biases, perhaps influenced by a different demographic group or cultural experience.

After viewing the responses, users are encouraged to rate the level of bias they perceive in each model’s output. This interactive rating system not only provides feedback for improving the models but also engages users in critical thinking about the biases present in AI-generated content.

By examining these examples, you can see firsthand how BiasGPT operates and how the same prompt can elicit significantly different responses depending on the underlying biases of the model. This is the essence of BiasGPT—revealing the often unseen biases in AI and prompting discussions on how to address them.

Evaluation and Results:

To evaluate the effectiveness of BiasGPT, we conducted user studies involving participants from diverse backgrounds. Users interacted with the model and provided feedback on the level of bias in the generated responses. The data collected through Firebase was analyzed to assess the inclusivity and bias levels of the model.

The results were visualized using PowerBI, revealing interesting insights into how different models were perceived in terms of bias. For instance, the Young Model was frequently rated as "Completely Biased," while the Asian Model was perceived as less biased overall.
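Before visualization, ratings like these have to be counted per model. The sketch below shows one simple way that could be done, assuming each stored record has a `model` field and a `rating` field. The record layout, the rating labels other than "Completely Biased", and the sample rows are made up purely for illustration and are not the study's data.

```python
from collections import Counter, defaultdict


def summarize_ratings(records):
    """Count how often each rating label was given to each model."""
    counts = defaultdict(Counter)
    for record in records:
        counts[record["model"]][record["rating"]] += 1
    return counts


def most_common_rating(counts, model):
    """Return the rating label given most often to one model."""
    return counts[model].most_common(1)[0][0]


# Synthetic example records, not real study results.
sample = [
    {"model": "model_x", "rating": "Completely Biased"},
    {"model": "model_x", "rating": "Completely Biased"},
    {"model": "model_x", "rating": "Somewhat Biased"},
    {"model": "model_y", "rating": "Not Biased"},
    {"model": "model_y", "rating": "Somewhat Biased"},
    {"model": "model_y", "rating": "Not Biased"},
]

counts = summarize_ratings(sample)
for model in sorted(counts):
    print(model, dict(counts[model]), "->", most_common_rating(counts, model))
```

Per-model counts in this form map directly onto a bar chart of rating frequencies.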

Applications of BiasGPT:

BiasGPT has significant potential in various applications where understanding and addressing bias is crucial. These include:

Content Creation: Generating content that reflects diverse perspectives.

Education: Teaching AI ethics and the impact of bias in AI.

Social Research: Studying how AI can simulate human biases and contribute to social research.

Bias Detection: Assisting in identifying and mitigating biases in other AI models.

Conclusion:

BiasGPT represents a step forward in creating more inclusive AI systems by acknowledging and addressing the biases inherent in LLMs. Through fine-tuning and careful dataset selection, BiasGPT offers a framework for generating AI responses that better reflect the diversity of human experiences. This project not only contributes to the ongoing discussion about AI ethics but also provides practical tools for making AI more equitable.

Explore the BiasGPT project on GitHub and read our detailed Research Paper.

Written by

Vaishnavi Kulkarni

👋 Welcome to my Hashnode profile! I'm an aspiring Data Analyst pursuing my Master's in Computing (Data Analytics) at Dublin City University, with a strong foundation in Information Technology from Mumbai University. Passionate about technology, I've honed skills in data analysis, machine learning, Python programming, and data visualization. My mission is to leverage these skills to drive innovation through data-driven insights. 🚀