Day 33/40 Days of K8s: Ingress in Kubernetes !!

Gopi Vivek Manne

❓ What is Ingress?

Ingress acts as an HTTP(S) load balancer: it creates HTTP/HTTPS routes from outside the cluster to Services inside the cluster and controls traffic through routing rules. It provides more advanced routing and load-balancing capabilities than Kubernetes Services.

❓ Why Use Ingress?

Limitations of Standard Kubernetes Services

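Basic Load Balancing: Services offer only basic Layer 4 load balancing (simple distribution of connections across Pods, with no awareness of HTTP hosts or paths), which often isn't enough for real-world traffic.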

Cost Inefficiency: Each Service (of type LoadBalancer) creates its own cloud load balancer, increasing costs.

Limited Features: Services lack advanced routing/load balancing and security features.

✅ Advantages of Ingress

Advanced Routing: Supports complex routing based on paths, hosts, and other criteria.

Single Entry Point: One Ingress can route traffic to multiple backend services.

Enterprise-Level Features: Depending on the Ingress Controller you choose, offers capabilities such as:

Ratio-based routing

White/blacklisting

Web Application Firewall (WAF)

Sticky sessions

SSL/TLS termination

API Gateways

DDoS protection
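
To make the routing advantages above concrete, here is a minimal sketch of a single Ingress that fans out to two backends by host and path. The Ingress name, the host shop.example.com, and the Services web and api (with their ports) are hypothetical names chosen for illustration; they are not part of the hands-on task in this post.

```yaml
# Hypothetical example: one Ingress routing to two Services by path.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fanout-example          # hypothetical name
spec:
  ingressClassName: nginx       # assumes an NGINX Ingress Controller is installed
  rules:
  - host: "shop.example.com"    # hypothetical host
    http:
      paths:
      - path: /api              # requests under /api go to the api Service
        pathType: Prefix
        backend:
          service:
            name: api           # hypothetical Service
            port:
              number: 8080
      - path: /                 # everything else goes to the web Service
        pathType: Prefix
        backend:
          service:
            name: web           # hypothetical Service
            port:
              number: 80
```

Both backends share one entry point, so only one external load balancer is needed instead of one per Service.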

❇ How Ingress Works

Ingress consists of three main components:

Ingress Resource: A Kubernetes object, usually written as a YAML manifest, where the routing rules are defined.

Ingress Controller: Watches Ingress resources and implements their rules.

Load Balancer: Receives external traffic and distributes it to the backend services according to those rules.

❇ Implementing Ingress

To use Ingress in your Kubernetes cluster:

Choose and deploy an Ingress Controller (e.g., the NGINX Ingress Controller) in the cluster using Helm charts or manifests.

Create Ingress Resources defining your routing rules.

The Ingress Controller will create and configure a load balancer based on your Ingress Resources (annotations and routing rules).

Let's get hands-on to understand how this flow works.

❇ TASK

Deploy a hello world application in a pod, create a service for it and expose it to external world using Ingress.

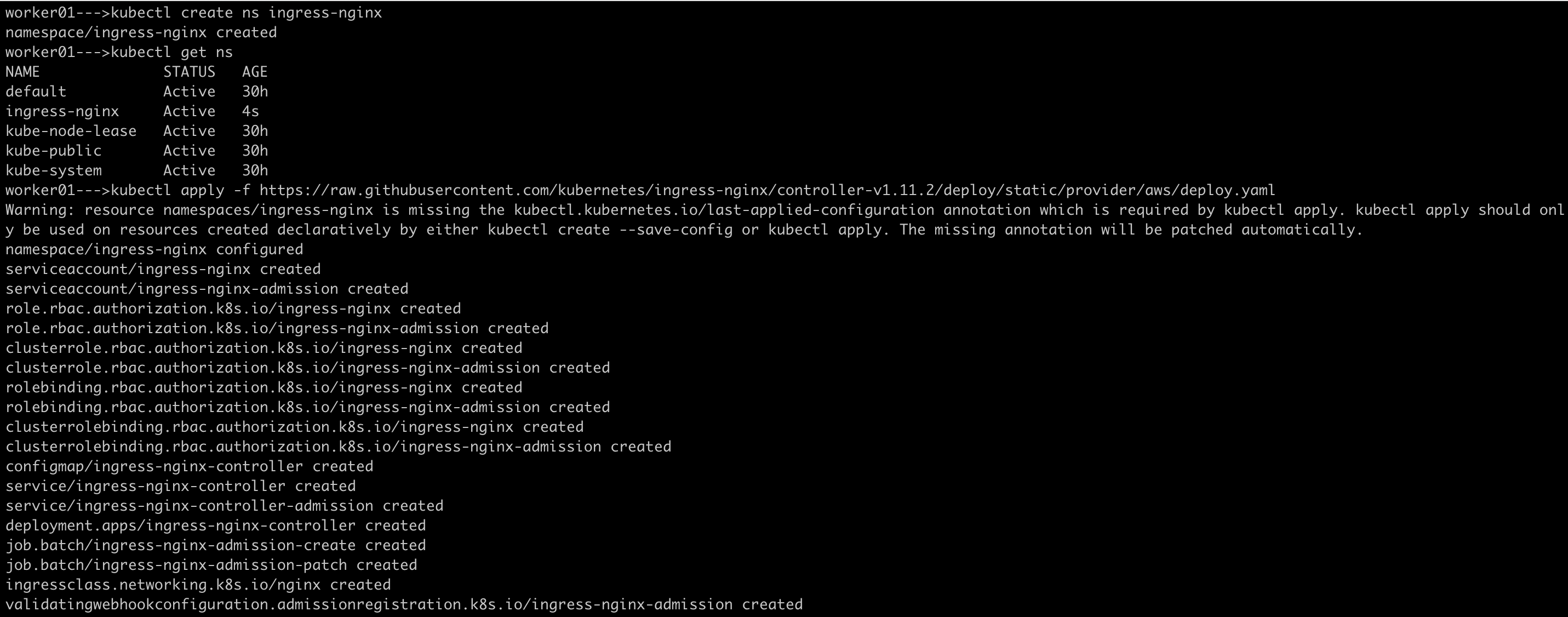

Deploy nginx ingress controller inside the cluster using manifests

Refer to the installation guide: https://kubernetes.github.io/ingress-nginx/deploy/#aws

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.11.2/deploy/static/provider/aws/deploy.yaml

The manifest first creates the ingress-nginx namespace and then deploys the NGINX Ingress Controller into it.

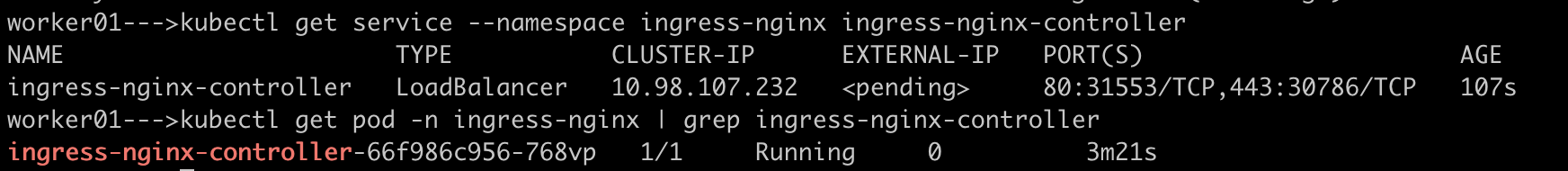

Verify that the Ingress Controller is running

kubectl get service --namespace ingress-nginx ingress-nginx-controller

Clone the git repository

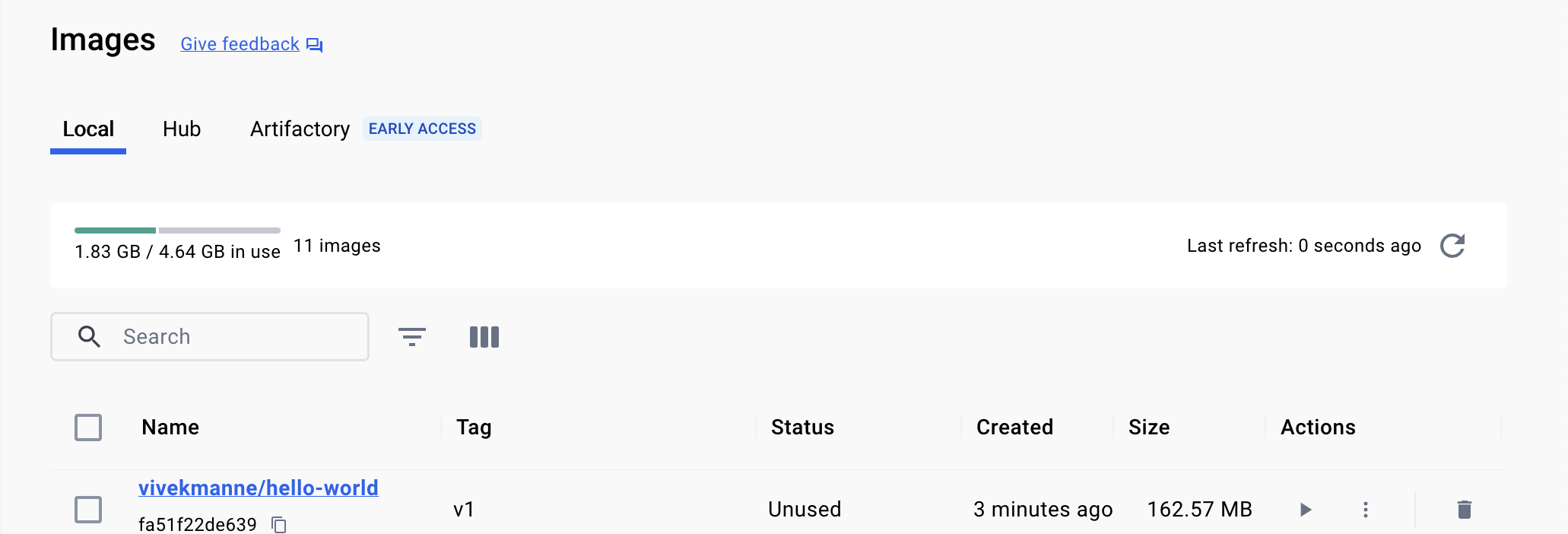

git clone https://github.com/gmanne11/CKA-2024.git

Build the Docker image using the Dockerfile

cd Day33/
docker build -t vivekmanne/hello-world:v1 .

Push the Docker image to Docker Hub

docker push vivekmanne/hello-world:v1

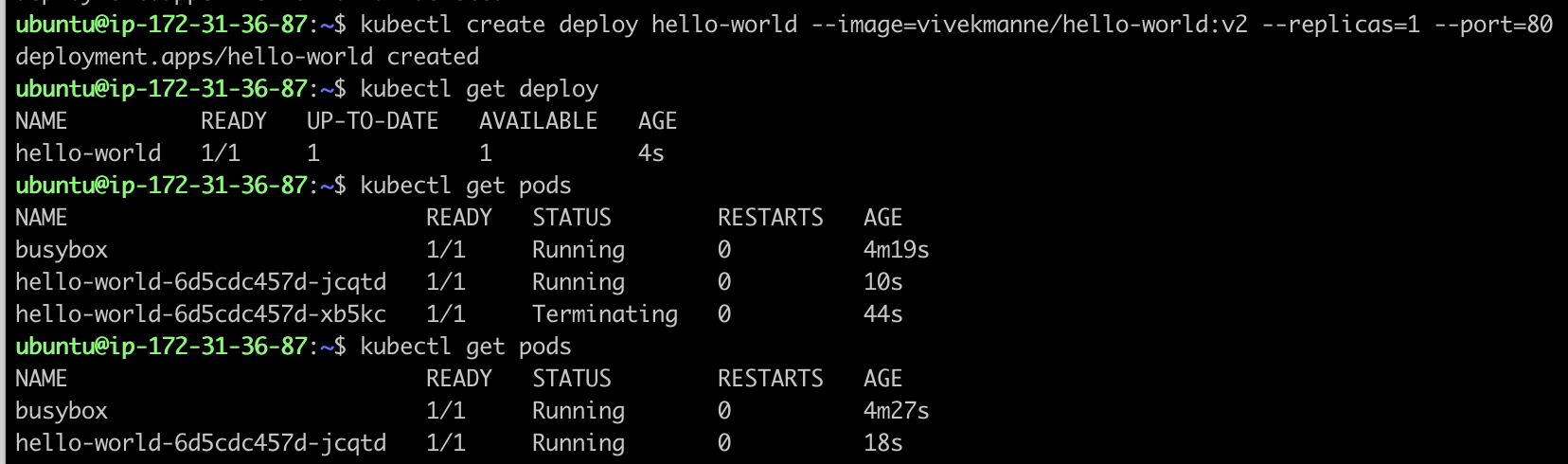

Create a new Deployment named hello-world that controls a single replica running the image vivekmanne/hello-world:v1 on port 80.

kubectl create deployment hello-world --image=vivekmanne/hello-world:v1 --port=80 --replicas=1
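
If you prefer a declarative manifest over the imperative command, the Deployment can be sketched roughly as follows. This assumes the app: hello-world label that kubectl create deployment applies by default and a container named after the image; save it to a file of your choice and apply it with kubectl apply -f.

```yaml
# Rough declarative equivalent of the kubectl create deployment command.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
  labels:
    app: hello-world            # default label set by kubectl create deployment
spec:
  replicas: 1                   # single replica, as in the task
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
      - name: hello-world       # assumed container name
        image: vivekmanne/hello-world:v1
        ports:
        - containerPort: 80     # port the application listens on
```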

Expose the Deployment with a Service named

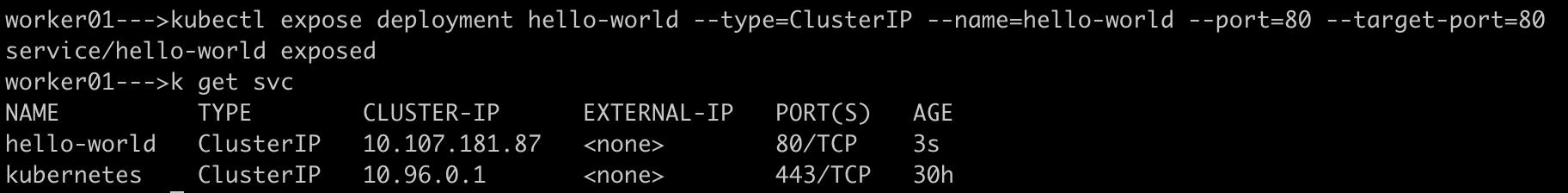

hello-worldof typeClusterIP. The Service routes traffic to the Pods controlled by the Deploymenthello-world.kubectl expose deployment hello-world --type=ClusterIP --name=hello-world --port=80 --target-port=80
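
For reference, a Service manifest that does roughly the same thing as the expose command might look like the sketch below. The selector app: hello-world is an assumption based on the default label that kubectl create deployment puts on the Pods; adjust it if your Pods carry different labels.

```yaml
# Rough declarative equivalent of the kubectl expose command.
apiVersion: v1
kind: Service
metadata:
  name: hello-world
spec:
  type: ClusterIP               # reachable only from inside the cluster
  selector:
    app: hello-world            # assumed Pod label
  ports:
  - port: 80                    # Service port
    targetPort: 80              # container port on the Pods
    protocol: TCP
```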

As you can see above, the Deployment is exposed as a ClusterIP Service on service port 80.

Make a request to the endpoint of the application on the context path /. You should see the message "Hello, World!". To do this, you need the IP address of one of the Pods created by the Deployment.

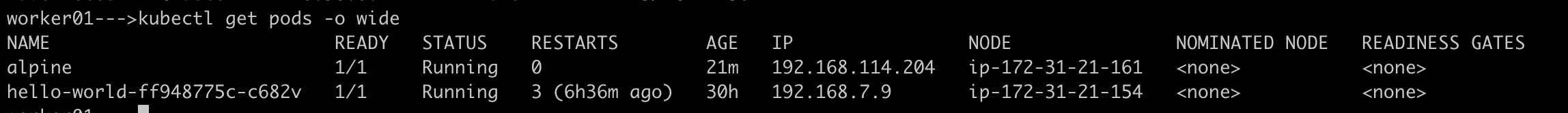

# Run an alpine pod, then list the pods along with their IP addresses
kubectl run alpine --image=alpine --restart=Never -- sleep 3600
kubectl get pods -o wide

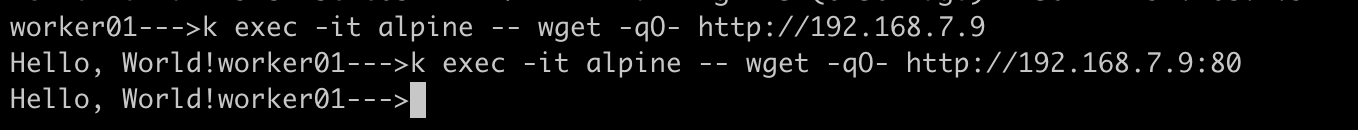

Then exec into the alpine pod and make a curl request to the Pod IP on port 80, where the application is running.

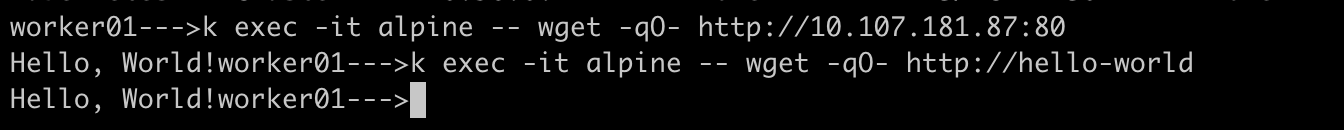

Alternatively, you can use the Service name or the ClusterIP of the Service, on the Service port, to access the application.

Implement Ingress on top of the service

Create an Ingress resource in the default namespace using the YAML below. Once it is applied with kubectl, check whether the Ingress has been created.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  rules:
  - host: "example.com"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-world
            port:
              number: 80

❇ Common Issue

failed to call webhook: Post "https://ingress-nginx-controller-admission.ingress-nginx.svc:443/networking/v1/ingresses?timeout=10s": context deadline exceeded

If you encounter this error when applying the Ingress resource, list the ValidatingWebhookConfiguration objects, delete the one created by the Ingress Controller using the commands below, and then reapply the Ingress.

kubectl get ValidatingWebhookConfiguration

kubectl delete ValidatingWebhookConfiguration <name of the resource>

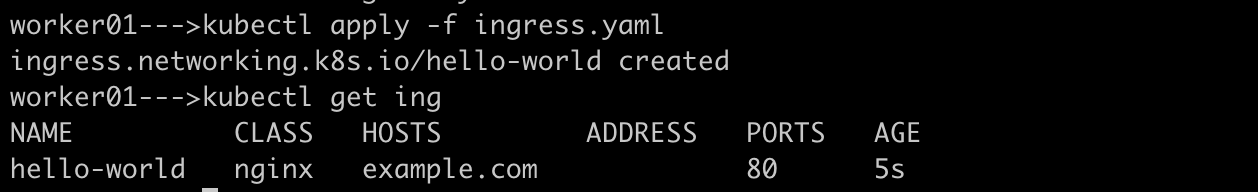

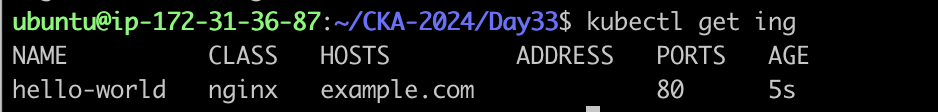

Check whether the Ingress was created in the desired namespace

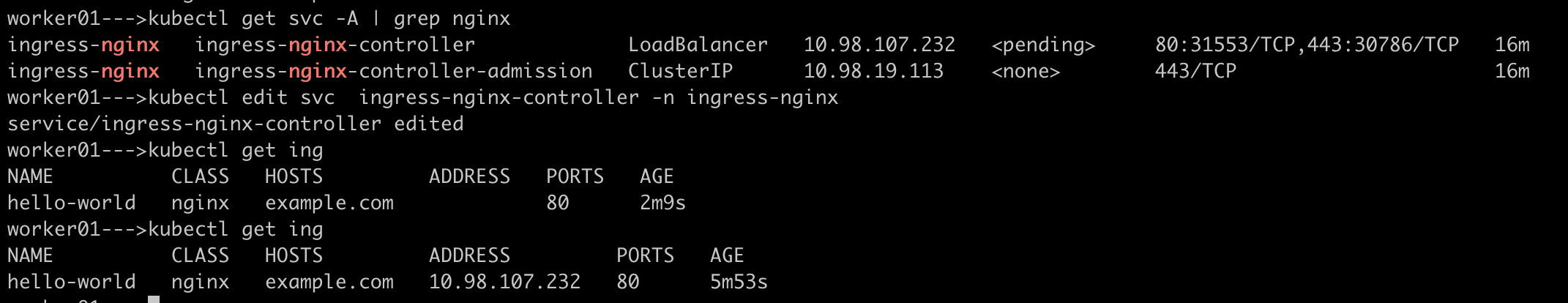

❇ Configuring NGINX Ingress Controller in a Multi-Node kubeadm Cluster

In a multi-node kubeadm Kubernetes cluster, where no Cloud Controller Manager (CCM) is present, the NGINX Ingress Controller (IC) and Ingress resource work as follows:

Deployment:

- The NGINX Ingress Controller is deployed in the ingress-nginx namespace.

- Ingress resources, which define the routing rules, are created in the same namespace as the Services they route to (the default namespace in this example).

Controller Behavior:

- The IC monitors Ingress resources across the cluster, configuring load balancing based on the rules and annotations provided, as long as the IngressClass name matches.
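
The class matching works through an IngressClass object. The ingress-nginx manifest installs one that looks roughly like the sketch below (labels omitted, and details may differ between versions); an Ingress selects it by setting ingressClassName to the same name.

```yaml
# Sketch of the IngressClass that ties Ingress resources to the NGINX controller.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                         # referenced by spec.ingressClassName
spec:
  controller: k8s.io/ingress-nginx    # controller that handles this class
```

You can list the classes available in your cluster with kubectl get ingressclass.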

No Automatic Load Balancer:

- Without a CCM, no external IP is automatically allocated for the load balancer in a kubeadm cluster, unlike in cloud environments.

Workaround:

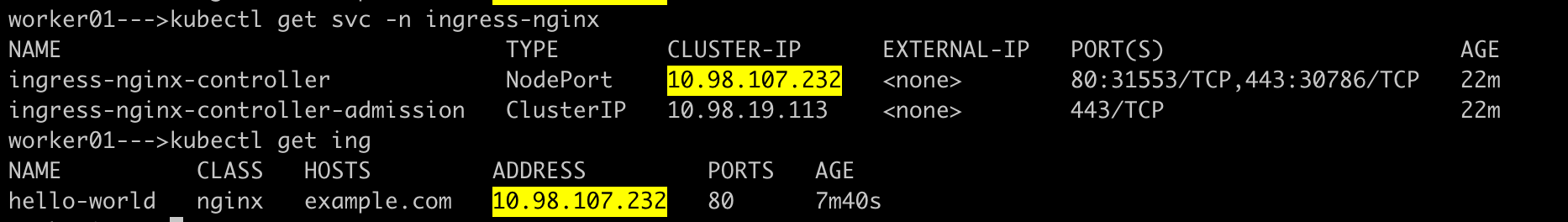

- To expose applications via Ingress, change the Ingress Controller service type to NodePort. This configuration makes the applications accessible from outside the cluster using the node's IP and the NodePort assigned to the Ingress Controller service.
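
One way to make this change is to edit the controller Service (for example with kubectl edit service ingress-nginx-controller -n ingress-nginx) so that its spec looks roughly like the fragment below. This is a sketch, not the full manifest: the selector and other fields are omitted, the port names http and https are assumed to match the upstream ingress-nginx manifest, and the nodePort value is optional (Kubernetes picks one from its NodePort range if it is left out). 31553 is used here only because it is the port assigned in this walkthrough.

```yaml
# Fragment of the ingress-nginx-controller Service (namespace: ingress-nginx)
spec:
  type: NodePort            # changed from LoadBalancer
  ports:
  - name: http
    port: 80
    targetPort: http
    nodePort: 31553         # optional; omit to let Kubernetes choose
  - name: https
    port: 443
    targetPort: https
```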

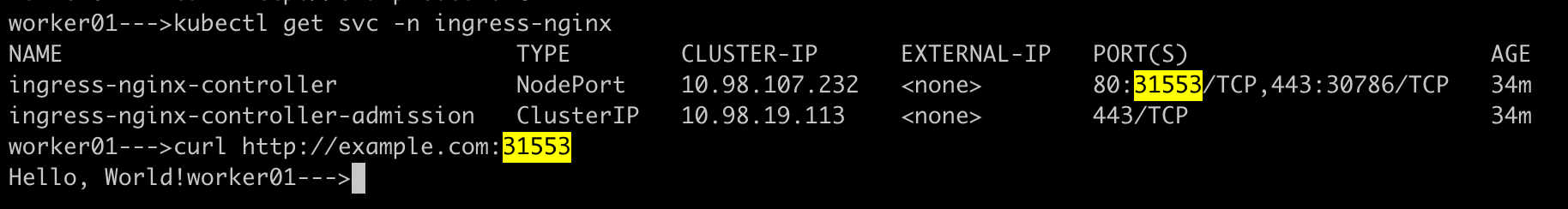

After running kubectl get ing, the Ingress resource shows that it has been assigned an address by the Ingress Controller:

NAME          CLASS   HOSTS         ADDRESS         PORTS   AGE
hello-world   nginx   example.com   10.98.107.232   80      30m

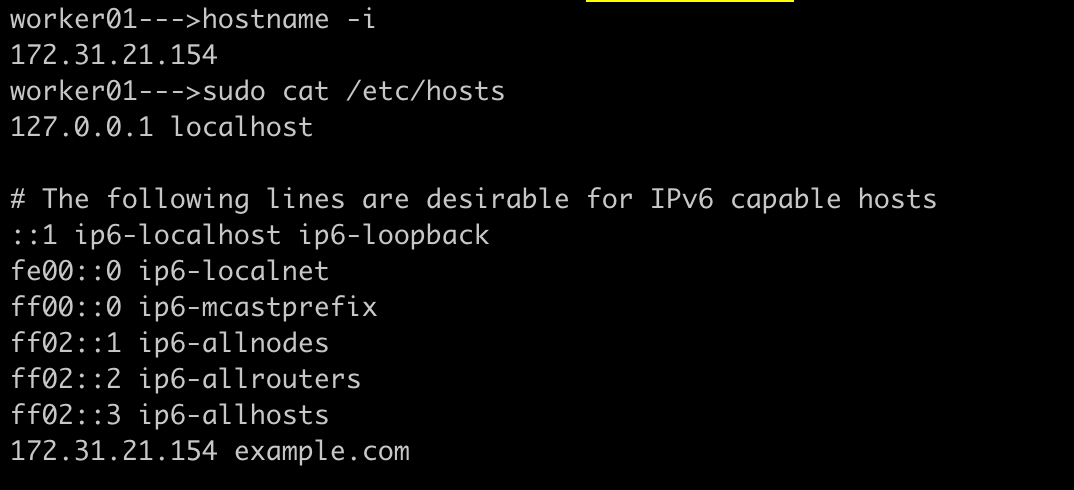

The Ingress address (10.98.107.232) is the IP address of the ingress-nginx-controller Service. To access the application using the domain example.com, we need to make the name resolvable by adding an entry to the /etc/hosts file on the node:

# Name resolution happens here: our domain example.com points to the node's IP address

172.31.21.154 example.com

Reason: This is necessary because the Ingress rule accepts only requests whose Host header is example.com and forwards them to the hello-world service on port 80. After updating the /etc/hosts file, we can access the application by running:

curl example.com:<NodePort>

This command resolves example.com to the node's IP and reaches the application through the Ingress Controller. The NodePort number for the NGINX Ingress Controller service is 31553, as you can see below.

💁 Common Issues

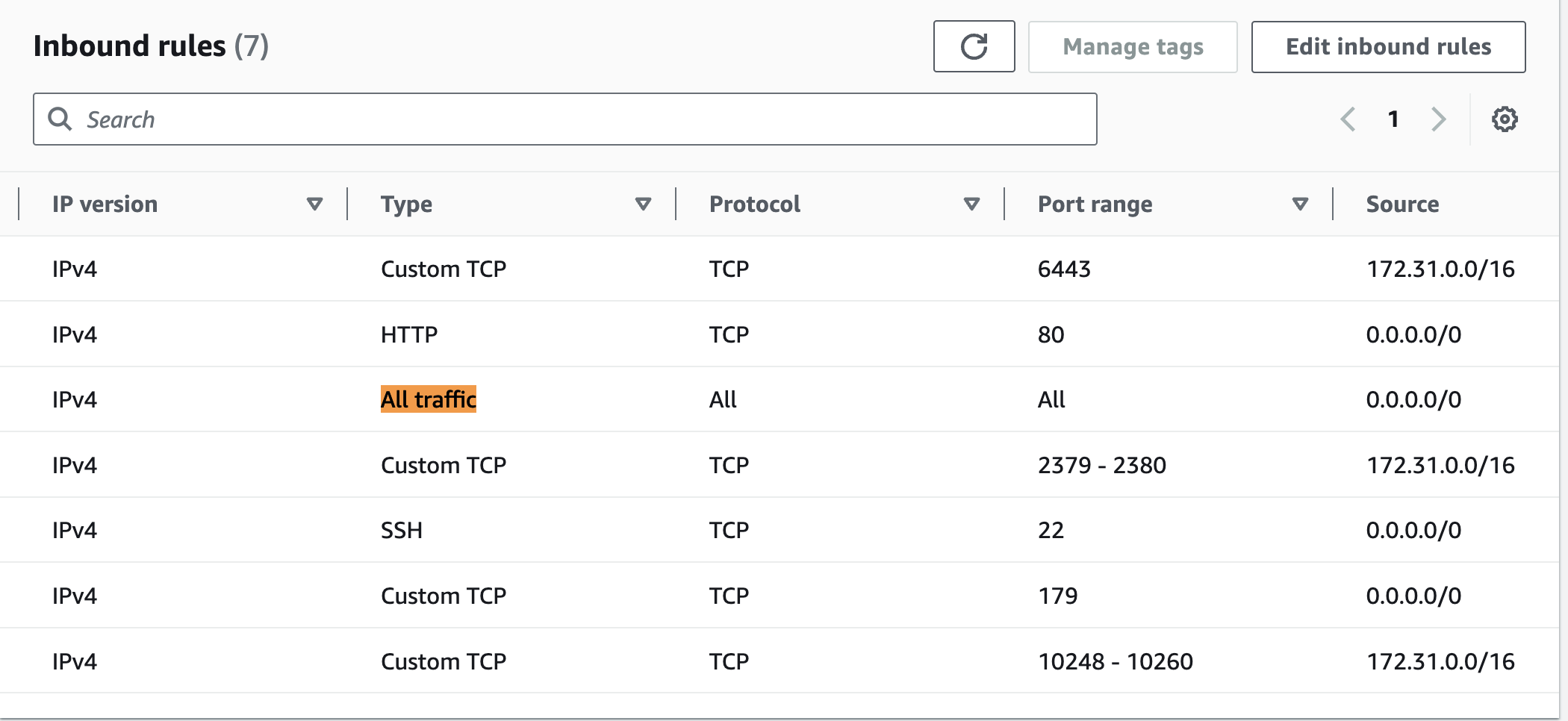

If you encounter connectivity issues between pods on different nodes, or when accessing services from pods, a workaround is to ensure that the security groups (SG) attached to your nodes allow all traffic from anywhere, as shown below.

For ingress issues, keep in mind:

- Even if the NGINX Ingress Controller service is exposed as a NodePort, you may not be able to access it using NodeIP:NodePort from worker nodes.

- The ingress controller strictly enforces rules based on host headers, not on direct IP access. If your ingress rules specify a domain (e.g., example.com), only requests with that domain in the Host header will be accepted.

- Accessing the service via NodeIP:NodePort will likely result in a "404 Not Found" error if the request does not match the ingress rules.
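
If you do want requests sent straight to NodeIP:NodePort (without a matching Host header) to reach the application, one option is a rule with no host field, which Kubernetes treats as matching all hosts. The sketch below is a hypothetical variant of the hello-world Ingress from this post; the name hello-world-any-host is made up for illustration.

```yaml
# Hypothetical variant: a rule without a host matches any Host header.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-any-host    # hypothetical name
spec:
  ingressClassName: nginx
  rules:
  - http:                       # no host field, so the rule applies to all hosts
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-world
            port:
              number: 80
```

This trades host-based isolation for convenience, so it suits a test cluster better than a shared one.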

#Kubernetes #Kubeadm #Multi-node-Cluster #IngressResource #IngressController #40DaysofKubernetes #CKASeries
