Low-Rank Adaptation (LoRA)

Mehul Pardeshi

Hey there! I’ve been diving deep into the world of AI and machine learning recently, and today I wanted to share something exciting that I’ve been exploring—Low-Rank Adaptation, or LoRA for short. It’s one of those innovations that, while sounding a bit technical at first, really simplifies and enhances how we fine-tune large language models (LLMs). Let’s break it down in a way that’s easy to digest, like I’m talking to you over coffee.

So, What Exactly Is LoRA?

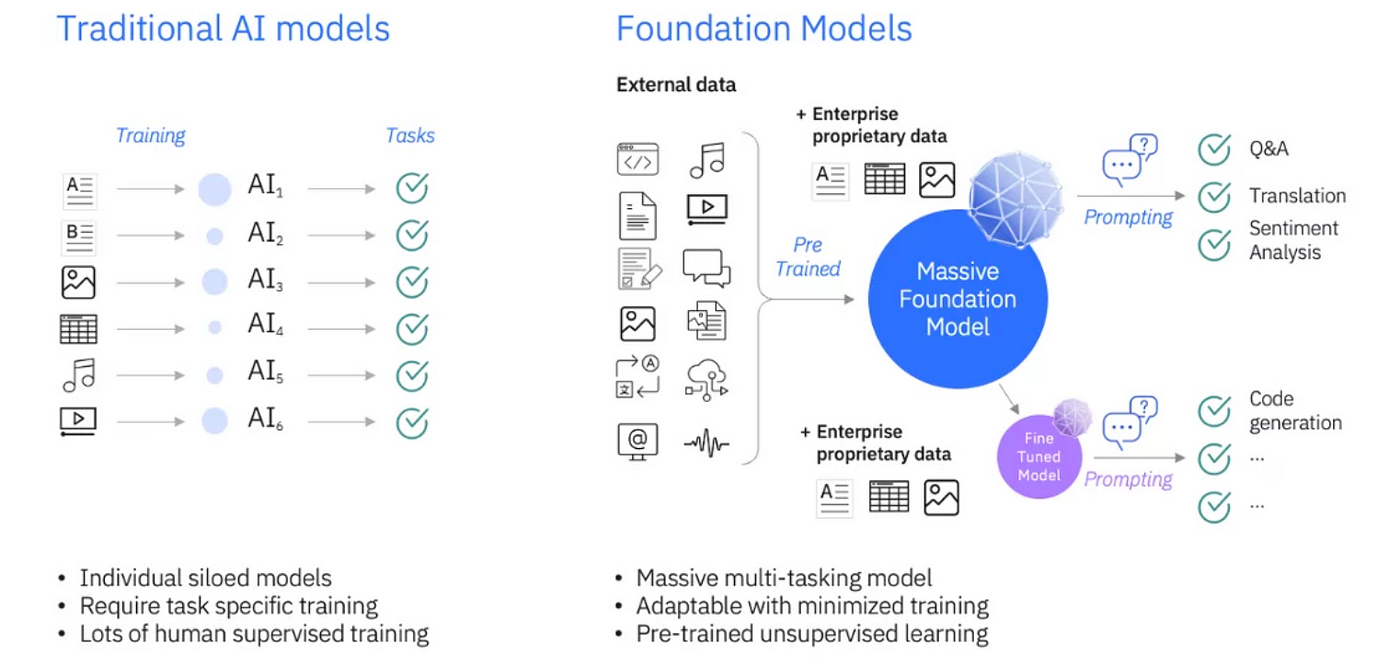

We know that fine-tuning large language models can be resource-heavy, requiring tons of data, computation, and storage. Enter LoRA: Low-Rank Adaptation is a smart method to reduce the number of trainable parameters during fine-tuning while still keeping performance solid. The idea is to freeze the model’s original weight matrices and learn only a small, low-rank update on top of them. So instead of training everything, we train a pair of much smaller matrices for each adapted layer, which saves memory and makes the whole process far more efficient.
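Here is the same idea as a formula, using the notation of the original LoRA paper (Hu et al., 2021) as I understand it. A layer with a frozen pretrained weight matrix $W_0$ computes its output $h$ for an input $x$ as shown below. Only $B$ and $A$ are trained, and $\alpha$ is a fixed scaling hyperparameter:

$$
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\quad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

The paper initializes $A$ randomly and $B$ to zero. That means $\Delta W = 0$ at the start of fine-tuning, so the model begins by behaving exactly like the pretrained one.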

If you’ve ever struggled with model fine-tuning because it’s just too demanding on resources, LoRA is like that friend who shows you a shortcut, but one that doesn’t compromise quality. For example, let’s say you’re working on a text generation model—LoRA lets you fine-tune it without needing to retrain the entire massive network. Neat, right?

The Magic of Low-Rank Matrices

I know matrices might sound like high school math coming back to haunt you, but stay with me; it’s not that bad. Imagine the weight matrices in a neural network: large grids of numbers that determine how the model processes data. Updating these matrices in full during training can be very costly in both time and memory. LoRA leaves the original matrices untouched. Instead, it expresses the change we want to make to each one as a low-rank matrix.

Let’s say you’ve got a weight matrix that’s, I don’t know, 1000x1000. That’s one million numbers. Instead of updating all of them, LoRA represents the update with two smaller matrices, say 1000x10 and 10x1000, which together hold only 20,000 numbers. Multiplying them gives a full-sized 1000x1000 update matrix whose rank is at most 10. That update is then added to the original, frozen weights. Think of it as storing a compact set of edits instead of a whole new copy of the file.
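To make those numbers concrete, here is a small NumPy sketch that uses the 1000x1000 example with rank 10. It counts parameters and checks the rank of the product. I’m calling the two small matrices B (1000x10) and A (10x1000), borrowing the LoRA paper’s naming. The random values are placeholders, not trained weights:

```python
import numpy as np

d, k, r = 1000, 1000, 10   # the 1000x1000 example, with rank 10

full_params = d * k            # one dense 1000x1000 update
lora_params = d * r + r * k    # B: 1000x10 plus A: 10x1000

print(f"full update:  {full_params:,} parameters")    # 1,000,000
print(f"LoRA factors: {lora_params:,} parameters")    # 20,000
print(f"{full_params // lora_params}x fewer")         # 50x fewer

# The product B @ A is a full-sized 1000x1000 matrix, but its rank is at most 10.
rng = np.random.default_rng(0)
B = rng.standard_normal((d, r))
A = rng.standard_normal((r, k))
delta_W = B @ A
print(delta_W.shape, np.linalg.matrix_rank(delta_W))  # (1000, 1000) 10
```

The savings grow with matrix size. For a d x d matrix, the ratio is d / (2r), so larger layers benefit even more at the same rank.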

LoRA’s Role in Fine-Tuning Models

Here’s where LoRA really shines: fine-tuning. When we fine-tune a model, we’re adjusting its parameters so it performs a specific task better, like making a language model write poetry or recognize medical terms. Normally, this means updating every parameter and keeping optimizer state for each one. With LoRA, the pretrained weights stay frozen and only the small low-rank matrices are trained. That makes the process faster and far less memory-intensive.
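Below is a minimal NumPy sketch of that training setup. The class name `LoRALinear`, the toy sizes, and the synthetic “task” (the target behaviour differs from the base layer by a rank-2 change) are all hypothetical choices for illustration. This is not any library’s API; real projects would more likely use a library such as Hugging Face PEFT. Following the LoRA paper, the sketch freezes `W0`, initializes `A` randomly and `B` to zero, and runs plain gradient descent on `A` and `B` only:

```python
import numpy as np


class LoRALinear:
    """Hypothetical minimal LoRA-wrapped linear layer, for illustration only."""

    def __init__(self, W0, r=4, alpha=8, seed=0):
        rng = np.random.default_rng(seed)
        d, k = W0.shape
        self.W0 = W0.copy()                         # pretrained weight: frozen
        self.A = rng.normal(0.0, 0.1, size=(r, k))  # trainable, small random init
        self.B = np.zeros((d, r))                   # trainable, zero init
        self.scale = alpha / r

    def forward(self, X):
        # X has shape (k, n), one column per example.
        # h = W0 x + (alpha / r) * B (A x); the full d x k update is never formed.
        return self.W0 @ X + self.scale * (self.B @ (self.A @ X))

    def train_step(self, X, Y, lr=0.05):
        """One gradient-descent step on mean squared error; only A and B change."""
        n = X.shape[1]
        err = self.forward(X) - Y                     # (d, n) residuals
        grad_delta = (2.0 / n) * err @ X.T            # dLoss / d(effective update)
        grad_B = self.scale * grad_delta @ self.A.T   # chain rule through B @ A
        grad_A = self.scale * self.B.T @ grad_delta
        self.B -= lr * grad_B
        self.A -= lr * grad_A
        return float(np.sum(err ** 2) / n)            # loss before this step

    def trainable_parameter_count(self):
        return self.A.size + self.B.size


# Toy setup: all sizes and data are made up for the demo.
rng = np.random.default_rng(1)
d, k, n = 16, 12, 200
W0 = rng.standard_normal((d, k))
U = rng.standard_normal((d, 2)) / np.sqrt(d)
V = rng.standard_normal((2, k)) / np.sqrt(k)
true_delta = U @ V                  # the change the new "task" needs (rank 2)
X = rng.standard_normal((k, n))
Y = (W0 + true_delta) @ X

layer = LoRALinear(W0)
assert np.allclose(layer.forward(X), W0 @ X)  # B = 0, so the adapter starts as a no-op

losses = [layer.train_step(X, Y) for _ in range(500)]
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.2e}")
print("W0 unchanged:", np.array_equal(layer.W0, W0))
print("trainable:", layer.trainable_parameter_count(), "vs full:", W0.size)
```

The loss drops while `W0` stays bit-for-bit identical. Everything the layer learned for the new task lives in `A` and `B`.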

From my perspective, it’s kind of like being able to change the wheels on a car to improve its handling without needing to build the car from scratch every time. With LoRA, we’re no longer locked into the “all-or-nothing” approach when it comes to tweaking our models.

Why LoRA Matters for Practitioners

Now, why does this matter for someone like me (and probably you)? Simple—LoRA opens up a world of possibilities for anyone working with large models on a budget. In the past, fine-tuning was often restricted to big tech companies with enormous computational resources. But now, with LoRA, even individuals or small teams can fine-tune large models without breaking the bank.

It’s like the difference between owning a car that only a professional mechanic can fix and one where you can easily swap out parts yourself, at home, with just a little effort. LoRA makes fine-tuning accessible to a much broader range of developers and researchers.

Practical Use Cases for LoRA

Let’s talk real-world examples. Where can we actually use LoRA? One area where it’s particularly helpful is in Natural Language Processing (NLP). If you’re fine-tuning a model for sentiment analysis, customer support, or even generating creative writing, LoRA can save you loads of time and computational cost.

Another place I see LoRA having a big impact is in domain-specific language models. Imagine you have a general language model, but you want to fine-tune it for legal or medical purposes. With LoRA, you don’t have to relearn everything from scratch; you keep the general model frozen and train only a small adapter for your domain.

In my experience, using LoRA is like taking a language model that already speaks fluent English and teaching it a specialized dialect, like legalese or medical terminology, without making it forget its original language skills.
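Here is a small NumPy sketch of how that works mechanically. It assumes one frozen base weight matrix shared across domains and two hypothetical adapters, which are random stand-ins for (B, A) pairs you would actually train on legal and medical data. Because the base weights are never modified, switching an adapter off gives back exactly the original layer’s output. Each extra domain costs only its small B and A matrices:

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, r, alpha = 32, 32, 4, 8
scale = alpha / r

# One frozen base weight shared by every domain.
W0 = rng.standard_normal((d, k))

# Hypothetical adapters: random stand-ins for (B, A) pairs that would have been
# trained separately on legal and medical data.
adapters = {
    "legal": (rng.standard_normal((d, r)), rng.standard_normal((r, k))),
    "medical": (rng.standard_normal((d, r)), rng.standard_normal((r, k))),
}


def forward(x, domain=None):
    """Base layer output, optionally with one domain's LoRA adapter added."""
    h = W0 @ x
    if domain is not None:
        B, A = adapters[domain]
        h = h + scale * (B @ (A @ x))
    return h


x = rng.standard_normal(k)
base_out = forward(x)
legal_out = forward(x, "legal")
medical_out = forward(x, "medical")

# Each adapter stores r * (d + k) numbers instead of a full d * k copy.
per_adapter = r * (d + k)
print("per-adapter parameters:", per_adapter, "vs full copy:", d * k)

# Switching the adapter off gives back exactly the base output,
# because W0 itself was never modified.
print("base behaviour intact:", np.array_equal(forward(x), base_out))
```

One design this enables is keeping a single copy of the base model in memory and loading whichever small adapter a given request needs.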

Some Nerdy Details (But Not Too Nerdy!)

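Okay, I promised to keep this human, but let’s briefly touch on some of the more technical aspects (I’m a tech geek at heart, after all!). When you apply LoRA, you inject those low-rank matrices alongside specific weight matrices in the model. Typically, LoRA is applied to the attention layers; think of attention as the model’s way of focusing on the important parts of the input.

Concretely, each attention layer has projection matrices for queries, keys, values, and the output. LoRA adds a trainable low-rank pair next to some of these matrices while the original projections stay frozen, and the original LoRA paper mostly adapted just the query and value projections. Even that small footprint cuts the number of trainable parameters dramatically. The authors report that, compared with fully fine-tuning GPT-3 175B with Adam, LoRA reduces trainable parameters by about 10,000 times and GPU memory requirements by about 3 times, with quality on par with full fine-tuning on their benchmarks.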
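To make the attention part concrete, here is a toy single-head attention layer in NumPy. LoRA is attached to the query and value projections, following the choice the original LoRA paper mostly used, while the key projection stays a plain frozen matrix. The class and function names, the 64-wide model, and rank 8 are hypothetical choices for illustration, not any framework’s API:

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


class LoRAProjection:
    """Frozen projection W0 plus a trainable low-rank update (illustrative only)."""

    def __init__(self, W0, r, alpha, rng):
        d_out, d_in = W0.shape
        self.W0 = W0                                    # frozen
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))  # trainable
        self.B = np.zeros((d_out, r))                   # trainable, zero init
        self.scale = alpha / r

    def __call__(self, X):
        # X: (seq_len, d_in); rows are tokens, so the weights are applied transposed.
        return X @ self.W0.T + self.scale * (X @ self.A.T) @ self.B.T

    def trainable_parameter_count(self):
        return self.A.size + self.B.size


def attention(X, q_proj, k_proj, v_proj):
    """Single-head scaled dot-product attention over a (seq_len, d_model) input."""
    Q, K, V = q_proj(X), k_proj(X), v_proj(X)
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    return softmax(scores) @ V


rng = np.random.default_rng(0)
d_model, r, alpha, seq_len = 64, 8, 16, 5


def frozen_weight():
    return rng.standard_normal((d_model, d_model)) / np.sqrt(d_model)


# LoRA on the query and value projections; the key projection stays plain and frozen.
q_proj = LoRAProjection(frozen_weight(), r, alpha, rng)
v_proj = LoRAProjection(frozen_weight(), r, alpha, rng)
W_k = frozen_weight()


def k_proj(X):
    return X @ W_k.T


X = rng.standard_normal((seq_len, d_model))
out = attention(X, q_proj, k_proj, v_proj)

full = 2 * d_model * d_model   # weights full fine-tuning of W_q and W_v would update
lora = q_proj.trainable_parameter_count() + v_proj.trainable_parameter_count()
print(out.shape, "trainable:", lora, "vs", full)


def lora_vs_full_params(d, r, n_matrices=2):
    """(LoRA trainable, full fine-tuning trainable) for n square d x d matrices."""
    return n_matrices * 2 * d * r, n_matrices * d * d


print(lora_vs_full_params(4096, 8))  # hypothetical 4096-wide layer -> (131072, 33554432)
```

At this toy size, the saving is only 4x. For a hypothetical 4096-wide layer at rank 8, the same two adapted matrices need 131,072 trainable parameters instead of about 33.5 million, a 256x reduction per layer.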

How LoRA Plays Nicely With Other Techniques

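Now, one thing I love about LoRA is how it works alongside other model efficiency techniques, like quantization or pruning. LoRA doesn’t replace these methods; it complements them. For example, QLoRA trains LoRA adapters on top of a base model whose frozen weights are quantized to 4 bits, which cuts the memory needed for fine-tuning even further. And because the learned low-rank update can be merged back into the original weights after training, LoRA itself adds no extra latency at inference time. This modularity makes LoRA a flexible tool in the fine-tuning toolkit.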
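Here is a rough NumPy sketch of the “quantized base plus LoRA” combination. QLoRA itself uses a more sophisticated 4-bit NormalFloat (NF4) format. Here, a simple symmetric int8 quantization stands in for it, plainly swapped in for readability. The frozen base is stored in int8, while the trainable A and B stay in float32. All sizes and function names are hypothetical:

```python
import numpy as np


def quantize_int8(W):
    """Symmetric per-tensor absmax quantization to int8 (a simple stand-in, not QLoRA's NF4)."""
    scale = float(np.abs(W).max()) / 127.0
    q = np.clip(np.round(W / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    return q.astype(np.float32) * np.float32(scale)


rng = np.random.default_rng(0)
d, k, r, alpha = 256, 256, 8, 16
W0 = rng.standard_normal((d, k)).astype(np.float32)

# The frozen base is stored in int8: 1 byte per weight instead of 4.
W0_q, w_scale = quantize_int8(W0)

# The LoRA factors stay in float32, since they are what gets trained.
A = rng.normal(0.0, 0.01, size=(r, k)).astype(np.float32)
B = np.zeros((d, r), dtype=np.float32)


def forward(x):
    # Dequantize the base on the fly; in training, gradients would reach only A and B.
    return dequantize(W0_q, w_scale) @ x + (alpha / r) * (B @ (A @ x))


x = rng.standard_normal(k).astype(np.float32)
h = forward(x)

print("float32 base bytes:", W0.nbytes)
print("int8 base bytes:   ", W0_q.nbytes)
print("LoRA factor bytes: ", A.nbytes + B.nbytes)
print("max weight error:  ", float(np.abs(dequantize(W0_q, w_scale) - W0).max()))
```

The base shrinks 4x in this sketch, at the cost of a small rounding error per weight (at most half a quantization step). The adapter is tiny either way, so keeping it in higher precision costs little memory.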

Looking Ahead: What’s Next for LoRA?

As machine learning models continue to grow, the need for scalable, efficient fine-tuning methods like LoRA will only become more important. I believe we’re just scratching the surface of what’s possible here. Imagine a future where LoRA-like techniques make it so that anyone, from startups to students, can fine-tune and deploy powerful models without requiring huge infrastructure.

LoRA gives us a glimpse of a future where AI becomes truly democratized, where everyone has access to powerful tools that don’t demand massive compute resources. And that’s something I’m really excited to be a part of.

Final Thoughts

If you’ve ever felt overwhelmed by the computational demands of fine-tuning large models, LoRA offers a breath of fresh air. It’s efficient and accessible, and in the original paper’s experiments it matched or beat full fine-tuning on quality. From my perspective, this technique is a game-changer for the field of AI and machine learning. Whether you’re working on text generation, classification tasks, or specialized domains, LoRA is something you should definitely have in your toolkit.

I hope this gave you a clear, human-friendly overview of what LoRA is all about! Feel free to dive into it further, experiment, and, most importantly, have fun with it.

That’s it for now—happy fine-tuning!

Written by

Mehul Pardeshi

I am an AI and Data Science enthusiast with hands-on experience in machine learning, deep learning, and generative AI. I am an active member of the ML community under Google Developer Clubs, where I regularly lead workshops. I am also passionate about blogging, sharing insights on AI and ML to educate and inspire others. I am certified in generative AI, Python, and machine learning, and I continue to explore innovative applications of AI with my fellow colleagues.