What is Container Orchestration?

Megha Sharma

Container orchestration refers to the automated management and coordination of containerized applications across clusters of machines. It includes tasks like deploying, scaling, networking, and monitoring containers to ensure that applications run smoothly and efficiently, especially in complex, dynamic environments where many containers operate simultaneously.

Container orchestration automatically provisions, deploys, scales, and manages containerized applications, so teams do not have to worry about the underlying infrastructure. Developers can implement container orchestration anywhere containers run, which lets them automate the life cycle management of those containers.

Container orchestration automates the deployment, management, scaling, and networking of containers across the cluster. It is focused on managing the life cycle of containers.

Enterprises that need to deploy and manage hundreds or thousands of Linux® containers and hosts can benefit from container orchestration.

Container orchestration is used to automate the following tasks at scale:

Configuring and scheduling of containers

Provisioning and deployment of containers

Redundancy and availability of containers

Scaling up or removing containers to spread application load evenly across host infrastructure

Movement of containers from one host to another if there is a shortage of resources in a host, or if a host dies

Allocation of resources between containers

Exposure of services running in a container to the outside world

Load balancing and service discovery between containers

Health monitoring of containers and hosts

👉 How does container orchestration work?

Container orchestration uses a declarative approach: you define the desired end state instead of describing the steps needed to reach it. Developers write a configuration file that defines where the container images are, how to establish and secure the network between containers, and how to provision container storage and resources. Container orchestration tools use this file to achieve the requested end state automatically.
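To make the declarative idea concrete, here is a minimal sketch of a reconciliation step: it compares the desired state with what is currently running and works out the actions needed to close the gap. The `DesiredState` class, the `reconcile` function, and the image names are hypothetical and chosen only for illustration; real orchestrators run a far more elaborate version of this loop continuously.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DesiredState:
    """What the configuration file asks for (hypothetical, simplified)."""
    image: str
    replicas: int


def reconcile(desired: DesiredState, running: list[str]) -> list[str]:
    """Return the actions that move the running set toward the desired state.

    `running` holds the image of each container that is currently up.
    """
    actions: list[str] = []
    # Containers running the wrong image are stopped first.
    stale = [img for img in running if img != desired.image]
    actions += [f"stop {img}" for img in stale]
    # Count the containers that already match the desired image.
    current = len(running) - len(stale)
    if current < desired.replicas:
        actions += [f"start {desired.image}"] * (desired.replicas - current)
    elif current > desired.replicas:
        actions += [f"stop {desired.image}"] * (current - desired.replicas)
    return actions


if __name__ == "__main__":
    desired = DesiredState(image="web:2.0", replicas=3)
    print(reconcile(desired, ["web:1.0", "web:2.0"]))
```

Note that the caller never says "start two containers"; it only states that three replicas of `web:2.0` should exist, and the actions follow from the difference.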

When you deploy a new container, the tool or platform automatically schedules your containers and finds the most appropriate host for them based on the predetermined constraints or requirements defined in the configuration file, such as CPU, memory, proximity to other hosts, or even metadata.

In any container orchestration tool, a configuration file (YAML or JSON) is written to describe the application’s setup, such as the location of Docker images, network configurations between containers, storage volume mounts, and log storage. These configuration files are version-controlled and used to deploy applications across various development and testing environments before moving to production clusters.
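As an illustration of such a configuration file, the sketch below builds a Kubernetes Deployment manifest as a Python dictionary and prints it as JSON. The application name, the image location `registry.example.com/web:1.0`, the resource requests, and the mount path are all placeholder assumptions; only the overall field layout follows the Kubernetes `apps/v1` Deployment format.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Deployment manifest (all values are placeholders)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # location of the container image
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"}
                            },
                            "volumeMounts": [
                                {"name": "data", "mountPath": "/var/lib/app"}
                            ],
                        }
                    ],
                    # Scratch volume that lives as long as the pod does.
                    "volumes": [{"name": "data", "emptyDir": {}}],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("web", "registry.example.com/web:1.0", 3)
    print(json.dumps(manifest, indent=2))
```

Saved to a file and committed to version control, output like this could be applied to a cluster with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.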

Containers are deployed onto hosts, typically in replicated groups. The orchestration tool schedules deployments, locating suitable hosts based on constraints in the configuration file, such as CPU or memory availability. Containers can also be placed according to labels, metadata, or proximity to other hosts. Once a container is running, the orchestration tool manages its life cycle according to the container's definition (for example, the image built from a Dockerfile together with the settings in the configuration file).
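The placement step can be sketched as a filter followed by a score. The example below is a toy scheduler, not any real tool's algorithm: `Host`, `Request`, and `schedule` are hypothetical names, and the "most free memory left" scoring rule is just one simple assumption among many possible strategies.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Host:
    name: str
    free_cpu: float      # CPU cores still available
    free_mem_mb: int     # memory still available, in MiB
    labels: dict = field(default_factory=dict)


@dataclass
class Request:
    cpu: float
    mem_mb: int
    required_labels: dict = field(default_factory=dict)


def schedule(req: Request, hosts: list[Host]) -> Optional[Host]:
    """Pick a host for one container, or return None if nothing fits."""
    # Filter: keep hosts with enough resources and all required labels.
    feasible = [
        h for h in hosts
        if h.free_cpu >= req.cpu
        and h.free_mem_mb >= req.mem_mb
        and all(h.labels.get(k) == v for k, v in req.required_labels.items())
    ]
    if not feasible:
        return None
    # Score: prefer the host with the most headroom left after placement.
    best = max(feasible, key=lambda h: (h.free_mem_mb - req.mem_mb, h.free_cpu - req.cpu))
    # Reserve the resources so later requests see the updated capacity.
    best.free_cpu -= req.cpu
    best.free_mem_mb -= req.mem_mb
    return best


if __name__ == "__main__":
    hosts = [
        Host("node-a", 2.0, 1024, {"disk": "ssd"}),
        Host("node-b", 4.0, 4096, {"disk": "hdd"}),
        Host("node-c", 1.0, 8192, {"disk": "ssd"}),
    ]
    chosen = schedule(Request(cpu=1.5, mem_mb=512, required_labels={"disk": "ssd"}), hosts)
    print(chosen.name if chosen else "no suitable host")
```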

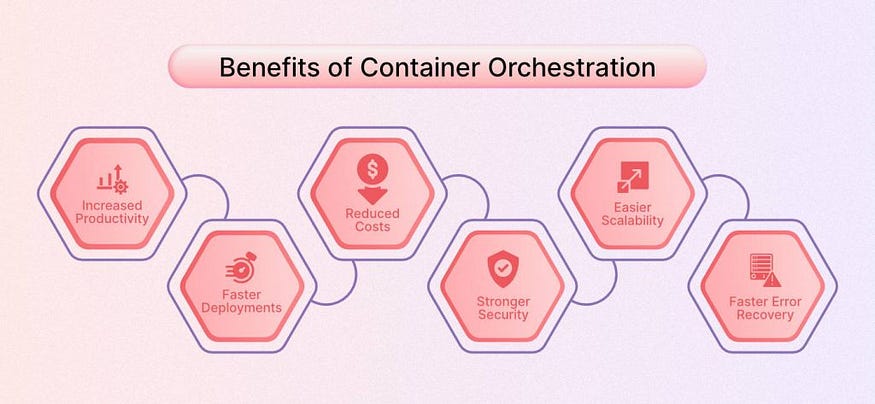

👉 Benefits of container orchestration:

Increased productivity: Container orchestration tools remove the burden of individually installing and managing each container in your system, in turn reducing errors and freeing development teams to focus on application improvement.

Faster deployments: Container orchestration tools make deploying containers more user-friendly. New containerized applications can quickly be created as needed to address increasing traffic.

Reduced costs: One of the biggest advantages of containers is that they have lower overhead and use fewer resources than traditional virtual machines.

Stronger security: Container orchestration tools help users share resources more securely. Containers also isolate application processes, improving the application's overall security.

Easier scalability: Container orchestration tools enable users to scale applications with a single command.

Faster error recovery: Container orchestration platforms can detect issues like infrastructure failures and automatically work around them, helping maintain high availability and increase the uptime of your application.

Automated Deployment: Orchestration platforms automate the process of deploying containers, handling the complexities of starting and stopping containers, and ensuring that the right versions of applications are running.
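The error-recovery benefit above can be illustrated with a small sketch: given where each container is placed and which hosts still pass their health checks, containers on failed hosts are moved to the least-loaded healthy host. The function name `reschedule_failed` and the container and node names are hypothetical, and real platforms also weigh resources, constraints, and restart policies.

```python
from __future__ import annotations


def reschedule_failed(placements: dict[str, str], healthy: set[str]) -> dict[str, str]:
    """Return new container-to-host placements after some hosts have failed.

    `placements` maps container name to host name; `healthy` is the set of
    hosts that are still passing health checks.
    """
    if not healthy:
        raise RuntimeError("no healthy hosts available")
    # Count how many containers each healthy host already runs.
    load = {host: 0 for host in healthy}
    for host in placements.values():
        if host in load:
            load[host] += 1
    result: dict[str, str] = {}
    for container, host in sorted(placements.items()):
        if host in healthy:
            result[container] = host  # nothing to do
            continue
        # Move the container to the least-loaded healthy host (ties by name).
        target = min(sorted(load), key=load.get)
        load[target] += 1
        result[container] = target
    return result


if __name__ == "__main__":
    before = {"web-1": "node-a", "web-2": "node-b", "web-3": "node-b", "db-1": "node-c"}
    # node-b has stopped responding to health checks.
    print(reschedule_failed(before, healthy={"node-a", "node-c"}))
```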

👉 Why Container Orchestration is Important:

Operational Efficiency: Automates routine operational tasks, freeing up developers and system administrators to focus on other priorities.

Scalability: Ensures that applications can scale up to meet demand without manual intervention, whether across a few machines or hundreds.

Flexibility and Portability: Orchestration platforms work across different environments (cloud, on-premise, hybrid), making it easy to move and manage applications in various settings.

Resilience and Availability: Increases the availability and reliability of applications by ensuring that services remain up and running, even in the event of hardware failures or other disruptions.
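Scaling without manual intervention usually comes down to a small calculation that the platform repeats on a schedule. The sketch below is modeled on the ratio rule documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)); the function name, the default bounds, and the sample numbers are assumptions for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Compute a replica count from an observed metric and its target value."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale proportionally to how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Four replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```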

👉 Container orchestration examples and use cases:

What are some examples of container orchestration, and why do we need to orchestrate containers in the first place?

In modern development, containerization has become a primary technology for building cloud-native applications. Rather than large monolithic applications, developers can now use individual, loosely coupled components (commonly known as microservices) to compose applications.

While containers are generally smaller, more efficient, and provide more portability, they do come with a caveat. The more containers you have, the harder it is to operate and manage them — a single application may contain hundreds or even thousands of individual containers that need to work together to deliver application functions.

As the number of containerized applications continues to grow, managing them at scale is nearly impossible without the use of automation. This is where container orchestration comes in, performing critical life cycle management tasks in a fraction of the time.

Let’s imagine that you have 50 containers that you need to upgrade. You could do everything manually, but how much time and effort would your team have to spend to get the job done? With container orchestration, you can write a configuration file, and the container orchestration tool will do everything for you.
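A rough sketch of what the tool does on your behalf for those 50 containers: it upgrades them in small batches so that most of the fleet keeps serving traffic at any moment. The `rolling_update` function, the `max_unavailable` parameter name, and the version strings are hypothetical; in a real platform each batch would also wait for health checks before the next one starts.

```python
from __future__ import annotations


def rolling_update(versions: dict[str, str], new_version: str, max_unavailable: int) -> list[list[str]]:
    """Upgrade containers in batches and return the batches in order.

    `versions` maps container name to its running version and is updated
    in place.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    pending = [name for name, version in versions.items() if version != new_version]
    batches: list[list[str]] = []
    for start in range(0, len(pending), max_unavailable):
        batch = pending[start:start + max_unavailable]
        for name in batch:
            # Stand-in for: stop the old container, start the new one.
            versions[name] = new_version
        batches.append(batch)
    return batches


if __name__ == "__main__":
    fleet = {f"app-{i}": "1.0" for i in range(50)}
    batches = rolling_update(fleet, "2.0", max_unavailable=10)
    print(f"{len(batches)} batches, all upgraded: {set(fleet.values()) == {'2.0'}}")
```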

This is just one example of how container orchestration can help reduce operational workloads. Now, consider how long it would take to deploy, scale, and secure those same containers if they were built on different operating systems and in different languages.

👉 Container Orchestration Tools & Platforms:

There are several popular container orchestration tools and platforms available, each with its own features, advantages, and use cases. Below is an overview of the major container orchestration tools:

Kubernetes (K8s)

Kubernetes is the most widely used and comprehensive container orchestration platform. Originally developed by Google, it’s now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes automates container deployment, scaling, load balancing, and management across clusters of machines.

Kubernetes lets us build application services that span multiple containers, schedule those containers across the cluster, scale them, and manage their life cycle. It eliminates many of the manual processes involved in deploying and scaling containerized applications, and it provides a platform for managing clusters easily and efficiently.

Kubernetes has become an ideal platform for hosting cloud-native apps that require rapid scaling and deployment. Kubernetes also provides load balancing and portability, letting teams move applications across different platforms without redesigning them.

Docker Swarm

Docker Swarm is also a container orchestration tool, meaning that it allows the user to manage multiple containers deployed across multiple host machines.

Docker Swarm is Docker’s native clustering and orchestration tool. It is simpler and more lightweight than Kubernetes, making it easy to set up and manage smaller containerized environments.

Apache Mesos

Apache Mesos is a general-purpose cluster manager that can handle containerized and non-containerized workloads. Marathon is a container orchestration framework built on top of Mesos, often used in larger, more complex environments.

Apache Mesos is slightly older than Kubernetes. It is an open-source software project originally developed at the University of California at Berkeley and later adopted by organizations like Twitter, Uber, and PayPal. Mesos' lightweight interface lets it scale easily up to 10,000 nodes (or more) and allows frameworks that run on top of it to evolve independently. Its APIs support popular languages like Java, C++, and Python, and it also supports out-of-the-box high availability. Unlike Swarm or Kubernetes, however, Mesos only provides management of the cluster, so a number of frameworks have been built on top of Mesos, including Marathon, a “production-grade” container orchestration platform.

Azure Kubernetes Service (AKS)

Azure Kubernetes Service (AKS) is a fully managed Kubernetes container orchestration service provided by Microsoft Azure. It allows users to deploy, manage, and scale containerized applications using Kubernetes without the complexity of handling the underlying infrastructure. AKS automates critical tasks such as health monitoring, scaling, patching, and upgrading, making it easier to manage Kubernetes clusters.

AKS manages the Kubernetes control plane (the master nodes), and users manage the worker nodes. Users can use AKS to deploy, scale, and manage Docker containers and container-based applications across a cluster of container hosts. As a managed Kubernetes service, AKS does not charge for the control plane in its free pricing tier: you pay only for the worker nodes within your clusters, not for the masters. You can create an AKS cluster in the Azure portal, with the Azure CLI, or with template-driven deployment options such as Resource Manager templates and Terraform.

Oracle Kubernetes Engine (OKE)

Oracle Kubernetes Engine (OKE) is a fully managed, scalable, and highly available Kubernetes service offered by Oracle Cloud Infrastructure (OCI). OKE allows users to deploy, manage, and scale containerized applications using Kubernetes, with Oracle handling the complexities of Kubernetes control plane management. It’s designed for enterprises seeking to run cloud-native applications in a secure and high-performance environment with tight integration into Oracle Cloud’s broader ecosystem.

Amazon Elastic Kubernetes Service (Amazon EKS)

Amazon Elastic Kubernetes Service (Amazon EKS) is a fully managed Kubernetes service provided by AWS that simplifies running Kubernetes clusters in the cloud or on-premises. Amazon EKS handles the operational complexities of Kubernetes, such as cluster provisioning, scaling, patching, and control plane management, allowing developers to focus on deploying and managing applications. EKS is widely used for running containerized applications in production and benefits from deep integration with AWS services.

Google Kubernetes Engine (GKE)

Google Kubernetes Engine (GKE) is a fully managed Kubernetes service provided by Google Cloud. It simplifies deploying, managing, and scaling containerized applications using Kubernetes, benefiting from Google Cloud’s extensive infrastructure and tooling. GKE offers a robust and scalable platform for running containerized applications with powerful integrations into Google’s cloud services.
