Setting Up an AWS EKS Cluster Using Terraform: A Beginner-Friendly Guide

Amash Ansari


Amazon Elastic Kubernetes Service (EKS) lets you run Kubernetes on AWS without installing or operating your own Kubernetes control plane. In this guide, I’ll walk you through creating an EKS cluster with Terraform, an Infrastructure as Code (IaC) tool that automates provisioning.

By the end of this post, you'll have a fully operational Kubernetes cluster running in AWS. Let’s get started!

Prerequisites

Make sure you have the following installed before proceeding:

AWS CLI: To interact with AWS services from your terminal.

Terraform: To manage AWS infrastructure as code.

kubectl: To interact with the Kubernetes cluster once it is running (used in Step 13).

You also need an AWS account with sufficient permissions to create VPCs, EKS clusters, EC2 instances, security groups, and IAM roles.

Step 1: Configure AWS Credentials

First, configure the AWS CLI with your access keys by running the following command:

```bash
aws configure
```

Enter your AWS Access Key ID, Secret Access Key, Default region name, and Default output format. Ensure that the IAM user or role you are using has the required permissions to create EKS clusters.
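Terraform's AWS provider reads the same shared credentials that `aws configure` stores under `~/.aws`, so no keys need to go into your `.tf` files. If you keep several named profiles, a minimal sketch of pinning one in `provider.tf` (created in Step 6) looks like this; `my-profile` is a hypothetical profile name, and this block would replace that step's provider block rather than sit beside it.

```hcl
provider "aws" {
  region = var.aws_region
  # Hypothetical profile name; use one defined in ~/.aws/credentials or ~/.aws/config
  profile = "my-profile"
}
```

Setting the `AWS_PROFILE` environment variable before running Terraform achieves the same result without editing any code.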

Step 2: Create a VPC Using Terraform

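Create a file named vpc.tf to define the network. This configuration creates a Virtual Private Cloud (VPC) with two private and two public subnets, a single NAT gateway so that nodes in the private subnets can reach the internet, and DNS support enabled. The kubernetes.io tags let Kubernetes discover which subnets to use for internet-facing and internal load balancers.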

```hcl
# Look up the Availability Zones in the configured region
data "aws_availability_zones" "azs" {}

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "5.13.0"

  name = var.vpc_name
  cidr = var.vpc_cidr

  # Use the first two AZs: one private and one public subnet in each
  azs             = slice(data.aws_availability_zones.azs.names, 0, 2)
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  # One shared NAT gateway gives the private subnets outbound internet access
  enable_nat_gateway = true
  single_nat_gateway = true

  enable_dns_hostnames = true
  enable_dns_support   = true

  tags = {
    Name                                    = var.vpc_name
    "kubernetes.io/cluster/${var.eks_name}" = "shared"
  }

  # Private subnets host internal load balancers
  private_subnet_tags = {
    "kubernetes.io/cluster/${var.eks_name}" = "shared"
    "kubernetes.io/role/internal-elb"       = "1"
  }

  # Public subnets host internet-facing load balancers
  public_subnet_tags = {
    "kubernetes.io/cluster/${var.eks_name}" = "shared"
    "kubernetes.io/role/elb"                = "1"
  }
}
```
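The subnet ranges in vpc.tf are hard-coded to fit inside 10.0.0.0/16, so changing `vpc_cidr` would leave them outside the VPC. One option, sketched here assuming `vpc_cidr` stays a /16 and you want the same /24 layout, is to derive them with Terraform's built-in `cidrsubnet` function. The `local.private_subnets` and `local.public_subnets` names are hypothetical.

```hcl
locals {
  # cidrsubnet(prefix, newbits, netnum): adding 8 bits to a /16 yields /24 subnets.
  # With vpc_cidr = "10.0.0.0/16" these evaluate to the same ranges as vpc.tf:
  # private -> 10.0.1.0/24, 10.0.2.0/24; public -> 10.0.101.0/24, 10.0.102.0/24
  private_subnets = [for i in [1, 2] : cidrsubnet(var.vpc_cidr, 8, i)]
  public_subnets  = [for i in [101, 102] : cidrsubnet(var.vpc_cidr, 8, i)]
}
```

The module block would then use `private_subnets = local.private_subnets` and `public_subnets = local.public_subnets`.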

Step 3: Define Variables

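Create a file named variables.tf to define reusable variables such as the VPC name, CIDR block, and EKS cluster name, so you can adjust these values without modifying the core configuration. Note that vpc_name and eks_name have no default, so Terraform will prompt for them during plan and apply unless you pass them with -var or a terraform.tfvars file.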

```hcl
variable "aws_region" {
  description = "AWS region"
  type        = string
  default     = "us-east-1"
}

variable "vpc_name" {
  description = "VPC name"
  type        = string
}

variable "vpc_cidr" {
  description = "VPC CIDR block"
  type        = string
  default     = "10.0.0.0/16"
}

variable "eks_name" {
  description = "AWS EKS cluster name"
  type        = string
}

variable "sg_name" {
  description = "Security group name"
  type        = string
  default     = "aws-eks-sg"
}
```
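Because `vpc_name` and `eks_name` have no defaults, one way to supply them is a terraform.tfvars file in the same directory, which Terraform loads automatically. The values below are placeholders; choose your own.

```hcl
# terraform.tfvars (placeholder values)
vpc_name = "my-eks-vpc"
eks_name = "my-eks-cluster"
```

Alternatively, pass them on the command line, for example `terraform plan -var="eks_name=my-eks-cluster"`.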

Step 4: Create Security Groups

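Now set up a security group that controls network access to the worker nodes (eks.tf attaches it to them). Create a file named security-groups.tf with the following content. The ingress rule allows all traffic from a single address (49.43.153.70/32 in this example); replace it with your own public IP in CIDR notation. The egress rule allows all outbound traffic.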

```hcl
# Security group attached to the EKS worker nodes
resource "aws_security_group" "eks-sg" {
  name   = var.sg_name
  vpc_id = module.vpc.vpc_id
}

# Allow all inbound traffic from one trusted IP (replace with your own /32)
resource "aws_security_group_rule" "eks-sg-ingress" {
  description       = "Allow all inbound traffic from a trusted IP"
  type              = "ingress"
  from_port         = 0
  to_port           = 0
  protocol          = "-1" # "-1" means all protocols
  security_group_id = aws_security_group.eks-sg.id
  cidr_blocks       = ["49.43.153.70/32"]
}

# Allow all outbound traffic
resource "aws_security_group_rule" "eks-sg-egress" {
  description       = "Allow all outbound traffic"
  type              = "egress"
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  security_group_id = aws_security_group.eks-sg.id
  cidr_blocks       = ["0.0.0.0/0"]
}
```
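Hard-coding an IP address means editing security-groups.tf whenever your address changes. A minimal sketch of moving it into a variable instead; `trusted_cidr` is a hypothetical name, and the validation uses the built-in `cidrhost` function only to reject strings that are not valid CIDR blocks.

```hcl
# Hypothetical variable holding the address allowed to reach the nodes
variable "trusted_cidr" {
  description = "Public IP allowed inbound, in CIDR notation (e.g. 203.0.113.10/32)"
  type        = string

  validation {
    # cidrhost() fails on malformed input, so can() returns false for it
    condition     = can(cidrhost(var.trusted_cidr, 0))
    error_message = "The trusted_cidr value must be a valid CIDR block, such as 203.0.113.10/32."
  }
}
```

The ingress rule would then use `cidr_blocks = [var.trusted_cidr]`, and the value can live in terraform.tfvars alongside the other variables.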

Step 5: Set Up the EKS Cluster

With the network and security setup complete, create a file named eks.tf to define the EKS cluster and its managed node group. The worker nodes are placed in the private subnets created in Step 2.

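```hcl
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 20.0"

  cluster_name = var.eks_name
  # Pick a Kubernetes version that EKS currently supports
  cluster_version = "1.30"

  # Module v20 keeps the public API endpoint disabled by default;
  # enable it so kubectl on your machine can reach the cluster (Step 13)
  cluster_endpoint_public_access = true

  # Create an IAM OIDC provider for IAM Roles for Service Accounts (IRSA)
  enable_irsa = true

  vpc_id     = module.vpc.vpc_id
  subnet_ids = module.vpc.private_subnets

  tags = {
    cluster = var.eks_name
  }

  # Settings shared by every EKS managed node group
  eks_managed_node_group_defaults = {
    ami_type = "AL2_x86_64"
    # t2.micro keeps costs low but fits only a few pods per node;
    # consider a larger type (e.g. t3.medium) for real workloads
    instance_types         = ["t2.micro"]
    vpc_security_group_ids = [aws_security_group.eks-sg.id]
  }

  # One managed node group that scales between 2 and 3 nodes
  eks_managed_node_groups = {
    node_group = {
      min_size     = 2
      max_size     = 3
      desired_size = 2
    }
  }
}
```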
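Version 20 of the EKS module leaves the public API endpoint disabled unless `cluster_endpoint_public_access = true` is set, and without it kubectl on your laptop cannot reach the cluster. Once the endpoint is public, the module's `cluster_endpoint_public_access_cidrs` input (which defaults to 0.0.0.0/0) restricts which addresses may connect. A sketch of both settings inside the module block; the address is a documentation placeholder, and if eks.tf already sets the first argument, keep only one copy of it.

```hcl
module "eks" {
  # ... existing arguments from eks.tf ...

  # Keep the endpoint public, but accept API connections only from
  # this placeholder address (replace with your own public IP)
  cluster_endpoint_public_access       = true
  cluster_endpoint_public_access_cidrs = ["203.0.113.10/32"]
}
```

Requests to a public endpoint still need valid IAM credentials, so this narrows exposure rather than being the only line of defense.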

Step 6: Create Terraform and Provider Files

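Finally, add three supporting files: terraform.tf pins the AWS provider version, provider.tf sets the provider's region, and output.tf prints useful values once the cluster is created.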

terraform.tf

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}
```

provider.tf

```hcl
provider "aws" {
  region = var.aws_region
}
```

output.tf

```hcl
# module.eks.cluster_id is only populated for EKS on Outposts,
# so output the cluster name instead
output "cluster_name" {
  description = "AWS EKS cluster name"
  value       = module.eks.cluster_name
}

output "cluster_endpoint" {
  description = "AWS EKS cluster endpoint"
  value       = module.eks.cluster_endpoint
}

output "cluster_security_group_id" {
  description = "Security group ID of the control plane in the cluster"
  value       = module.eks.cluster_security_group_id
}

output "region" {
  description = "AWS region"
  value       = var.aws_region
}

output "oidc_provider_arn" {
  description = "ARN of the IAM OIDC provider used for IRSA"
  value       = module.eks.oidc_provider_arn
}
```

Step 7: Initialize Terraform

Run the following command to initialize Terraform and download the AWS provider and the VPC and EKS modules:

```bash
terraform init
```

Step 8: Validate and Plan

Before applying the configuration, validate it and preview the changes:

```bash
terraform validate
terraform plan
```

Step 9: Apply the Terraform Configuration

Create your EKS cluster and the associated VPC with:

```bash
terraform apply
```

Terraform shows the plan again and asks for confirmation; type yes to proceed. The process takes approximately 15 minutes. Once it finishes, your AWS EKS cluster is up and running.

Step 10: Verify the Cluster in the AWS Console

Head to the AWS Management Console, navigate to EKS and confirm that your cluster is listed.

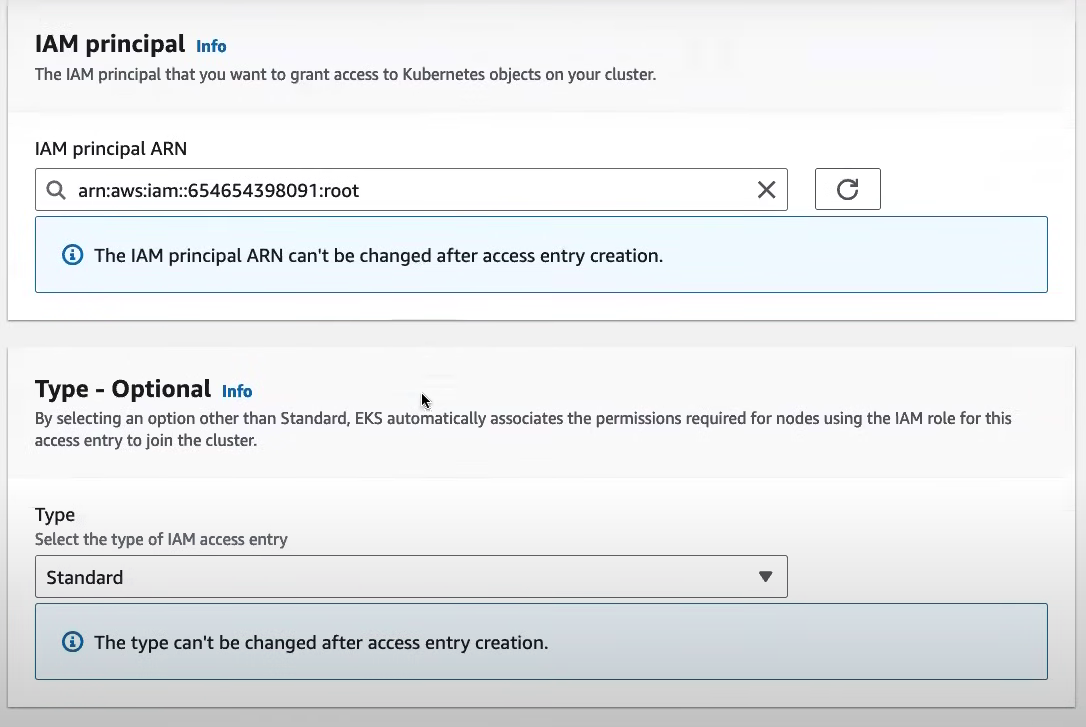

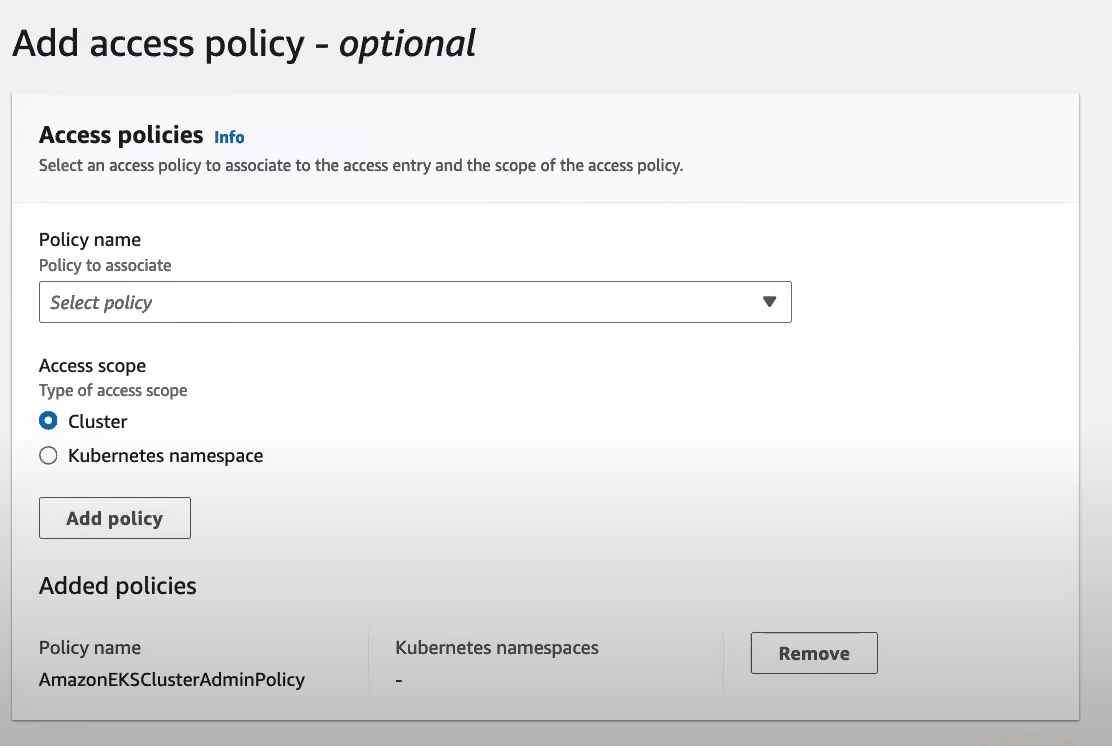

Step 11: Configure Cluster Access (Manual Step)

After the EKS cluster is ready, you need to configure access for your IAM users or roles:

In the AWS Console, navigate to EKS > Your Cluster > Configuration > Access.

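Click Create access entry.

Provide the IAM principal ARN of the user or role.

Keep the Type as Standard, optionally provide a Kubernetes username, and select an access policy, for example AmazonEKSClusterAdminPolicy for full access or AmazonEKSViewPolicy for read-only access.

Define the access scope: the whole cluster or specific Kubernetes namespaces.

Click Add policy, then finish creating the access entry.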

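This step grants users or roles permission to interact with the Kubernetes cluster using IAM-based authentication. It is needed even for the IAM identity that ran terraform apply: version 20 of the EKS module does not give the cluster creator Kubernetes access by default, so kubectl requests from that identity are rejected until an access entry exists.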

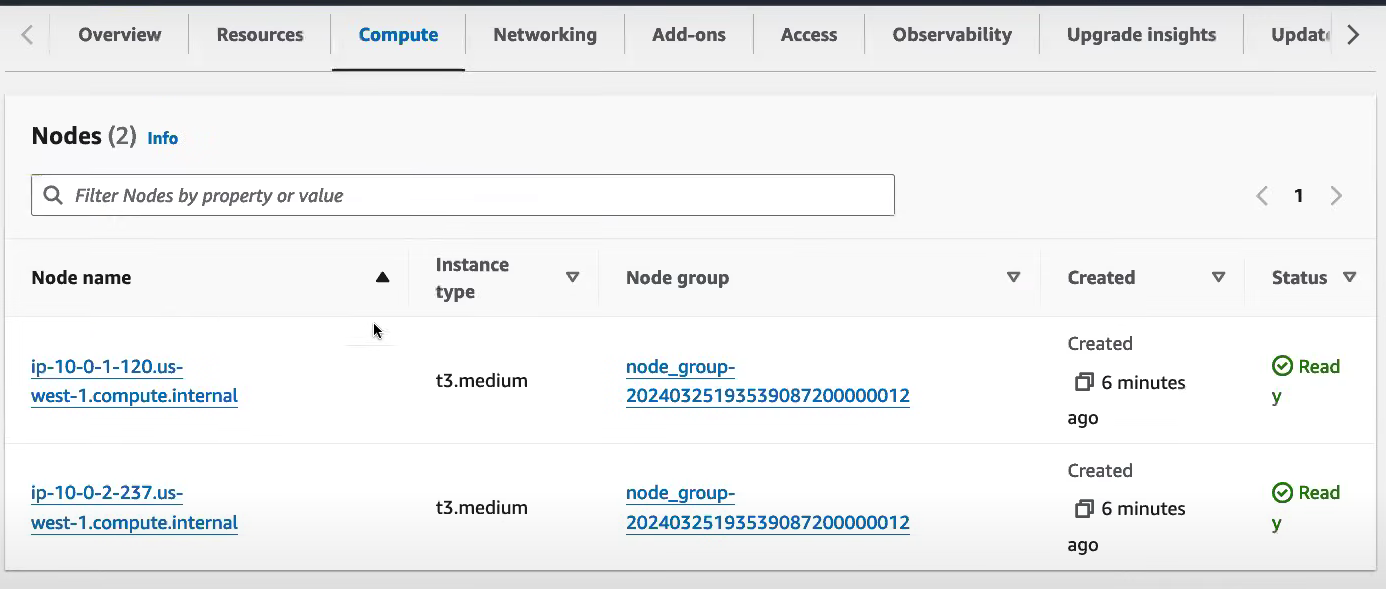
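The same access can be declared in Terraform instead of set up by hand in the console. A sketch using the EKS module's (v20) `enable_cluster_creator_admin_permissions` and `access_entries` inputs, placed inside the `module "eks"` block; the principal ARN, the account ID, and the keys `admin_role` and `cluster_admin` are placeholders.

```hcl
module "eks" {
  # ... existing arguments from eks.tf ...

  # Grant cluster-admin to the IAM identity that runs terraform apply
  enable_cluster_creator_admin_permissions = true

  # Grant a second (placeholder) IAM role admin access to the whole cluster
  access_entries = {
    admin_role = {
      principal_arn = "arn:aws:iam::123456789012:role/my-admin-role"

      policy_associations = {
        cluster_admin = {
          policy_arn = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"
          access_scope = {
            type = "cluster"
          }
        }
      }
    }
  }
}
```

Avoid listing the identity that runs Terraform under `access_entries` as well, since the first setting already creates an access entry for it.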

Step 12: Verify Nodes in the Compute Tab

To check if the nodes are up and running:

Go to the Compute tab of the cluster in the AWS Console.

Confirm that two worker nodes are listed, matching desired_size = 2 in the node group.

Step 13: Access the Cluster via CLI

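Once the cluster is set up, point kubectl at it with the AWS CLI:

```bash
aws eks update-kubeconfig --name <cluster-name> --region <aws-region>
```

This command adds the cluster's connection details to your kubeconfig (by default ~/.kube/config) and makes it the current kubectl context. You can confirm access with `kubectl get nodes`.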
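To avoid filling in `<cluster-name>` and `<aws-region>` by hand, output.tf could also print the finished command. A small sketch; `configure_kubectl` is a hypothetical output name, and it relies on the EKS module's `cluster_name` output.

```hcl
# Prints the exact update-kubeconfig command for this cluster after apply
output "configure_kubectl" {
  description = "Command that adds this cluster to your kubeconfig"
  value       = "aws eks update-kubeconfig --name ${module.eks.cluster_name} --region ${var.aws_region}"
}
```

After `terraform apply`, `terraform output -raw configure_kubectl` prints the command without surrounding quotes, ready to copy.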

Step 14: Clean Up Resources

To avoid unexpected charges, delete the resources you created when they’re no longer needed:

```bash
terraform destroy
```

Conclusion

You’ve successfully set up an AWS EKS cluster using Terraform! This guide provides a simplified approach to provisioning cloud infrastructure, ensuring that you can quickly get started with Kubernetes on AWS.

Feel free to customize the configuration as per your needs, and remember to clean up any resources when you’re done to avoid unnecessary costs. Happy Terraforming!


Written by

Amash Ansari


Amash is a recent B-Tech CSE graduate from Birla Institute of Applied Sciences. Having an interest in learning new technologies is one of his greatest assets. Trying to be a part of global communities 🌍 Opportunity seeker. Open source contributor. 🔓