Azure Function Serverless Deployment with CI/CD Explained

Ritesh Kumar Nayak

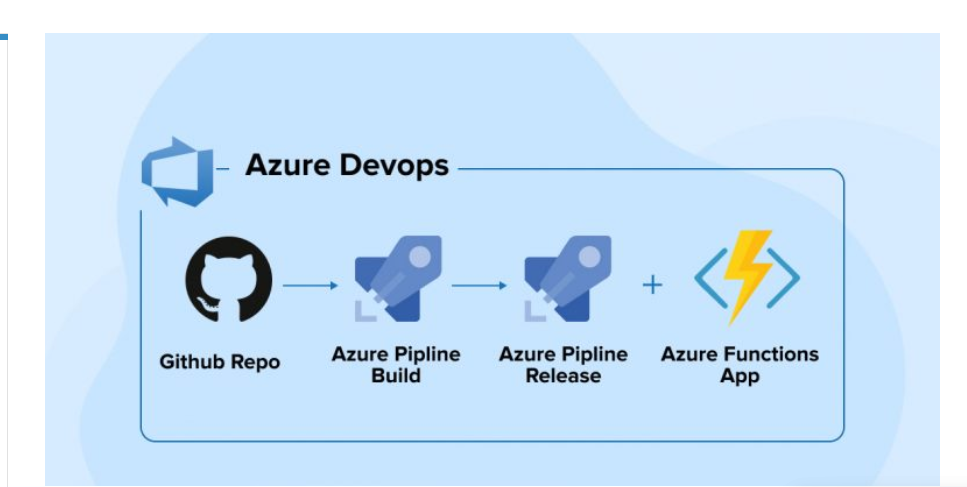

In this article we explore Azure Functions in serverless architectures and the benefits of deploying them through a CI/CD pipeline. We will walk through setting up the deployment process, compare it with traditional approaches, and show how serverless technologies, when combined with automation, can make cloud deployments more efficient and reliable.

What's in There?

Azure function, use-case, and its benefits

Azure Function creation and local deployment

Publishing the function to Azure using the CLI tool

Build and release pipeline for building and deploying the code to Azure function

Azure Function

Azure Functions is a serverless, event-driven compute service comparable to AWS Lambda. It lets you run code in response to events without provisioning or managing infrastructure. This makes it a good fit for a wide range of use cases, including processing data, integrating systems, and handling tasks that need to scale rapidly with demand.

With Azure Functions, you can trigger execution based on HTTP requests, timers, message queues, or other Azure services. The serverless nature means you only pay for the compute resources when your function is actively running, optimizing both cost and efficiency. This lightweight, flexible platform allows developers to focus on writing code rather than managing the underlying infrastructure, ultimately speeding up development and deployment cycles.
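To make the HTTP trigger case concrete, here is a minimal sketch of a handler. It assumes the folder-per-function Node.js programming model, where a function.json file next to the code binds the HTTP trigger and the handler receives `context` and `req`. The `url` query parameter and the response text are illustrative choices, not taken from the project's actual code.

```javascript
// Minimal HTTP-triggered handler (sketch, assuming the folder-per-function
// Node.js model with an HTTP trigger binding declared in function.json).
async function httpTrigger(context, req) {
  const url = req.query && req.query.url;

  if (!url) {
    // Reject requests that do not carry the expected parameter.
    context.res = { status: 400, body: 'Missing "url" query parameter.' };
    return;
  }

  context.log(`Received request for ${url}`);
  context.res = { status: 200, body: `Received ${url}` };
}

module.exports = httpTrigger;
```

The platform invokes the exported function once per request, so the handler only has to deal with a single event at a time.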

Advantages of Azure Functions

Azure Functions offer several advantages over Virtual Machines (VMs) and Containers, particularly in terms of scalability, cost-efficiency, and management simplicity. Here are some key benefits:

Serverless Nature

Unlike VMs and Containers, Azure Functions are entirely serverless. This means there’s no need to provision, manage, or maintain the underlying infrastructure. The platform automatically scales resources based on demand, making it more efficient for event-driven workloads.

Cost-Efficiency

Pay-Per-Use: With Azure Functions on the Consumption plan, you're billed only for the compute time used when the function runs, making it highly cost-effective for infrequent or variable workloads. In contrast, VMs and Containers often incur fixed costs for allocated resources, even when those resources sit idle.

No Infrastructure Overhead: VMs and Containers require managing updates, patches, and scaling resources, which adds to the operational cost. Azure Functions removes most of this overhead.

Automatic Scaling

Azure Functions automatically scale based on incoming events or load. VMs and Containers typically require manual configuration to scale, and they may not scale as efficiently in scenarios where workloads are highly unpredictable.

Rapid Deployment and Development

Since Azure Functions focus on executing specific tasks in response to triggers, development and deployment cycles are much faster than setting up VMs or Containers. Functions support multiple programming languages and have built-in integrations with various Azure services, further speeding up the process.

Event-Driven Architecture

Azure Functions are designed to be triggered by events such as HTTP requests, messages in queues, or changes in databases. This is ideal for event-driven architectures where certain actions need to be performed in response to specific events. While Containers and VMs can be event-driven, setting them up requires more configuration and effort.

Maintenance-Free

VMs and Containers require maintenance, such as patching, updating, and securing the environment. With Azure Functions, the platform handles all these tasks, allowing developers to focus purely on writing and improving their code.

Optimized for Short-Lived Tasks

Azure Functions are ideal for short, stateless, and on-demand tasks, such as processing background jobs or handling API requests. VMs and Containers are better suited for long-running services or applications that need to maintain a certain state.

Limitations of Serverless

While serverless computing, like Azure Functions, offers numerous advantages, it also has certain limitations that need to be considered. Here are some key drawbacks:

Cold Starts

What it is: Serverless functions may experience a delay when they are invoked after a period of inactivity. This is called a cold start, and it happens because the underlying infrastructure needs time to spin up the necessary resources.

Impact: This can result in slower response times, which may not be ideal for latency-sensitive applications like real-time services or APIs.

Limited Execution Time

What it is: Serverless functions often have execution time limits. For example, Azure Functions on the Consumption plan time out after five minutes by default, and this can be raised to at most ten minutes. Premium and Dedicated (App Service) plans allow longer executions.

Impact: Long-running tasks, such as data migrations or batch processing, may not be suitable for serverless environments without breaking them into smaller tasks.
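One common way to break a long-running job into smaller tasks is to split the input into batches and let a separate invocation handle each one, for example by placing one queue message per batch. The sketch below shows only the splitting step. The function name `toBatches` and the batch size are hypothetical.

```javascript
// Split a large list of work items into fixed-size batches so that each
// batch can be handled by a separate, short function invocation.
function toBatches(items, batchSize) {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new RangeError('batchSize must be a positive integer');
  }
  const batches = [];
  for (let i = 0; i < items.length; i += batchSize) {
    batches.push(items.slice(i, i + batchSize));
  }
  return batches;
}

// Example: 7 records split into batches of 3.
const batches = toBatches([1, 2, 3, 4, 5, 6, 7], 3);
console.log(batches); // [ [ 1, 2, 3 ], [ 4, 5, 6 ], [ 7 ] ]
```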

Statelessness

What it is: Serverless architectures are typically stateless, meaning that data from one execution is not retained in memory for the next execution.

Impact: Applications that require persistent states or need to maintain data across sessions may require additional services, such as databases or caching solutions, adding complexity.
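The sketch below illustrates the idea of keeping state outside the function. `FakeExternalStore` is a hypothetical in-memory stand-in for a real database or cache, and it is there only so the example runs on its own. It is not an Azure API.

```javascript
// In-memory stand-in for an external store such as a database or cache.
// Hypothetical helper for illustration only.
class FakeExternalStore {
  constructor() {
    this.data = new Map();
  }
  async get(key) {
    return this.data.get(key) ?? 0;
  }
  async set(key, value) {
    this.data.set(key, value);
  }
}

// Count visits by reading and writing the store on every invocation,
// rather than keeping the counter in a module-level variable that a new
// function instance would not see.
async function countVisit(store, visitorId) {
  const visits = (await store.get(visitorId)) + 1;
  await store.set(visitorId, visits);
  return visits;
}
```

With a real store, the read-then-write step would normally be replaced by an atomic increment, so that concurrent invocations do not overwrite each other.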

Limited Control Over the Infrastructure

What it is: With serverless, the underlying infrastructure is abstracted away, meaning you have limited control over the computing environment (e.g., memory, CPU configuration).

Impact: This may be a limitation for applications that need specific hardware configurations or fine-tuned control over the runtime environment.

Potential for Vendor Lock-In

What it is: Serverless platforms, such as Azure Functions, come with their own unique services, configurations, and optimizations.

Impact: Migrating to another cloud provider could require significant effort, as you may need to rewrite parts of your application or adjust it to work on a different serverless platform.

Azure Function Project Overview

We will use an existing Azure Functions project by Rishab Kumar for our CI/CD integration with Azure DevOps. Before the pipeline can deploy anything, we need an Azure Function App to deploy the code to.

Pre-requisite

Azure Function App

Creating Azure Function (Manual)

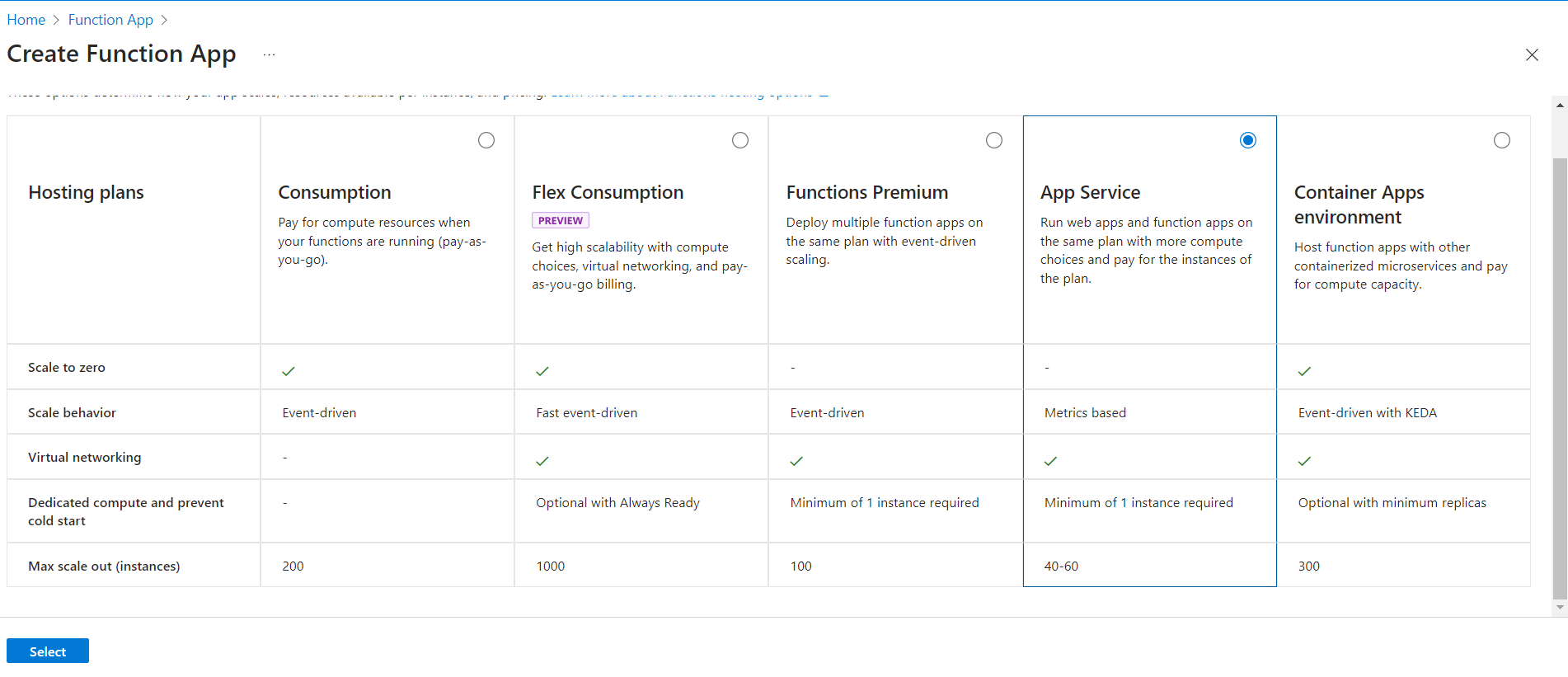

As part of this project, we'll create the function app manually from the portal. However, this process can also be automated using Terraform and integrated further.

When configuring your Function App, select the App Service plan as the hosting option so that there is enough storage and compute for building your application. The app we are deploying is built on Node.js, which tends to have a larger footprint, and the App Service plan provides the capacity to handle it during both deployment and runtime.

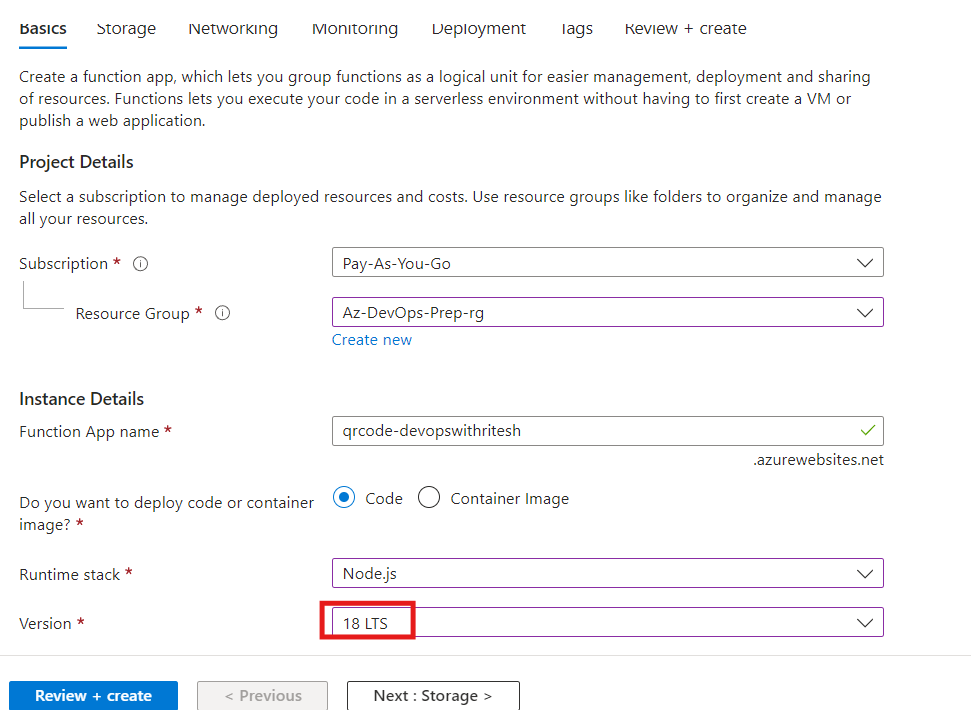

Next, proceed with the necessary configurations in all the sections as outlined. Be sure to select Node.js as the runtime stack, and choose version 18 LTS, as it is fully supported by our project. This ensures compatibility and stability for the application during deployment and execution within the Function App environment.

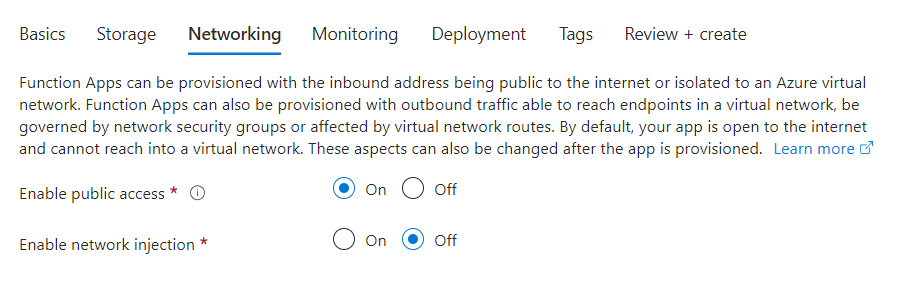

Make sure public access is enabled in the Networking section so that the application can be reached publicly.

Once done, click Review + create to create the Function App.

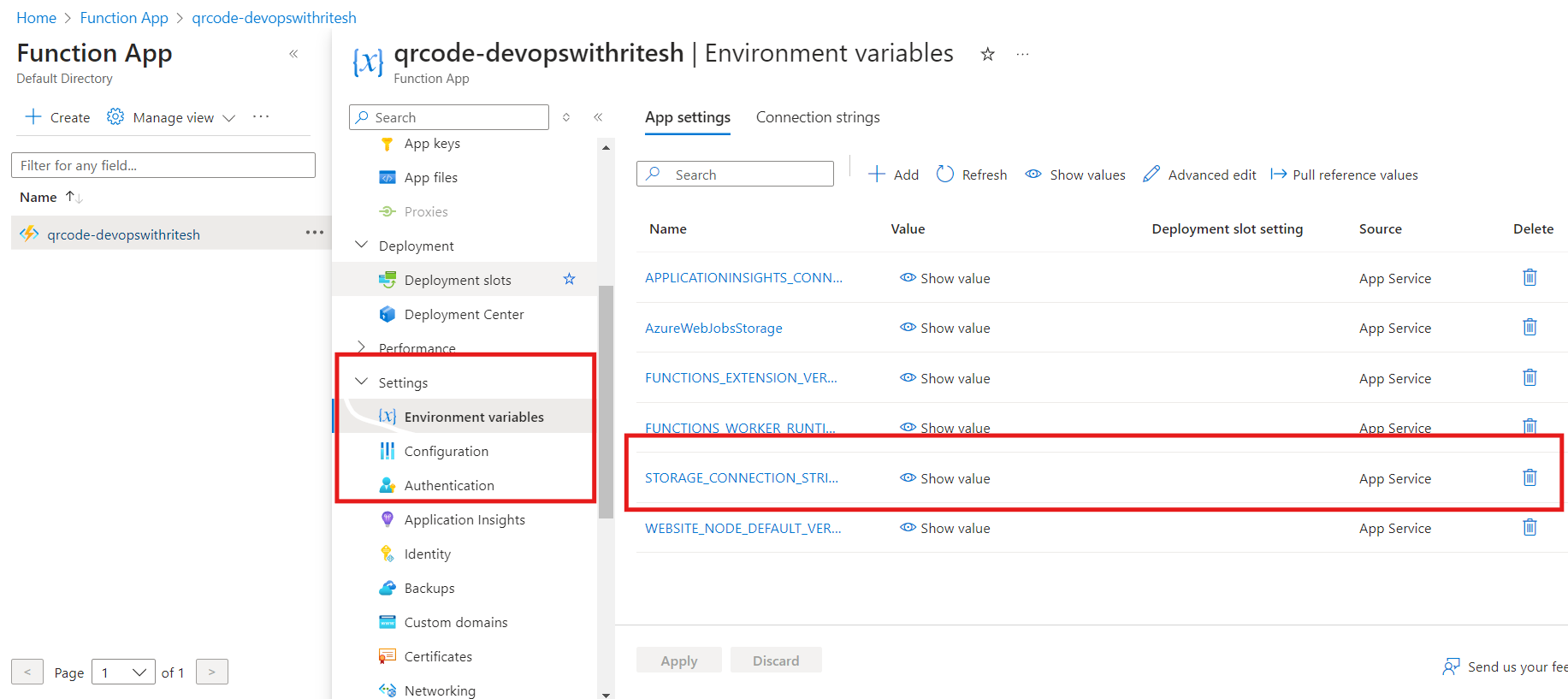

Configuring Connection String

You must configure the storage account connection string to enable your Azure Function App to interact with the Azure Storage account. This ensures your function has the necessary permissions to access and manage storage resources.

You can achieve this configuration using the Azure Functions Core Tools CLI. Create a local.settings.json file with the following content to include the storage account connection string:

    {
      "IsEncrypted": false,
      "Values": {
        "AzureWebJobsStorage": "Your_Storage_Connection_String",
        "FUNCTIONS_WORKER_RUNTIME": "node",
        "StorageConnectionString": "Your_Storage_Connection_String"
      }
    }

Replace "Your_Storage_Connection_String" with the actual connection string for your Azure Storage account.

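Place this local.settings.json file in the root directory of your function app. Azure Functions Core Tools reads it when the function runs locally, giving the code access to the storage account for operations such as reading from or writing to blob storage. The file applies to local runs only, so for the deployed Function App, add the same values as application settings.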
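Inside the function code, these settings are available as environment variables. The helper below is a small sketch of reading one and failing early when it is missing. The name `getRequiredSetting` is hypothetical, and the setting name comes from the local.settings.json file above.

```javascript
// Read a required setting from the environment. When running locally with
// Azure Functions Core Tools, entries under "Values" in local.settings.json
// are exposed to the function as environment variables.
function getRequiredSetting(name, env = process.env) {
  const value = env[name];
  if (!value) {
    throw new Error(`Missing required app setting: ${name}`);
  }
  return value;
}

// Usage inside a function:
// const connectionString = getRequiredSetting('StorageConnectionString');
```

Failing early with a clear message tends to be easier to debug than a storage client error further down the call chain.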

Azure DevOps Pipeline Integration

Trigger and Pool Configuration

Trigger: The pipeline is triggered by changes to the main branch, ensuring that any updates to this branch will initiate the build and deployment process.

Pool: Uses the Default agent pool, which specifies the set of agents that will run the pipeline jobs.

Variables

azureSubscription: The Azure Resource Manager connection ID that provides access to Azure resources.

functionAppName: The name of the Azure Function App where the application will be deployed.

environmentName: The environment name used for deploying the app, ensuring proper organization and deployment context.

Stages

Build Stage

Job: Build

Install zip utility: Uses a Bash task to install the zip utility on the build agent. This is necessary for archiving files later in the pipeline.

Install Node.js: Uses the NodeTool@0 task to install Node.js version 18.x, ensuring that the correct version is available for building and running the application.

Prepare binaries: Runs npm commands to install dependencies, build the application, and run tests if they are present. This ensures the application is ready for deployment.

Copy Files to Build Directory: Copies files from the source folder to the build directory, preparing them for archiving.

Archive files: Uses the ArchiveFiles@2 task to create a zip archive of the application files. This archive will be used for deployment.

Upload Artifact: Uploads the zip archive to the pipeline artifact storage, making it available for use in the deployment stage.

Deploy Stage

Deployment: Deploy

Environment: Uses the environmentName variable to specify the deployment environment.

Strategy: The runOnce deployment strategy is used to deploy the application once the build stage is successful.

Azure Function App Task:

connectedServiceNameARM: Specifies the Azure Resource Manager connection for authentication.

appType: Indicates that the target is a Function App.

appName: The name of the Azure Function App where the application will be deployed.

package: Specifies the path to the zip file containing the application code.

deploymentMethod: Uses zipDeploy for deploying the application from the zip archive.

    # Node.js Function App to Linux on Azure
    # Build a Node.js function app and deploy it to Azure as a Linux function app.
    # Add steps that analyze code, save build artifacts, deploy, and more:
    # https://docs.microsoft.com/azure/devops/pipelines/languages/javascript

    trigger:
    - main

    pool:
      name: Default

    variables:
      # Azure Resource Manager connection created during pipeline creation
      azureSubscription: '4ea4d60c-XXXXXXXXXX-4a72deaf74d8'

      # Function app name
      functionAppName: 'qrcode-devopswithritesh'

      # Environment name
      environmentName: 'qrcode-devopswithritesh'

    stages:
    - stage: Build
      displayName: Build stage
      jobs:
      - job: Build
        displayName: Build
        steps:
        - task: Bash@3
          displayName: 'Install zip utility'
          inputs:
            targetType: 'inline'
            script: 'sudo apt-get update && sudo apt-get install -y zip'

        - task: NodeTool@0
          inputs:
            versionSpec: '18.x'
          displayName: 'Install Node.js'

        - script: |
            cd qrCodeGenerator
            npm install
            npm run build --if-present
            npm run test --if-present
          displayName: 'Prepare binaries'

        - task: CopyFiles@2
          inputs:
            SourceFolder: '$(System.DefaultWorkingDirectory)/qrCodeGenerator/GenerateQRCode/'
            Contents: '**'
            TargetFolder: '$(System.DefaultWorkingDirectory)/qrCodeGenerator/'
          displayName: 'Copy Files to Build Directory'

        - task: ArchiveFiles@2
          displayName: 'Archive files'
          inputs:
            rootFolderOrFile: '$(System.DefaultWorkingDirectory)/qrCodeGenerator'
            includeRootFolder: false
            archiveType: zip
            archiveFile: $(Build.ArtifactStagingDirectory)/qrCodeGenerator/$(Build.BuildId).zip
            replaceExistingArchive: true

        - upload: $(Build.ArtifactStagingDirectory)/qrCodeGenerator/$(Build.BuildId).zip
          artifact: drop

    - stage: Deploy
      displayName: Deploy stage
      dependsOn: Build
      condition: succeeded() # This stage runs only if the Build stage succeeds
      jobs:
      - deployment: Deploy
        displayName: Deploy
        environment: $(environmentName)
        pool:
          name: Default
        strategy:
          runOnce:
            deploy:
              steps:
              - task: AzureFunctionApp@2
                inputs:
                  connectedServiceNameARM: 'Pay-As-You-Go(4accce4f-XXXXXXXXX-5456d8fa879d)'
                  appType: 'functionApp'
                  appName: '$(functionAppName)'
                  package: '$(Pipeline.Workspace)/drop/$(Build.BuildId).zip'
                  deploymentMethod: 'zipDeploy'

This pipeline automates building and deploying a Node.js Function App, which shows the efficiency and consistency that Azure DevOps Pipelines provide. Be sure to adjust variables such as azureSubscription and functionAppName, as well as the connectedServiceNameARM input, to match your own Azure environment and resources.

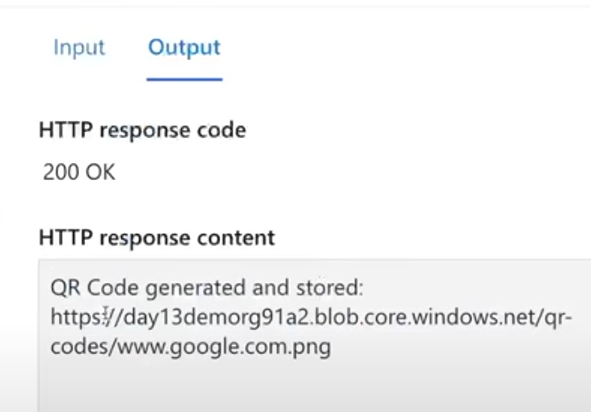

Once the build is successful, you can test your function app directly from the Azure portal. Navigate to your Function App and select the function you want to test. Click on the "Run" button and provide the required query parameters in the provided input fields.

For example, if your function requires a url parameter, you should enter the appropriate URL in the query parameter field.

After initiating the test, you should receive a response with a status code of 200, indicating that the function executed successfully and returned the expected output. This confirms that your function app is working as intended.
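The same check can be scripted outside the portal. The sketch below makes several assumptions: the route `/api/GenerateQRCode` is inferred from the function folder name, the host `example-app.azurewebsites.net` is a placeholder, and the function is assumed to accept anonymous requests. If your function uses function-level authorization, the request will also need a function key.

```javascript
// Build the request URL for the deployed function. The route
// "/api/GenerateQRCode" is an assumption based on the function folder name;
// check the function's URL in the portal for the real value.
function buildTestUrl(baseUrl, targetUrl) {
  const endpoint = new URL('/api/GenerateQRCode', baseUrl);
  endpoint.searchParams.set('url', targetUrl);
  return endpoint.toString();
}

// Call the function and report the HTTP status (needs Node.js 18+ for fetch).
async function smokeTest(baseUrl, targetUrl) {
  const response = await fetch(buildTestUrl(baseUrl, targetUrl));
  console.log(`Status: ${response.status}`);
  return response.status === 200;
}

// Example call with a placeholder host (not executed here):
// smokeTest('https://example-app.azurewebsites.net', 'https://example.com');
```

Using `URL` and `searchParams` keeps the `url` value correctly encoded, which matters because the parameter is itself a URL.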

Reference

GitHub: https://github.com/ritesh-kumar-nayak/azure-qr-code

You can get the pipeline code in the given repository as well.

