Understanding Load Balancers: A Real-World Example

SREERAJ R

A load balancer distributes incoming traffic across multiple servers. By spreading requests out, especially during peak traffic, it keeps any single server from being overwhelmed and increases the reliability of the application.

Let’s Break It Down with a Real-Life Example

Imagine a shop with a single billing counter. Say the shop is ZUDIO and it is running an offer, for example everything under 499. The shop experiences a rush, and billing takes so long that some customers leave without making a purchase. The person at the counter is under a lot of stress.

During the offer, the owner decides to open 5 more billing counters because of the heavy rush. As a result, customers can pay quickly and leave, and the staff at each counter experience less stress.

The first scenario is like an application running on a single server with no load balancer. The second is like an application whose traffic a load balancer spreads across several servers.

Here's how it maps to the technical idea:

Single Billing Counter (Without Load Balancer): This represents a server handling all the incoming requests on its own. When the demand is high, like during the offer, it gets overwhelmed, leading to delays and customer dissatisfaction.

Multiple Billing Counters (With Load Balancer): This represents a load balancer distributing incoming requests across multiple servers. Each billing counter handles a portion of the customers, reducing the wait time and stress for each counter.

How Load Balancing Works

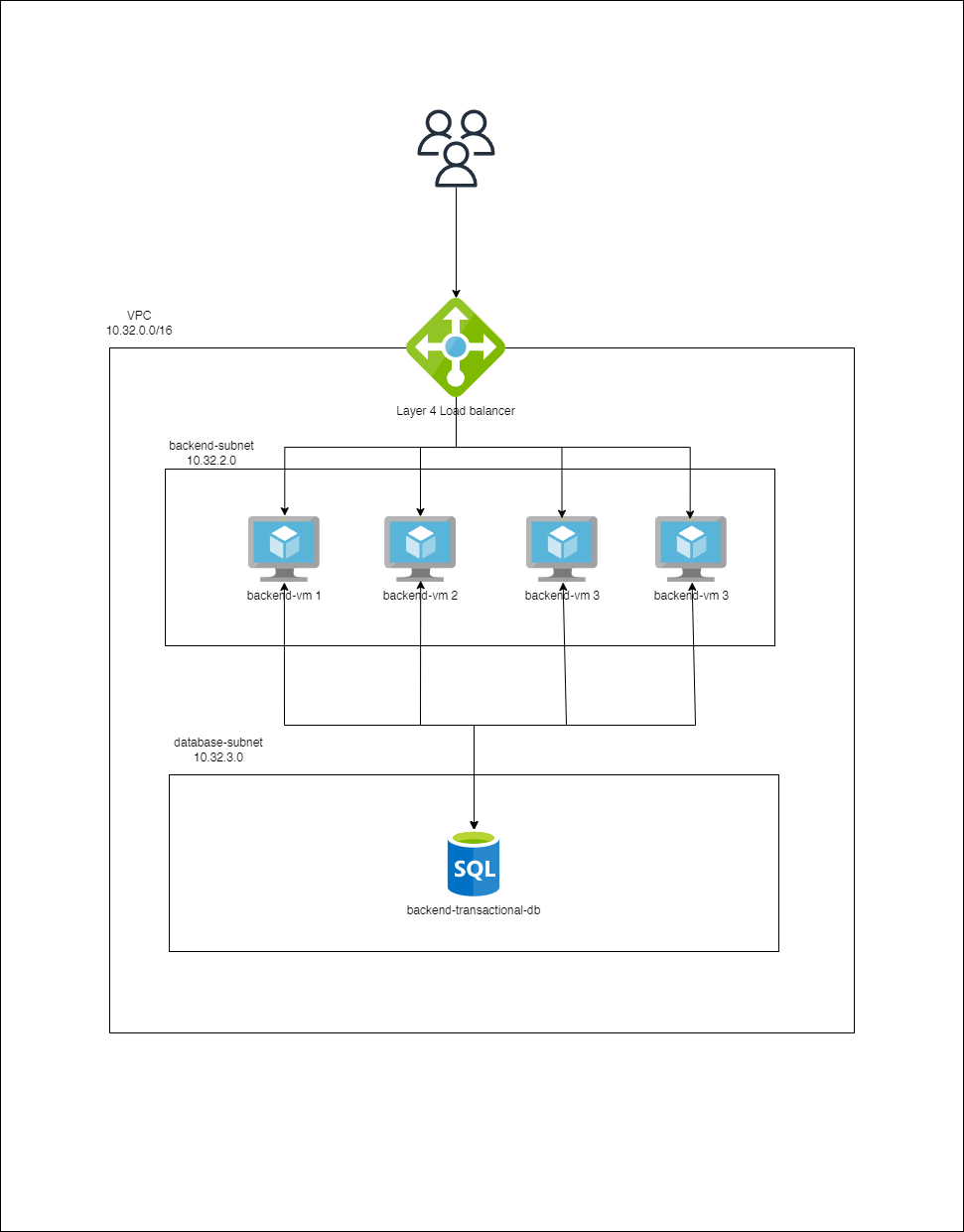

Users access backend services through a load balancer. Each request is directed to a server based on a load-balancing algorithm.
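To make "directed to a server based on a load-balancing algorithm" concrete, here is a minimal sketch of the simplest algorithm, round robin, which hands each new request to the next server in a fixed rotation. The class name and server names are hypothetical and chosen only for illustration; a real load balancer also deals with networking, timeouts, and failures.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation, one per request."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the server list endlessly in the same order.
        self._rotation = cycle(servers)

    def choose(self):
        """Return the server that should handle the next request."""
        return next(self._rotation)


# Hypothetical server names, for illustration only.
balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
picks = [balancer.choose() for _ in range(5)]
print(picks)
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```

In the billing-counter analogy, this is like sending each arriving customer to the next counter in turn, regardless of how long each queue is.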

Minimizing Response Time and Maximizing Throughput: Load balancers ensure that requests are processed quickly and efficiently.

Ensuring High Availability and Reliability: They only send requests to servers that are online and healthy.

Continuous Health Checks: Load balancers monitor servers to ensure they can handle requests.

Dynamic Scaling: Depending on demand, servers can be added to or removed from the pool behind the load balancer, often in combination with an autoscaling system.
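The health-check behaviour described above can be sketched as a server pool that remembers the result of the latest check for each server and skips any server marked unhealthy. This is an illustrative sketch, not how any particular product works: the class, its method names, and the server names are assumptions, and the health results are set by hand here rather than by probing real servers.

```python
class HealthAwarePool:
    """Round-robin pool that only returns servers marked healthy."""

    def __init__(self, servers):
        self._servers = list(servers)
        # Assume every server is healthy until a check says otherwise.
        self._healthy = {name: True for name in self._servers}
        self._next = 0

    def record_health_check(self, server, ok):
        """Store the result of the latest health check for a server."""
        if server not in self._healthy:
            raise KeyError(f"unknown server: {server}")
        self._healthy[server] = ok

    def choose(self):
        """Return the next healthy server in rotation."""
        # Look at each server at most once per call.
        for _ in range(len(self._servers)):
            server = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if self._healthy[server]:
                return server
        raise RuntimeError("no healthy servers available")


# Hypothetical server names; server-b fails its health check.
pool = HealthAwarePool(["server-a", "server-b", "server-c"])
pool.record_health_check("server-b", False)
health_picks = [pool.choose() for _ in range(4)]
print(health_picks)
# ['server-a', 'server-c', 'server-a', 'server-c']
```

Once a later check for server-b succeeds and is recorded with `record_health_check("server-b", True)`, the server rejoins the rotation.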

There are three types of load balancers:

Hardware Load Balancer

Software Load Balancer

Virtual Load Balancer

The following are some of the common types of load-balancing algorithms:

Round Robin

Threshold

Least Connection

Least Time
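As a rough illustration of two of the algorithms listed above, the sketch below picks a server by least connection and by least time. The function names and sample numbers are hypothetical. The least-time scoring shown here (average response time multiplied by active connections plus one) is only one plausible way to combine latency and load; real load balancers use their own formulas.

```python
def least_connections(active_connections):
    """Return the server with the fewest open connections."""
    return min(active_connections, key=active_connections.get)


def least_time(avg_response_ms, active_connections):
    """Return the server with the lowest combined latency/load score.

    The score used here is an assumption made for illustration.
    """
    def score(server):
        return avg_response_ms[server] * (active_connections[server] + 1)

    return min(avg_response_ms, key=score)


# Hypothetical measurements for three servers.
active = {"server-a": 12, "server-b": 4, "server-c": 9}
avg_ms = {"server-a": 20.0, "server-b": 90.0, "server-c": 30.0}

print(least_connections(active))   # server-b
print(least_time(avg_ms, active))  # server-a
```

The two algorithms disagree on this data: server-b has the fewest connections, but it is slow enough that the least-time score prefers the busier but faster server-a.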

Layer of Operation

Load balancing generally occurs at Layers 4 and 7 of the Open Systems Interconnection (OSI) model:

Layer 4 (Transport): Deals with TCP/UDP traffic.

Layer 7 (Application): Handles HTTP/HTTPS traffic and can route based on request content such as URL paths and headers.
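The difference between the two layers comes down to what information is available when choosing a server. The sketch below is a simplified illustration under stated assumptions: the Layer 4 function sees only the client address and port and hashes them to pick a server, while the Layer 7 function can read the HTTP path and route by it. All function names, server names, pool names, and route prefixes are hypothetical.

```python
import hashlib


def route_layer4(src_ip, src_port, servers):
    """Layer 4 style: only connection details (IP, port) are visible."""
    key = f"{src_ip}:{src_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Turn the first 8 bytes of the hash into an index into the server list.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


def route_layer7(path, routes, default_pool):
    """Layer 7 style: the HTTP request path is visible and drives routing."""
    for prefix, pool in routes.items():
        if path.startswith(prefix):
            return pool
    return default_pool


# Hypothetical servers and routing rules.
SERVERS = ["server-a", "server-b", "server-c"]
ROUTES = {"/api/": "api-pool", "/static/": "static-pool"}

print(route_layer4("203.0.113.7", 51234, SERVERS))
print(route_layer7("/api/orders", ROUTES, "web-pool"))  # api-pool
print(route_layer7("/about", ROUTES, "web-pool"))       # web-pool
```

Hashing the connection details means the same client address and port always map to the same server, while path-based routing lets different kinds of requests go to different groups of servers.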

Why This Matters for DevOps Engineers

Understanding load balancers is crucial for managing and optimizing web traffic, ensuring application reliability, and improving user experience. As a DevOps engineer, expertise in load balancing helps in designing scalable and resilient systems.
