Day 44: AWS CI

Vishesh Ghule


🚀 Introduction

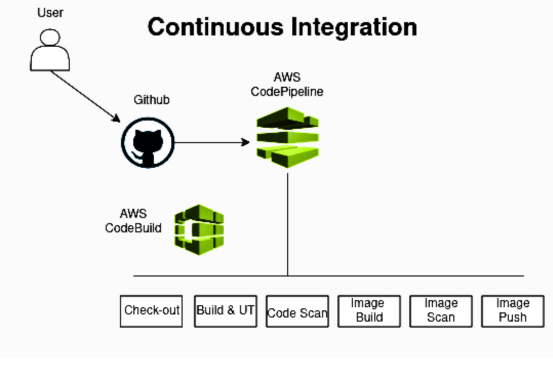

In this blog, we'll build a Continuous Integration (CI) pipeline using AWS services. A CI pipeline automates the process of integrating and testing code changes. We'll explore how to set up and manage a CI pipeline on AWS, using tools such as CodePipeline and CodeBuild to enhance your workflow. By the end of this post, you'll have a solid understanding of CI on AWS.

Refer to the image below for a better understanding.

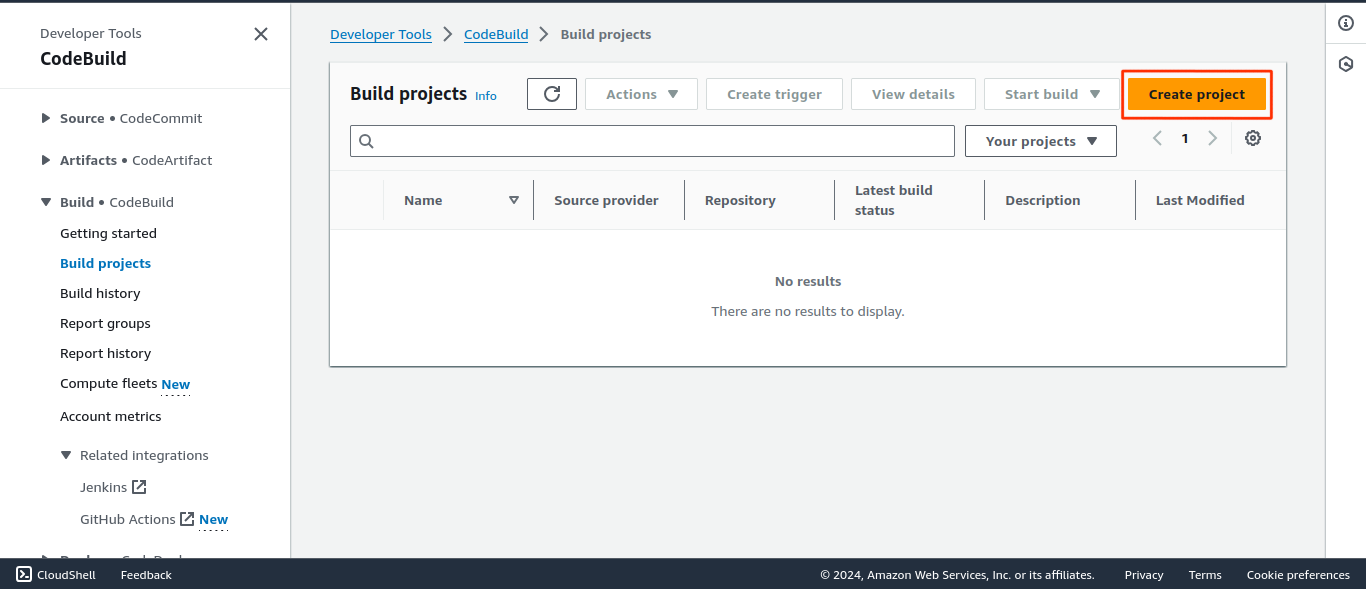

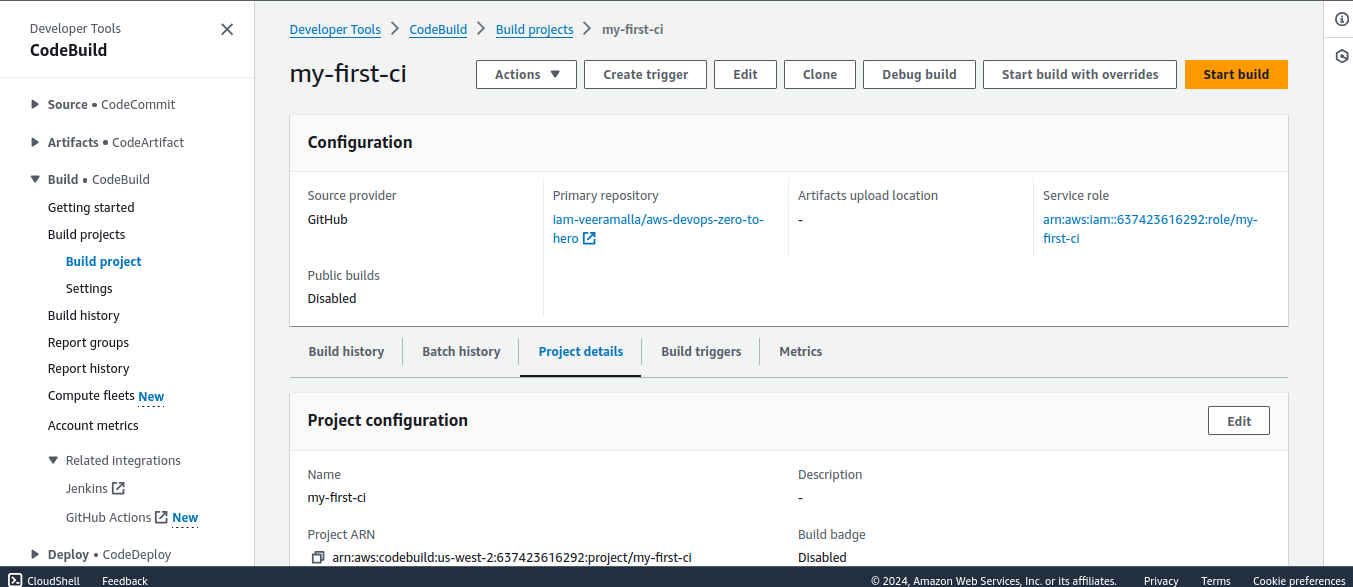

- Create Project

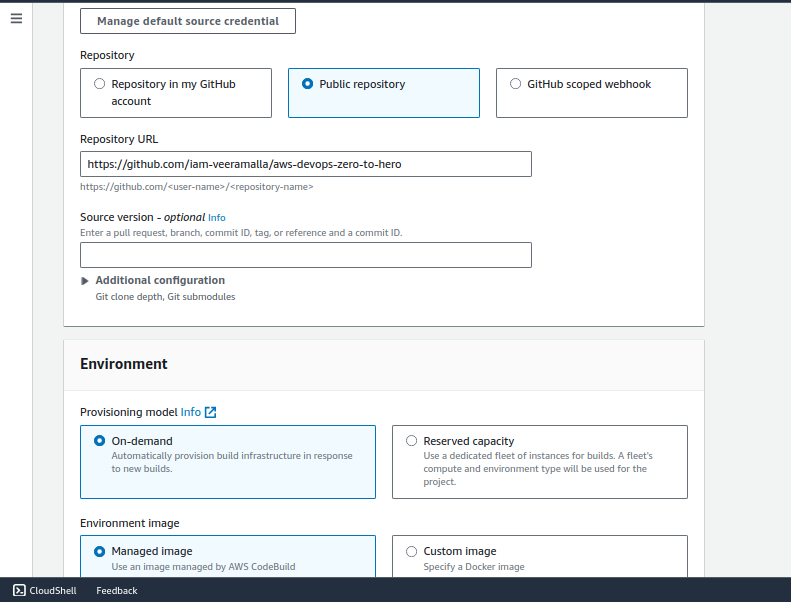

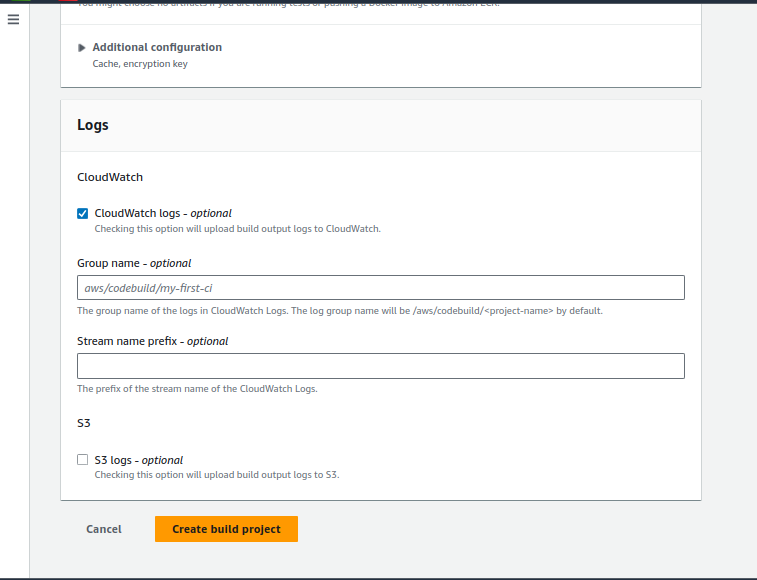

First, search for CodeBuild, then click on Create Project. Follow the images and instructions given below carefully for better understanding.

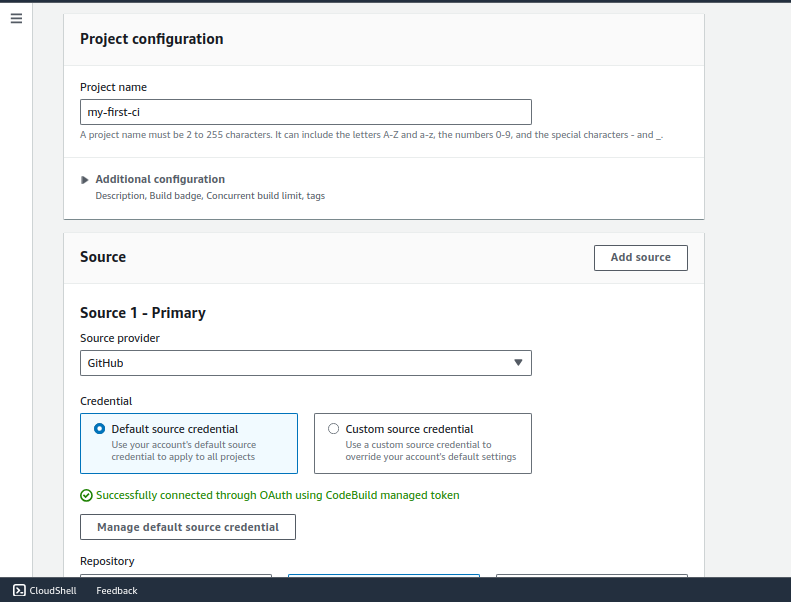

- In Source, select GitHub

- If the repository is in your own GitHub account, select Repository in my GitHub account. Otherwise, choose Public repository and provide the repository URL.

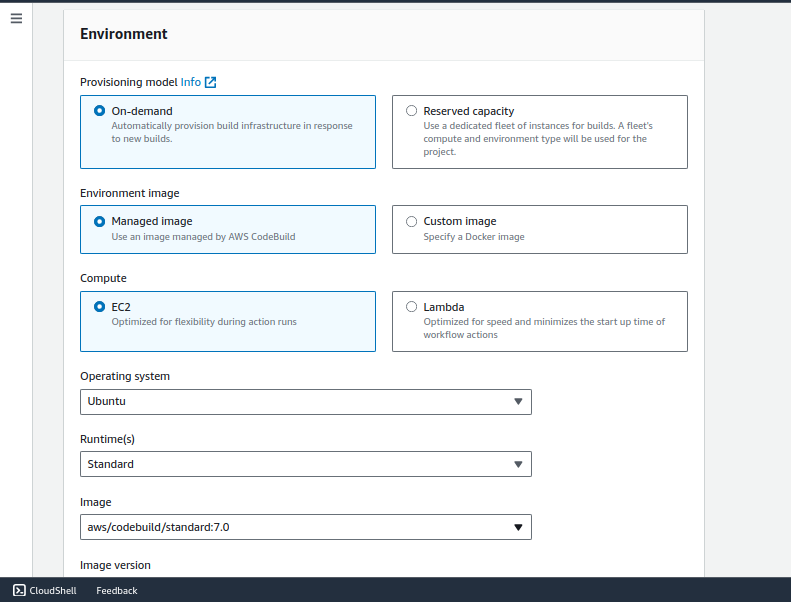

- For the operating system select Ubuntu, for the runtime select Standard, and pick the latest image

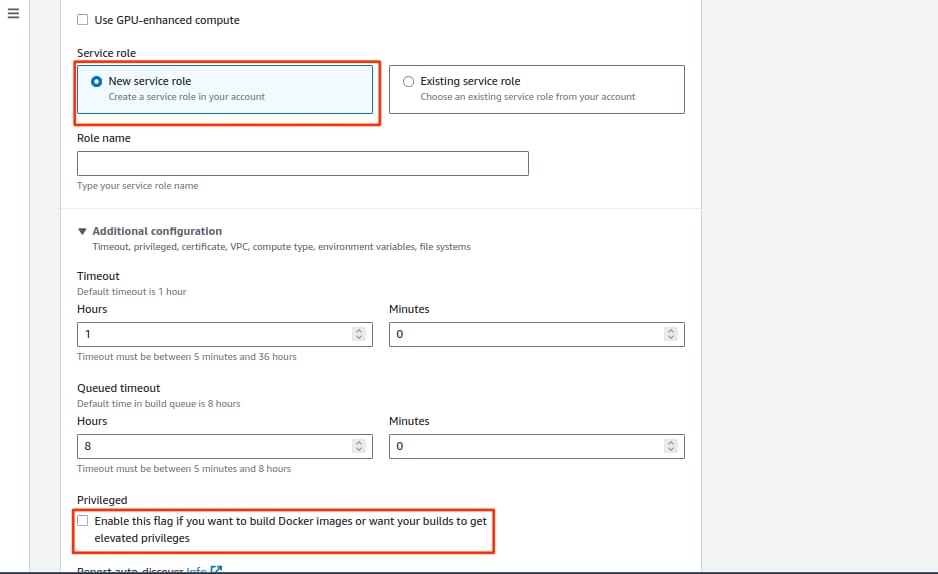

- Create a new service role and check the Privileged box, which the build needs in order to run Docker commands

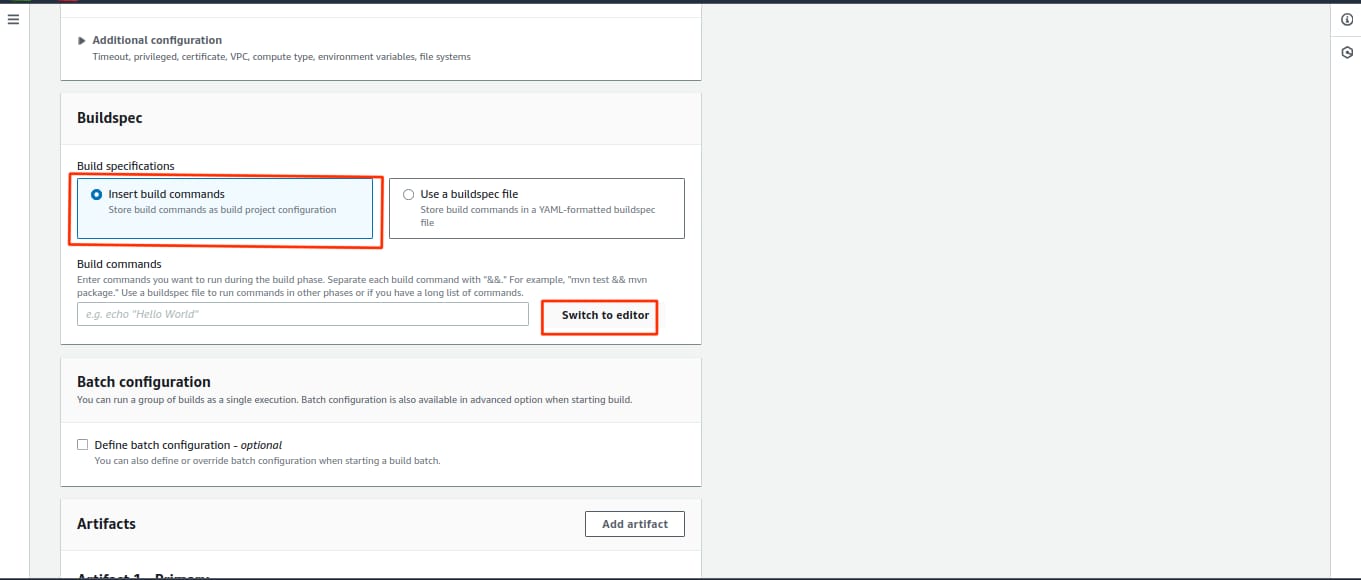

- After selecting Insert build commands, click Switch to editor, where you can write the build commands. The build commands are provided below.

    version: 0.2

    env:
      parameter-store:
        DOCKER_REGISTRY_USERNAME: /docker-credentials/username
        DOCKER_REGISTRY_PASSWORD: /docker-credentials/password
        DOCKER_REGISTRY_URL: /docker-registry/url

    phases:
      install:
        runtime-versions:
          python: 3.11
      pre_build:
        commands:
          - echo "Installing dependencies..."
          - pip install -r requirements.txt # Install dependencies
      build:
        commands:
          - echo "Running tests..."
          - echo "Building Docker image..."
          - echo "$DOCKER_REGISTRY_PASSWORD" | docker login -u "$DOCKER_REGISTRY_USERNAME" --password-stdin "$DOCKER_REGISTRY_URL"
          - docker build -t "$DOCKER_REGISTRY_URL/$DOCKER_REGISTRY_USERNAME/simple-python-flask-app:latest" .
          - docker push "$DOCKER_REGISTRY_URL/$DOCKER_REGISTRY_USERNAME/simple-python-flask-app:latest"
      post_build:
        commands:
          - echo "Build completed successfully!"

    artifacts:
      files:
        - '**/*' # Capture all files in the base directory
      base-directory: simple-python-app/
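To make the build phase easier to follow, here is a small Python sketch of how the image name in the `docker build` and `docker push` lines resolves once the three Parameter Store values are substituted. The function names and the sample values `docker.io` and `exampleuser` are placeholders for illustration, not values from this post.

```python
def image_reference(registry_url: str, username: str,
                    app: str = "simple-python-flask-app",
                    tag: str = "latest") -> str:
    """Compose the image name the same way the buildspec's docker lines do."""
    return f"{registry_url}/{username}/{app}:{tag}"


def build_phase_commands(registry_url: str, username: str) -> list:
    """Return the docker build and push commands with the variables expanded."""
    ref = image_reference(registry_url, username)
    return [f'docker build -t "{ref}" .', f'docker push "{ref}"']


if __name__ == "__main__":
    # "docker.io" and "exampleuser" are placeholder values (assumptions).
    for command in build_phase_commands("docker.io", "exampleuser"):
        print(command)
```

Seeing the expanded name helps when checking the registry afterwards, since the repository and tag that appear there should match this string.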

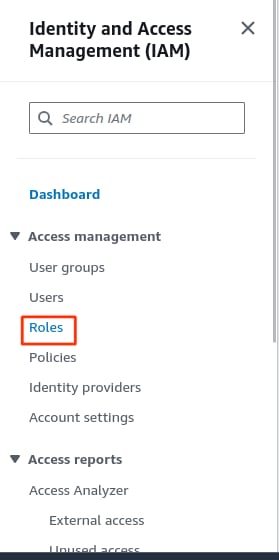

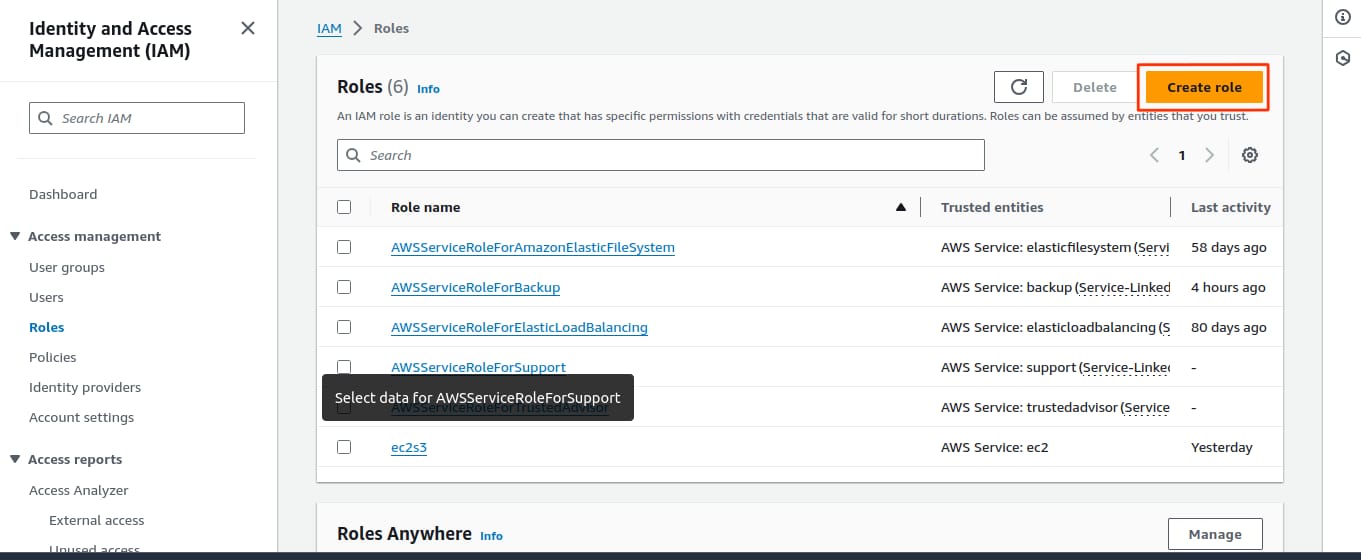

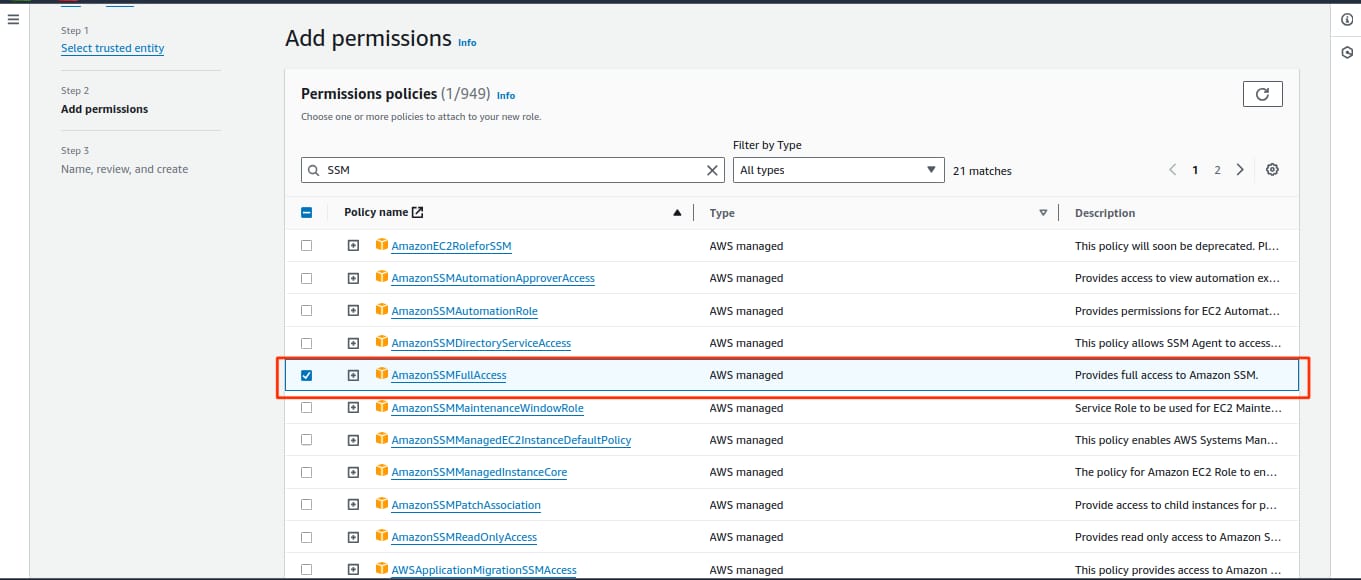

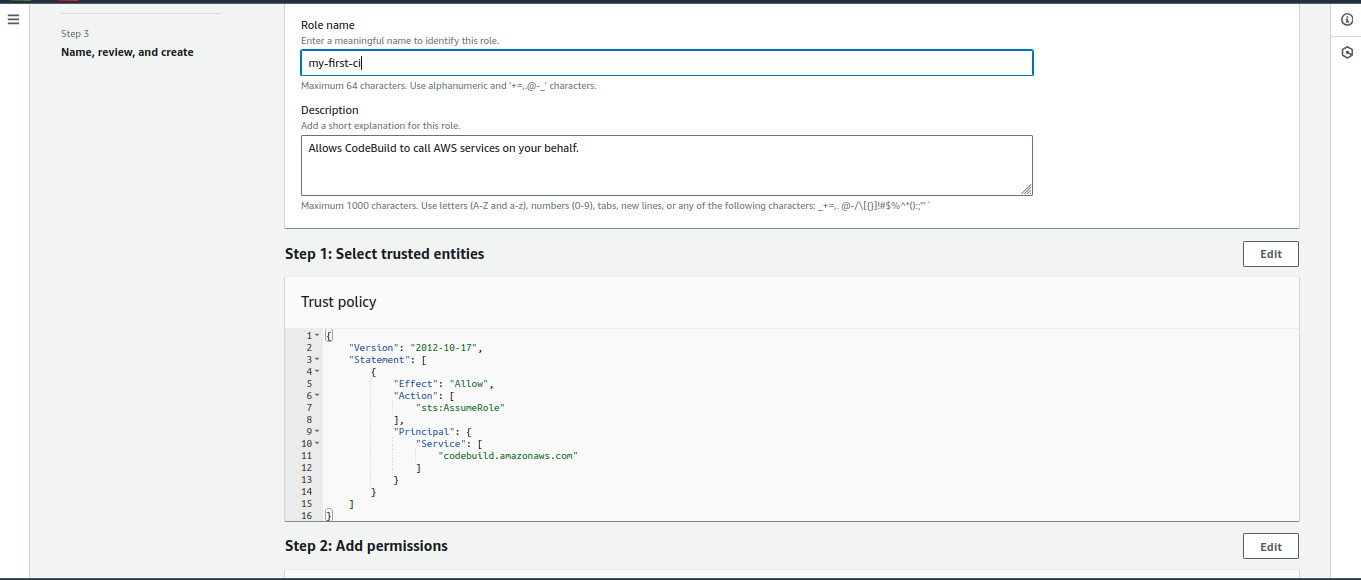

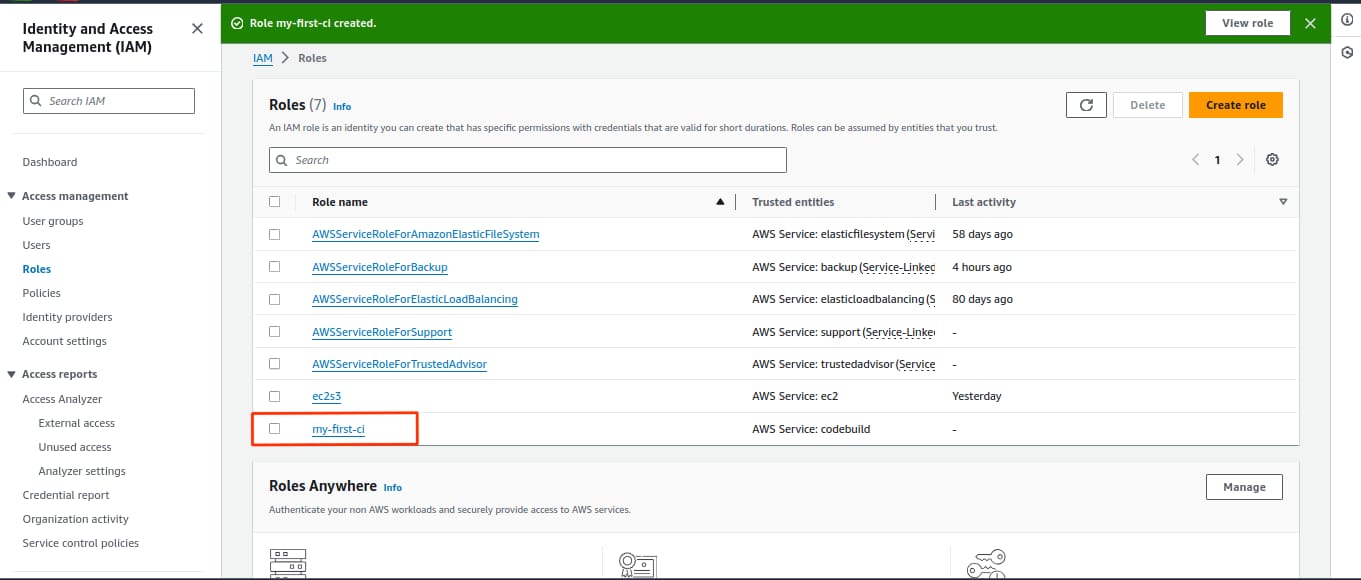
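The console steps above can also be expressed in code. The sketch below builds the arguments for the CodeBuild `CreateProject` API, assuming you would call it through boto3. The function name, the default image `aws/codebuild/standard:7.0`, the compute type, and the `NO_ARTIFACTS` setting are assumptions for illustration; the post itself only specifies GitHub as the source, an Ubuntu Standard image, a service role, and the Privileged box.

```python
def codebuild_project_request(name, repo_url, service_role_arn,
                              buildspec=None,
                              image="aws/codebuild/standard:7.0"):
    """Build keyword arguments for CodeBuild's CreateProject call."""
    source = {"type": "GITHUB", "location": repo_url}
    if buildspec is not None:
        # Inline build commands, like the ones typed into the console editor.
        source["buildspec"] = buildspec
    return {
        "name": name,
        "source": source,
        "artifacts": {"type": "NO_ARTIFACTS"},  # assumption: no artifact upload
        "environment": {
            "type": "LINUX_CONTAINER",
            "image": image,                           # assumed image version
            "computeType": "BUILD_GENERAL1_SMALL",    # assumed compute size
            "privilegedMode": True,  # the Privileged box; needed for Docker
        },
        "serviceRole": service_role_arn,
    }


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   request = codebuild_project_request("my-project", repo_url, role_arn)
#   boto3.client("codebuild").create_project(**request)
```

Keeping the request as a plain dictionary makes it easy to review the settings before anything is created in the account.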

- After creating the project, search for IAM and create a role for Parameter Store access

- Create role

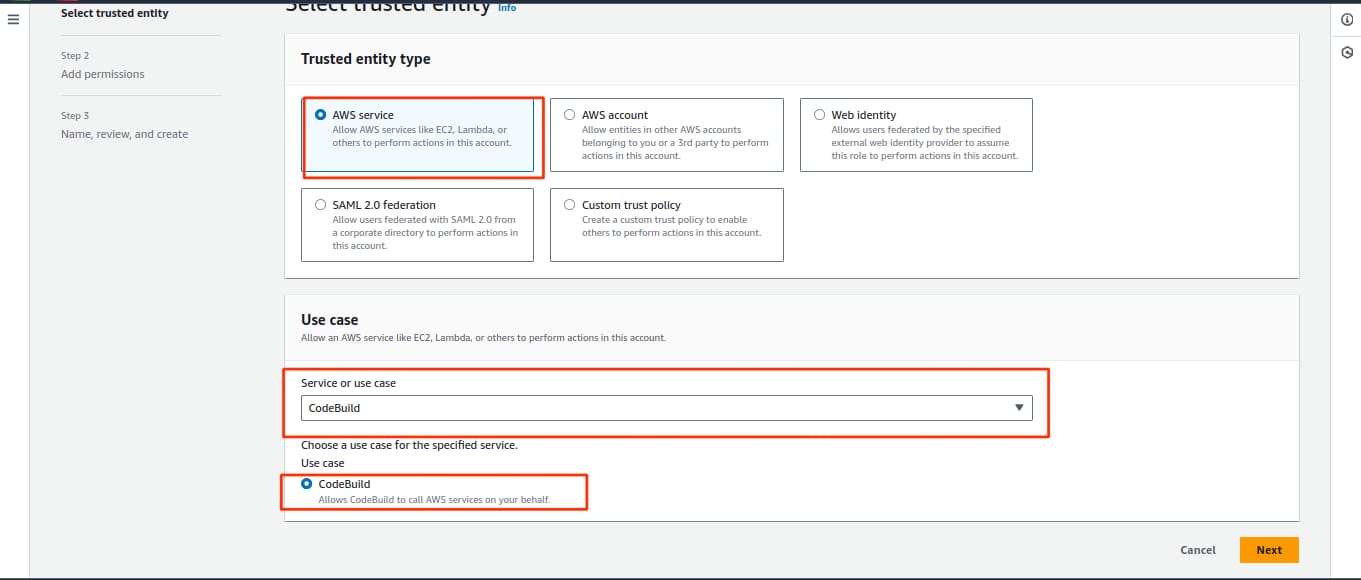

- For the use case, select CodeBuild

- Attach the AmazonSSMFullAccess policy

- Here we can see that our role has been created

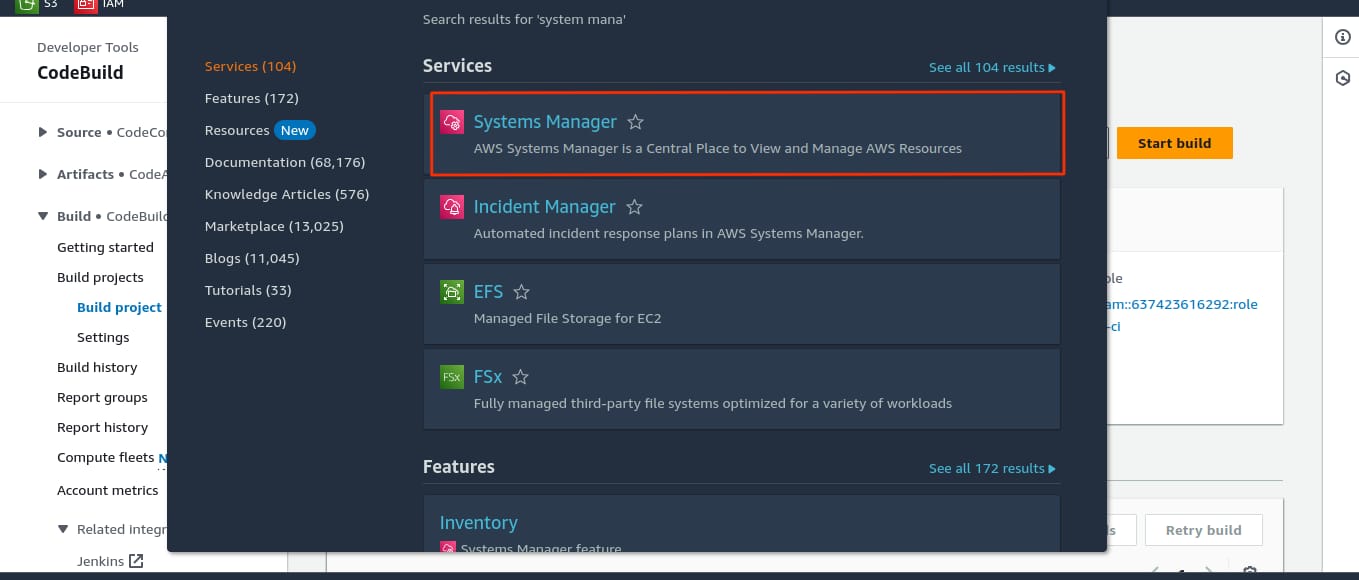

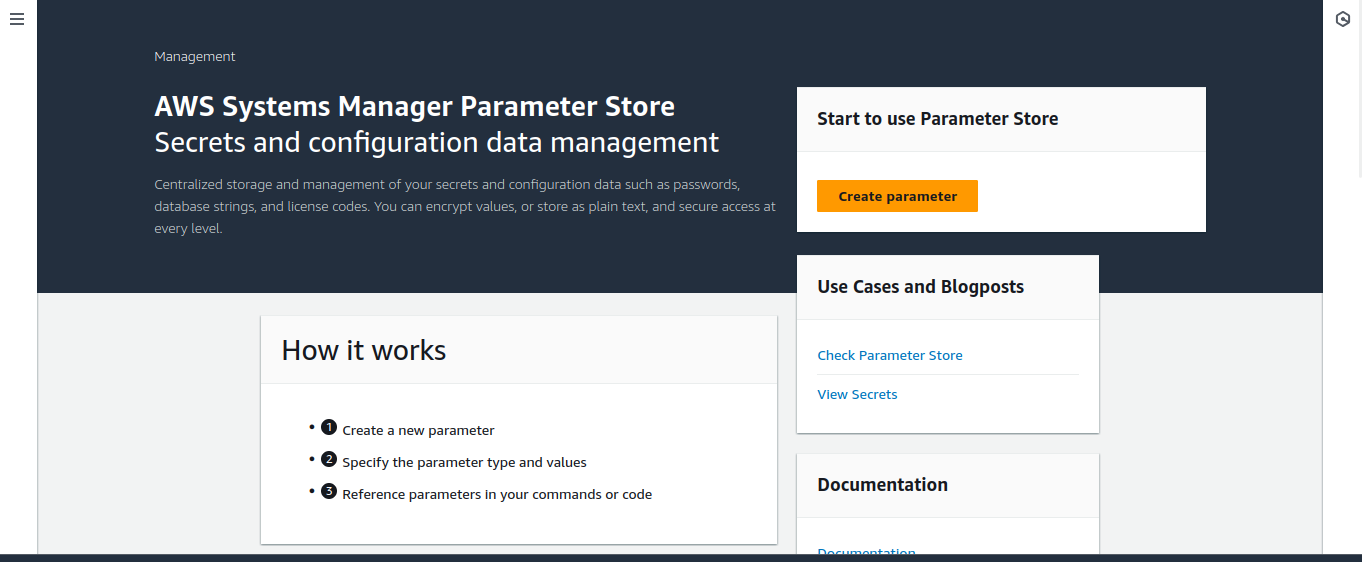

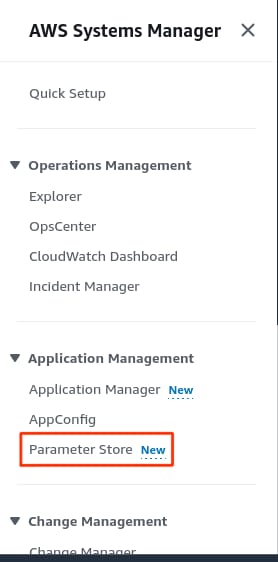
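For readers who prefer code to console clicks, the role setup can be sketched roughly as follows. Choosing CodeBuild as the use case corresponds to a trust policy that lets the `codebuild.amazonaws.com` service assume the role, and the second call attaches the AWS managed `AmazonSSMFullAccess` policy. The function names are hypothetical, and `iam_client` is assumed to be a boto3 IAM client.

```python
import json

# AWS managed policy that grants access to Systems Manager, including Parameter Store.
SSM_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonSSMFullAccess"


def codebuild_trust_policy() -> str:
    """Return a trust policy allowing CodeBuild to assume the role."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "codebuild.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    })


def create_parameter_store_role(iam_client, role_name):
    """Create the role, then attach the SSM managed policy to it."""
    iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=codebuild_trust_policy(),
    )
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=SSM_POLICY_ARN)


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   create_parameter_store_role(boto3.client("iam"), "my-codebuild-role")
```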

- After creating the role, search for Systems Manager and open Parameter Store to create the parameters

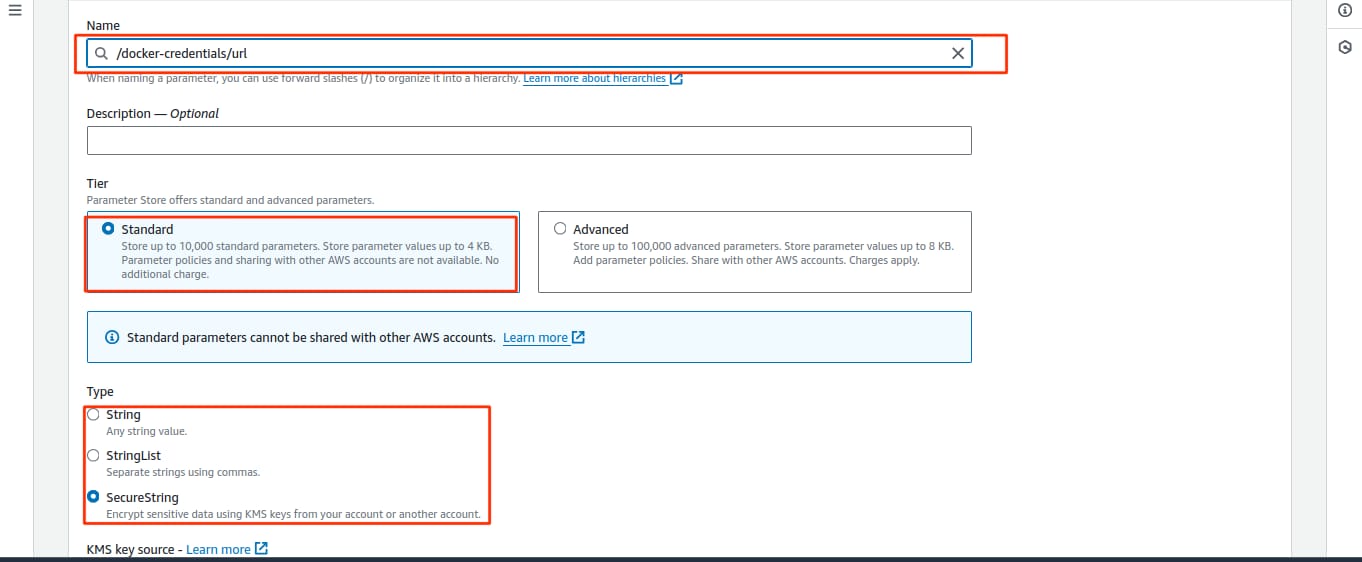

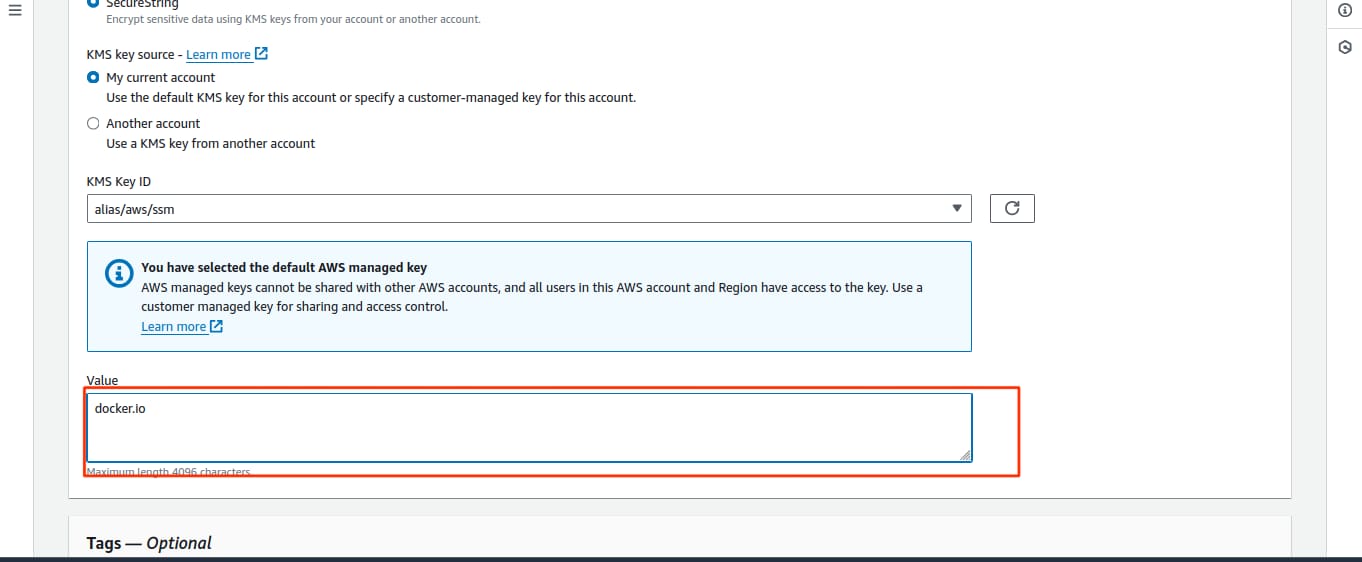

- In the parameter section, the names must exactly match the ones used in the build commands. For example, for the parameter named /docker-registry/url, provide the Docker registry URL in the value field. Similarly, for /docker-credentials/username enter your Docker username, and for /docker-credentials/password enter your password. Create three parameters this way: URL, username, and password.

Remember, you need a Docker account to run this build.

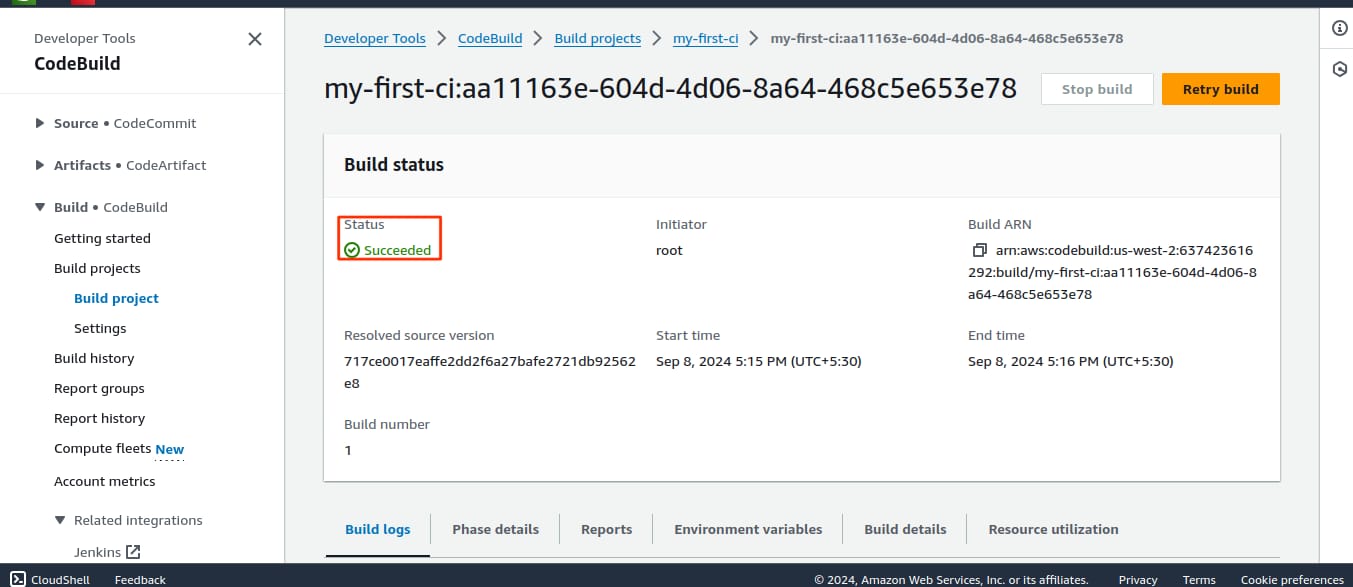
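As a rough sketch, the three parameters could also be created with the Systems Manager `PutParameter` API. The names below are copied from the `parameter-store` section of the build commands. Storing the password as a `SecureString` and the other two as plain `String` values is an assumption made here, since the post does not say which type was chosen, and the function names are hypothetical.

```python
# Parameter names exactly as they appear in the build commands.
PARAMETER_NAMES = {
    "username": "/docker-credentials/username",
    "password": "/docker-credentials/password",
    "url": "/docker-registry/url",
}


def parameter_requests(username, password, url):
    """Build one PutParameter request per buildspec parameter."""
    values = {"username": username, "password": password, "url": url}
    return [
        {
            "Name": PARAMETER_NAMES[key],
            "Value": values[key],
            # Assumption: only the password is stored encrypted.
            "Type": "SecureString" if key == "password" else "String",
            "Overwrite": True,
        }
        for key in PARAMETER_NAMES
    ]


def store_parameters(ssm_client, requests):
    """Send each request through a boto3 SSM client."""
    for request in requests:
        ssm_client.put_parameter(**request)


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   store_parameters(boto3.client("ssm"),
#                    parameter_requests("exampleuser", "secret", "docker.io"))
```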

- After creating the parameters, go back to the CodeBuild project you created earlier and click Start build

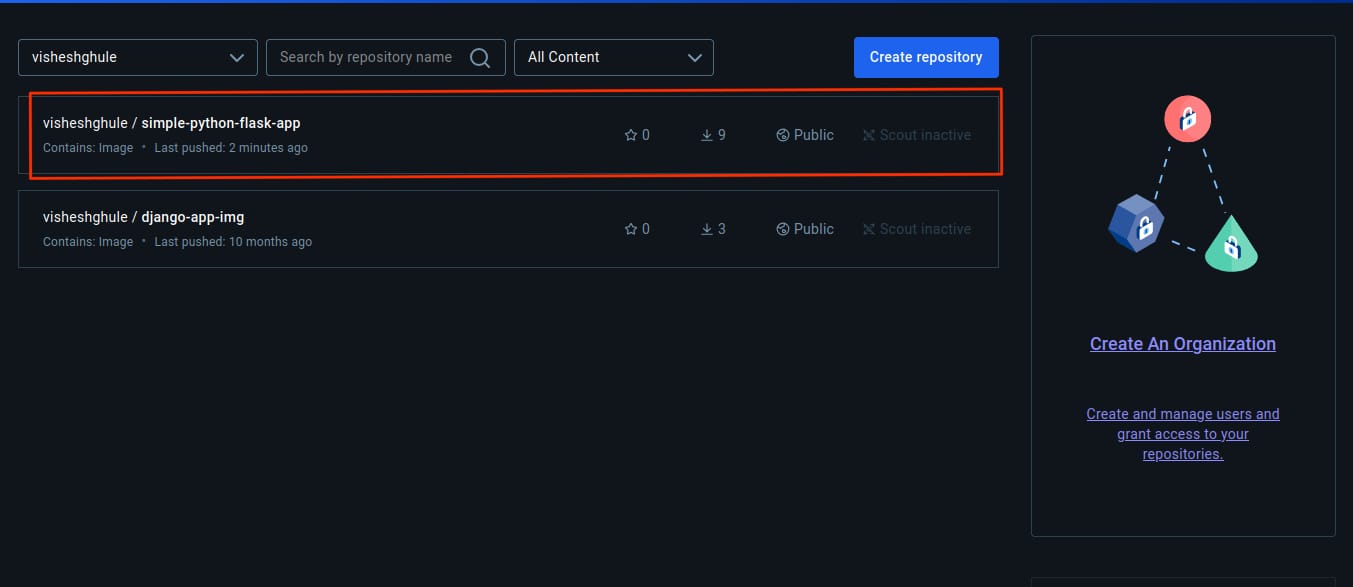

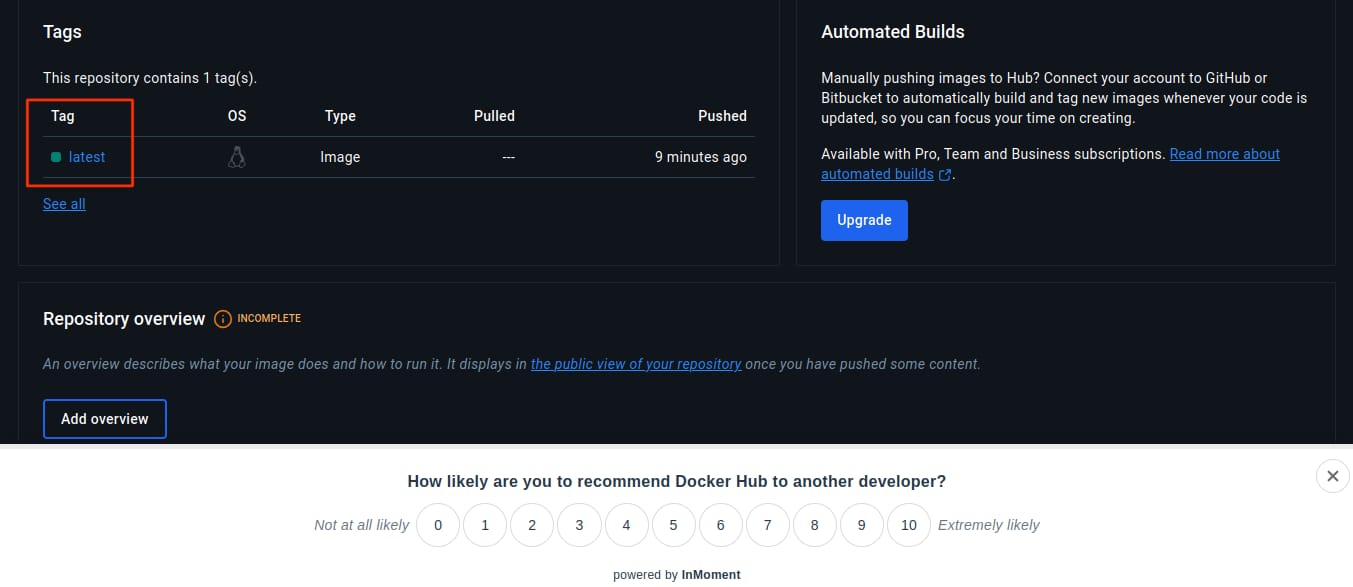
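Clicking Start build has an API equivalent. The sketch below starts a build and polls its status until it finishes, assuming `codebuild_client` is a boto3 CodeBuild client. The function name, polling interval, and poll limit are illustrative choices rather than anything prescribed by AWS or this post.

```python
import time

# Build statuses after which CodeBuild will not change the result again.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "FAULT", "STOPPED", "TIMED_OUT"}


def run_build(codebuild_client, project_name, poll_seconds=10.0, max_polls=180):
    """Start a build and wait until it reaches a terminal status."""
    started = codebuild_client.start_build(projectName=project_name)
    build_id = started["build"]["id"]
    for _ in range(max_polls):
        build = codebuild_client.batch_get_builds(ids=[build_id])["builds"][0]
        if build["buildStatus"] in TERMINAL_STATUSES:
            return build["buildStatus"]
        time.sleep(poll_seconds)  # still IN_PROGRESS, check again later
    raise TimeoutError(f"build {build_id} did not finish in time")


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   print(run_build(boto3.client("codebuild"), "my-project"))
```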

- In the images below, you can see that the image was last pushed 2 minutes ago and that its tag is "latest".

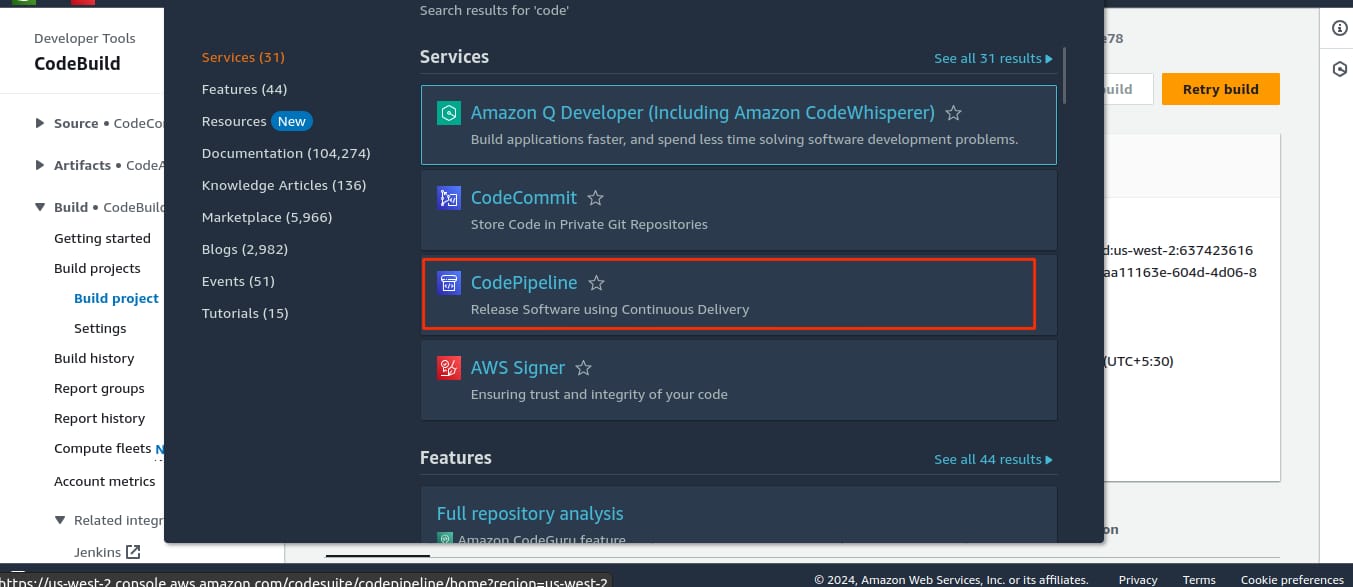

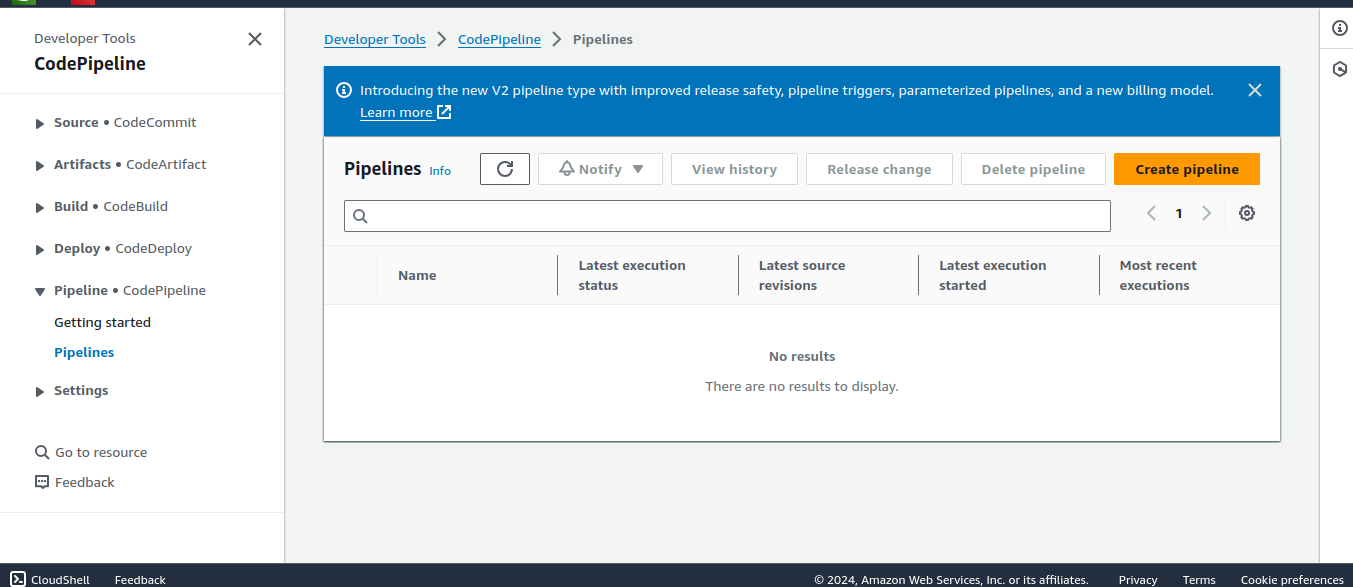

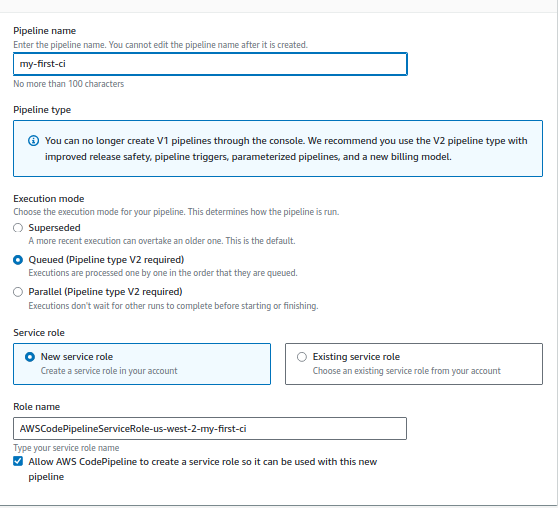

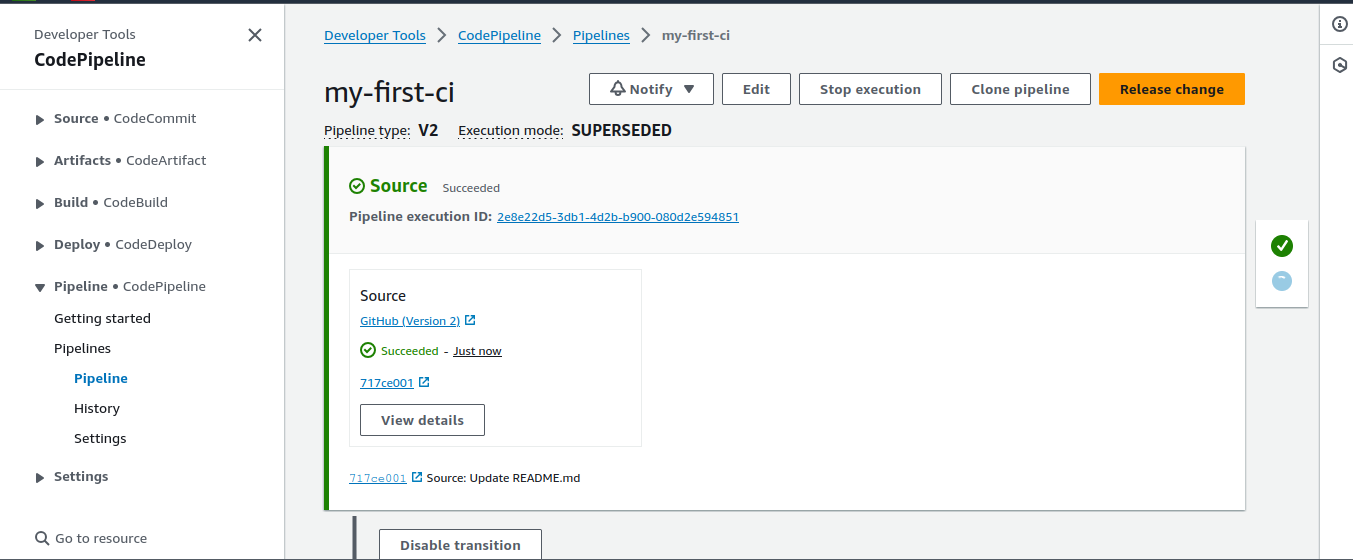

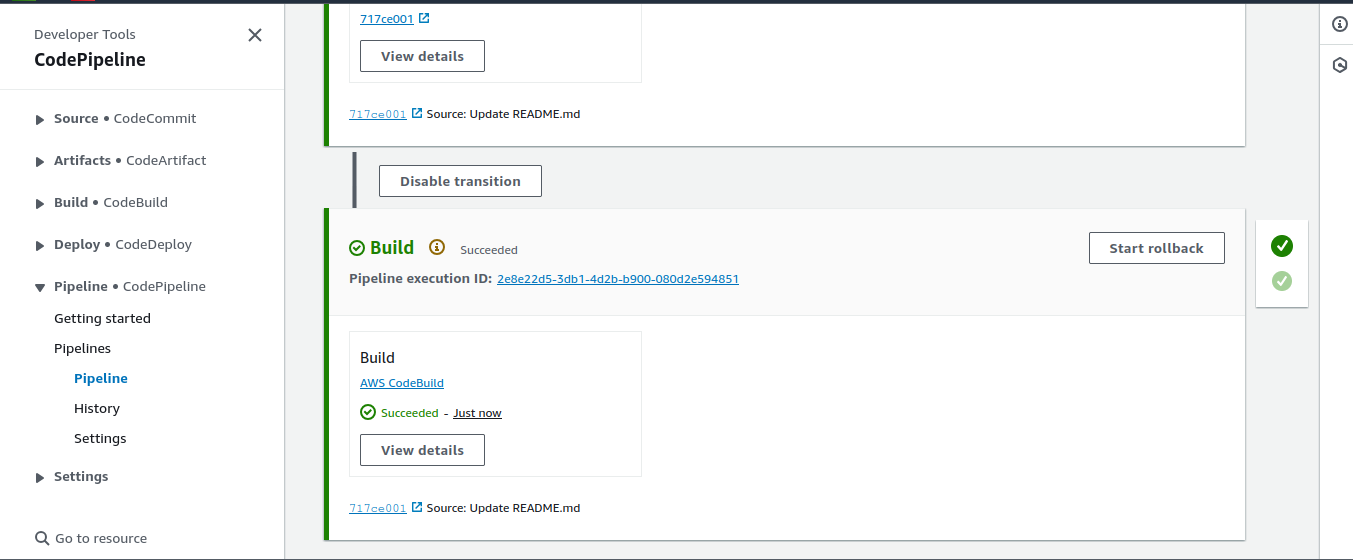

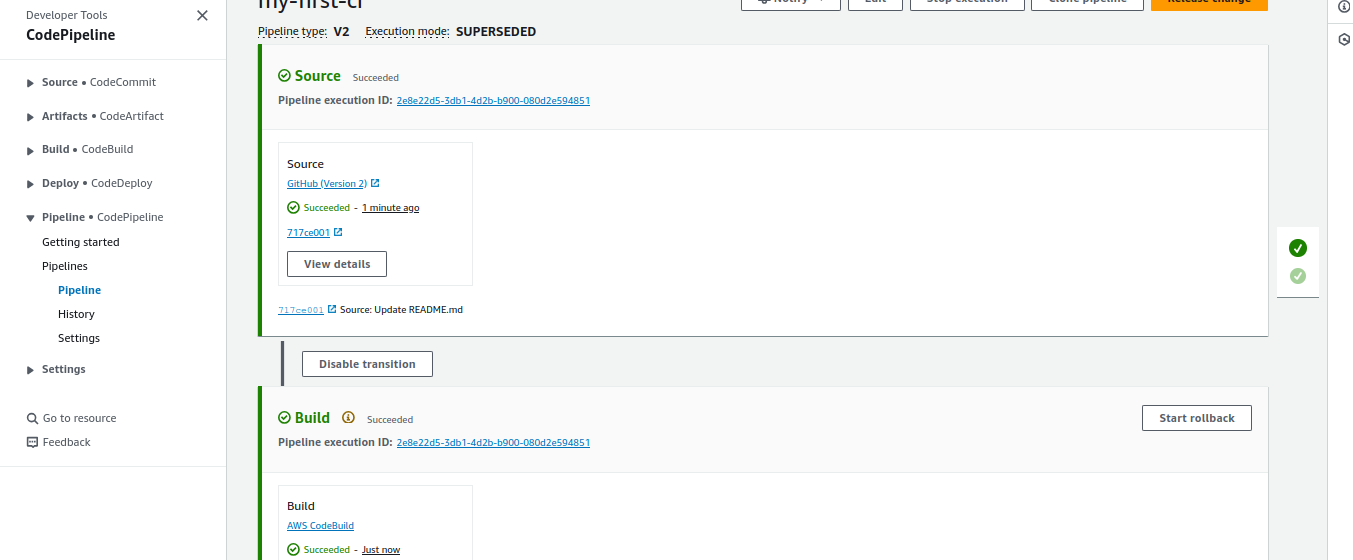

- After the build succeeds, create the pipeline in CodePipeline

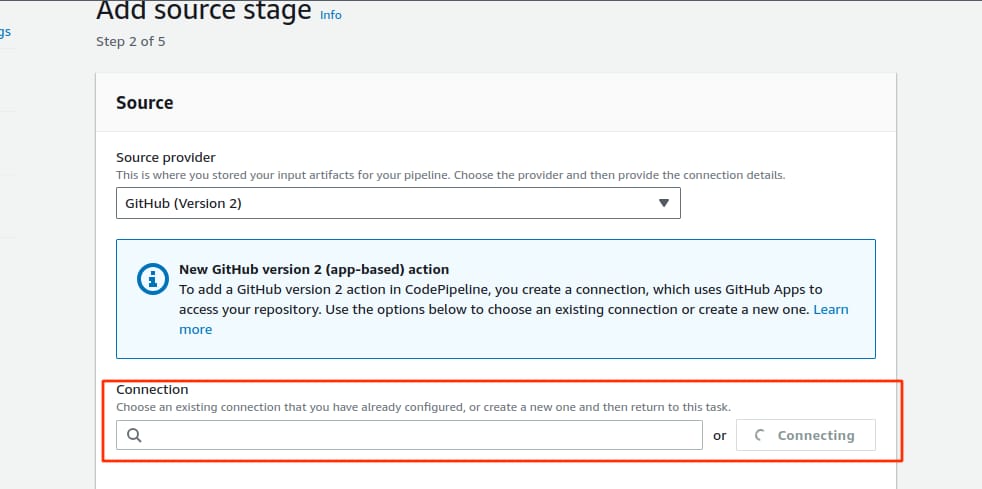

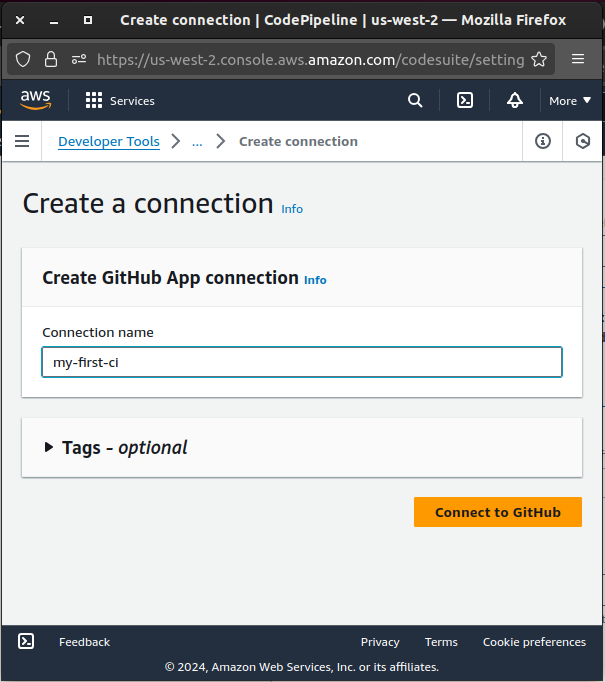

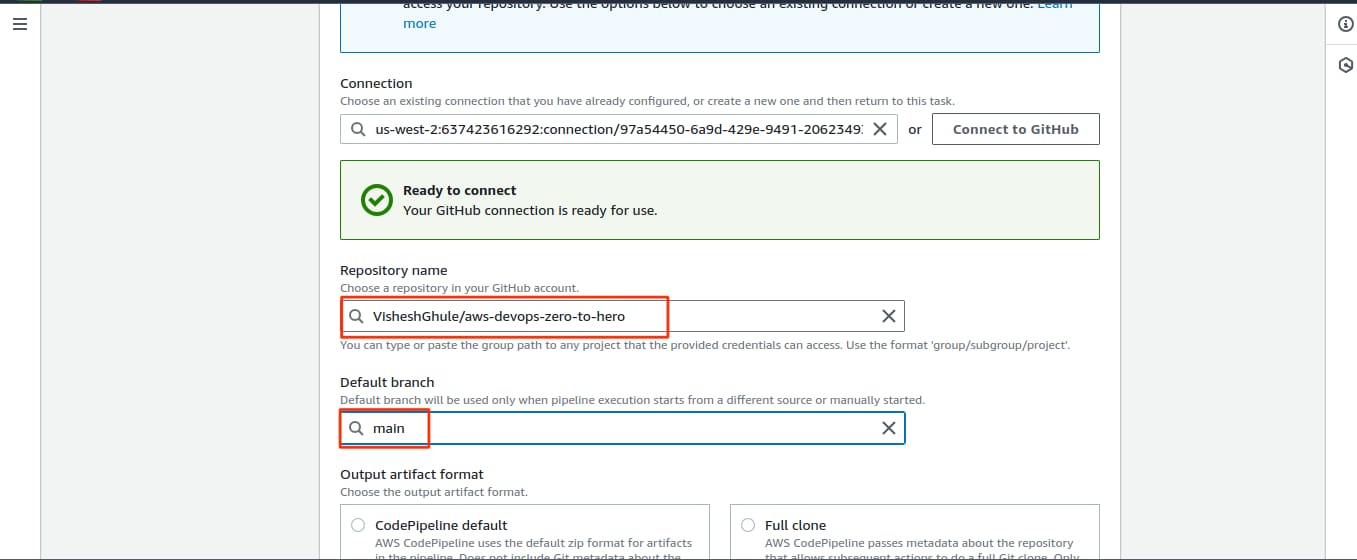

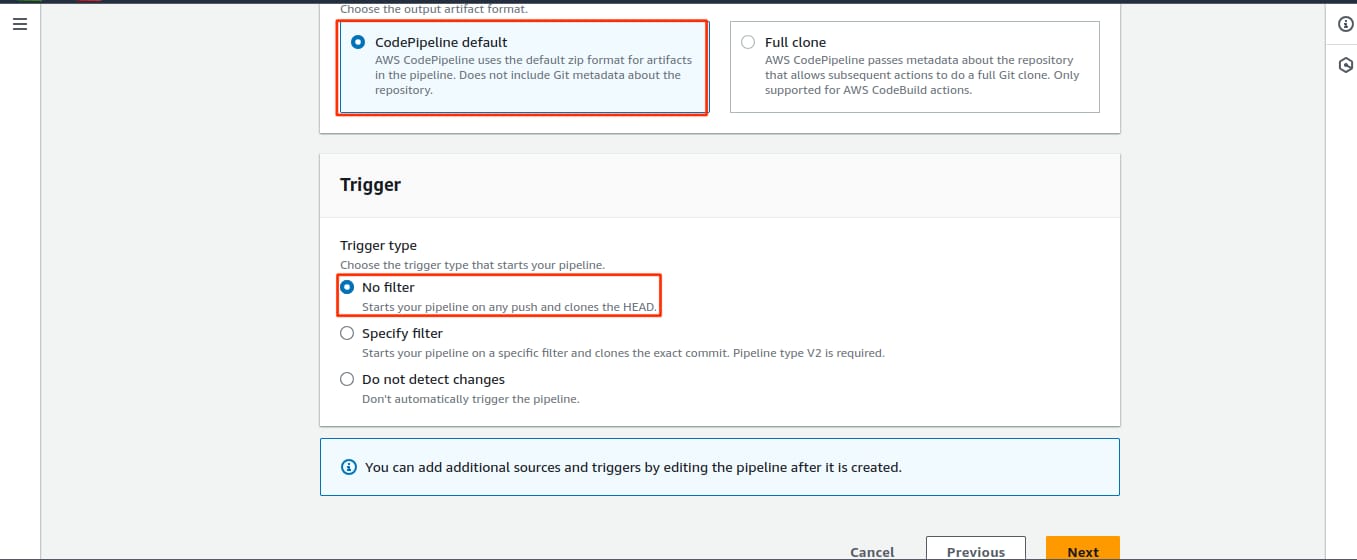

- Select GitHub (Version 2) and connect to your GitHub account

- I have forked the repository, so I have provided the path to my fork

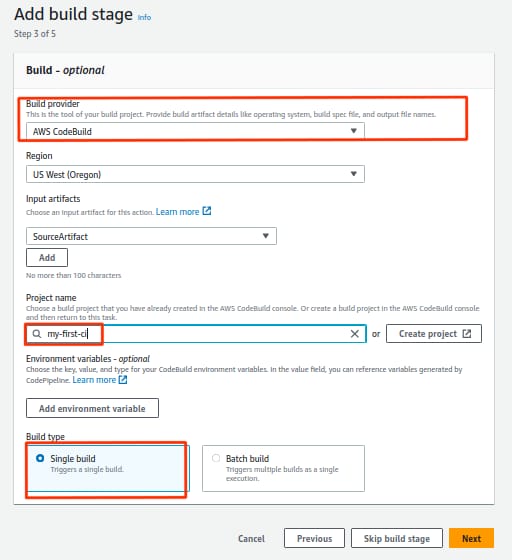

- Select CodeBuild as the build provider

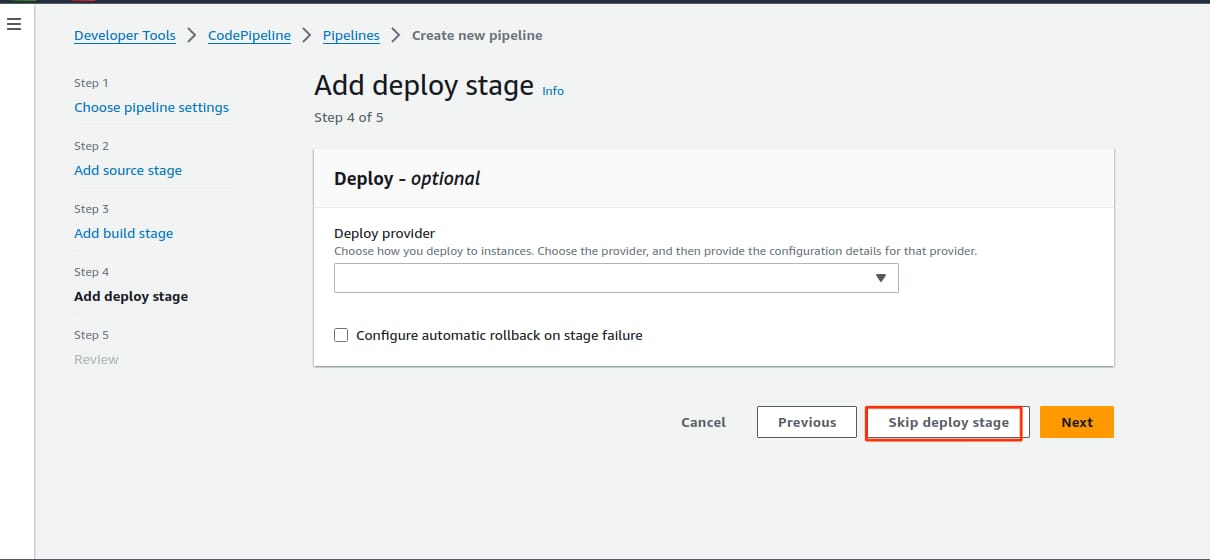

- Skip the deployment stage for now, since this blog focuses only on integration. We will cover deployment in the next blog.
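The pipeline described in these steps has two stages, a GitHub (Version 2) source and a CodeBuild build. A rough sketch of the matching definition for the CodePipeline `CreatePipeline` API is shown below. The function name, the stage and artifact names, and the S3 artifact bucket argument are assumptions for illustration; the console normally sets up the role, bucket, and connection for you.

```python
def pipeline_definition(name, role_arn, artifact_bucket, connection_arn,
                        repository_id, branch, build_project):
    """Build a two-stage (Source, Build) pipeline structure."""
    source_action = {
        "name": "Source",
        "actionTypeId": {"category": "Source", "owner": "AWS",
                         "provider": "CodeStarSourceConnection", "version": "1"},
        "configuration": {
            "ConnectionArn": connection_arn,    # the GitHub connection
            "FullRepositoryId": repository_id,  # for example "owner/repo"
            "BranchName": branch,
        },
        "outputArtifacts": [{"name": "SourceOutput"}],
    }
    build_action = {
        "name": "Build",
        "actionTypeId": {"category": "Build", "owner": "AWS",
                         "provider": "CodeBuild", "version": "1"},
        "configuration": {"ProjectName": build_project},
        "inputArtifacts": [{"name": "SourceOutput"}],
    }
    return {
        "name": name,
        "roleArn": role_arn,
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [
            {"name": "Source", "actions": [source_action]},
            {"name": "Build", "actions": [build_action]},
        ],  # no deploy stage, matching the skipped deployment step
    }


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   boto3.client("codepipeline").create_pipeline(pipeline=pipeline_definition(...))
```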

🚀 Conclusion

In this blog, we explored the process of setting up a Continuous Integration (CI) pipeline using AWS CodeBuild. We covered key steps, including creating a CodeBuild project, configuring build commands, and handling parameters securely. By following the instructions and images provided, you should now have a functional CI pipeline that automates the build and test phases of your application development.

Remember, this blog focused on integration. The next post will cover deploying the application.

Thanks for reading to the end; I hope you gained some knowledge.❤️🙌

