Unraid VM Snapshot Automation with Ansible: Part 1 - Creating Snapshots

Jeffrey Lyon


Intro

Hello! Welcome to my very first blog post EVER!

In this series, I’ll dive into how you can leverage Ansible to automate snapshot creation and restoration in Unraid, helping to streamline your backup and recovery processes. Whether you’re new to Unraid or looking for ways to optimize an existing Unraid setup, this post starts with how I create snapshots when no official solution is provided (that I know of...). Recovery using the created snapshots will come in the next post.

This is a warm-up series/post to help me start my blogging journey. It's mainly aimed at the home lab community, but this post, or parts of it, can also be useful in other scenarios and on various Linux-based systems.

Scenario

Unraid is a great platform for managing storage, virtualization, and Docker containers, but it doesn't have built-in support for taking snapshots of virtual machines (VMs). Snapshots are important because they let you save the state of a VM disk at a specific time, so you can easily restore the disk if something goes wrong, like errors, updates, or failures. Without this feature, users who depend on VMs for important tasks or development need to find other ways or use third-party tools to handle snapshots. This makes automating backup and recovery harder, especially in setups where snapshots are key for keeping the system stable and protecting data.

I will be using an Ubuntu Ansible host, my Unraid server as the snapshot source, and my Synology DiskStation as the remote storage destination for backing up the snapshots. Unraid pushes the snapshots to the DiskStation with rsync. I'll also cover creating local snapshots as TAR files, which allows for faster restores.

Ansible host (Ubuntu 24.04)

Unraid server (v6.12) - Runs custom Linux OS based on Slackware Linux

Synology DiskStation (v7.1) - Runs custom Linux OS - Synology DiskStation Manager (DSM)

These systems will be communicating over the same 192.168.x.x MGMT network.

NOTE: Throughout this post (and in future related posts), I’ll refer to the DiskStation as the "destination" or "NAS" device. I’m keeping these terms generic to accommodate readers following along with different system setups, so the concepts apply broadly across various environments. I also won’t go into much detail on specific Ansible modules, structured data, or Jinja2 templating syntax. There are plenty of great resources and documentation out there that cover them.

Requirements

I will include required packages, configuration, and setup for the systems involved in this automation.

Ansible host

You will need the following:

Python (3.10 or greater suggested)

Ansible core

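sudo apt install -y ansible-core python3

Modify your ansible.cfg file to disable host_key_checking. It is usually located in /etc/ansible/.

[defaults]
host_key_checking = False

NOTE: Because the inventory (covered below) uses password-based SSH via ansible_password, Ansible's ssh connection also needs the sshpass program on the Ansible host (sudo apt install -y sshpass).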

NOTE: If you're unsure where to find your ansible.cfg, just run ansible --version as shown below:

ansible --version

ansible [core 2.16.3]

config file = /etc/ansible/ansible.cfg

Unraid server

This setup is not terrible but not as flexible:

NOTE: All commands in Unraid I'm running as user 'root'. Not the most secure, yes, but easiest for now.

Python (only supports version 3.8) - Needs to be installed from the 'Nerd Tools' plugin and enabled in GUI.

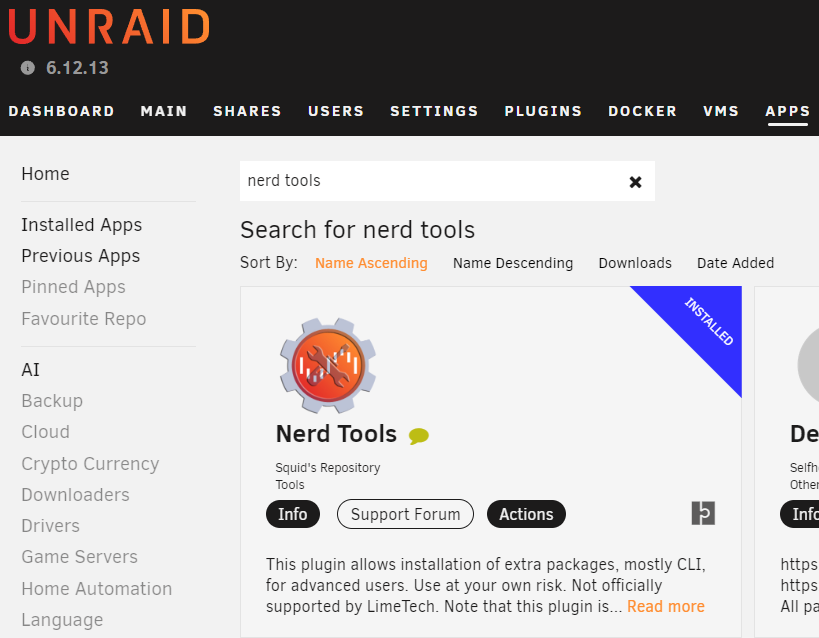

Nerd Tools plugin

To install: in the GUI, click on Apps -> search for 'nerd tools' -> click 'Actions' -> 'Install'.

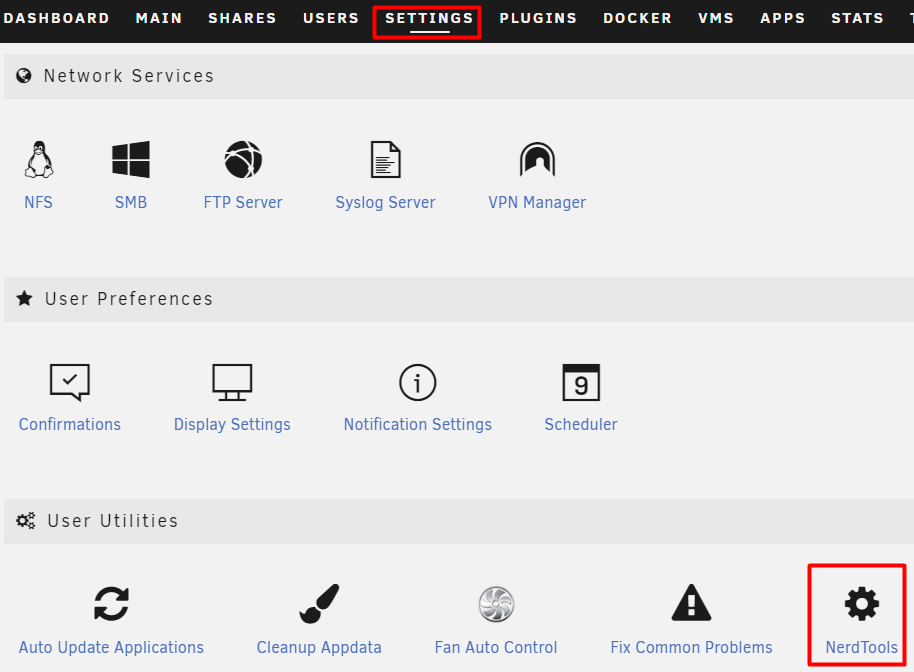

Once installed click on 'Settings' -> Scroll down until you see 'Nerd Tools' and click on it

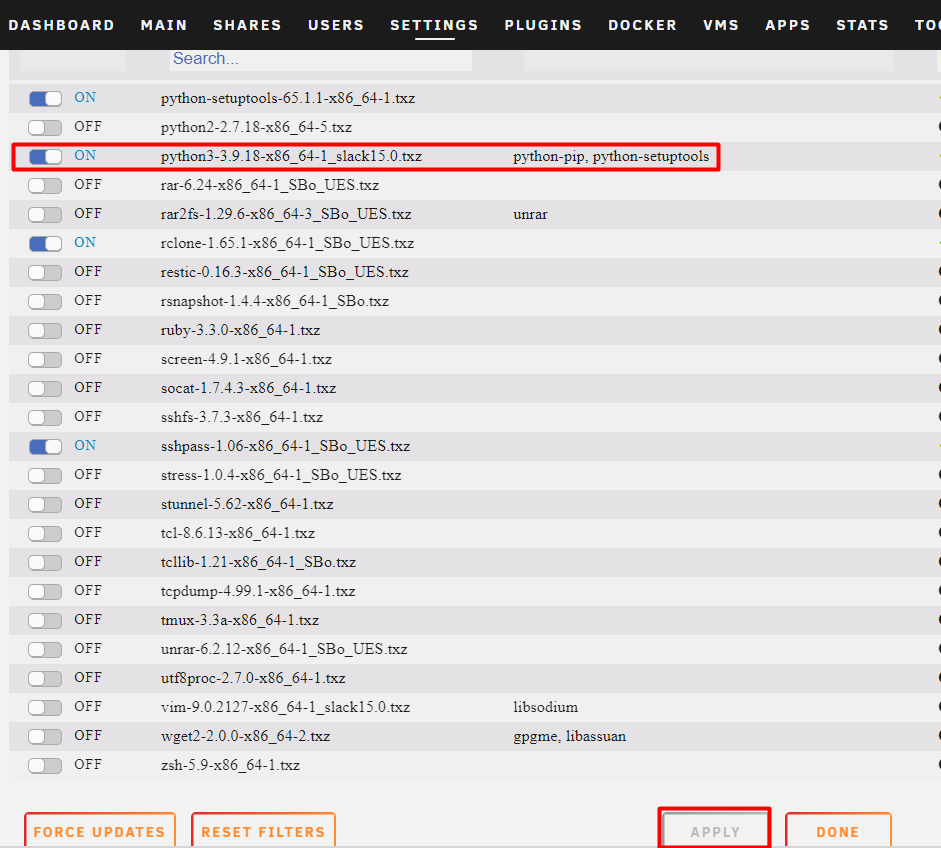

Once it loads find the Python3 option and flip it to 'On' -> Scroll down to the bottom of that page and click 'Apply'.

NOTE: This also installs 'pip' and 'python-setuptools' automatically.

rsync (enabled by default)

Synology DiskStation

A few things are needed here. You can't really install packages in the CLI, everything is pulled down from the Package Center:

Python (minimum version 3.8 - higher versions can be downloaded from the Package Center)

NOTE: This isn't necessary for the automation covered in this post. Will be necessary for future posts when Ansible actually has to connect directly.

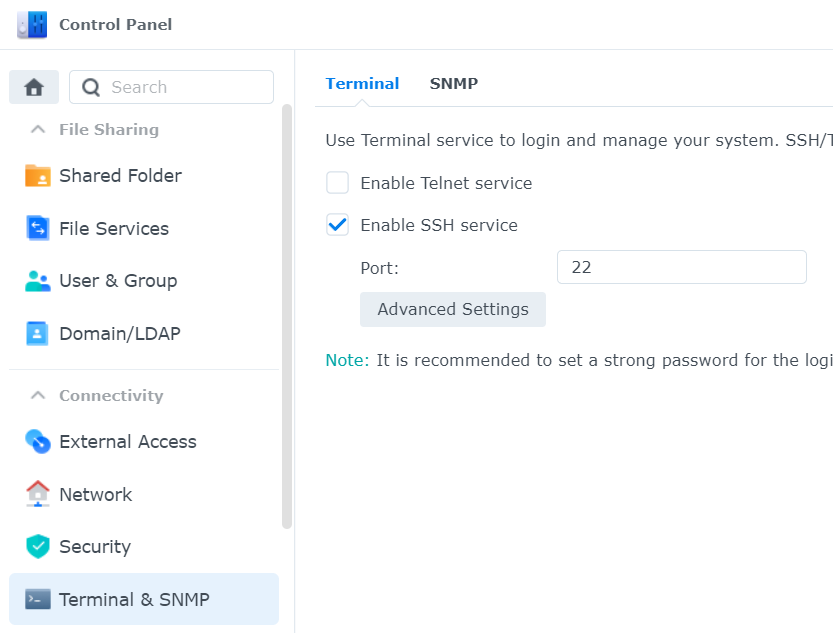

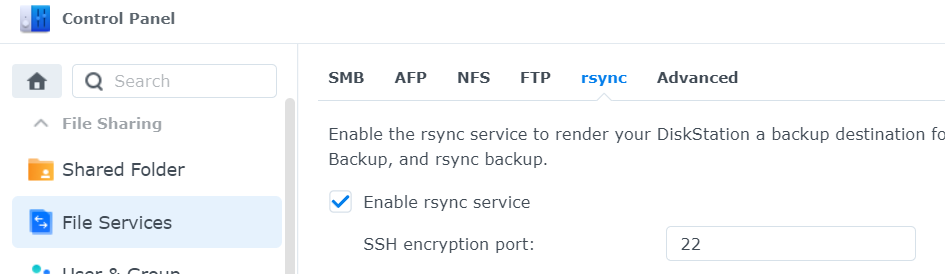

Enable SSH

Enable rsync service

Let's Automate!

...but first some more boring setup

Most of the automation will be executed directly on the Unraid host. This means that, besides the Ansible host's own credentials, Unraid itself needs a way to authenticate when it connects to the DiskStation. Using rsync (particularly through Ansible's synchronize module) can be quite troublesome when setting up remote-to-remote authentication. To simplify this, I'll use SSH key-based authentication, which enables passwordless login and makes remote connectivity much smoother.

As a prerequisite, I have already set up a user 'unraid' on the DiskStation. It is allowed to SSH into the DiskStation and has read/write access to the Backup folder I had already created.

To configure SSH key-based authentication (on Unraid server)

Generate SSH Key

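unraid# ssh-keygen

Follow the prompts. You can give the key pair a descriptive name, but if you do, you'll need to point ssh at it (with -i or an IdentityFile entry in ~/.ssh/config), since ssh only tries the default key names automatically. Don't bother setting a passphrase for it; the rsync step runs unattended. Since I was doing this as the 'root' user, the new public and private key files were placed in '/root/.ssh/'.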

Copy SSH Key to DiskStation system.

unraid# ssh-copy-id unraid@<diskstation_ip>

You will be prompted for the 'unraid' user's password. If successful, you should see output similar to the following:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'unraid@<diskstation_ip>'"
and check to make sure that only the key(s) you wanted were added.

On the DiskStation, the public key is appended to the authorized_keys file in the .ssh folder of the user's home directory (i.e. the 'unraid' user's home).

Test using the above mentioned command. In my case -

ssh unraid@<diskstation_ip>
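Before moving on, it can help to confirm the key is actually being used, rather than a password you happen to type. The sketch below is a hypothetical helper (check_key_login is not part of the project) built on OpenSSH's BatchMode=yes option, which makes ssh fail instead of prompting for a password; ConnectTimeout just keeps it from hanging on an unreachable host.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if key-based SSH login works non-interactively.
check_key_login() {
  local target=$1   # e.g. unraid@<diskstation_ip>
  # BatchMode=yes disables password/passphrase prompts, so a missing or
  # rejected key makes ssh exit non-zero instead of waiting for input.
  if ssh -o BatchMode=yes -o ConnectTimeout=5 "$target" true; then
    echo "key auth OK for $target"
  else
    echo "key auth FAILED for $target" >&2
    return 1
  fi
}
```

Run it on Unraid as check_key_login unraid@<diskstation_ip>. A failure here usually points to one of the gotchas that follow.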

NOTE: A few gotchas I'd like to share -

Destination NAS device (DiskStation) still asking for password

Solved by tightening the permissions on the .ssh folder on both the Unraid and destination NAS (DiskStation) devices as follows -

chmod g-w /<absolute path>/.ssh/
chmod o-wx /<absolute path>/.ssh/

Errors when modifying the SSH known_hosts file

hostfile_replace_entries: link /root/.ssh/known_hosts to /root/.ssh/known_hosts.old: Operation not permitted
update_known_hosts: hostfile_replace_entries failed for /root/.ssh/known_hosts: Operation not permitted

Solved by running ssh-keyscan from Unraid against the destination NAS and appending the result to known_hosts -

unraid# ssh-keyscan -H <diskstation_ip> >> ~/.ssh/known_hosts
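The permission fix works because sshd's StrictModes check (enabled by default in OpenSSH) ignores key files when the home directory, the .ssh folder, or authorized_keys is writable by group or others. Here is a minimal sketch that applies the conventional modes in one go, assuming the standard OpenSSH layout; fix_ssh_perms is a hypothetical helper, and the home directory path on the DiskStation depends on your DSM setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: apply the permissions sshd's StrictModes expects.
# Assumes the standard layout: HOME_DIR/.ssh and HOME_DIR/.ssh/authorized_keys.
fix_ssh_perms() {
  local home_dir=$1
  chmod go-w "$home_dir"          # home must not be group/other writable
  chmod 700 "$home_dir/.ssh"      # only the owner may access .ssh
  if [ -f "$home_dir/.ssh/authorized_keys" ]; then
    chmod 600 "$home_dir/.ssh/authorized_keys"
  fi
}
```

For example, fix_ssh_perms /root on Unraid, or pass the 'unraid' user's home directory on the DiskStation.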

Overview and Breakdown

Let's start by discussing the playbook directory structure. It looks like this:

├── README.md
├── create-snapshot-pb.yml
├── defaults
│   └── inventory.yml
├── files
│   ├── backup-playbook-old.yml
│   └── snapshot-creation-unused.yml
├── handlers
├── meta
├── restore-from-local-tar-pb.yml
├── restore-from-snapshot-pb.yml
├── tasks
│   ├── shutdown-vm.yml
│   └── start-vm.yml
├── templates
├── tests
│   ├── debug-tests-pb.yml
│   └── simple-debugs.yml
└── vars
    ├── snapshot-creation-vars.yml
    └── snapshot-restore-vars.yml

I copied the standard Ansible Role directory structure in case I want to publish this as a role in the future. Let's go over the breakdown:

defaults/inventory.yml - The main static inventory. Consists of the unraid and diskstation hosts with their Ansible connection variables and SSH credentials.

vars/snapshot-creation-vars.yml - Where users define the list of VMs and their associated disks for snapshot creation. It mainly specifies the targeted VMs and the disks to snapshot, plus a few variables related to the connection with the destination NAS device.

tasks/shutdown-vm.yml - Tasks used to gracefully shut down the targeted VMs and poll until the shutdown is confirmed.

tasks/start-vm.yml - Tasks used to start the targeted VMs, poll their status, and assert they are running before moving on.

create-snapshot-pb.yml - The main playbook covered in this post. It consists of two plays. The first play has two purposes: to perform checks on the targeted Unraid VMs/disks and to build additional data structures and dynamic hosts. The second play creates the snapshots and pushes them to the destination.

tests and files folders - (files) Unused files/tasks I used while creating and testing the main playbook. (tests) Simple debug tasks I could quickly paste in to get output from playbook execution.

restore-from-local-tar-pb.yml, restore-from-snapshot-pb.yml, and snapshot-restore-vars.yml - Files related to restoring the disks once the snapshots are created. They will be covered in the next article of this series.

Inventory - defaults/inventory.yml

The inventory file is pretty straightforward, as shown here:

---
nodes:
  hosts:
    diskstation:
      ansible_host: "{{ lookup('env', 'DISKSTATION_IP_ADDRESS') }}"
      ansible_user: "{{ lookup('env', 'DISKSTATION_USER') }}"
      ansible_password: "{{ lookup('env', 'DISKSTATION_PASS') }}"
    unraid:
      ansible_host: "{{ lookup('env', 'UNRAID_IP_ADDRESS') }}"
      ansible_user: "{{ lookup('env', 'UNRAID_USER') }}"
      ansible_password: "{{ lookup('env', 'UNRAID_PASS') }}"

This file defines two hosts—unraid and diskstation—along with the essential connection variables Ansible requires to establish SSH access to these devices. For more details on the various types of connection variables, refer to the link provided below:

Ansible Connection Variables

To keep things simple (and keep credentials out of the inventory file), I’m using environment variables to store the Ansible connection values. These variables need to be set on the Ansible host before running the playbook. If you’re new to automation or Linux, you can create them as shown below (the names must match the lookups in the inventory):

ansible_host# export UNRAID_USER=root
ansible_host# export DISKSTATION_IP_ADDRESS=192.168.1.100
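An unset variable simply becomes an empty string in the env lookup, so a typo here tends to surface later as a confusing connection error. Here is a small pre-flight sketch that checks the six names used in defaults/inventory.yml before you call ansible-playbook; check_inventory_env is a hypothetical helper, not part of the project.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helper: report inventory environment variables
# that are unset or empty. Returns non-zero if any are missing.
check_inventory_env() {
  local missing=0 var
  for var in UNRAID_IP_ADDRESS UNRAID_USER UNRAID_PASS \
             DISKSTATION_IP_ADDRESS DISKSTATION_USER DISKSTATION_PASS; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var.
    if [ -z "${!var:-}" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check_inventory_env && ansible-playbook create-snapshot-pb.yml -i defaults/inventory.yml
```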

Variables - vars/snapshot-creation-vars.yml

This playbook uses a single variable file, which serves as the main file the user will interact with. In this file, you'll define your list of VMs, specify the disks associated with each VM that need snapshots, and provide the path to the directory where each VM's existing disk .img files are stored.

---
snapshot_repository_base_directory: volume1/Home\ Media/Backup
repository_user: unraid
snapshot_create_list:
  - vm_name: Rocky9-TESTNode
    disks_to_snapshot:
      - disk_name: vdisk1.img
        source_directory: /mnt/cache/domains
        desired_snapshot_name: test-snapshot
      - disk_name: vdisk2.img
        source_directory: /mnt/disk1/domains
  - vm_name: Rocky9-LabNode3
    disks_to_snapshot:
      - disk_name: vdisk1.img
        source_directory: /mnt/nvme_cache/domains
        desired_snapshot_name: kuberne&<tes-baseline

Let's break this down:

snapshot_create_list - the main data structure for defining your list of VMs and disks. Each entry has two main variables: 'vm_name' and 'disks_to_snapshot'.

vm_name - the name of your VM. It must match the name of the VM in the Unraid system itself.

disks_to_snapshot - a per-VM list of the disks to snapshot. Each entry requires two variables, disk_name and source_directory, with desired_snapshot_name as an optional third variable.

disk_name - the existing .img file name for that VM disk, e.g. vdisk1.img.

source_directory - the absolute root path of the directory where the per-VM folders are stored. An example of a full path to an .img file within Unraid would be: /mnt/cache/domains/Rocky9-TESTNode/vdisk1.img

desired_snapshot_name - an optional attribute you can define to customize the snapshot name. The playbook sanitizes it, so a value like kuberne&<tes-baseline becomes kubernetes_baseline. If left undefined, a timestamp of the current date/time is used instead, e.g. vdisk2.2024-09-12T03.09.17Z.img.

snapshot_repository_base_directory and repository_user - used within the playbook's rsync task. They let you specify your own remote user and target destination for the rsync operation, and are only used if the snapshots are sent to a remote location upon creation.
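To make the naming rules concrete, here is a shell approximation of the two filename set_fact tasks in the snapshot creation play. It strips the .img suffix, then appends either the sanitized desired_snapshot_name or a UTC ISO 8601 timestamp with colons swapped for dots. This is an illustration only: the playbook does the work in Jinja2, snapshot_filename is a hypothetical name, and the tr character class approximates Python's \W for ASCII names.

```bash
#!/usr/bin/env bash
# Shell approximation of the playbook's snapshot naming (illustration only).
# Usage: snapshot_filename DISK_NAME [DESIRED_SNAPSHOT_NAME]
snapshot_filename() {
  local disk_name=$1 desired=${2:-}
  local base=${disk_name%.img}   # like disk_name[:-4], assuming a .img suffix
  local stamp
  if [ -n "$desired" ]; then
    desired=${desired//-/_}                                         # '-' -> '_'
    desired=$(printf '%s' "$desired" | LC_ALL=C tr -cd 'A-Za-z0-9_') # drop non-word chars
    printf '%s.%s.img\n' "$base" "$desired"
  else
    stamp=$(date -u +%Y-%m-%dT%H:%M:%SZ)                            # ISO 8601, UTC
    printf '%s.%s.img\n' "$base" "${stamp//:/.}"                    # ':' -> '.'
  fi
}

snapshot_filename vdisk1.img test-snapshot            # vdisk1.test_snapshot.img
snapshot_filename vdisk1.img 'kuberne&<tes-baseline'  # vdisk1.kubernetes_baseline.img
snapshot_filename vdisk2.img                          # e.g. vdisk2.2024-09-12T03.09.17Z.img
```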

Following the provided example you can define your VMs, disk names, and locations when running the playbook.

The Playbook

The playbook file is called create-snapshot-pb.yml. It consists of two plays and two additional task files.

Snapshot Creation Prep Play

- name: Unraid Snapshot Creation Preparation
  hosts: unraid
  gather_facts: yes
  vars:
    needs_shutdown: []
    confirmed_shutdown: []
    vms_map: "{{ snapshot_create_list | map(attribute='vm_name') }}"
    disks_map: "{{ snapshot_create_list | map(attribute='disks_to_snapshot') }}"
    snapshot_data_map: "{{ dict(vms_map | zip(disks_map)) | dict2items(key_name='vm_name', value_name='disks_to_snapshot') | subelements('disks_to_snapshot') }}"
  vars_files:
    - ./vars/snapshot-creation-vars.yml
  tasks:
    - name: Get initial VM status
      shell: virsh list --all | grep "{{ item.vm_name }}" | awk '{ print $3}'
      register: cmd_res
      tags: always
      with_items: "{{ snapshot_create_list }}"

    - name: Create list of VMs that need shutdown
      set_fact:
        needs_shutdown: "{{ needs_shutdown + [item.item.vm_name] }}"
      when: item.stdout != 'shut'
      tags: always
      with_items: "{{ cmd_res.results }}"

    - name: Shutdown VM(s)
      include_tasks: ./tasks/shutdown-vm.yml
      loop: "{{ needs_shutdown }}"
      tags: always
      when: needs_shutdown

Purpose:

Prepares the Unraid server for VM snapshot creation by checking the status of VMs, identifying which need to be shut down, and initiating shutdowns where necessary.

Hosts:

Targets the unraid host.

Variables:

needs_shutdown: Placeholder list of VMs that require shutdown before snapshot creation.

confirmed_shutdown: Placeholder list for VMs confirmed to be shut down.

vms_map and disks_map: New lists containing just the VM names and their individual disk data, respectively. These lists are then used to build the larger snapshot_data_map.

snapshot_data_map: Merges the VM and disk maps into a flatter structure (one [VM, disk] pair per disk) that is easier to access and loop over programmatically. My goal was to keep the variables file simple for users to understand and modify. However, that structure didn't work well with the looping logic I needed, so I created this new data map for better flexibility and control.

Variables File:

Loads additional variables from ./vars/snapshot-creation-vars.yml, mainly the user's modified snapshot_create_list.

Tasks:

Get Initial VM Status:

Runs a shell command using virsh list --all to check the current status of each VM (running or shut down). Results are stored in cmd_res.

Identify VMs Needing Shutdown:

Uses a conditional check to add VMs that are not already shut down to the needs_shutdown list.

Shutdown VMs:

Includes an external task file (shutdown-vm.yml) to gracefully shut down the VMs listed in needs_shutdown. This task loops through the VMs in that list and executes the shutdown process. Ansible can't loop over a block directly, so an included task file is the way to loop over a group of tasks while preserving error handling. If any task within the block fails, the entire block fails, ensuring that the VM is not added to the confirmed_shutdown list later in the play. This method provides better control and validation during the shutdown process.

NOTE: Tasks above all have the tag ‘always’ which is a special tag that ensures a task will always run, regardless of which tags are specified when you run a playbook.
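One thing to watch with grep "{{ item.vm_name }}" is that it matches substrings: a VM named Rocky9-TESTNode would also match a line for a hypothetical Rocky9-TESTNode2, and cmd_res.stdout would then contain two states. Below is a sketch of a stricter parser that compares the Name column exactly. vm_state_from_list is a hypothetical helper and assumes VM names without spaces. Like the playbook's awk '{ print $3}', it prints only the first word of the State column, so "shut off" comes back as shut.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `virsh list --all` output on stdin and print the
# first word of the State column for the VM whose Name column equals $1 exactly.
# Assumes VM names contain no spaces.
vm_state_from_list() {
  local vm=$1
  # NR > 2 skips the header line and the dashed separator line.
  awk -v vm="$vm" 'NR > 2 && $2 == vm { print $3 }'
}

sample=' Id   Name               State
-----------------------------------
 1    Rocky9-LabNode3    running
 -    Rocky9-TESTNode    shut off
 2    Rocky9-TESTNode2   running'

printf '%s\n' "$sample" | vm_state_from_list Rocky9-TESTNode      # shut
printf '%s\n' "$sample" | grep Rocky9-TESTNode | awk '{ print $3 }'  # shut, then running (substring match)
```

On Unraid you would pipe the real output instead: virsh list --all | vm_state_from_list Rocky9-TESTNode. If you'd rather not parse the table at all, virsh domstate Rocky9-TESTNode prints the state of a single domain (e.g. running or shut off).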

Shutdown VMs task block (tasks/shutdown-vm.yml, included by the Snapshot Creation Preparation play)

- name: Shutdown VMs Block
  block:
    - name: Shutdown VM - {{ item }}
      command: virsh shutdown {{ item }}
      ignore_errors: true

    - name: Get VM status - {{ item }}
      shell: virsh list --all | grep {{ item }} | awk '{ print $3}'
      register: cmd_res
      retries: 5
      delay: 10
      until: cmd_res.stdout != 'running'
  delegate_to: unraid
  tags: always

Here's a breakdown of the task block to shut down the targeted VMs:

Purpose:

This block is designed to gracefully shut down virtual machines (VMs) and verify their shutdown status. This block is also tagged as ‘always’, ensuring ALL tasks in the block run.

Tasks:

Shutdown VM:

Uses the virsh shutdown command to initiate the shutdown of the specified VM.

Check VM Status:

Runs a shell command to retrieve the VM's current status using virsh list. The output is parsed to confirm whether the VM is still running. The task retries up to 5 times, with a 10-second delay between attempts, until the VM is confirmed to no longer be running (cmd_res.stdout != 'running').
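The retries/delay/until combination is a plain polling loop. Here is a shell sketch of the same idea: one initial attempt plus up to RETRIES retries, with DELAY seconds between them. wait_for_state is hypothetical, and the status command is passed in so the loop can be tested without a real VM. One deliberate difference from the task above: it waits for an exact target state instead of "anything but running", which also covers the transitional "in shutdown" state that libvirt can report.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: poll CMD until its output equals TARGET.
# Makes 1 initial attempt plus up to RETRIES retries, sleeping DELAY seconds
# between attempts (similar in spirit to retries/delay/until in Ansible).
# Usage: wait_for_state TARGET RETRIES DELAY CMD [ARGS...]
wait_for_state() {
  local target=$1 retries=$2 delay=$3
  shift 3
  local attempt state
  for (( attempt = 0; attempt <= retries; attempt++ )); do
    state=$("$@")               # command substitution strips trailing newlines
    if [ "$state" = "$target" ]; then
      echo "$state"
      return 0
    fi
    if (( attempt < retries )); then
      sleep "$delay"
    fi
  done
  echo "gave up after $retries retries (last state: $state)" >&2
  return 1
}
```

On Unraid, a usage example would be virsh shutdown Rocky9-TESTNode followed by wait_for_state 'shut off' 5 10 virsh domstate Rocky9-TESTNode.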

Snapshot Creation Preparation Play (continued)

    - name: Get VM status
      shell: virsh list --all | grep "{{ item.vm_name }}" | awk '{ print $3}'
      register: cmd_res
      tags: always
      with_items: "{{ snapshot_create_list }}"

    - name: Create list to use for confirmation of VMs being shutdown
      set_fact:
        confirmed_shutdown: "{{ confirmed_shutdown + [item.item.vm_name] }}"
      when: item.stdout == 'shut'
      tags: always
      with_items: "{{ cmd_res.results }}"

    - name: Add host to group 'disks' with variables
      ansible.builtin.add_host:
        name: "{{ item[0]['vm_name'] }}-{{ item[1]['disk_name'][:-4] }}"
        groups: disks
        vm_name: "{{ item[0]['vm_name'] }}"
        disk_name: "{{ item[1]['disk_name'] }}"
        source_directory: "{{ item[1]['source_directory'] }}"
        desired_snapshot_name: "{{ item[1]['desired_snapshot_name'] | default('') }}"
      tags: always
      loop: "{{ snapshot_data_map }}"

Purpose:

This 2nd group of tasks (still within the Snapshot Prep play) checks the status of VMs, confirms which have been shut down, and adds their disks to a dynamic inventory group for snapshot creation.

Tasks:

Get VM Status:

Runs a shell command using virsh list --all to retrieve the current status (e.g., running, shut) of each VM in the snapshot_create_list. The result is stored in cmd_res.

Confirm VM Shutdown:

Updates the confirmed_shutdown list by adding VMs that are confirmed to be in the "shut" state. This ensures only properly shut down VMs proceed to the next steps.

Add Disks to Group 'disks':

Dynamically adds each VM disk to the in-memory Ansible inventory group disks, named <vm_name>-<disk name without .img> (e.g. Rocky9-TESTNode-vdisk1). Each one carries variables like vm_name, disk_name, source_directory, and desired_snapshot_name, which are used in the subsequent snapshot operations.

Other things to point out:

- Ansible lets you add inventory hosts dynamically during playbook execution, which I used to treat each disk as a "host" rather than relying solely on variables. This lets the playbook use Ansible's normal per-host execution, so each snapshot task runs concurrently across all disks (up to the forks limit, which defaults to 5). Without this method, using standard variables and looping would create and sync the snapshots one at a time. UGH. That's the reason behind Task #3. These tasks are also all tagged with ‘always’.
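Here is a rough shell analogy for why per-disk hosts help. Ansible runs each task across a play's hosts in parallel, capped by the forks setting, instead of stepping through a loop one item at a time. The sketch below is a simplification, not how Ansible schedules work internally: it starts up to FORKS background jobs, waits for that batch, then starts the next. process_disk is a hypothetical stand-in for the per-disk snapshot tasks.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for the per-disk tasks (reflink, rsync/tar, cleanup).
process_disk() {
  local host=$1
  sleep 1                 # pretend to do some slow work
  echo "done: $host"
}

# Start up to FORKS jobs at once, wait for the batch, then start the next.
# Usage: run_in_batches FORKS HOST...
run_in_batches() {
  local forks=$1
  shift
  local count=0 host
  for host in "$@"; do
    process_disk "$host" &
    count=$((count + 1))
    if (( count >= forks )); then
      wait                # let the current batch finish
      count=0
    fi
  done
  wait                    # wait for the last, possibly partial, batch
}

# Three "disk hosts" with forks=5 finish in about 1 s rather than about 3 s.
time run_in_batches 5 Rocky9-TESTNode-vdisk1 Rocky9-TESTNode-vdisk2 Rocky9-LabNode3-vdisk1
```

If you snapshot more than five disks at once, you can raise the limit for a run with ansible-playbook's --forks option.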

Snapshot Creation Play

- name: Unraid Snapshot Creation
  hosts: disks
  gather_facts: no
  vars_files:
    - ./vars/snapshot-creation-vars.yml
  tasks:
    - name: Snapshot Creation Task Block
      block:
        - setup:
            gather_subset:
              - 'min'
          delegate_to: unraid

        - name: Create snapshot image filename
          set_fact:
            snapshot_filename: "{{ disk_name[:-4] }}.{{ desired_snapshot_name | regex_replace('\\-', '_') | regex_replace('\\W', '') }}.img"
          delegate_to: unraid
          when: desired_snapshot_name is defined and desired_snapshot_name | length > 0

        - name: Create snapshot image filename with default date/time if necessary
          set_fact:
            snapshot_filename: "{{ disk_name[:-4] }}.{{ ansible_date_time.iso8601|replace(':', '.')}}.img"
          delegate_to: unraid
          when: desired_snapshot_name is not defined or desired_snapshot_name | length == 0

        - name: Create reflink for {{ vm_name }}
          command: cp --reflink -rf {{ disk_name }} {{ snapshot_filename }}
          args:
            chdir: "{{ source_directory }}/{{ vm_name }}"
          delegate_to: unraid

        - name: Check if reflink exists
          stat:
            path: "{{ source_directory }}/{{ vm_name }}/{{ snapshot_filename }}"
            get_checksum: False
          register: check_reflink_hd
          delegate_to: unraid

        - name: Backup HD(s) to DiskStation
          command: rsync --progress {{ snapshot_filename }} {{ repository_user }}@{{ hostvars['diskstation']['ansible_host'] }}:/{{ snapshot_repository_base_directory }}/{{ vm_name }}/
          args:
            chdir: "{{ source_directory }}/{{ vm_name }}"
          when: check_reflink_hd.stat.exists and 'use_local' not in ansible_run_tags
          delegate_to: unraid

        - name: Backup HD(s) to Local VM Folder as .tar
          command: tar cf {{ snapshot_filename }}.tar {{ snapshot_filename }}
          args:
            chdir: "{{ source_directory }}/{{ vm_name }}"
          when: check_reflink_hd.stat.exists and 'use_local' in ansible_run_tags
          delegate_to: unraid

        - name: Delete reflink file
          command: rm "{{ source_directory }}/{{ vm_name }}/{{ snapshot_filename }}"
          when: check_reflink_hd.stat.exists
          delegate_to: unraid

        - name: Start VM following snapshot transfer
          command: virsh start {{ vm_name }}
          tags: always
          delegate_to: unraid
      when: vm_name in hostvars['unraid']['confirmed_shutdown']
      tags: always

Here's a breakdown of the second play in the playbook—Unraid Snapshot Creation

Purpose:

This play automates the creation of VM disk snapshots on the Unraid server, backing them up to a destination NAS via rsync or creating local snapshots as TAR files, stored in the same directory as the original disk.

Hosts:

- Uses the dynamically created disks group made from the previous play. It can also still use the unraid host, which remains in memory from the previous play. gather_facts is set to 'no', as the members of the disks group aren't actual hosts we connect to (explained in the previous play).

Variables:

- Loads variables from the external file ./vars/snapshot-creation-vars.yml, specifically snapshot_repository_base_directory and repository_user.

Tasks:

Setup Minimal Facts:

Gathers a minimal fact subset from the unraid host to prepare for snapshot creation, mainly for the ansible_date_time.iso8601 variable.

Create Snapshot Filename:

Generates the snapshot filename from the ‘desired_snapshot_name’ variable if the user defined it. The name is also sanitized by replacing dashes with underscores and then removing any remaining non-word characters (anything other than letters, digits, and underscores).

Create Snapshot Image Filename with Default Date/Time if necessary:

The fallback for naming the snapshot. Generates the snapshot filename from the ISO 8601 date/time stamp (with colons replaced by dots) if the previous task didn't create one.

Create Snapshot (Reflink):

Uses a cp --reflink command to create a snapshot (reflink) of the specified disk in the source directory.

Verify Snapshot Creation:

Checks whether the snapshot (reflink) was successfully created in the target directory.

Backup Snapshot to DiskStation:

If the snapshot exists, it's transferred to the DiskStation NAS with rsync, executed via Ansible's command module. A downside is that there's no live progress shown in the Ansible shell output, which can be frustrating for large or numerous disk files. In my case, I watch the snapshot's file size grow in the DiskStation GUI to confirm it's still running. If you want better visibility, Ansible AWX may help. This task runs only if Ansible finds an existing reflink for the disk and the playbook WASN'T run with the use_local tag.

Backup HD(s) to Local VM Folder as .tar:

Alternatively, if the use_local tag is present, the snapshot is archived locally as a .tar file. This option lets you store the snapshot on the same server, in the same source disk folder, without needing external storage, so tags give you control over local versus remote backups. This task also runs only if Ansible finds an existing reflink for the disk.

Delete Reflink File:

Once the snapshot has been backed up, deletes the temporary reflink file on the unraid host.

Start VM Following Successful Snapshot Creation:

Starts the impacted VMs back up once the snapshot creation process completes.

Conditional Execution:

- The block only runs for a disk if its VM is confirmed to be shut down, i.e. its vm_name value is present in the unraid host's confirmed_shutdown list created in the previous play. The whole block is tagged with ‘always’, so every task in it runs regardless of the tags you pass, except where a task's own when condition skips it (the rsync and .tar backup tasks above).

Other things to point out:

All these tasks are executed on, or delegated_to, the unraid host itself. Nothing actually runs on the disks host group.

I opted to use .tar files to speed up both the creation and restoration of snapshots. Copying the snapshot locally with rsync or a traditional file copy took nearly as long as pushing it to the remote destination with rsync. Writing a .tar file into the same disk source folder reduced the time required by 25-50%.
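For reference, here is roughly what one disk "host" boils down to once the Jinja2 is rendered, as a hypothetical shell sketch (snapshot_disk and run are not part of the project). It defaults to a dry run that only prints the commands. It spells out cp --reflink=always, which is what a bare --reflink means in GNU cp: the copy fails instead of silently falling back to a full copy when the filesystem can't share extents (reflinks need something like Btrfs, or XFS with reflink support).

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the per-disk snapshot steps from the creation play.
# DRY_RUN=1 (the default here) only prints the commands; DRY_RUN=0 runs them.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Usage: snapshot_disk SOURCE_DIR VM DISK SNAPSHOT_FILE local|remote [RSYNC_DEST]
snapshot_disk() {
  local src_dir=$1 vm=$2 disk=$3 snap=$4 mode=$5 dest=${6:-}
  local dir="$src_dir/$vm"

  if [ "$mode" != local ] && [ -z "$dest" ]; then
    echo "remote mode needs RSYNC_DEST (e.g. user@host:/path)" >&2
    return 1
  fi

  # 1. Reflink copy: shares the disk's extents, so it is near-instant.
  run cp --reflink=always "$dir/$disk" "$dir/$snap" || return 1

  # 2. Local .tar next to the original disk, or push to the NAS with rsync.
  if [ "$mode" = local ]; then
    run tar -cf "$dir/$snap.tar" -C "$dir" "$snap" || return 1
  else
    run rsync --progress "$dir/$snap" "$dest/$vm/" || return 1
  fi

  # 3. Remove the temporary reflink either way.
  run rm -f "$dir/$snap"
}

snapshot_disk /mnt/cache/domains Rocky9-TESTNode vdisk1.img vdisk1.test_snapshot.img local
```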

Creating the Snapshots (Running the Playbook)

Finally we can move on to the most exciting piece, running the playbook. It's very simple to run. Just run the following command in the root of the playbook directory:

ansible-playbook create-snapshot-pb.yml -i defaults/inventory.yml

As long as your data and formatting are clean and all the required setup was done, you should see the playbook shut down the VMs (if necessary) and quickly reach the Backup task for the disks. That's where it will spend the majority of its time.

Alternatively, you can run this play with the use_local tag to save snapshots as .tar files locally. This approach is ideal for faster recovery in a lab environment, where you're actively building or testing. Instead of rolling back multiple changes on a server, it's quicker and simpler to erase the disk and restore from a local baseline snapshot.

ansible-playbook create-snapshot-pb.yml -i defaults/inventory.yml --tags 'use_local'

Successful output should look similar to the following:

PLAY [Unraid Snapshot Creation Prep] *****************************************************************************************************************

TASK [Gathering Facts] *******************************************************************************************************************************

ok: [unraid]

TASK [Get initial VM status] *************************************************************************************************************************

changed: [unraid] => (item={'vm_name': 'Rocky9-TESTNode', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/cache/domains'}]})

changed: [unraid] => (item={'vm_name': 'Rocky9-LabNode3', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/nvme_cache/domains'}]})

TASK [Create list of VMs that need shutdown] *********************************************************************************************************

ok: [unraid]

TASK [Shutdown VM(s)] ********************************************************************************************************************************

included: /mnt/c/Dev/Git/unraid-vm-snapshots/tasks/shutdown-vm.yml for unraid => (item=Rocky9-TESTNode)

included: /mnt/c/Dev/Git/unraid-vm-snapshots/tasks/shutdown-vm.yml for unraid => (item=Rocky9-LabNode3)

TASK [Shutdown VM - Rocky9-TESTNode] *****************************************************************************************************************

changed: [unraid]

TASK [Get VM status - Rocky9-TESTNode] ***************************************************************************************************************

changed: [unraid]

TASK [Shutdown VM - Rocky9-LabNode3] *****************************************************************************************************************

changed: [unraid]

TASK [Get VM status - Rocky9-LabNode3] ***************************************************************************************************************

FAILED - RETRYING: [unraid]: Get VM status - Rocky9-LabNode3 (5 retries left).

changed: [unraid]

TASK [Get VM status] *********************************************************************************************************************************

changed: [unraid] => (item={'vm_name': 'Rocky9-TESTNode', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/cache/domains'}]})

changed: [unraid] => (item={'vm_name': 'Rocky9-LabNode3', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/nvme_cache/domains'}]})

TASK [Create list to use for confirmation of VMs being shutdown] *************************************************************************************

ok: [unraid] => (item={'changed': True, 'stdout': 'shut', 'stderr': '', 'rc': 0, 'cmd': 'virsh list --all | grep "Rocky9-TESTNode" | awk \'{ print $3}\'', 'start': '2024-09-09 18:04:55.797046', 'end': '2024-09-09 18:04:55.809047', 'delta': '0:00:00.012001', 'msg': '', 'invocation': {'module_args': {'_raw_params': 'virsh list --all | grep "Rocky9-TESTNode" | awk \'{ print $3}\'', '_uses_shell': True, 'expand_argument_vars': True, 'stdin_add_newline': True, 'strip_empty_ends': True, 'argv': None, 'chdir': None, 'executable': None, 'creates': None, 'removes': None, 'stdin': None}}, 'stdout_lines': ['shut'], 'stderr_lines': [], 'failed': False, 'item': {'vm_name': 'Rocky9-TESTNode', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/cache/domains'}]}, 'ansible_loop_var': 'item'})

ok: [unraid] => (item={'changed': True, 'stdout': 'shut', 'stderr': '', 'rc': 0, 'cmd': 'virsh list --all | grep "Rocky9-LabNode3" | awk \'{ print $3}\'', 'start': '2024-09-09 18:04:57.638402', 'end': '2024-09-09 18:04:57.650150', 'delta': '0:00:00.011748', 'msg': '', 'invocation': {'module_args': {'_raw_params': 'virsh list --all | grep "Rocky9-LabNode3" | awk \'{ print $3}\'', '_uses_shell': True, 'expand_argument_vars': True, 'stdin_add_newline': True, 'strip_empty_ends': True, 'argv': None, 'chdir': None, 'executable': None, 'creates': None, 'removes': None, 'stdin': None}}, 'stdout_lines': ['shut'], 'stderr_lines': [], 'failed': False, 'item': {'vm_name': 'Rocky9-LabNode3', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/nvme_cache/domains'}]}, 'ansible_loop_var': 'item'})

TASK [Add host to group 'disks' with variables] ******************************************************************************************************

changed: [unraid] => (item=[{'vm_name': 'Rocky9-TESTNode', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/cache/domains'}]}, {'disk_name': 'vdisk1.img', 'source_directory': '/mnt/cache/domains'}])

changed: [unraid] => (item=[{'vm_name': 'Rocky9-LabNode3', 'disks_to_snapshot': [{'disk_name': 'vdisk1.img', 'source_directory': '/mnt/nvme_cache/domains'}]}, {'disk_name': 'vdisk1.img', 'source_directory': '/mnt/nvme_cache/domains'}])

PLAY [Unraid Snapshot Creation] **********************************************************************************************************************

TASK [setup] *****************************************************************************************************************************************

ok: [Rocky9-TESTNode-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

ok: [Rocky9-LabNode3-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

TASK [Create snapshot image filename] ****************************************************************************************************************

ok: [Rocky9-TESTNode-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

ok: [Rocky9-LabNode3-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

TASK [Create reflink for Rocky9-TESTNode] ************************************************************************************************************

changed: [Rocky9-LabNode3-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

changed: [Rocky9-TESTNode-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

TASK [Check if reflink exists] ***********************************************************************************************************************

ok: [Rocky9-LabNode3-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

ok: [Rocky9-TESTNode-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

TASK [Backup HD1 to DiskStation] *********************************************************************************************************************

changed: [Rocky9-TESTNode-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

changed: [Rocky9-LabNode3-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

TASK [Delete reflink file] ***************************************************************************************************************************

changed: [Rocky9-LabNode3-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

changed: [Rocky9-TESTNode-vdisk1 -> unraid({{ lookup('env', 'UNRAID_IP_ADDRESS') }})]

PLAY RECAP *******************************************************************************************************************************************

Rocky9-LabNode3-vdisk1 : ok=6 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Rocky9-TESTNode-vdisk1 : ok=6 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

unraid : ok=12 changed=7 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

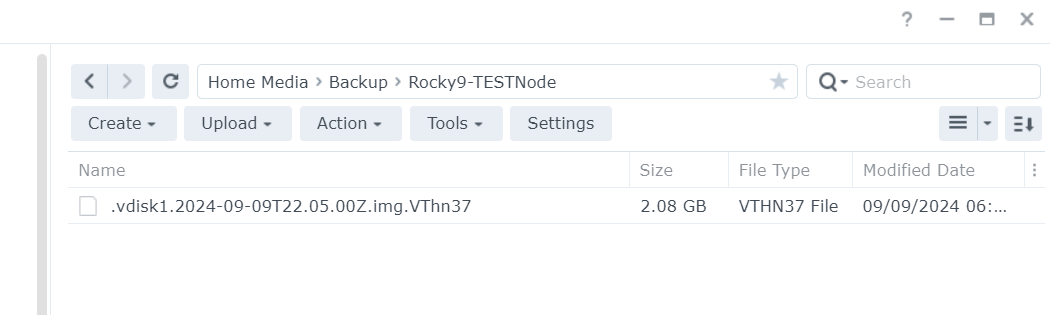

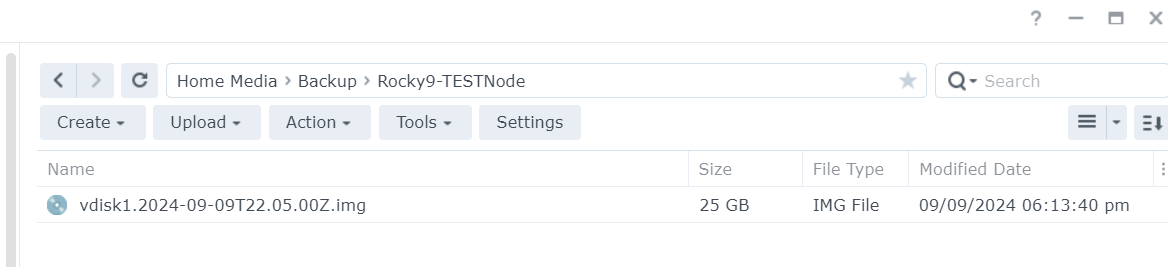

From DiskStation:

Closing Thoughts

Well, that was fun. Creating and backing up snapshots is incredibly useful, especially in a home lab where tools might be less advanced. I plan to leverage this for more complex automation (Kubernetes anyone?), since restoring from a snapshot is far simpler than undoing multiple changes. Again, the main drawback is that running the raw rsync command through Ansible gives no progress visibility. Pushing backups to the NAS can also be slow when dealing with hundreds of GBs or more: it takes roughly 4-5 minutes to push a 25 GB image file over a 1 Gbps connection.
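For a sense of scale, the theoretical floor for a transfer is the size in GB times 8, divided by the link speed in Gbit/s. The helper below is a hypothetical sketch that uses decimal units and ignores protocol and disk overhead. A 25 GB image works out to 200 seconds (about 3 min 20 s), so the observed 4-5 minutes corresponds to roughly 0.67-0.83 Gbit/s of effective throughput.

```bash
#!/usr/bin/env bash
# Hypothetical helper: theoretical minimum transfer time in seconds,
# using decimal units (1 GB = 8 Gbit) and ignoring protocol/disk overhead.
transfer_seconds() {
  local size_gb=$1 link_gbps=$2
  awk -v gb="$size_gb" -v gbps="$link_gbps" 'BEGIN { printf "%.0f\n", gb * 8 / gbps }'
}

transfer_seconds 25 1    # 200 (about 3 min 20 s)
transfer_seconds 100 1   # 800 (about 13 min 20 s)
```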

What’s next?

I have two more pieces I will hopefully be adding to this series -

Restoring from a snapshot (whether it's a specific snapshot or the latest).

Cleaning up old snapshots on your storage, in my case the DiskStation.

Down the road I may look at updating this using the rclone utility instead of rsync. Also might turn all this into a published Ansible role.

You can find the code that goes along with this post here (GitHub).

Thoughts, questions, and comments are appreciated. Please follow me here on Hashnode or connect with me on LinkedIn.

Thank you for reading fellow techies!
