Day 34/40 Days of K8s: Upgrading a Multi Node Kubernetes Cluster With Kubeadm !!

Gopi Vivek Manne


Kubernetes Cluster Upgrade Guide

❓Understanding Kubernetes Versions

Version format: major.minor.patch (ex: 1.30.2)

Kubernetes supports only the latest 3 minor versions.

Upgrades are done one minor version at a time; skipping a minor version is not supported when upgrading with kubeadm.
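To make the one-minor-version rule concrete, here is a minimal sketch that splits a version string and checks whether a planned upgrade stays within one minor step. The function names are hypothetical, the versions 1.29.6 and 1.28.3 are example values only, and the check assumes both versions share the same major number.

```shell
#!/bin/sh
# Print the minor component of a version such as 1.30.2 or v1.30.2.
minor_of() {
  v=${1#v}            # drop an optional leading "v"
  rest=${v#*.}        # strip the "major." prefix
  echo "${rest%%.*}"  # keep everything before the next "."
}

# Print how many minor versions separate the current ($1) and target ($2) versions.
# Assumption: both versions have the same major number.
minor_gap() {
  echo $(( $(minor_of "$2") - $(minor_of "$1") ))
}

# Succeed only when the target is the same minor version or exactly one ahead.
is_supported_step() {
  gap=$(minor_gap "$1" "$2")
  [ "$gap" -ge 0 ] && [ "$gap" -le 1 ]
}

if is_supported_step 1.29.6 1.30.4; then
  echo "1.29.6 -> 1.30.4: one minor step, ok"
fi
if ! is_supported_step 1.28.3 1.30.4; then
  echo "1.28.3 -> 1.30.4: skips a minor version, upgrade to 1.29 first"
fi
```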

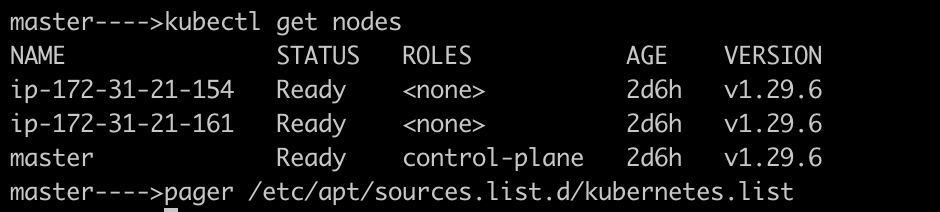

Example Cluster

Let's assume we have a multi-node kubeadm cluster running on EC2 instances: 1 master node (control plane) and 2 worker nodes.

In production, we would generally run multiple master nodes to ensure high availability.

Upgrading Order

Primary control plane

Other control plane nodes (if any)

Worker nodes

For additional details, see the official Kubernetes documentation: https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

Why This Order?

Ensures at least one master is always running to maintain high availability and not disrupt cluster management functions.

Reason: If the control plane is down, all cluster management and administrative activities halt because the controller manager, API server, and scheduler are unavailable, preventing management of worker nodes. However, this does not affect the running applications.

Upgrading Nodes

Process for Each Node:

Drain the node

Evicts all pods from the node.

Reschedules pods on other nodes.

Marks node as cordoned (unavailable for scheduling).

Upgrade the node

Uncordon the node (make available for scheduling)
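The drain, upgrade, uncordon cycle above can be sketched as a small script. This is only an illustration: `run`, `upgrade_cycle`, and `upgrade_node_packages` are hypothetical helper names, the package upgrade is left as a placeholder, and the script defaults to a dry run that prints the kubectl commands instead of executing them.

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0 to execute.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical placeholder for the package upgrade steps on the node itself.
upgrade_node_packages() {
  echo "+ (upgrade kubeadm, kubelet and kubectl on $1)"
}

# Drain, upgrade, and uncordon a single node, in that order.
upgrade_cycle() {
  node=$1
  run kubectl drain "$node" --ignore-daemonsets   # evict pods and cordon the node
  upgrade_node_packages "$node"
  run kubectl uncordon "$node"                    # make the node schedulable again
}

upgrade_cycle ip-172-31-21-161
```

Keeping the dry run as the default makes it easy to review the exact commands for a node before anything touches the cluster.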

Upgrade Strategies

All at Once

Upgrade all worker nodes simultaneously

Pros: Quick and easy

Cons: Potential downtime of application, not ideal for production setup.

Rolling Update

Upgrade nodes one after another.

Pros: Minimal to no downtime, as other nodes remain available.

Cons: Takes longer to complete, though with little to no downtime.

Blue/Green

Create a new/identical infrastructure with the upgraded version

Join new worker nodes to control plane

Remove old infrastructure

Pros: Quick, no downtime, seamless (especially when we are using public clouds)

Cons: Requires additional resources temporarily (hard in case of private cloud).

Component Version Compatibility

Control plane components (Scheduler, Controller Manager): Can be up to one minor version behind the API server.

Kubelet: Can be up to three minor versions behind the API server. kubectl: Supported within one minor version (older or newer) of the API server.

Best practice: Keep all components at the same version
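A compatibility rule of this kind can be checked mechanically. The sketch below is a simplified illustration, not an official tool: `minor_of` and `within_skew` are hypothetical names, the allowed skew is passed in as a parameter because it depends on the component, and the check only covers the "older by at most N minor versions" case.

```shell
#!/bin/sh
# Print the minor component of a version such as v1.30.4.
minor_of() {
  echo "${1#v}" | cut -d. -f2
}

# Succeed when the component ($2) is not newer than the API server ($1)
# and is at most $3 minor versions older.
within_skew() {
  api=$(minor_of "$1")
  comp=$(minor_of "$2")
  max=$3
  diff=$(( api - comp ))
  [ "$diff" -ge 0 ] && [ "$diff" -le "$max" ]
}

# Example values only; v1.29.6 and v1.28.3 are illustrative versions.
if within_skew v1.30.4 v1.29.6 1; then
  echo "v1.29.6 against API server v1.30.4: within a skew of 1"
fi
if ! within_skew v1.30.4 v1.28.3 1; then
  echo "v1.28.3 against API server v1.30.4: outside a skew of 1"
fi
```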

Remember: Always backup your cluster data before performing any upgrades!

Hands-on

Ensure we're using the community-owned package repositories (pkgs.k8s.io); we need to enable the package repository for the desired Kubernetes minor release.

To verify that the Kubernetes package repositories are in use, see the documentation: https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/change-package-repository/

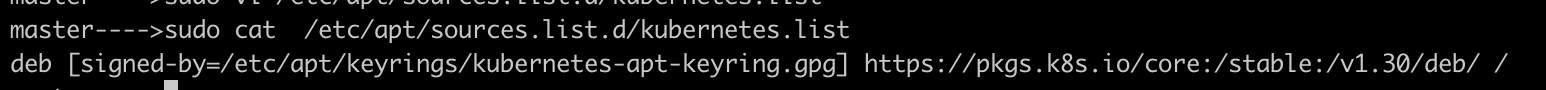

Switching to another Kubernetes package repository

Do this step whenever you upgrade from one Kubernetes minor release to another, so that the packages of the desired minor version become available.

sudo vi /etc/apt/sources.list.d/kubernetes.list

I edited the version in the repository URL in this file to v1.30.
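The same edit can be scripted instead of done by hand in vi. The sketch below is an assumption-laden example: `switch_k8s_repo` is a hypothetical helper, the sample line follows the pkgs.k8s.io apt source format with v1.29 assumed as the starting release, and the demo works on a temporary copy rather than the real file.

```shell
#!/bin/sh
# Rewrite the minor release in a pkgs.k8s.io apt source file.
# $1: path to the list file, $2: target minor release such as v1.30
switch_k8s_repo() {
  file=$1
  target=$2
  tmp=$(mktemp)
  # Replace only the vX.Y segment that follows "core:/stable:/".
  sed -E "s#(pkgs\.k8s\.io/core:/stable:/)v[0-9]+\.[0-9]+/#\1${target}/#" "$file" > "$tmp" \
    && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demo on a temporary copy; the starting release v1.29 is an assumption.
demo=$(mktemp)
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /' > "$demo"
switch_k8s_repo "$demo" v1.30
cat "$demo"
rm -f "$demo"
```

Against the real file at /etc/apt/sources.list.d/kubernetes.list the function would need to run with root privileges, and it is worth checking the result with cat before running apt update.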

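Determine which version to upgrade to by listing the available kubeadm packages with sudo apt update && sudo apt-cache madison kubeadm

The output shows that v1.30 packages are now available to upgrade to.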
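When the package list is long, the newest patch release can be picked out mechanically. This is a minimal sketch that assumes input in the format printed by apt-cache madison kubeadm (package, version, and repository separated by "|"); `latest_madison_version` is a hypothetical helper, the sample lines are illustrative rather than captured output, and `sort -V` assumes GNU coreutils as found on Ubuntu.

```shell
#!/bin/sh
# Read `apt-cache madison <package>` output on stdin and print the highest version.
latest_madison_version() {
  awk -F'|' 'NF >= 2 { gsub(/[ \t]/, "", $2); print $2 }' | sort -V | tail -n 1
}

# Illustrative sample input; on a real node use:
#   apt-cache madison kubeadm | latest_madison_version
latest_madison_version <<'EOF'
   kubeadm | 1.30.4-1.1 | https://pkgs.k8s.io/core:/stable:/v1.30/deb  Packages
   kubeadm | 1.30.3-1.1 | https://pkgs.k8s.io/core:/stable:/v1.30/deb  Packages
   kubeadm | 1.30.2-1.1 | https://pkgs.k8s.io/core:/stable:/v1.30/deb  Packages
EOF
```

The printed value is the full package version string, which is the form that apt-get install expects after the "=" sign.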

Upgrading primary Control plane node

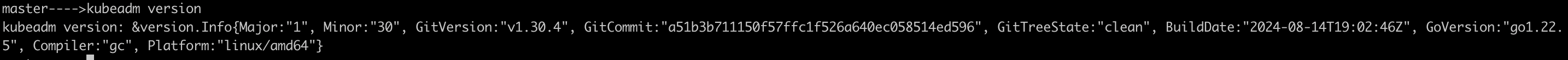

Verify the kubeadm version:

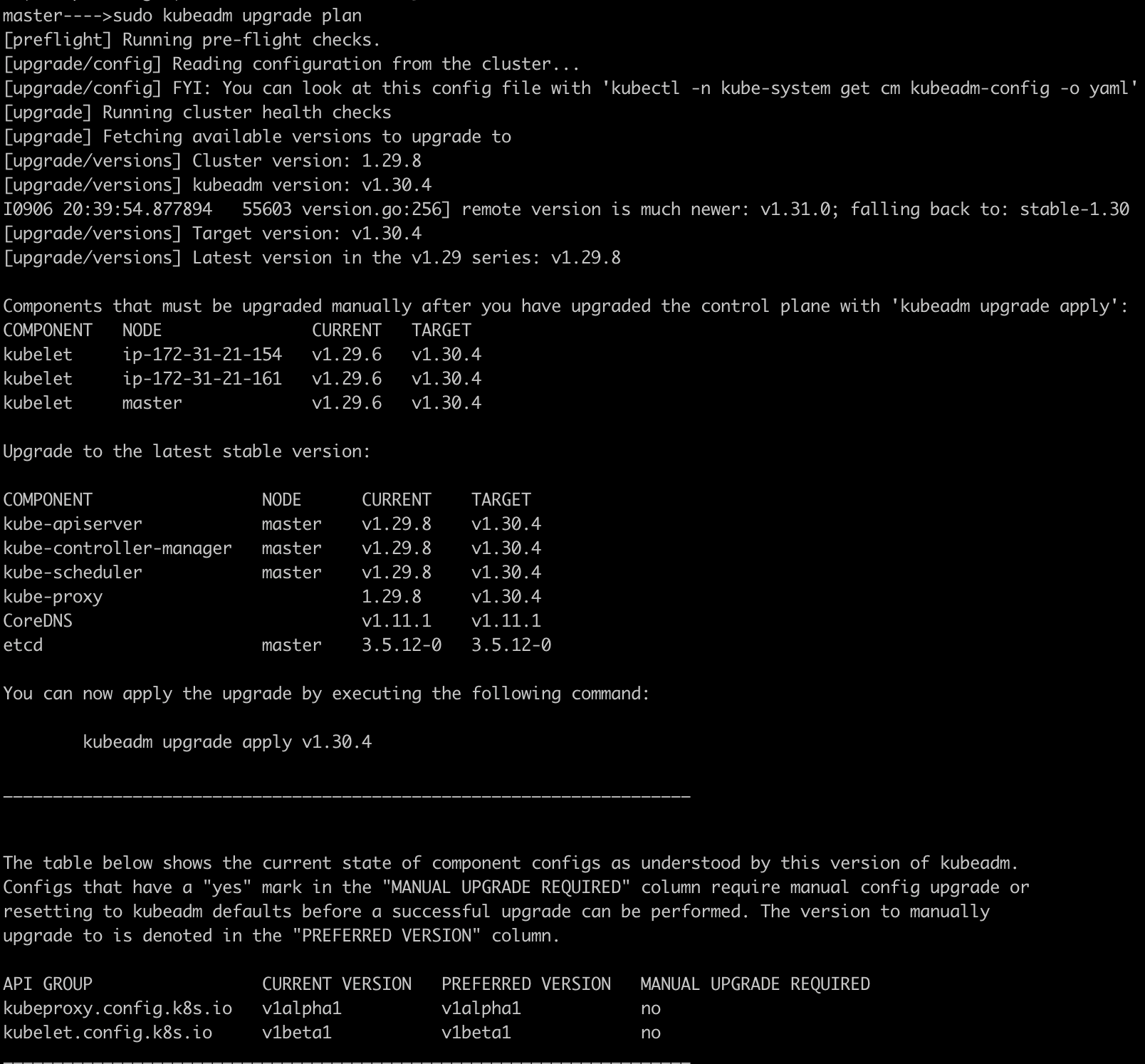

Verify the upgrade plan

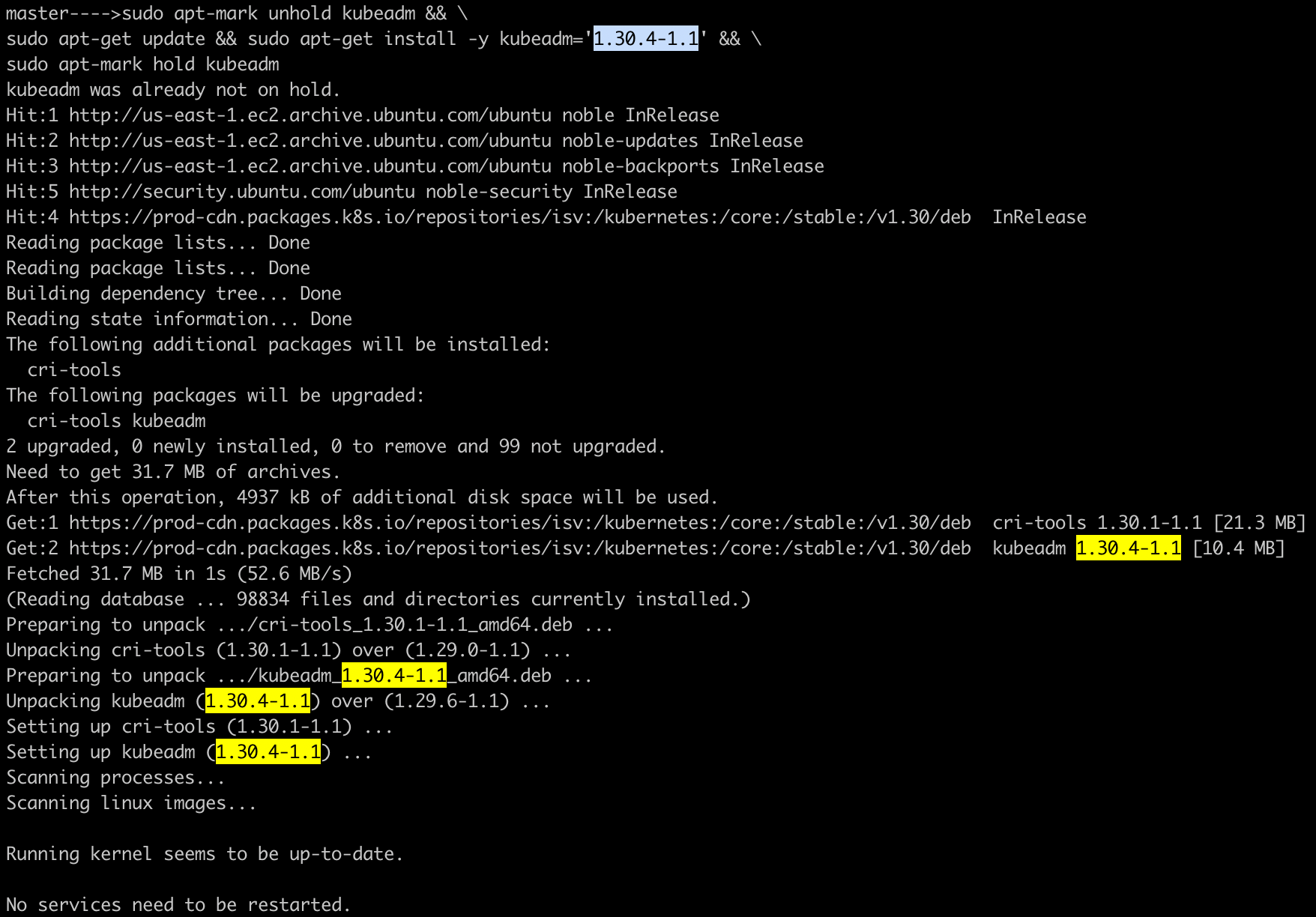

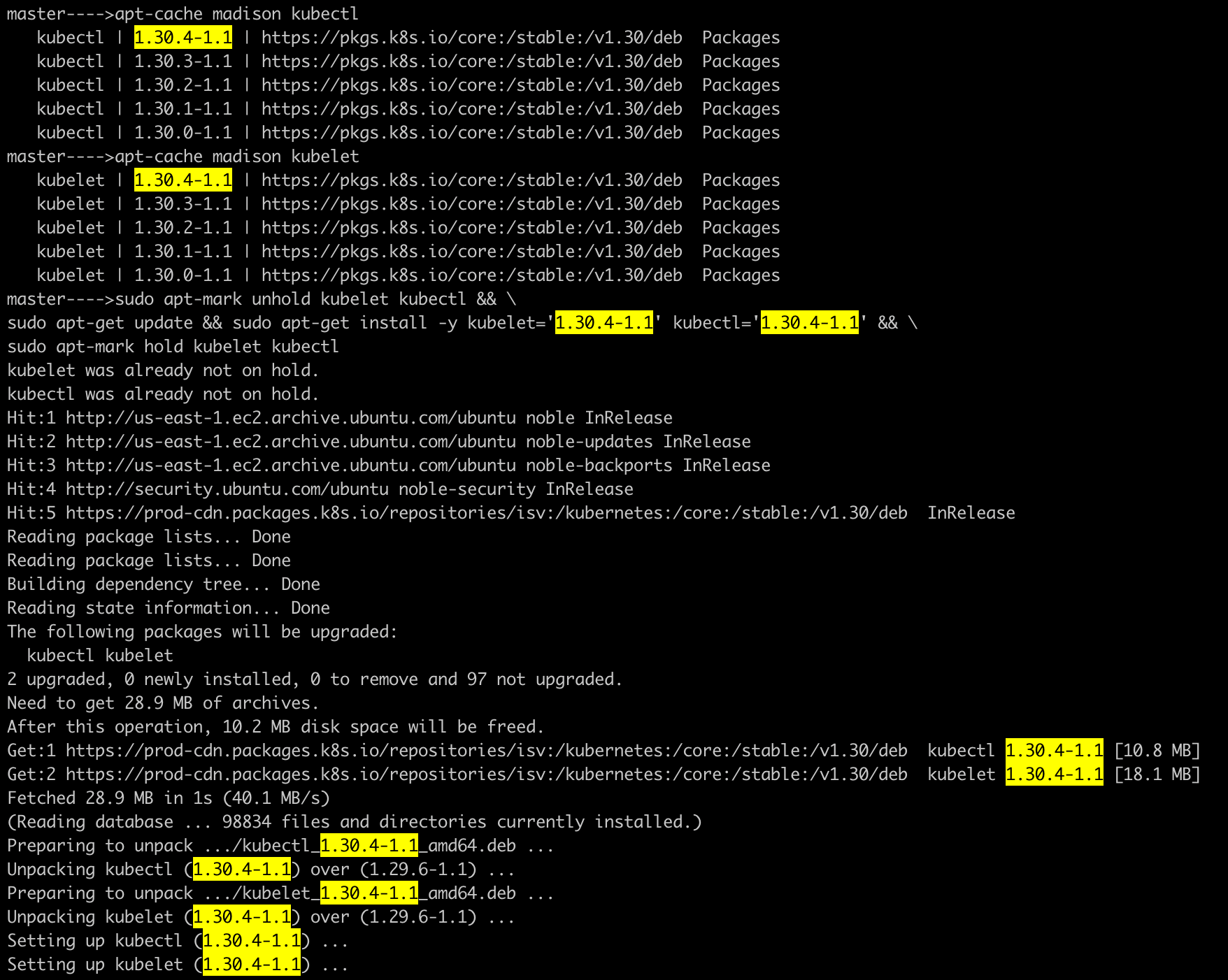

Now apply the upgrade to the control plane with a command like:

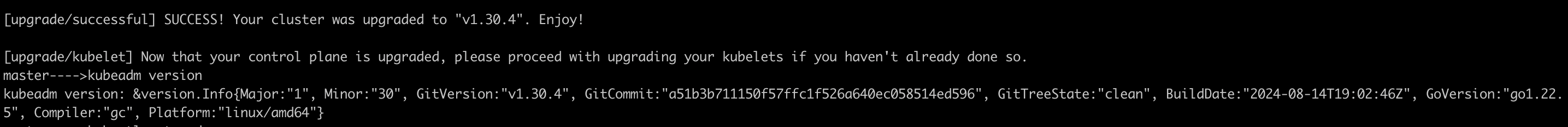

sudo kubeadm upgrade apply v1.30.4

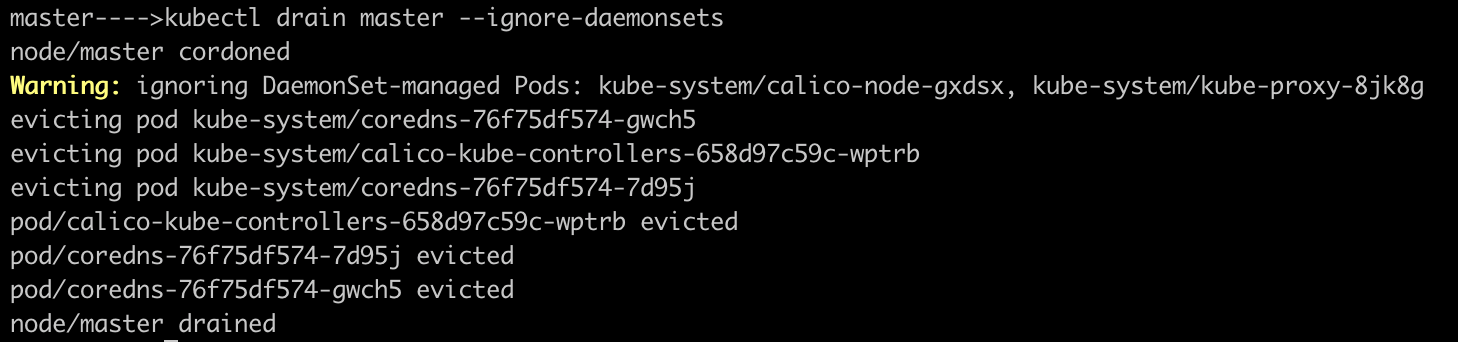
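Since the control plane steps above are shown mostly as screenshots, here is a sketch that prints the likely command sequence as text. It is pieced together from the worker-node commands later in this post plus kubeadm upgrade plan and kubeadm upgrade apply; `print_control_plane_steps` is a hypothetical helper and it only prints, it does not run anything.

```shell
#!/bin/sh
# Print the kubeadm upgrade commands for the primary control plane node.
# $1: Kubernetes version without the "v" prefix (for example 1.30.4)
# $2: apt package version (for example 1.30.4-1.1)
print_control_plane_steps() {
  version=$1
  pkg=$2
  cat <<EOF
sudo apt-mark unhold kubeadm
sudo apt-get update
sudo apt-get install -y kubeadm='${pkg}'
sudo apt-mark hold kubeadm
kubeadm version
sudo kubeadm upgrade plan
sudo kubeadm upgrade apply v${version}
EOF
}

print_control_plane_steps 1.30.4 1.30.4-1.1
```

Printing the sequence first gives a chance to confirm the version strings before running each command by hand on the control plane node.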

Drain the node: Prepare the node for maintenance by marking it unschedulable and evicting the workloads.

Upgrade the kubelet and kubectl

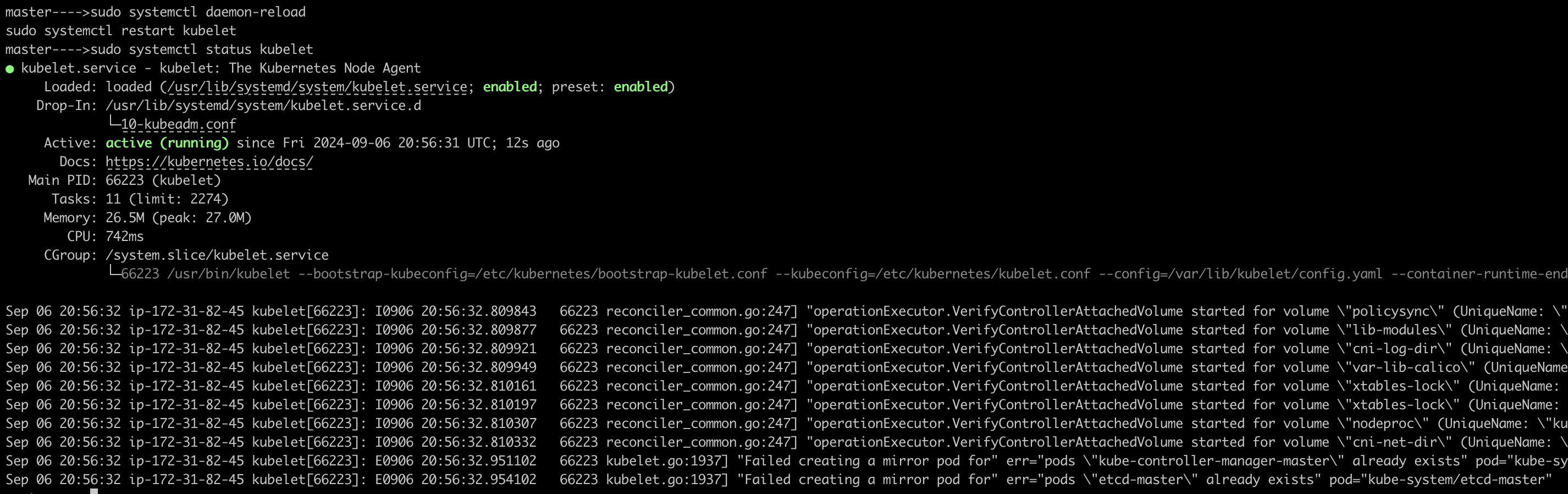

Restart the kubelet

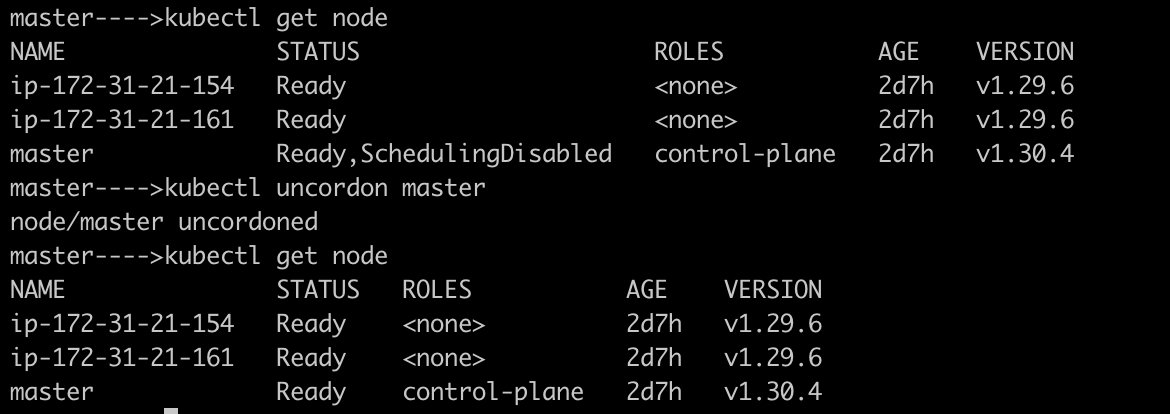

Uncordon the node

This clearly shows that the master node has been upgraded to the new version v1.30.4 and then uncordoned so that pods can be scheduled on it again.

Upgrading worker nodes

Although there are different strategies for upgrading nodes, I chose a rolling upgrade here.

Repeat the same sequence of steps on each worker node, one node at a time.

Run the following commands in sequence when upgrading a worker node:

kubectl get nodes

sudo vi /etc/apt/sources.list.d/kubernetes.list

sudo apt update && sudo apt-cache madison kubeadm

kubeadm version

sudo apt-mark unhold kubeadm && sudo apt-get update && sudo apt-get install -y kubeadm='1.30.4-1.1' && sudo apt-mark hold kubeadm

kubeadm version

sudo kubeadm upgrade node

kubectl drain ip-172-31-21-161 --ignore-daemonsets

sudo apt-mark unhold kubelet kubectl && sudo apt-get update && sudo apt-get install -y kubelet='1.30.4-1.1' kubectl='1.30.4-1.1' && sudo apt-mark hold kubelet kubectl

sudo systemctl daemon-reload

sudo systemctl restart kubelet

sudo systemctl status kubelet

kubelet --version

kubectl uncordon ip-172-31-21-161

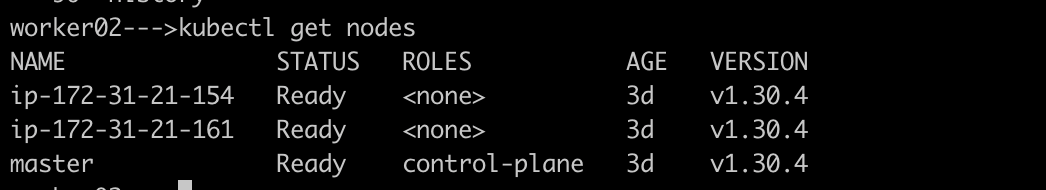

kubectl get nodes

The output above clearly shows that all our nodes have been successfully upgraded to version v1.30.4.
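The same check can be done without reading the table by eye. This minimal sketch assumes the default column layout of kubectl get nodes (NAME, STATUS, ROLES, AGE, VERSION); `all_nodes_at` is a hypothetical helper, and the node names other than ip-172-31-21-161 are made up for the demo.

```shell
#!/bin/sh
# Read `kubectl get nodes --no-headers` output on stdin and fail if any
# node reports a VERSION (5th column) different from the target in $1.
all_nodes_at() {
  awk -v want="$1" '
    $5 != want { bad = 1; print "not upgraded: " $1 " (" $5 ")" }
    END { exit (bad ? 1 : 0) }
  '
}

# Illustrative sample input; on a real cluster use:
#   kubectl get nodes --no-headers | all_nodes_at v1.30.4
if all_nodes_at v1.30.4 <<'EOF'
ip-172-31-21-161   Ready   <none>          40d   v1.30.4
worker-2           Ready   <none>          40d   v1.30.4
master-1           Ready   control-plane   40d   v1.30.4
EOF
then
  echo "all nodes are at v1.30.4"
fi
```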

Common Questions

Why upgrade kubeadm on worker nodes once we already did it on the control plane?

It's important to upgrade kubeadm on the worker nodes because it ensures compatibility with the control plane by updating the necessary configuration on each worker node. Once compatibility is confirmed, you can proceed to upgrade kubelet and kubectl after draining the node.

Why run sudo kubeadm upgrade node on worker nodes?

This command upgrades the local kubelet configuration on the worker node, so the node stays in sync with the upgraded control plane and runs the desired Kubernetes version without disrupting your applications.

#Kubernetes #Kubeadm #UpgradingCluster #Multinodecluster #40DaysofKubernetes #CKASeries
