From Serverless to Kubernetes Part - 1

Sangam Biradar

Serverless has emerged as a popular way to deploy simple applications, making it easier for startups to build solutions with fewer resources. However, most serverless platforms are vendor-specific, which can limit their usefulness for modern cloud-native and AI applications. This is where projects like Knative step in. Knative combines the power of serverless with Kubernetes, allowing developers to focus solely on writing serverless functions while benefiting from Kubernetes’ robust infrastructure.

In this blog, we’ll explore how to install Knative, understand its components, and deploy a simple Knative application on Kubernetes.

Background of Knative Project

Knative was originally created by Google, with contributions from over 50 different companies. It delivers an essential set of components for building and running serverless applications on Kubernetes. Knative is a Cloud Native Computing Foundation (CNCF) incubating project.

Create k8s Cluster Locally with Kind

- Install kind locally

Verify that kind is installed:

kind --version

Kind (Kubernetes in Docker) uses Docker to create a Kubernetes cluster, so check that Docker is available too:

docker --version

Here is the kind configuration for the cluster. It includes the extraPortMappings that the Kourier ingress needs later.

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  image: kindest/node:v1.31.0@sha256:53df588e04085fd41ae12de0c3fe4c72f7013bba32a20e7325357a1ac94ba865
  extraPortMappings:
  - containerPort: 31080 # expose port 31080 of the node on port 80 of the host, later used by the Kourier ingress
    hostPort: 80
  - containerPort: 31443
    hostPort: 443

Save the above manifest as kind-knative-cluster.yaml and create the cluster:

kind create cluster --name knative-cluster --config kind-knative-cluster.yaml

If a cluster with that name already exists, delete it and run the create command again:

kind delete cluster --name knative-cluster

Get the cluster info and check that the context points to the right cluster:

kubectl cluster-info --context kind-knative-cluster

kubectl config get-contexts

Install Knative Serving Component

kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.15.2/serving-crds.yaml

kubectl wait --for=condition=Established --all crd

kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.15.2/serving-core.yaml

kubectl wait pod --timeout=-1s --for=condition=Ready -l '!job-name' -n knative-serving > /dev/null

Install Kourier

kubectl apply -f https://github.com/knative/net-kourier/releases/download/knative-v1.15.1/kourier.yaml

kubectl wait pod --timeout=-1s --for=condition=Ready -l '!job-name' -n kourier-system

kubectl wait pod --timeout=-1s --for=condition=Ready -l '!job-name' -n knative-serving

Set up Magic DNS

EXTERNAL_IP="127.0.0.1"

KNATIVE_DOMAIN="$EXTERNAL_IP.nip.io"

echo KNATIVE_DOMAIN=$KNATIVE_DOMAIN

dig $KNATIVE_DOMAIN

kubectl patch configmap -n knative-serving config-domain -p "{\"data\": {\"$KNATIVE_DOMAIN\": \"\"}}"

Check the domain in the config-domain ConfigMap:

kubectl describe configmaps config-domain -n knative-serving
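The Magic DNS step boils down to two string operations: appending .nip.io to the external IP, and building a JSON patch keyed by that domain. Here is a minimal Go sketch of the same logic. The helper names knativeDomain, domainPatch, and serviceURL are hypothetical, and serviceURL assumes the name.namespace.domain layout that Knative uses by default.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// knativeDomain mirrors KNATIVE_DOMAIN="$EXTERNAL_IP.nip.io" (hypothetical helper).
func knativeDomain(externalIP string) string {
	return externalIP + ".nip.io"
}

// domainPatch builds the JSON body that is passed to
// `kubectl patch configmap -n knative-serving config-domain -p ...`.
func domainPatch(domain string) (string, error) {
	patch := map[string]map[string]string{"data": {domain: ""}}
	b, err := json.Marshal(patch)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

// serviceURL assumes the default name.namespace.domain URL layout.
func serviceURL(name, namespace, domain string) string {
	return fmt.Sprintf("http://%s.%s.%s", name, namespace, domain)
}

func main() {
	domain := knativeDomain("127.0.0.1")
	patch, err := domainPatch(domain)
	if err != nil {
		panic(err)
	}
	fmt.Println(patch)
	fmt.Println(serviceURL("hello", "default", domain))
}
```

Because nip.io resolves any name ending in 127.0.0.1.nip.io to 127.0.0.1, every service URL built this way points back at the local machine, where the kind port mappings pick it up.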

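Expose the Kourier gateway, the networking layer for Knative Serving, through a NodePort Service. The node ports match the extraPortMappings in the kind configuration: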

apiVersion: v1
kind: Service
metadata:
  name: kourier-ingress
  namespace: kourier-system
  labels:
    networking.knative.dev/ingress-provider: kourier
spec:
  type: NodePort
  selector:
    app: 3scale-kourier-gateway
  ports:
  - name: http2
    nodePort: 31080
    port: 80
    targetPort: 8080
  - name: https
    nodePort: 31443
    port: 443
    targetPort: 8443

Kourier is an Ingress for Knative Serving. Kourier is a lightweight alternative for the Istio ingress as its deployment consists only of an Envoy proxy and a control plane for it.

Save the Service manifest above as kourier.yaml and apply it:

kubectl apply -f kourier.yaml

Configure Knative Serving to use the proper "ingress.class"

kubectl patch configmap/config-network \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"ingress.class":"kourier.ingress.networking.knative.dev"}}'

kubectl describe configmaps config-network -n knative-serving

Check that the pods are up and running:

kubectl get pods -n knative-serving

kubectl get pods -n kourier-system

kubectl get svc -n kourier-system

Install the kn CLI

brew install knative/client/kn

Run your first hello world serverless function

kn service create hello \
  --image gcr.io/knative-samples/helloworld-go \
  --port 8080 \
  --env TARGET=Knative

Get Service URL

SERVICE_URL=$(kubectl get ksvc hello -o jsonpath='{.status.url}')

echo $SERVICE_URL

Curl the URL in a new terminal

curl $SERVICE_URL

Watch the pod status:

kubectl get pod -l serving.knative.dev/service=hello -w

# NAME READY STATUS RESTARTS AGE

# hello-00001-deployment-659dfd67fb-5ps9x 2/2 Running 0 90s

# hello-00001-deployment-659dfd67fb-5ps9x 2/2 Terminating 0 2m25s

# hello-00001-deployment-659dfd67fb-5ps9x 1/2 Terminating 0 2m27s

# hello-00001-deployment-659dfd67fb-wgnkj 0/2 Pending 0 0s

# hello-00001-deployment-659dfd67fb-wgnkj 0/2 Pending 0 0s

# hello-00001-deployment-659dfd67fb-wgnkj 0/2 ContainerCreating 0 0s

# hello-00001-deployment-659dfd67fb-wgnkj 1/2 Running 0 1s

# hello-00001-deployment-659dfd67fb-wgnkj 2/2 Running 0 1s

# hello-00001-deployment-659dfd67fb-5ps9x 0/2 Terminating 0 2m55s

# hello-00001-deployment-659dfd67fb-5ps9x 0/2 Terminating 0 2m56s

# hello-00001-deployment-659dfd67fb-5ps9x 0/2 Terminating 0 2m56s

# hello-00001-deployment-659dfd67fb-wgnkj 2/2 Terminating 0 88s

# hello-00001-deployment-659dfd67fb-npmr5 0/2 Pending 0 0s

# hello-00001-deployment-659dfd67fb-npmr5 0/2 Pending 0 0s

# hello-00001-deployment-659dfd67fb-npmr5 0/2 ContainerCreating 0 0s

# hello-00001-deployment-659dfd67fb-npmr5 1/2 Running 0 2s

# hello-00001-deployment-659dfd67fb-npmr5 2/2 Running 0 2s

# hello-00001-deployment-659dfd67fb-wgnkj 1/2 Terminating 0 90s

As the pod list above shows, idle pods are terminated, and when you access the URL again a new container is created in the background almost instantly.

Another way to install Knative quickly is the quickstart plugin:

brew install knative-extensions/kn-plugins/quickstart

kn quickstart kind

Let's build our newsfeed Knative application

tree
.
├── README.md
├── service.yaml
├── servingcontainer
│   ├── Dockerfile
│   ├── go.mod
│   └── servingcontainer.go
└── sidecarcontainer
    ├── Dockerfile
    ├── go.mod
    ├── go.sum
    └── sidecarcontainer.go

Here we have two functions, servingcontainer.go and sidecarcontainer.go, each with its own dependencies and Dockerfile.

function 1 - servingcontainer

tree

.

├── Dockerfile

├── go.mod

└── servingcontainer.go

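package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
)

func handler(w http.ResponseWriter, r *http.Request) {
	log.Println("serving container received a request.")
	// Call the sidecar, which shares the pod's network namespace.
	res, err := http.Get("http://127.0.0.1:8882")
	if err != nil {
		// Report the failure to the client instead of exiting the whole server.
		log.Println("failed to reach sidecar:", err)
		http.Error(w, "sidecar unavailable", http.StatusBadGateway)
		return
	}
	defer res.Body.Close()
	resp, err := io.ReadAll(res.Body)
	if err != nil {
		log.Println("failed to read sidecar response:", err)
		http.Error(w, "failed to read sidecar response", http.StatusBadGateway)
		return
	}
	fmt.Fprintln(w, string(resp))
}

func main() {
	log.Print("serving container started...")
	http.HandleFunc("/", handler)
	log.Fatal(http.ListenAndServe(":8881", nil))
}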

This is a simple HTTP server that listens on port 8881 and forwards incoming requests to another service running at http://127.0.0.1:8882. It retrieves the response from that service and returns it to the original client.
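The forwarding pattern can be tried out without a cluster. The following sketch is not the code from the repository: forwardHandler and fetchThroughProxy are hypothetical names, and both the "sidecar" and the "serving container" are in-process test servers from net/http/httptest listening on random local ports.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// forwardHandler returns a handler that fetches upstreamURL and relays
// the upstream body to the caller.
func forwardHandler(upstreamURL string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		res, err := http.Get(upstreamURL)
		if err != nil {
			http.Error(w, "upstream unavailable", http.StatusBadGateway)
			return
		}
		defer res.Body.Close()
		// Stream the upstream body straight through; a copy error is ignored
		// here because the response has already started.
		_, _ = io.Copy(w, res.Body)
	}
}

// fetchThroughProxy starts a fake sidecar and a forwarding server in-process,
// then returns what a client receives from the forwarding server.
func fetchThroughProxy(sidecarBody string) (string, error) {
	sidecar := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, sidecarBody)
	}))
	defer sidecar.Close()

	proxy := httptest.NewServer(forwardHandler(sidecar.URL))
	defer proxy.Close()

	res, err := http.Get(proxy.URL)
	if err != nil {
		return "", err
	}
	defer res.Body.Close()
	body, err := io.ReadAll(res.Body)
	return string(body), err
}

func main() {
	body, err := fetchThroughProxy("hello from sidecar")
	if err != nil {
		panic(err)
	}
	fmt.Println(body)
}
```

In the real pod the upstream URL is fixed to http://127.0.0.1:8882, which works because both containers share the pod's network namespace.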

Here is the Dockerfile:

FROM golang:1.23 AS builder

ARG TARGETOS

ARG TARGETARCH

# Create and change to the app directory.

WORKDIR /app

# Retrieve application dependencies using go modules.

COPY go.* ./

RUN go mod download

# Copy local code to the container image.

COPY . ./

# Build the binary.

RUN CGO_ENABLED=0 GOOS=${TARGETOS} GOARCH=${TARGETARCH} go build -mod=readonly -v -o servingcontainer

# Use the official Alpine image for a lean production container.

FROM alpine:3

RUN apk add --no-cache ca-certificates

# Copy the binary to the production image from the builder stage.

COPY --from=builder /app/servingcontainer /servingcontainer

# Run the web service on container startup.

CMD ["/servingcontainer"]

Build multi-arch images

docker buildx build --platform linux/arm64,linux/amd64 -t "sangam14/servingcontainer" --push .

[+] Building 72.9s (30/30) FINISHED docker:desktop-linux

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 734B 0.0s

=> [linux/amd64 internal] load metadata for docker.io/library/alpine:3 3.8s

=> [linux/arm64 internal] load metadata for docker.io/library/alpine:3 3.8s

=> [linux/arm64 internal] load metadata for docker.io/library/golang:1.23 7.1s

=> [linux/amd64 internal] load metadata for docker.io/library/golang:1.23 6.6s

=> [auth] library/alpine:pull token for registry-1.docker.io 0.0s

=> [auth] library/golang:pull token for registry-1.docker.io 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [linux/arm64 builder 1/6] FROM docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 33.0s

=> => resolve docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 0.0s

=> => sha256:83f1399aa9166438efeea4696812a3fa3f3397ff114492577a919f9b09f3c1ea 126B / 126B 2.6s

=> => sha256:a355a3cac949bed5cda9c62103ceb0f004727cedcd2a17d7c9836aea1a452fda 70.62MB / 70.62MB 9.9s

=> => sha256:ecb27c98d5b9e78892d876693427ae0a01e3113b36989718360a5aa9e319fd80 86.29MB / 86.29MB 15.4s

=> => sha256:843b1d8321825bc8302752ae003026f13bd15c6eef2efe032f3ca1520c5bbc07 64.00MB / 64.00MB 11.9s

=> => sha256:364d19f59f69474a80c53fc78da91f85553e16e8ba6a28063cbebf259821119e 23.59MB / 23.59MB 5.9s

=> => sha256:56c9b9253ff98351db158cb6789848656b8d54f411c0037347bf2358efb18f39 49.59MB / 49.59MB 3.9s

=> => extracting sha256:56c9b9253ff98351db158cb6789848656b8d54f411c0037347bf2358efb18f39 0.6s

=> => extracting sha256:364d19f59f69474a80c53fc78da91f85553e16e8ba6a28063cbebf259821119e 0.2s

=> => extracting sha256:843b1d8321825bc8302752ae003026f13bd15c6eef2efe032f3ca1520c5bbc07 0.7s

=> => extracting sha256:ecb27c98d5b9e78892d876693427ae0a01e3113b36989718360a5aa9e319fd80 1.0s

=> => extracting sha256:a355a3cac949bed5cda9c62103ceb0f004727cedcd2a17d7c9836aea1a452fda 1.6s

=> => extracting sha256:83f1399aa9166438efeea4696812a3fa3f3397ff114492577a919f9b09f3c1ea 0.0s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.0s

=> [linux/amd64 builder 1/6] FROM docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 24.6s

=> => resolve docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 0.0s

=> => sha256:95c1ad979d054ab0c2824c196b59074e31870426326f3e359ca9ee12d0fcb999 127B / 127B 1.1s

=> => sha256:e7bff916ab0c126c9d943f0c481a905f402e00f206a89248f257ef90beaabbd8 74.00MB / 74.00MB 16.9s

=> => sha256:627963ea2c8d5e7f344e68dce05f9013c8104d06e6e0d414fcbb261cc0b6bbde 92.26MB / 92.26MB 21.7s

=> => sha256:2e66a70da0bec13fb3d492fcdef60fd8a5ef0a1a65c4e8a4909e26742852f0f2 64.15MB / 64.15MB 5.4s

=> => sha256:2e6afa3f266c11e8960349e7866203a9df478a50362bb5488c45fe39d99b2707 24.05MB / 24.05MB 11.2s

=> => sha256:8cd46d290033f265db57fd808ac81c444ec5a5b3f189c3d6d85043b647336913 49.56MB / 49.56MB 7.1s

=> => extracting sha256:8cd46d290033f265db57fd808ac81c444ec5a5b3f189c3d6d85043b647336913 0.5s

=> => extracting sha256:2e6afa3f266c11e8960349e7866203a9df478a50362bb5488c45fe39d99b2707 0.2s

=> => extracting sha256:2e66a70da0bec13fb3d492fcdef60fd8a5ef0a1a65c4e8a4909e26742852f0f2 0.7s

=> => extracting sha256:627963ea2c8d5e7f344e68dce05f9013c8104d06e6e0d414fcbb261cc0b6bbde 0.9s

=> => extracting sha256:e7bff916ab0c126c9d943f0c481a905f402e00f206a89248f257ef90beaabbd8 1.2s

=> => extracting sha256:95c1ad979d054ab0c2824c196b59074e31870426326f3e359ca9ee12d0fcb999 0.0s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 798B 0.0s

=> [linux/amd64 stage-1 1/3] FROM docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.8s

=> => resolve docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.0s

=> => sha256:43c4264eed91be63b206e17d93e75256a6097070ce643c5e8f0379998b44f170 2.10MB / 3.62MB 65.7s

=> => extracting sha256:43c4264eed91be63b206e17d93e75256a6097070ce643c5e8f0379998b44f170 0.0s

=> [linux/arm64 stage-1 1/3] FROM docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.8s

=> => resolve docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.0s

=> => sha256:cf04c63912e16506c4413937c7f4579018e4bb25c272d989789cfba77b12f951 4.09MB / 4.09MB 0.7s

=> => extracting sha256:cf04c63912e16506c4413937c7f4579018e4bb25c272d989789cfba77b12f951 0.1s

=> [linux/arm64 stage-1 2/3] RUN apk add --no-cache ca-certificates 36.3s

=> [linux/amd64 stage-1 2/3] RUN apk add --no-cache ca-certificates 32.1s

=> [linux/amd64 builder 2/6] WORKDIR /app 0.8s

=> [linux/amd64 builder 3/6] COPY go.* ./ 0.0s

=> [linux/amd64 builder 4/6] RUN go mod download 0.2s

=> [linux/amd64 builder 5/6] COPY . ./ 0.0s

=> [linux/amd64 builder 6/6] RUN CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -mod=readonly -v -o servingcontainer 16.4s

=> [linux/arm64 builder 2/6] WORKDIR /app 0.1s

=> [linux/arm64 builder 3/6] COPY go.* ./ 0.0s

=> [linux/arm64 builder 4/6] RUN go mod download 0.1s

=> [linux/arm64 builder 5/6] COPY . ./ 0.0s

=> [linux/arm64 builder 6/6] RUN CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -mod=readonly -v -o servingcontainer 3.7s

=> [linux/arm64 stage-1 3/3] COPY --from=builder /app/servingcontainer /servingcontainer 0.0s

=> [linux/amd64 stage-1 3/3] COPY --from=builder /app/servingcontainer /servingcontainer 0.0s

=> exporting to image 11.7s

=> => exporting layers 0.2s

=> => exporting manifest sha256:61709c4dd0e25684749ad68c5635b481f31f24574a603bc5b556ba5d15fe79a4 0.0s

=> => exporting config sha256:86aac9ead5bb472bc29b4f06c66655e7883bfdb248bd321885b257563a442929 0.0s

=> => exporting attestation manifest sha256:fabd107bc16cbbe43c3f163dc8c4e5cb51cbaf219d4da909d4364be8b2f80045 0.0s

=> => exporting manifest sha256:190a25dd92a3ff04983f4fd9ec8374f7560d2273f3481e5a57b32920b16cfff3 0.0s

=> => exporting config sha256:842a417114450ddfbca6dc5a5248c4b64950c445bfc7ff8b811c0a75eea1aa2b 0.0s

=> => exporting attestation manifest sha256:9471827b65703cafd008a1c28f65ada987dff64193299db1299906d8c1bfeb0e 0.0s

=> => exporting manifest list sha256:9b0071778eee4f9b87c7838d90689ad2c16dbecb7f85ec419edcf54f692d40e6 0.0s

=> => naming to docker.io/sangam14/servingcontainer:latest 0.0s

=> => unpacking to docker.io/sangam14/servingcontainer:latest 0.0s

=> => pushing layers 7.9s

=> => pushing manifest for docker.io/sangam14/servingcontainer:latest@sha256:9b0071778eee4f9b87c7838d90689ad2c16dbecb7f85ec419edcf54f692d40e 3.4s

=> [auth] sangam14/servingcontainer:pull,push token for registry-1.docker.io 0.0s

=> pushing sangam14/servingcontainer with docker 8.6s

=> => pushing layer cf04c63912e1 8.5s

=> => pushing layer c3d694a809a6 8.5s

=> => pushing layer e8a452786f60 8.5s

=> => pushing layer 854a7d49a717 8.5s

=> => pushing layer ce64efdaa908 8.5s

=> => pushing layer c8910dfd41a3 8.5s

=> => pushing layer 039108769e6b 8.5s

=> => pushing layer 43c4264eed91

function 2 - sidecarcontainer

tree

.

├── Dockerfile

├── go.mod

├── go.sum

└── sidecarcontainer.go

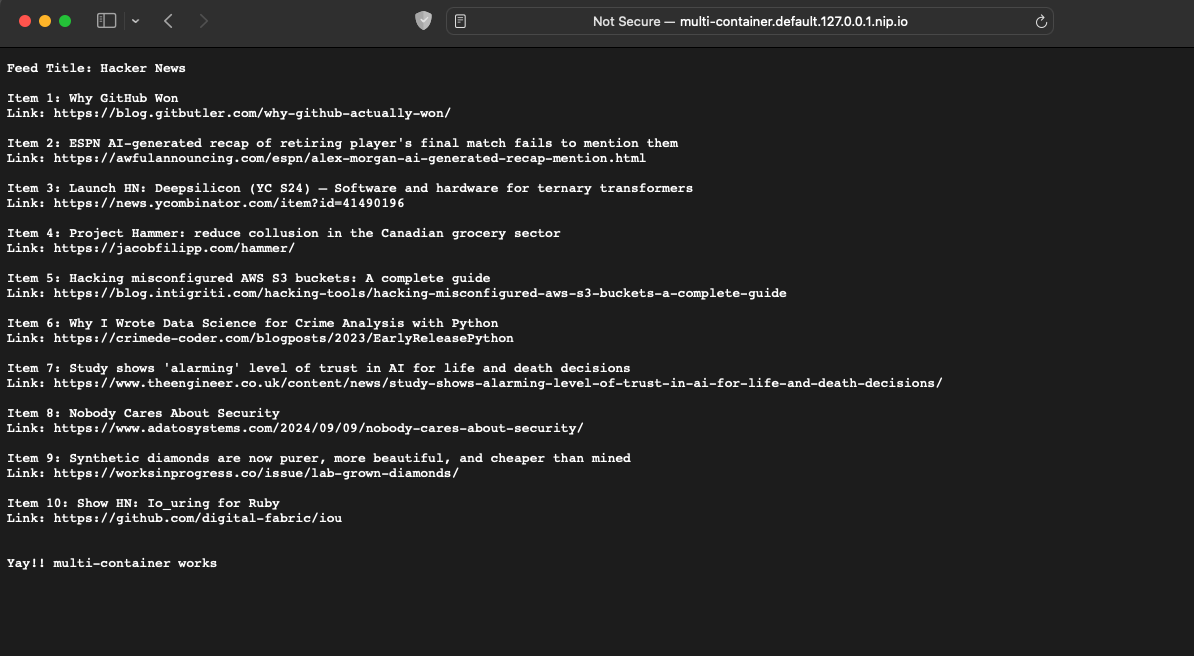

package main

import (
	"fmt"
	"log"
	"net/http"

	"github.com/mmcdole/gofeed"
)

func handler(w http.ResponseWriter, r *http.Request) {
	log.Println("sidecar container received a request.")

	// Parse the RSS feed
	fp := gofeed.NewParser()
	feed, err := fp.ParseURL("https://news.ycombinator.com/rss") // Replace with the desired RSS feed URL
	if err != nil {
		http.Error(w, "Failed to fetch newsfeed", http.StatusInternalServerError)
		log.Println("Failed to parse newsfeed:", err)
		return
	}

	// Display the feed title
	fmt.Fprintf(w, "Feed Title: %s\n\n", feed.Title)

	// Display the titles and links of the first few items
	for i, item := range feed.Items {
		if i >= 10 { // Limit to the first 10 items
			break
		}
		fmt.Fprintf(w, "Item %d: %s\nLink: %s\n\n", i+1, item.Title, item.Link)
	}

	fmt.Fprintln(w, "\nYay!! multi-container works")
}

func main() {
	log.Print("sidecar container started...")
	http.HandleFunc("/", handler)
	log.Fatal(http.ListenAndServe(":8882", nil))
}

This is a simple HTTP server in a Go-based sidecar container that fetches and parses an RSS feed (from Hacker News in this case) and serves the results. It uses the gofeed package to handle RSS parsing.

# Dockerfile

FROM golang:1.23 AS builder

ARG TARGETOS

ARG TARGETARCH

# Create and change to the app directory

WORKDIR /app

# Retrieve application dependencies using go modules

COPY go.* ./

RUN go mod download

# Copy local code to the container image

COPY . ./

# Build the binary

RUN CGO_ENABLED=0 GOOS=${TARGETOS} GOARCH=${TARGETARCH} go build -mod=readonly -v -o sidecarcontainer

# Use the official Alpine image for a lean production container

FROM alpine:3

RUN apk add --no-cache ca-certificates

# Copy the binary to the production image from the builder stage

COPY --from=builder /app/sidecarcontainer /sidecarcontainer

# Run the web service on container startup

CMD ["/sidecarcontainer"]

Build and push the multi-arch image

docker buildx build --platform linux/arm64,linux/amd64 -t "sangam14/sidecarcontainer" --push .

[+] Building 44.1s (29/29) FINISHED docker:desktop-linux

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 741B 0.0s

=> [linux/amd64 internal] load metadata for docker.io/library/alpine:3 1.0s

=> [linux/arm64 internal] load metadata for docker.io/library/alpine:3 0.9s

=> [linux/amd64 internal] load metadata for docker.io/library/golang:1.23 1.0s

=> [linux/arm64 internal] load metadata for docker.io/library/golang:1.23 1.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [linux/arm64 builder 1/6] FROM docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 0.0s

=> => resolve docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 0.0s

=> [linux/amd64 stage-1 1/3] FROM docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.0s

=> => resolve docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.0s

=> [linux/arm64 stage-1 1/3] FROM docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.0s

=> => resolve docker.io/library/alpine:3@sha256:beefdbd8a1da6d2915566fde36db9db0b524eb737fc57cd1367effd16dc0d06d 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 1.83kB 0.0s

=> [linux/amd64 builder 1/6] FROM docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 0.0s

=> => resolve docker.io/library/golang:1.23@sha256:4a3c2bcd243d3dbb7b15237eecb0792db3614900037998c2cd6a579c46888c1e 0.0s

=> CACHED [linux/amd64 stage-1 2/3] RUN apk add --no-cache ca-certificates 0.0s

=> CACHED [linux/arm64 stage-1 2/3] RUN apk add --no-cache ca-certificates 0.0s

=> CACHED [linux/arm64 builder 2/6] WORKDIR /app 0.0s

=> [linux/arm64 builder 3/6] COPY go.* ./ 0.0s

=> CACHED [linux/amd64 builder 2/6] WORKDIR /app 0.0s

=> [linux/amd64 builder 3/6] COPY go.* ./ 0.0s

=> [linux/arm64 builder 4/6] RUN go mod download 4.5s

=> [linux/amd64 builder 4/6] RUN go mod download 5.4s

=> [linux/arm64 builder 5/6] COPY . ./ 0.0s

=> [linux/arm64 builder 6/6] RUN CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -mod=readonly -v -o sidecarcontainer 4.0s

=> [linux/amd64 builder 5/6] COPY . ./ 0.0s

=> [linux/amd64 builder 6/6] RUN CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -mod=readonly -v -o sidecarcontainer 18.0s

=> [linux/arm64 stage-1 3/3] COPY --from=builder /app/sidecarcontainer /sidecarcontainer 0.0s

=> [linux/amd64 stage-1 3/3] COPY --from=builder /app/sidecarcontainer /sidecarcontainer 0.0s

=> exporting to image 11.8s

=> => exporting layers 0.3s

=> => exporting manifest sha256:743e5367746604219e7d8070490a9e49b78f8a41542f08f1d3fd683fc58cf7f0 0.0s

=> => exporting config sha256:c68b9afe107cf0ffdc9f2ae0eaf878de2431213bbc036891f36f3a9e2f619c5e 0.0s

=> => exporting attestation manifest sha256:f33bf8633f608ee8d0a807cf0adbcfde2335d0a38026fc9d95fc390af4b4ace6 0.0s

=> => exporting manifest sha256:84ac4119bdbdfad3828ee69ce192331177a392a68ba3438582852edff1424cbb 0.0s

=> => exporting config sha256:48b111bd6dffb0730a19cb2c0f8aab4d1b5bdff7577392d6f90c09eaea2a4fb0 0.0s

=> => exporting attestation manifest sha256:e133468d18aad6fb9d11448bf18cd5d70c93b128a125df0e2e3f830e374a4756 0.0s

=> => exporting manifest list sha256:ea149847fd05e1b469ce86835cf7f4722a3cba6590e302ebf3d3e7869e42da90 0.0s

=> => naming to docker.io/sangam14/sidecarcontainer:latest 0.0s

=> => unpacking to docker.io/sangam14/sidecarcontainer:latest 0.0s

=> => pushing layers 8.0s

=> => pushing manifest for docker.io/sangam14/sidecarcontainer:latest@sha256:ea149847fd05e1b469ce86835cf7f4722a3cba6590e302ebf3d3e7869e42da9 3.5s

=> [auth] sangam14/sidecarcontainer:pull,push token for registry-1.docker.io 0.0s

=> [auth] sangam14/servingcontainer:pull sangam14/sidecarcontainer:pull,push token for registry-1.docker.io 0.0s

=> pushing sangam14/sidecarcontainer with docker 4.7s

=> => pushing layer c3d694a809a6 4.6s

=> => pushing layer cf04c63912e1 4.6s

=> => pushing layer e8a452786f60 4.6s

=> => pushing layer 62ffe345c65c 4.6s

=> => pushing layer 4d650751acc4 4.6s

=> => pushing layer ec4bf944c859 4.6s

=> => pushing layer 1b45ea6e206a 4.6s

=> => pushing layer 43c4264eed91 4.6s

Here is the Knative Service (service.yaml):

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: multi-container
  namespace: default
spec:
  template:
    spec:
      containers:
      - image: docker.io/sangam14/servingcontainer:latest
        ports:
        - containerPort: 8881
      - image: docker.io/sangam14/sidecarcontainer:latest

After the build has completed and the container images are pushed to Docker Hub, you can deploy the app into your cluster. Ensure that the container image values in service.yaml match the images you built in the previous steps. Apply the configuration using kubectl:

kubectl apply --filename service.yaml

service.serving.knative.dev/multi-container created

List the Knative services to get the URL:

kn service list

NAME URL LATEST AGE CONDITIONS READY REASON

multi-container http://multi-container.default.127.0.0.1.nip.io multi-container-00001 51s 3 OK / 3 True

With Knative, you can easily run serverless applications on Kubernetes, combining the power of Kubernetes and the flexibility of serverless. The multi-container approach showcased here opens up even more possibilities for deploying complex applications

Written by

Sangam Biradar

DevRel at StackGen | Formerly at Deepfence, Tenable, Accurics | AWS Community Builder and Docker Community Award Winner at DockerCon 2020 | CyberSecurity Innovator of the Year 2023 award by BSides Bangalore | Docker/HashiCorp Meetup Organiser, Bangalore | Co-author of Learn Lightweight Kubernetes with k3s (2019), Packt Publication | Runs the non-profit CloudNativeFolks / CloudSecCorner community to empower free education. Reach out on Twitter at https://twitter.com/sangamtwts or follow on GitHub at https://github.com/sangam14 for valuable resources.