Architecture of Docker

ANNU KUMARI


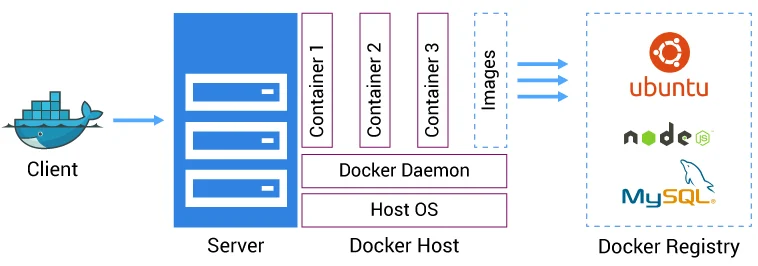

In the previous article, I described what Docker is. Now, let's look at the architecture of Docker, which is the foundation for understanding how Docker builds, runs, and manages containers.

Docker architecture consists of several components that work together to allow you to build, ship, and run containers. Let’s break it down in detail:

Docker Architecture

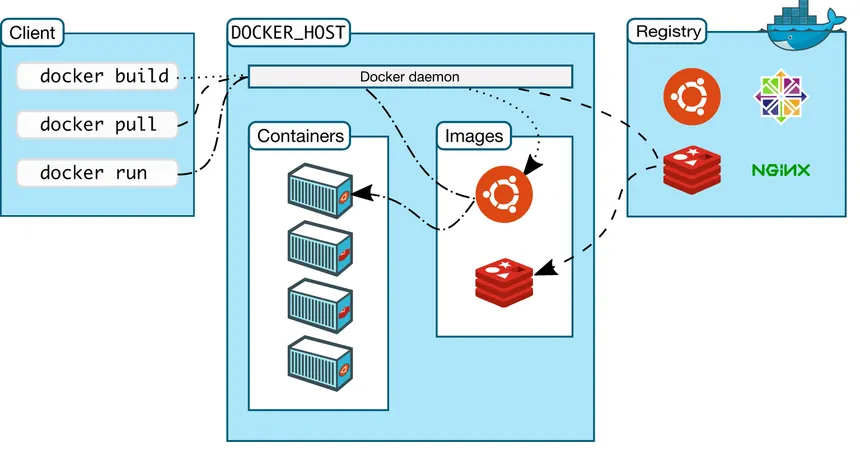

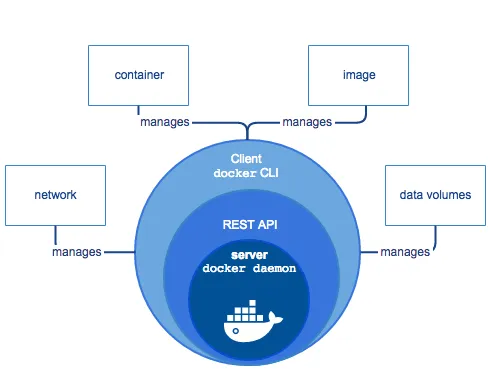

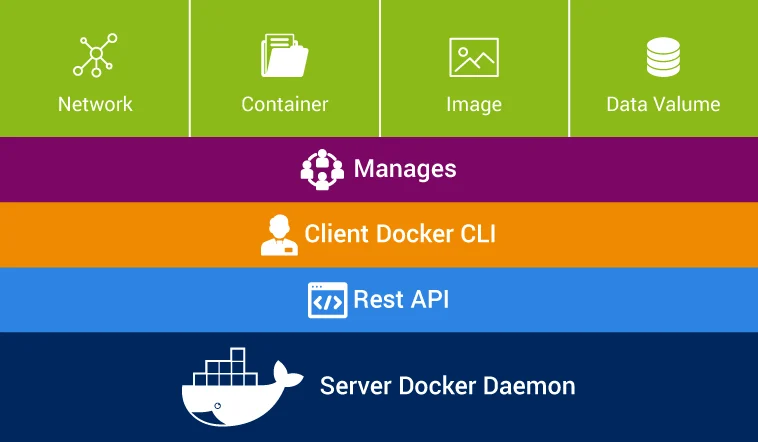

Docker follows a client-server architecture. The Docker client talks to the Docker daemon, which handles building, running, and managing Docker containers. The client and daemon communicate through a REST API, over a Unix socket (a named pipe on Windows) or a network interface.
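To make the REST part concrete, the Python sketch below shows roughly what travels over the wire: a plain HTTP request written to the daemon's Unix socket. It assumes a Linux host where the daemon listens on the default /var/run/docker.sock. GET /version and POST /containers/create are real Docker Engine API endpoints, but build_request and send_to_daemon are hypothetical helpers for illustration; the docker CLI itself uses Docker's own Go client library.

```python
import json
import socket
from typing import Optional

# Default daemon socket on Linux; this path is an assumption about your host.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(method: str, path: str, body: Optional[dict] = None) -> bytes:
    """Hypothetical helper: serialize an HTTP/1.1 request for the Engine API."""
    payload = json.dumps(body).encode() if body is not None else b""
    headers = [
        f"{method} {path} HTTP/1.1",
        "Host: docker",  # HTTP/1.1 requires a Host header; a placeholder value is used here
        "Connection: close",  # ask the daemon to close the socket after replying
        f"Content-Length: {len(payload)}",
    ]
    if body is not None:
        headers.append("Content-Type: application/json")
    return ("\r\n".join(headers) + "\r\n\r\n").encode() + payload


def send_to_daemon(raw: bytes, socket_path: str = DOCKER_SOCKET) -> bytes:
    """Hypothetical helper: send a raw request over the Unix socket, return the raw reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(raw)
        chunks = []
        while chunk := sock.recv(4096):  # read until the daemon closes the connection
            chunks.append(chunk)
    return b"".join(chunks)
```

On a host with a running daemon (and a user allowed to access the socket, for example a member of the docker group), send_to_daemon(build_request("GET", "/version")) returns the raw HTTP response whose JSON body carries the server details that docker version prints.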

The key components of Docker architecture are:

Docker Client

Docker Daemon (Server)

Docker Images

Docker Containers

Docker Registry

Docker Client

The Docker client is what you, as a user, interact with. It provides a command-line interface (CLI) to execute Docker commands.

How it works: When you run a Docker command such as docker run, the Docker client sends it to the Docker daemon as REST API calls.

Role: The client does not execute the command itself; it communicates with the Docker daemon, which performs all the actions.

Commands like:

docker pull nginx

docker run -d nginx

docker build -t my-app .

are issued via the Docker client, which talks to the daemon to perform the operations.

Docker Daemon

The Docker daemon is the engine that runs in the background on your host machine. It listens for Docker API requests and manages Docker objects, such as images, containers, networks, and volumes.

Functions:

Build, pull, and push images.

Create and run containers.

Manage container lifecycle (start, stop, remove containers).

Role: The daemon is responsible for all the heavy lifting, such as creating and managing containers and interacting with storage volumes and networks.

docker pull <image> # The client sends a request to the daemon to pull the image

docker run <image> # The client sends a request to the daemon to run a container

Docker Images

Docker images are read-only templates that contain the application and everything required to run it, including the code, libraries, and dependencies.

Layered File System: Docker images use a layered file system. An image is built up from a series of layers, and each layer records the filesystem changes made by one Dockerfile instruction, such as RUN, COPY, or ADD; the FROM instruction supplies the layers of the base image.

Image Layers:

Images are created in layers, where each layer is a read-only snapshot of the filesystem.

Layers can be shared between images, making Docker images highly space-efficient.

For example, if two images both use the same base image, Docker will download it only once and reuse it for both images.

- Role in Containers: A Docker container is launched from an image. When Docker creates a container, it adds a thin writable layer on top of the image's read-only layers, so the container can modify files without changing the image.
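The space saving comes from content addressing: a layer is identified by a digest of its contents, so identical layers are stored only once. The toy Python model below illustrates the idea with SHA-256 over made-up layer contents; LayerStore and the byte strings are hypothetical, and real layer digests are computed over tar archives rather than short strings like these.

```python
import hashlib
from typing import Dict, List


class LayerStore:
    """Hypothetical toy content-addressable store: each unique layer is kept once."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}

    def add_layer(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        self.blobs.setdefault(digest, content)  # no-op if the layer is already stored
        return digest

    def add_image(self, layers: List[bytes]) -> List[str]:
        """In this model an image is just an ordered list of layer digests."""
        return [self.add_layer(layer) for layer in layers]


store = LayerStore()
base = [b"debian base filesystem"]
web = store.add_image(base + [b"install nginx"])
api = store.add_image(base + [b"install python"])
print(web[0] == api[0], len(store.blobs))  # True 3: the base layer is stored once
```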

Docker Containers

A container is a runtime instance of a Docker image. Containers are isolated environments where applications run.

Key Features:

Isolation: Each container runs in its own environment, isolated from other containers and the host system. This is achieved through features like namespaces and cgroups (control groups).

Lightweight: Containers share the same kernel as the host system, making them more efficient and faster to start compared to virtual machines (VMs).

Ephemeral: Containers are often stateless and short-lived: anything written to a container's writable layer is lost when the container is removed, so data that must persist belongs in a volume or bind mount.

Writable Layer: Containers have a writable layer on top of the image they were created from, so changes to the file system inside a container do not affect the underlying image.

For example:

docker run nginx

This command starts a container from the nginx image, isolating the environment and running the web server.
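The writable layer works by copy-on-write: reads fall through the layers from top to bottom, while writes and deletions land only in the container's own layer. This toy Python model uses dictionaries as stand-ins for layers and a hypothetical WHITEOUT marker for deleted files; real storage drivers such as overlay2 do this at the filesystem level.

```python
from typing import Dict, List, Optional

WHITEOUT = object()  # hypothetical marker meaning "deleted in a higher layer"


class ContainerFS:
    """Hypothetical toy model of an image's read-only layers plus a writable layer."""

    def __init__(self, image_layers: List[Dict[str, str]]) -> None:
        self.image_layers = image_layers  # read-only, bottom layer first
        self.writable: Dict[str, object] = {}  # the container's own layer

    def read(self, path: str) -> Optional[str]:
        # Search from the top (writable) layer down to the base layer.
        for layer in [self.writable, *reversed(self.image_layers)]:
            if path in layer:
                value = layer[path]
                return None if value is WHITEOUT else value
        return None

    def write(self, path: str, data: str) -> None:
        self.writable[path] = data  # never touches the image layers

    def delete(self, path: str) -> None:
        self.writable[path] = WHITEOUT  # hide the file without modifying the image


image = [{"/etc/nginx.conf": "default"}, {"/app/index.html": "v1"}]
fs = ContainerFS(image)
fs.write("/app/index.html", "v2")
fs.delete("/etc/nginx.conf")
print(fs.read("/app/index.html"), fs.read("/etc/nginx.conf"), image[1]["/app/index.html"])
```

The container sees its edited file and the deletion, while the image layers still hold the original content.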

Docker Registry

A Docker registry is a storage and distribution system for Docker images.

Docker Hub: The most common registry is Docker Hub, a public registry where Docker images are stored and shared. You can pull images from Docker Hub or push your own images to it.

Private Registries: Docker allows the creation of private registries for organizations that want to store their images securely.

Commands for registry:

docker pull <image> # Downloads an image from a registry

docker push <image> # Uploads an image to a registry
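When you type a short name like nginx, Docker expands it before contacting a registry: no registry host means Docker Hub (docker.io), single-segment names on Docker Hub live under library/, and a missing tag means latest. The Python sketch below mimics those rules in simplified form; normalize is a hypothetical helper, and it ignores digests (@sha256:...) and other edge cases that Docker's real reference parser handles.

```python
from typing import Tuple


def normalize(ref: str) -> Tuple[str, str, str]:
    """Hypothetical helper: split an image reference into (registry, repository, tag)."""
    # A tag is the part after the last ':' only if no '/' follows it;
    # otherwise the ':' belongs to a registry port, as in localhost:5000/app.
    name, tag = ref, "latest"
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]

    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest  # first segment looks like a registry host
    else:
        registry, repo = "docker.io", name
        if "/" not in repo:
            repo = "library/" + repo  # official images live under library/
    return registry, repo, tag
```

For example, normalize("nginx") returns ('docker.io', 'library/nginx', 'latest'), which is why docker pull nginx and docker pull docker.io/library/nginx:latest fetch the same image.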

Docker Storage and Volumes

Docker containers are ephemeral: data stored in a container's writable layer survives a stop and restart, but it is lost when the container is removed.

Volumes: Docker provides volumes, a storage feature that persists data beyond the lifecycle of a container. Volumes allow data to be shared across containers and even survive when containers are removed.

Bind Mounts: These are specific locations on the host machine mapped to a container directory.

Docker Volumes: These are Docker-managed and exist independently of containers.

For example:

docker run -v /mydata:/data nginx

This command mounts a host directory (/mydata) to a container directory (/data).
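Docker tells the two storage types apart by the first field of -v: an absolute host path means a bind mount, while a plain name means a named volume (created if it does not exist yet). The Python sketch below parses the source:target[:options] form under that rule; parse_volume_flag is a hypothetical helper, and it ignores Windows paths, relative paths, and the richer --mount syntax.

```python
from typing import Dict, Optional


def parse_volume_flag(spec: str) -> Dict[str, Optional[str]]:
    """Hypothetical helper: classify a -v value as a bind mount or a volume."""
    parts = spec.split(":")
    if len(parts) == 1:
        # Only a container path: Docker creates an anonymous volume.
        return {"type": "volume", "source": None, "target": parts[0], "options": None}
    source, target = parts[0], parts[1]
    options = parts[2] if len(parts) > 2 else None  # e.g. "ro" for read-only
    kind = "bind" if source.startswith("/") else "volume"
    return {"type": kind, "source": source, "target": target, "options": options}
```

With this rule, /mydata:/data is a bind mount, whereas my-volume:/data would refer to a Docker-managed volume named my-volume.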

Docker Networks

Docker provides networking features that allow containers to communicate with each other or with external systems.

Bridge Network: The default network type, allowing containers to communicate with each other on the same Docker host.

Host Network: Removes network isolation between the Docker container and the host, giving the container full access to the host's network interfaces.

Overlay Network: Enables multi-host networking, where containers on different Docker hosts can communicate.

docker network create my-network

docker run --network my-network nginx
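Each bridge network gets its own subnet, and the daemon assigns container addresses from it. On a default install the built-in bridge uses 172.17.0.0/16 with the gateway at 172.17.0.1, which is why the first container usually gets 172.17.0.2. The toy allocator below uses Python's ipaddress module to mimic that pattern; ToyIPAM is hypothetical, and Docker's real IPAM driver also handles address pools, reservations, and releasing addresses.

```python
import ipaddress
from typing import Set


class ToyIPAM:
    """Hypothetical toy allocator: hand out addresses from one subnet, gateway first."""

    def __init__(self, subnet: str) -> None:
        self.network = ipaddress.ip_network(subnet)
        hosts = iter(self.network.hosts())
        self.gateway = next(hosts)  # first usable address goes to the bridge itself
        self._free = hosts  # remaining addresses, in order (never released here)
        self.in_use: Set[ipaddress.IPv4Address] = set()

    def allocate(self) -> ipaddress.IPv4Address:
        addr = next(self._free)  # raises StopIteration when the subnet is exhausted
        self.in_use.add(addr)
        return addr


bridge = ToyIPAM("172.17.0.0/16")
print(bridge.gateway, bridge.allocate(), bridge.allocate())  # 172.17.0.1 172.17.0.2 172.17.0.3
```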

Docker Compose

Docker Compose is a tool that allows you to define and manage multi-container applications using a YAML file (docker-compose.yml).

Service Definition: Each service (e.g., a web server, a database) is defined in the YAML file with configuration for images, networks, volumes, and environment variables.

Orchestration: With a single command, Docker Compose will create and start all containers specified in the YAML file.

For example, a docker-compose.yml file might look like this:

```yaml
version: '3'
services:
  web:
    image: nginx
    ports:
      - "8080:80"
  db:
    image: mysql
    environment:
      MYSQL_ROOT_PASSWORD: example
```

You can start the application with:

docker compose up

(The older standalone Compose V1 tool uses the command docker-compose up.)
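The "8080:80" entry under ports uses Compose's short syntax, HOST:CONTAINER: connections to port 8080 on the host are forwarded to port 80 inside the web container. The Python sketch below parses the common short forms (optional host IP, optional host port, container port, and an optional /tcp or /udp suffix); parse_port and PortMapping are hypothetical names, and port ranges and IPv6 addresses are not handled.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]
    host_port: Optional[int]  # None means Docker picks a free host port
    container_port: int
    protocol: str


def parse_port(spec: str) -> PortMapping:
    """Hypothetical helper: parse [IP:][HOST:]CONTAINER[/PROTOCOL]."""
    spec, _, protocol = spec.partition("/")
    parts = spec.split(":")
    container_port = int(parts[-1])
    host_port = int(parts[-2]) if len(parts) >= 2 and parts[-2] else None
    host_ip = parts[0] if len(parts) == 3 else None
    return PortMapping(host_ip, host_port, container_port, protocol or "tcp")
```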

Docker Swarm and Kubernetes (Orchestration)

Docker Swarm: Docker's native clustering tool, which allows you to create a swarm of Docker hosts (nodes) that work together.

Kubernetes: A more robust orchestration tool used to manage large-scale containerized applications, providing advanced features like auto-scaling, load balancing, and self-healing.

Understanding Docker's architecture allows you to effectively build, deploy, and scale containerized applications across various environments.

```text
+-------------------+
|   Docker Client   |
+-------------------+
          |
          | Requests
          v
+-------------------+
|   Docker Daemon   |
+-------------------+
          |
          | Interacts with
          | Docker Registries
          v
+-------------------+
| Docker Registries |
+-------------------+
          |
          | Stores and retrieves
          | Docker Images
          v
+-------------------+
|    Host System    |
+-------------------+
          |
          | Runs Docker Containers
```

Interactions Between Docker Components

The Docker components (Docker Client, Docker Daemon, Docker Images, Docker Containers, and Docker Registry) interact with each other to provide a streamlined containerized workflow. Here's how these interactions happen:

1. Docker Client and Docker Daemon

The Docker Client (CLI or API) is the main interface for users. When a user types Docker commands (docker run, docker build, etc.), these are sent to the Docker Daemon.

The Docker Daemon (background service) receives and processes these commands. The client and daemon communicate using REST APIs, over a socket or a network interface.

Example:

docker run nginx

This command asks the daemon to:

Pull the nginx image from a registry (if it's not available locally).

Create a container from that image.

Start the container.
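The steps behind docker run nginx (pull if needed, create, start) map onto separate Docker Engine API calls. The Python sketch below only plans those calls instead of sending them; plan_docker_run is a hypothetical helper, the set of local images is passed in by hand, and <id> stands for the container ID the daemon returns from the create call. The endpoints POST /images/create, POST /containers/create, and POST /containers/{id}/start are part of the Engine API, but the real CLI tries the create call first and pulls only if the daemon reports the image missing; this sketch checks up front for readability.

```python
from typing import List, Set, Tuple


def plan_docker_run(image: str, local_images: Set[str]) -> List[Tuple[str, str]]:
    """Hypothetical helper: list the (method, path) calls behind `docker run <image>`."""
    name, tag = image, "latest"
    colon = image.rfind(":")
    if colon > image.rfind("/"):  # a ':' after the last '/' introduces a tag
        name, tag = image[:colon], image[colon + 1:]

    calls = []
    if f"{name}:{tag}" not in local_images:
        # Pull first, because the image is not in the local image store.
        calls.append(("POST", f"/images/create?fromImage={name}&tag={tag}"))
    calls.append(("POST", "/containers/create"))  # JSON body names the image
    calls.append(("POST", "/containers/<id>/start"))
    return calls
```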

2. Docker Daemon and Docker Registry

The Docker Daemon interacts with the Docker Registry (like Docker Hub or private registries) to pull or push images.

Pulling: If the image isn’t available locally, the daemon contacts the registry and downloads the requested image.

Pushing: When you build a new image locally, you can use the daemon to upload (push) it to a registry, making it accessible for others.

For example:

docker pull redis

docker push <your-username>/my-custom-image # the image must first be tagged with your registry namespace

- The Docker Registry is where images are stored and versioned. Docker images are pushed to and pulled from the registry by the daemon as needed.

3. Docker Daemon and Docker Images

Images are templates used to create containers. The daemon is responsible for managing these images:

Building: When a user issues a docker build command with a Dockerfile, the daemon reads the instructions, creates the necessary layers, and builds the image.

Caching: The daemon uses a layer-based file system, so it can cache intermediate layers to speed up builds.

For example:

docker build -t my-app .

This command builds a new image using the instructions in the Dockerfile.
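Layer caching works because each layer's cache key depends on its parent layer and on the instruction that produced it (plus, for COPY and ADD, the content of the copied files). Change one step and every layer after it must be rebuilt. The toy Python model below chains SHA-256 hashes to show that effect; cache_keys and the sample steps are hypothetical, and the real builder's cache is considerably more detailed.

```python
import hashlib
from typing import List, Tuple


def cache_keys(steps: List[Tuple[str, str]]) -> List[str]:
    """Hypothetical toy cache: each key hashes the parent key, instruction, and file content."""
    keys, parent = [], ""
    for instruction, files in steps:
        material = f"{parent}|{instruction}|{files}"
        parent = hashlib.sha256(material.encode()).hexdigest()
        keys.append(parent)
    return keys


# (instruction, content of copied files); content is "" for non-COPY steps.
v1 = [
    ("FROM python:3.12-slim", ""),
    ("COPY requirements.txt .", "flask==3.0"),
    ("RUN pip install -r requirements.txt", ""),
    ("COPY . .", "app v1"),
]
v2 = v1[:3] + [("COPY . .", "app v2")]  # only the application code changed
reused = sum(a == b for a, b in zip(cache_keys(v1), cache_keys(v2)))
print(reused)  # 3
```

This is also why Dockerfiles commonly copy dependency manifests before the rest of the source: editing application code then leaves the dependency-install layer cached.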

4. Docker Daemon and Docker Containers

Containers are runtime instances of images. The Docker daemon creates, starts, stops, and removes containers as requested.

Containers have a writable layer on top of the read-only image layers, meaning the daemon allows changes to the container without affecting the original image.

For example:

docker start <container-id>

docker stop <container-id>

The daemon interacts with the kernel to manage container isolation, networking, and resource allocation (using namespaces and cgroups).
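On a Linux host using cgroup v2, resource limits end up as plain values in cgroup files. For example, docker run --cpus=1.5 --memory=512m becomes a CPU quota of 150000 µs per 100000 µs period (the cpu.max file) and a memory limit of 536870912 bytes (the memory.max file). The Python sketch below performs that conversion; cgroup_limits is a hypothetical helper, and it assumes the default 100 ms CFS period and only the k, m, and g size suffixes.

```python
from typing import Dict

PERIOD_US = 100_000  # default CFS period of 100 ms (assumption: not overridden)
UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def cgroup_limits(cpus: float, memory: str) -> Dict[str, str]:
    """Hypothetical helper: translate --cpus / --memory values into cgroup v2 file contents."""
    quota_us = int(round(cpus * PERIOD_US))
    suffix = memory[-1].lower()
    if suffix in UNITS:
        mem_bytes = int(memory[:-1]) * UNITS[suffix]
    else:
        mem_bytes = int(memory)  # a plain number of bytes
    return {"cpu.max": f"{quota_us} {PERIOD_US}", "memory.max": str(mem_bytes)}


print(cgroup_limits(1.5, "512m"))  # {'cpu.max': '150000 100000', 'memory.max': '536870912'}
```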

5. Docker Networking and Volumes

The daemon manages networking and storage for containers. It creates networks to allow containers to communicate and uses volumes to provide persistent storage.

Networks: The daemon can set up networks (bridge, overlay, host) to manage how containers communicate internally and externally.

Volumes: The daemon provides persistent storage using volumes, ensuring data can persist beyond the container's lifecycle.

For example:

docker network create my-network

docker volume create my-volume

Multi-Platform Support

Docker provides support for multiple platforms, enabling applications to run on different operating systems and architectures without modification.

1. Cross-Platform Container Support

Docker allows you to run containers on different operating systems (e.g., Linux, Windows, and macOS) and different CPU architectures (e.g., x86_64, ARM).

Linux Containers on Windows (LCOW): Docker Desktop supports running Linux containers on Windows machines using a lightweight Linux VM.

Windows Containers: You can also run native Windows containers on Windows.

2. Multi-Architecture Docker Images

Docker supports multi-architecture images, meaning you can build images that work across different architectures (e.g., ARM, x86). This is crucial for building applications that can run on different hardware (like Raspberry Pi or cloud platforms).

Buildx: Docker's buildx tool lets you build images for multiple architectures using the same Dockerfile.

For example:

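docker buildx build --platform linux/amd64,linux/arm64 -t my-multi-arch-app .

Multi-platform builds generally need a builder that supports them (for example, one created with docker buildx create) and are usually pushed straight to a registry with --push.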

- When pulling an image from Docker Hub, Docker automatically pulls the correct image version based on your system architecture.
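Behind that automatic choice is a manifest list (also called an image index): one tag points to several per-platform images, and Docker picks the entry that matches its OS and CPU architecture. The Python sketch below shows just the matching step; the index entries and digests are made up, pick_manifest and host_platform are hypothetical helpers, the machine-name mapping covers only common cases, and CPU variants such as arm/v7 are ignored.

```python
import platform
from typing import Dict, List, Optional

# Map Python's machine names to the architecture names used in image indexes.
ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def host_platform() -> Dict[str, str]:
    """Hypothetical helper; assumes Linux containers, as Docker Desktop runs on macOS/Windows."""
    machine = platform.machine().lower()
    return {"os": "linux", "architecture": ARCH_ALIASES.get(machine, machine)}


def pick_manifest(index: List[Dict], wanted: Dict[str, str]) -> Optional[str]:
    """Hypothetical helper: return the digest of the first entry matching the platform."""
    for entry in index:
        p = entry["platform"]
        if p["os"] == wanted["os"] and p["architecture"] == wanted["architecture"]:
            return entry["digest"]
    return None  # no image for this platform; Docker would report an error


index = [
    {"digest": "sha256:aaa", "platform": {"os": "linux", "architecture": "amd64"}},
    {"digest": "sha256:bbb", "platform": {"os": "linux", "architecture": "arm64"}},
]
print(pick_manifest(index, host_platform()))
```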

3. Platform-Specific Dockerfiles

- Dockerfiles can also contain platform-specific instructions using the --platform flag. This lets you control which platform's base image or dependencies a build stage uses.

For example:

FROM --platform=linux/arm64 alpine

4. Cross-Platform Development

Docker allows you to develop on one platform (e.g., macOS or Windows) while targeting a different platform (e.g., Linux or ARM).

This is achieved through virtualization (using Docker Desktop on Windows and macOS) and native Docker support on Linux.

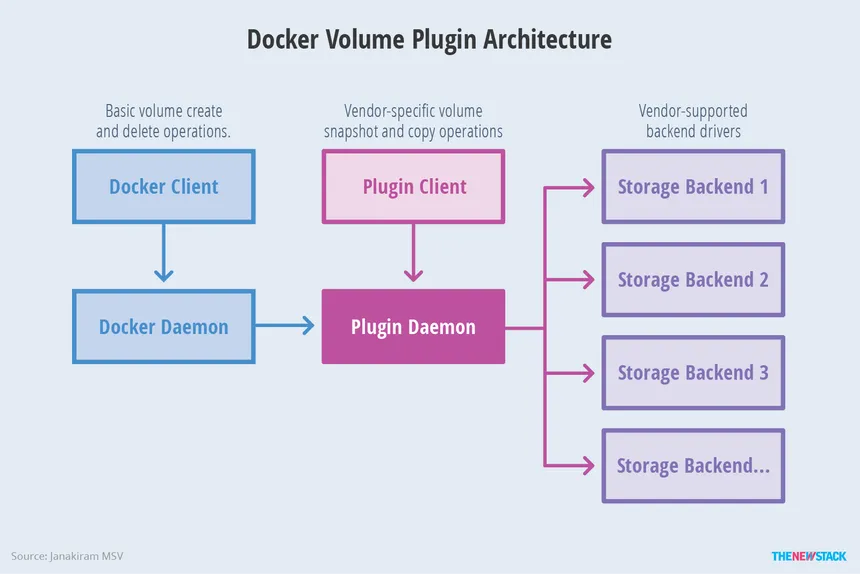

Pluggable Architecture

Docker’s pluggable architecture allows it to be extended and customized using various plugins, which provide additional functionality without modifying the core Docker codebase. These plugins integrate with Docker’s existing systems and can handle networking, storage, and logging.

1. Storage Plugins

Docker supports storage drivers that manage how Docker handles storage on different platforms and file systems.

Default Storage Drivers: Docker comes with several built-in storage drivers, such as overlay2 (the default on most current Linux distributions), aufs (now deprecated), and btrfs.

Pluggable Storage Plugins: You can use third-party or custom storage plugins to manage storage volumes, either on local disk or on network-based storage solutions (e.g., NFS, Amazon EBS, Ceph).

For example:

docker volume create --driver my-storage-plugin my-volume

2. Networking Plugins

Docker supports networking drivers for different use cases:

Bridge: Default networking option for containers on a single host.

Host: Removes isolation between the Docker host and the container.

Overlay: For multi-host networking in swarm or Kubernetes clusters.

With the pluggable architecture, you can add third-party network plugins to extend Docker's networking capabilities. (Kubernetes uses a comparable mechanism, the Container Network Interface (CNI), with plugins such as Flannel and Calico.)

For example:

docker network create --driver <plugin-driver-name> my-network

3. Volume Plugins

Docker also supports volume plugins to allow for more advanced or remote volume management. For example, you can use a plugin to connect containers to cloud storage solutions (AWS, Azure) or distributed file systems (GlusterFS, NFS).

docker volume create --driver <plugin-name> my-volume
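A volume plugin is a separate process that the daemon talks to with JSON over HTTP, calling endpoints such as /Plugin.Activate, /VolumeDriver.Create, and /VolumeDriver.Mount. The Python sketch below implements only the request handling for a few of those endpoints against an in-memory table, with no HTTP server and a hypothetical base directory /tmp/toy-volumes; a real plugin must implement the full VolumeDriver protocol and actually provision storage.

```python
import json
from typing import Dict

BASE_DIR = "/tmp/toy-volumes"  # hypothetical location for volume data
volumes: Dict[str, str] = {}  # volume name -> mountpoint on the host


def handle(endpoint: str, request_body: str) -> str:
    """Hypothetical handler: answer one plugin-protocol request with a JSON string."""
    req = json.loads(request_body or "{}")
    if endpoint == "/Plugin.Activate":
        return json.dumps({"Implements": ["VolumeDriver"]})
    if endpoint == "/VolumeDriver.Create":
        volumes[req["Name"]] = f"{BASE_DIR}/{req['Name']}"  # a real plugin provisions storage here
        return json.dumps({"Err": ""})
    if endpoint in ("/VolumeDriver.Mount", "/VolumeDriver.Path"):
        if req["Name"] not in volumes:
            return json.dumps({"Err": f"no such volume: {req['Name']}"})
        return json.dumps({"Mountpoint": volumes[req["Name"]], "Err": ""})
    if endpoint == "/VolumeDriver.Remove":
        volumes.pop(req["Name"], None)
        return json.dumps({"Err": ""})
    return json.dumps({"Err": f"unsupported endpoint: {endpoint}"})
```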

4. Logging Drivers

Docker has a pluggable logging system that lets you configure different logging drivers depending on your environment.

- Default Logging: By default, Docker uses the json-file logging driver, but you can plug in others such as fluentd, syslog, or awslogs (AWS CloudWatch); logs can also be shipped to an ELK stack, for example through the gelf or fluentd drivers.

For example:

docker run --log-driver=syslog nginx
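With the default json-file driver, each line a container writes to stdout or stderr is stored as one JSON object with log, stream, and time fields (on Linux the file typically lives under /var/lib/docker/containers/<id>/). The Python sketch below parses lines in that format; the sample lines are invented and parse_json_log is a hypothetical helper.

```python
import json
from typing import Iterable, List, Tuple


def parse_json_log(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Hypothetical helper: return (time, stream, message) tuples from json-file output."""
    records = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        entry = json.loads(line)
        records.append((entry["time"], entry["stream"], entry["log"].rstrip("\n")))
    return records


sample = [
    '{"log":"starting nginx\\n","stream":"stdout","time":"2024-05-01T10:00:00.000000000Z"}',
    '{"log":"bind failed\\n","stream":"stderr","time":"2024-05-01T10:00:01.000000000Z"}',
]
for ts, stream, message in parse_json_log(sample):
    print(ts, stream, message)
```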

5. Orchestration Plugins

Docker provides native orchestration through Docker Swarm. Beyond that, images built with Docker run unchanged on other orchestrators such as Kubernetes, which can manage complex, multi-container applications, and Docker Desktop can also run a local single-node Kubernetes cluster.

6. Custom Plugins

You can also develop custom plugins using Docker's plugin API. These plugins can be created for specific purposes like monitoring, security, or custom workflows.

Docker uses a client-server architecture, where the client (usually the CLI) sends requests to a separate process, the Docker daemon, which carries out the required actions. The Docker daemon is responsible for building, running, and distributing Docker containers.


Written by

ANNU KUMARI


Hi! I am Annu Kumari. I'm a front-end, Python, and Java developer.