Diving Deep into OS Internals and Process Management: A Learning Journey

Md Saif Zaman

As a software engineer, understanding the intricacies of operating systems is crucial for developing efficient and robust applications. Recently, I embarked on a journey to deepen my knowledge of OS internals and process management, with a focus on Linux systems. In this blog post, I'll share my experiences and insights from this enriching learning adventure.

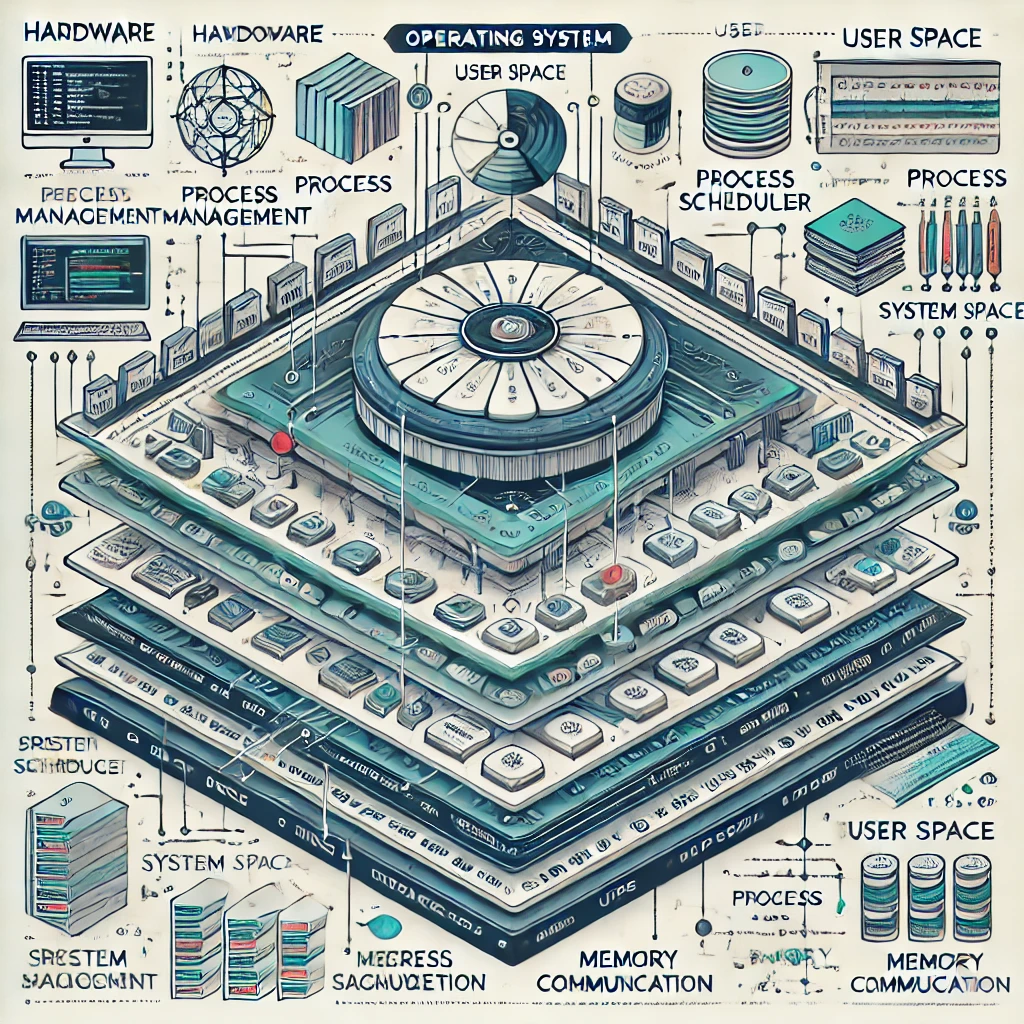

Theoretical Foundations

My learning journey began with "The Linux Programming Interface" by Michael Kerrisk, which provided a solid theoretical foundation on process scheduling, memory management, and file systems.

Process Scheduling

One of the key concepts I grasped was the importance of process scheduling in multitasking operating systems. I learned about different scheduling algorithms, each with its own strengths and use cases:

First-Come, First-Served (FCFS)

Shortest Job Next (SJN)

Priority Scheduling

Round Robin (RR)

For instance, Round Robin is well suited to time-sharing systems because it gives each process a fair slice of CPU time. The scheduler allocates a fixed time slice (the quantum) to each process in turn; a process that has not finished when its quantum expires is preempted and moved to the back of the ready queue.
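To make the cycling concrete, here is a minimal sketch of a Round Robin simulation in bash. The function name round_robin, the process names, and the burst times are all made up for illustration, and the sketch assumes every process is ready at time 0. It only models the order in which work completes; it is not how the Linux scheduler is implemented.

```shell
#!/usr/bin/env bash
# Toy Round Robin simulation (illustrative only).
# Usage: round_robin QUANTUM NAME:BURST [NAME:BURST ...]
round_robin() {
    local quantum=$1; shift
    (( quantum > 0 )) || { echo "quantum must be positive" >&2; return 1; }

    local -a names=() remaining=()
    local spec
    for spec in "$@"; do
        names+=("${spec%%:*}")       # text before the colon: process name
        remaining+=("${spec##*:}")   # text after the colon: CPU time still needed
    done

    local clock=0 active=${#names[@]} i slice
    while (( active > 0 )); do
        for i in "${!names[@]}"; do
            (( remaining[i] > 0 )) || continue
            # Run for one quantum, or less if the process finishes sooner.
            slice=$(( remaining[i] < quantum ? remaining[i] : quantum ))
            clock=$(( clock + slice ))
            remaining[i]=$(( remaining[i] - slice ))
            if (( remaining[i] == 0 )); then
                echo "t=$clock: ${names[i]} finished"
                active=$(( active - 1 ))
            fi
        done
    done
}

round_robin 2 A:5 B:3 C:1
# t=5: C finished
# t=8: B finished
# t=9: A finished
```

With a quantum of 2, the short job C finishes first even though it was queued last, which is the fairness property described above.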

Memory Management

Diving into memory management techniques, I found virtual memory to be a crucial concept. It gives each process its own address space, which the kernel and the hardware memory management unit (MMU) map onto physical memory. This isolates processes from one another and lets the system use the available memory efficiently.

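I also explored paging and segmentation, two methods used to implement virtual memory. Segmentation divides a process's address space into variable-sized logical units such as code, data, and stack. Paging divides physical memory into fixed-size blocks called frames and logical memory into blocks of the same size called pages, with a page table recording which frame holds each page. Because a page does not have to be resident in RAM at all times, the operating system can move rarely used pages out to disk (swap) and bring them back on demand, which lets processes use more memory than is physically installed.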
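The arithmetic behind paging can be sketched in a few lines of bash. The function names split_address and translate, the sample address, and the toy page table are assumptions chosen for illustration; on a real system the MMU and the kernel do this work in hardware and kernel space.

```shell
#!/usr/bin/env bash
# Split a virtual address into a page number and an offset within that page.
# Usage: split_address ADDRESS [PAGE_SIZE]
split_address() {
    local addr=$1
    # Default to the system page size reported by getconf if none is given.
    local page_size=${2:-$(getconf PAGESIZE)}
    echo "page=$(( addr / page_size )) offset=$(( addr % page_size ))"
}

# Translate a virtual address through a toy page table.
# Usage: translate ADDRESS PAGE_SIZE FRAME_FOR_PAGE_0 [FRAME_FOR_PAGE_1 ...]
translate() {
    local addr=$1 page_size=$2; shift 2
    local -a page_table=("$@")   # index = page number, value = frame number
    local page=$(( addr / page_size )) offset=$(( addr % page_size ))
    if (( page >= ${#page_table[@]} )); then
        echo "page fault: page $page is not mapped" >&2
        return 1
    fi
    # Physical address = start of the frame + the unchanged offset.
    echo $(( page_table[page] * page_size + offset ))
}

split_address 8200 4096        # page=2 offset=8
translate 8200 4096 5 9 3 7    # page 2 maps to frame 3, so this prints 12296
```

The offset passes through unchanged; only the page number is swapped for a frame number, which is why pages and frames must be the same size.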

File Systems

The section on file systems was particularly enlightening. I learned about the structure of file systems, including concepts like inodes, superblocks, and data blocks. For example, in a Linux file system:

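Inodes store metadata about files (permissions, ownership, timestamps, and the location of the data blocks), but not the file name, which lives in the directory entry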

Superblocks contain information about the file system as a whole

Data blocks store the actual file contents

Understanding how Linux organizes and manages files at a low level gave me a new appreciation for the complexity of modern operating systems.
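Inodes are easy to observe from the terminal. The sketch below assumes GNU coreutils stat (the -c format option) and uses hypothetical helper names and file names; it creates a hard link in a temporary directory and shows that both names point at the same inode.

```shell
#!/usr/bin/env bash
# Print the inode number of a file (GNU stat: %i is the inode number).
inode_of() {
    stat -c '%i' "$1"
}

# Print how many directory entries (hard links) refer to the file's inode.
link_count_of() {
    stat -c '%h' "$1"
}

workdir=$(mktemp -d)
echo "hello" > "$workdir/original.txt"
ln "$workdir/original.txt" "$workdir/alias.txt"   # second name, same inode

echo "original inode: $(inode_of "$workdir/original.txt")"
echo "alias inode:    $(inode_of "$workdir/alias.txt")"
echo "link count:     $(link_count_of "$workdir/original.txt")"
```

Both names report the same inode number and a link count of 2, because a hard link is just another directory entry for an existing inode. Running df -i shows how many inodes a mounted file system has in use.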

Hands-On Practice with Process Management

Theory is important, but practical application is where the rubber meets the road. I spent considerable time experimenting with various process management commands in the Linux terminal.

Using ps and top

The ps command became my go-to tool for viewing process information. For a comprehensive view of system processes, I found the aux options particularly useful:

ps aux

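This command shows all processes for all users, including those not attached to a terminal: a selects processes from all users, u adds a user-oriented output format, and x includes processes without a controlling terminal.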

I also got comfortable with top, which provides a real-time, dynamic view of the running system. Here's a tip: while in top, press 'P' to sort by CPU usage or 'M' to sort by memory usage.
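For scripts, a non-interactive equivalent of sorting in top is often handier. This is a small sketch that assumes the procps-ng version of ps found on most Linux distributions (other ps implementations may not accept --sort); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# List the N processes using the most memory (default 5), plus the header row.
top_by_memory() {
    local count=${1:-5}
    ps -eo pid,user,%mem,%cpu,comm --sort=-%mem | head -n "$(( count + 1 ))"
}

# Show the parent PID, state, and command name for a single PID.
describe_process() {
    ps -o pid,ppid,stat,comm -p "$1"
}

top_by_memory 5
describe_process "$$"   # $$ is the PID of the current shell
```

Swapping --sort=-%mem for --sort=-%cpu gives the CPU-ordered view instead.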

Managing Processes

Practicing with the kill and killall commands taught me about the different signals that can be sent to processes:

kill -15 1234 # Graceful termination of process with PID 1234

kill -9 5678 # Forceful termination of process with PID 5678

killall firefox # Send SIGTERM (the default) to every process named firefox

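SIGTERM (15) asks a process to shut down; the process can catch the signal to clean up first, or even ignore it. SIGKILL (9) cannot be caught, blocked, or ignored, so the kernel ends the process immediately and it gets no chance to clean up, which makes it a last resort.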
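The difference is easiest to see from the receiving side. The following sketch starts a background worker that installs a SIGTERM handler with trap; the worker function and the log file are invented for this example. The same trap would never fire for SIGKILL, because that signal cannot be handled.

```shell
#!/usr/bin/env bash
# A worker that handles SIGTERM: it records a cleanup message, then exits.
worker() {
    local logfile=$1
    trap 'echo "caught SIGTERM, cleaning up" >> "$logfile"; exit 0' TERM
    echo "started" >> "$logfile"
    while true; do
        sleep 0.1   # short sleeps so the trap runs soon after the signal arrives
    done
}

logfile=$(mktemp)
worker "$logfile" &          # run the worker in the background
worker_pid=$!
sleep 0.5                    # give the worker time to install its trap

kill -TERM "$worker_pid"     # same as kill -15
wait "$worker_pid"
echo "worker exit status: $?"
cat "$logfile"
```

The log ends with the cleanup message and the worker exits with status 0, showing that it shut down on its own terms after receiving SIGTERM.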

Project Work: Automating Process Management

To solidify my learning, I created a GitHub repository named linux-process-management. Here, I documented all the commands I learned and created a shell script to automate some common process management tasks.

Here's a snippet from my script that finds and kills a process by name:

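find_and_kill_process() {
    local pname pids
    read -r -p "Enter process name to kill: " pname
    # An empty pattern would match every process, so refuse it.
    if [ -z "$pname" ]; then
        echo "No process name given"
        return 1
    fi
    # pgrep treats the name as a pattern and may print several PIDs, one per line.
    pids=$(pgrep "$pname")
    if [ -z "$pids" ]; then
        echo "No process found with name $pname"
    else
        # Unquoted on purpose: each PID must reach kill as a separate argument.
        kill -15 $pids
        echo "Sent SIGTERM to $pname (PIDs: $(echo $pids))"
    fi
}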

This function prompts for a process name, looks up the matching PIDs with pgrep, and sends SIGTERM to ask each matching process to terminate gracefully.
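A natural extension, sketched here as an assumption rather than something taken from the repository, is to escalate only when a process does not respond: send SIGTERM, wait for a while, and fall back to SIGKILL. The function name terminate_with_timeout and the timeout values are hypothetical.

```shell
#!/usr/bin/env bash
# Send SIGTERM, wait up to TIMEOUT seconds, then fall back to SIGKILL.
# Usage: terminate_with_timeout PID [TIMEOUT_SECONDS]
terminate_with_timeout() {
    local pid=$1 timeout=${2:-5} waited=0
    # kill fails if the process does not exist or we lack permission.
    kill -TERM "$pid" 2>/dev/null || return 1
    # kill -0 sends no signal; it only checks whether the PID still exists.
    while kill -0 "$pid" 2>/dev/null; do
        if (( waited >= timeout )); then
            echo "PID $pid did not exit after SIGTERM, sending SIGKILL"
            kill -KILL "$pid" 2>/dev/null
            return 0
        fi
        sleep 1
        waited=$(( waited + 1 ))
    done
    echo "PID $pid exited after SIGTERM"
}

sleep 30 &                        # stand-in for a long-running process
terminate_with_timeout "$!" 3
```

Polling once per second keeps the sketch simple; the point is that SIGKILL is reached only after the process has had a fair chance to clean up.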

Reflection and Next Steps

This deep dive into OS internals and process management has been incredibly rewarding. I've gained a much stronger understanding of how Linux manages processes and resources, which I'm sure will help me write more efficient and robust applications in the future.

Moving forward, I plan to explore more advanced topics like inter-process communication and synchronization, dive deeper into the Linux kernel source code, and practice writing more complex shell scripts for system administration tasks.

Understanding these low-level concepts is crucial for any software engineer. It allows us to make informed decisions about application design and helps in debugging complex issues that may arise from interactions with the operating system.

Remember, in the world of software development, learning is a continuous journey. This exploration of OS internals and process management is just one step, but an important one, in becoming a more knowledgeable and skilled engineer.

What aspects of operating systems fascinate you the most? Let's continue this conversation in the comments below!
