Effective Resampling Techniques for Handling Imbalanced Datasets

Subhradeep Basu

Imbalanced datasets are a common challenge in machine learning, where one class significantly outnumbers the others. This imbalance can skew model performance, leading to biased predictions that favor the majority class. In such scenarios, traditional algorithms often struggle to detect minority class patterns effectively. To address this, resampling techniques play a critical role in balancing datasets by either oversampling the minority class or undersampling the majority class.

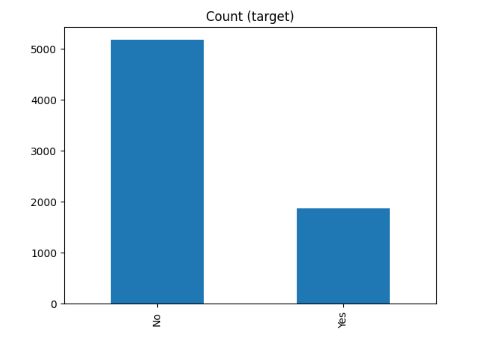

Here, we are using a Customer Churn Prediction dataset that clearly shows an imbalance between customers who leave and those who stay with the company.
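Before choosing a strategy, it helps to quantify the imbalance. The snippet below is a minimal sketch on toy data: the `Churn` column name and the 80/20 split are assumptions for illustration, not values taken from the actual dataset.

```python
import pandas as pd

# Hypothetical toy data standing in for the churn dataset.
# The column name "Churn" (1 = left, 0 = stayed) is an assumption.
df = pd.DataFrame({
    "tenure": range(10),
    "Churn": [0, 0, 0, 0, 1, 0, 0, 1, 0, 0],
})

# Absolute number of rows per class.
class_counts = df["Churn"].value_counts()

# Share of each class, which makes the imbalance easy to read.
class_share = df["Churn"].value_counts(normalize=True)

print(class_counts)
print(class_share)
```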

Strategies

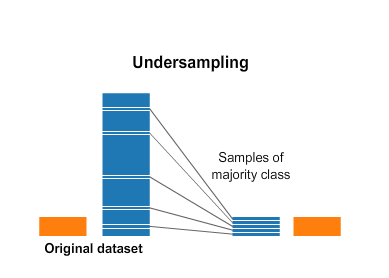

Undersampling the majority class:

Undersampling is a technique used to balance imbalanced datasets by reducing the size of the majority class. This method involves randomly removing instances from the majority class to match the size of the minority class. The trade-off is that the discarded majority samples may contain useful information.

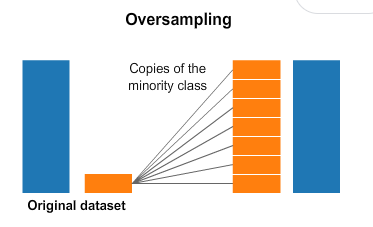
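Random undersampling can be sketched in a few lines of pandas. This is one possible implementation, not necessarily the one shown in the figure; the helper name `random_undersample` and the toy `Churn` column are hypothetical.

```python
import pandas as pd


def random_undersample(df, target, random_state=42):
    """Randomly drop rows so that every class matches the smallest class."""
    n_min = df[target].value_counts().min()
    sampled = [
        group.sample(n=n_min, random_state=random_state)
        for _, group in df.groupby(target)
    ]
    # Concatenate the per-class samples, then shuffle the rows.
    balanced = pd.concat(sampled).sample(frac=1, random_state=random_state)
    return balanced.reset_index(drop=True)


# Hypothetical toy data: 8 customers stayed (0), 2 left (1).
df = pd.DataFrame({
    "tenure": range(10),
    "Churn": [0, 0, 0, 0, 1, 0, 0, 1, 0, 0],
})

balanced = random_undersample(df, "Churn")
print(balanced["Churn"].value_counts())
```

Every class ends up with as many rows as the smallest class, so most of the majority rows are dropped.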

Oversampling the minority class:

Oversampling is a method used to address class imbalance by increasing the number of instances in the minority class. This can be done by duplicating existing minority class samples until the dataset reaches a more balanced ratio. While this approach helps the model learn from the minority class more effectively, it can also increase the risk of overfitting, as the duplicated samples may not introduce any new information.

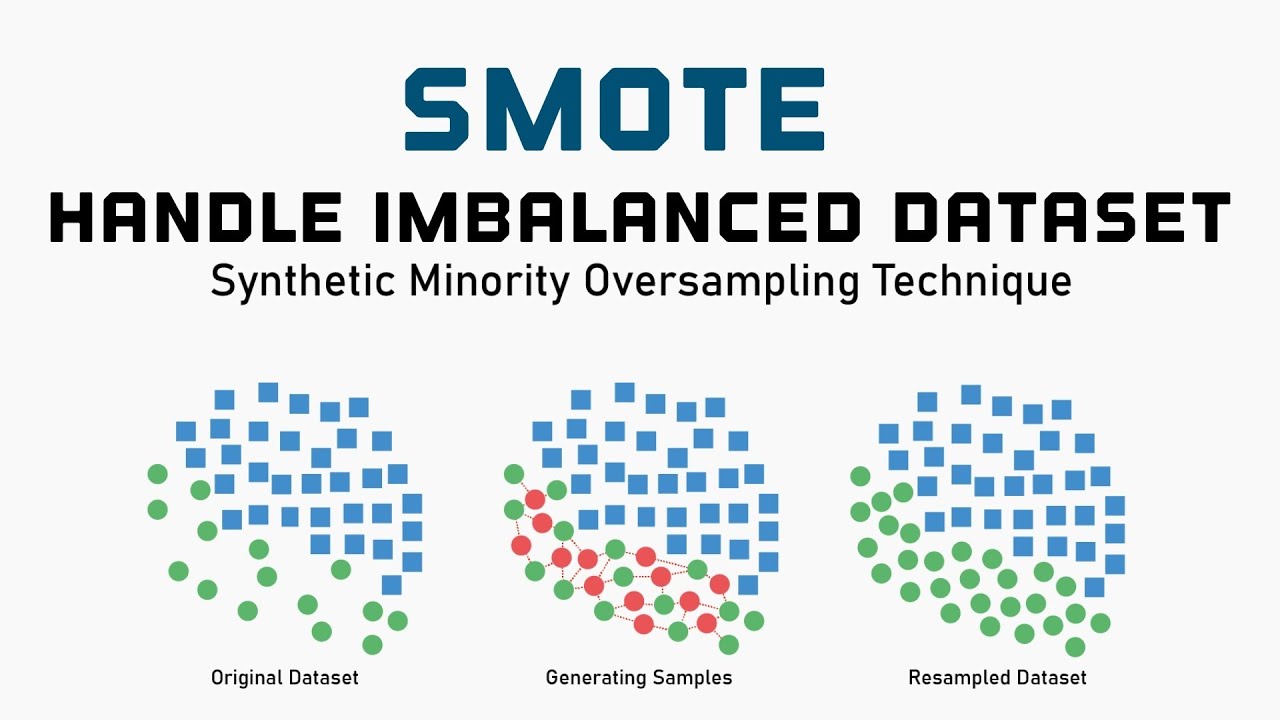
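Oversampling by duplication is essentially sampling the minority class with replacement. The sketch below assumes a pandas DataFrame with a hypothetical `Churn` target column; the helper name `random_oversample` is illustrative only.

```python
import pandas as pd


def random_oversample(df, target, random_state=42):
    """Duplicate rows of smaller classes until they match the largest class."""
    n_max = df[target].value_counts().max()
    parts = []
    for _, group in df.groupby(target):
        extra = n_max - len(group)
        if extra > 0:
            # Sampling with replacement duplicates existing rows;
            # no new information is created.
            duplicates = group.sample(
                n=extra, replace=True, random_state=random_state
            )
            group = pd.concat([group, duplicates])
        parts.append(group)
    # Shuffle so that the duplicated rows are not grouped together.
    balanced = pd.concat(parts).sample(frac=1, random_state=random_state)
    return balanced.reset_index(drop=True)


# Hypothetical toy data: 8 customers stayed (0), 2 left (1).
df = pd.DataFrame({
    "tenure": range(10),
    "Churn": [0, 0, 0, 0, 1, 0, 0, 1, 0, 0],
})

balanced = random_oversample(df, "Churn")
print(balanced["Churn"].value_counts())
```

All minority rows in the result are copies of the two original minority rows, which is why this approach can encourage overfitting.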

Oversampling the minority class using SMOTE:

SMOTE (Synthetic Minority Over-sampling Technique) is an advanced oversampling method that generates synthetic samples for the minority class rather than duplicating existing ones.

It works by selecting a data point from the minority class and finding its nearest neighbors using a k-nearest neighbors (KNN) algorithm. New synthetic data points are created along the line between the selected point and its neighbors, producing more diverse and informative samples.
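The interpolation step described above can be sketched with NumPy. This is a simplified illustration of the idea, not the imbalanced-learn implementation; the function name `smote_sketch` and its parameters are hypothetical, and it assumes at least two minority samples with numeric features.

```python
import numpy as np


def smote_sketch(X_min, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between minority
    points and their nearest minority neighbors (simplified sketch)."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)  # cannot have more neighbors than other points

    # Pairwise Euclidean distances between all minority points.
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]

    base = rng.integers(0, n, size=n_new)   # randomly chosen minority points
    pick = rng.integers(0, k, size=n_new)   # which of the k neighbors to use
    nbr = neighbors[base, pick]
    gap = rng.random((n_new, 1))            # position along the segment, in [0, 1)

    # New point = base point + gap * (neighbor - base point).
    return X_min[base] + gap * (X_min[nbr] - X_min[base])


# Hypothetical minority-class samples with two features.
X_min = np.array([[1.0, 1.0], [2.0, 1.5], [1.5, 3.0], [3.0, 2.0]])
synthetic = smote_sketch(X_min, n_new=6, k=2)
print(synthetic)
```

Because each synthetic point lies on a segment between two existing minority points, the new samples stay inside the region already covered by the minority class.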

Compared with plain duplication, SMOTE can reduce the risk of overfitting because the synthetic samples add variability instead of repeating the same points. This makes it a popular choice for improving model performance on imbalanced datasets.

Python’s imbalanced-learn library provides an easy implementation of SMOTE, allowing you to efficiently apply this technique to your datasets and enhance your model’s ability to handle class imbalance.

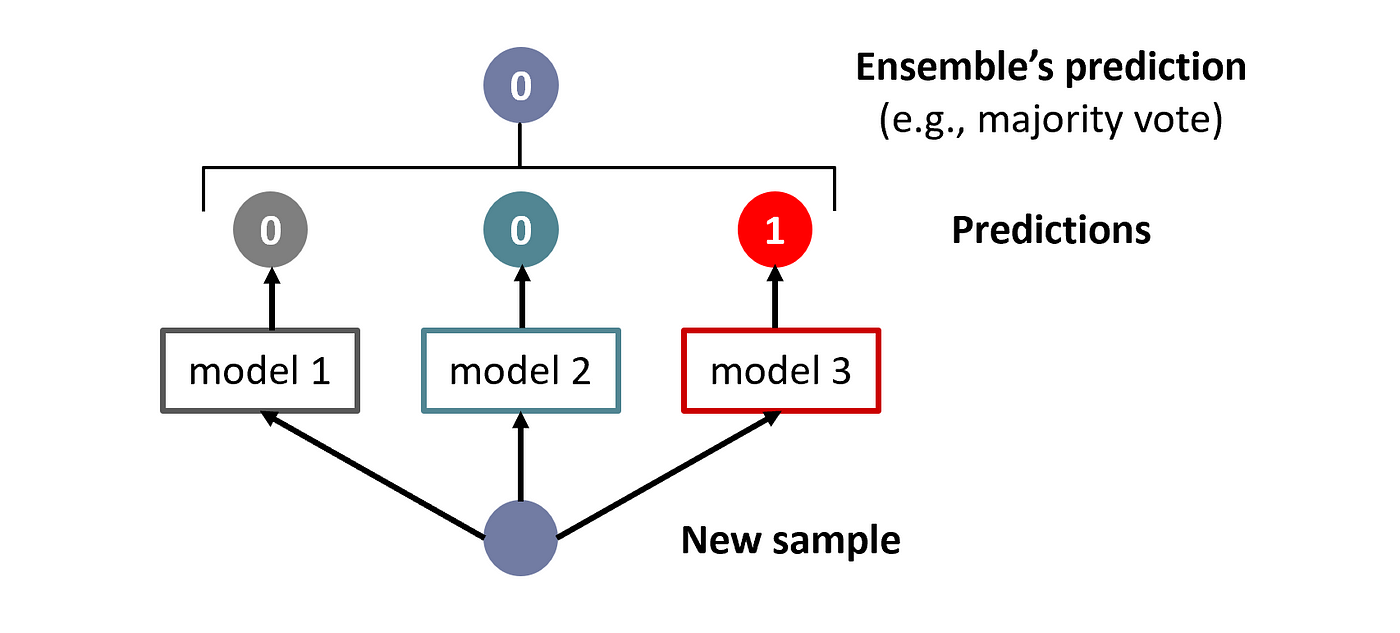

Ensemble method

Bagging (Bootstrap Aggregating) is an ensemble method that can help when working with imbalanced datasets. It draws multiple subsets (bootstrap samples) from the dataset through random sampling with replacement.

Each subset is used to train a separate model. After training, the predictions from all models are combined, usually by majority voting for classification tasks.

This approach reduces model variance and improves stability by leveraging the diverse insights of multiple models, making it effective for dealing with imbalanced data.
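The steps above (bootstrap sampling, one model per sample, majority voting) can be sketched by hand with NumPy and scikit-learn decision trees. The helper names `fit_bagging` and `predict_majority`, the synthetic data, and the choice of 15 trees are all assumptions for illustration; the voting rule shown assumes binary 0/1 labels.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_bagging(X, y, n_models=15, seed=0):
    """Train one decision tree per bootstrap sample."""
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw len(X) row indices with replacement.
        idx = rng.integers(0, len(X), size=len(X))
        tree = DecisionTreeClassifier(random_state=0)
        models.append(tree.fit(X[idx], y[idx]))
    return models


def predict_majority(models, X):
    """Combine predictions by majority vote (binary 0/1 labels only)."""
    votes = np.stack([m.predict(X) for m in models])  # (n_models, n_samples)
    # Class 1 wins when more than half of the models vote for it.
    return (votes.mean(axis=0) > 0.5).astype(int)


# Synthetic imbalanced data: roughly 90% class 0, 10% class 1.
X, y = make_classification(
    n_samples=500, n_features=8, weights=[0.9, 0.1], random_state=0
)

models = fit_bagging(X, y)
preds = predict_majority(models, X)
print("Training accuracy:", (preds == y).mean())
```

Plain bagging does not rebalance the classes by itself. imbalanced-learn also provides a `BalancedBaggingClassifier` that resamples each bootstrap sample before training, which may be a better fit when the imbalance is severe.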

Conclusion

In summary, handling class imbalance is essential for accurate machine learning models. Techniques like undersampling, oversampling (including SMOTE), and ensemble methods help balance datasets and improve performance.
