Master Time and Space Complexity : A Beginner's guide

Akash Das

Akash Das

As you continue your coding journey, you’ll often hear about time and space complexity. These concepts are crucial for writing efficient code, especially when dealing with large datasets or performance-critical applications.

But don’t worry , it’s simpler than it sounds. Let's break it down in a way that's easy to understand and remember.

What is Time Complexity?

Time complexity is a measure of how an algorithm's execution time increases relative to the size of its input. It helps us evaluate the efficiency of an algorithm, particularly for large datasets. Imagine you’re sorting a deck of cards:

If you have 5 cards, sorting them will take a few moments.

If you have 100 cards, it will take longer.

The more cards you have, the longer it takes to finish the task. Time complexity helps us predict how much longer it will take when the input size grows.

What is Space Complexity?

refers to the amount of extra memory an algorithm needs to run, depending on the size of the input. It measures how the memory usage grows as the input size increases.

Real-World Example:

Imagine you’re packing for a trip. If you’re going away for a weekend, you only need a small suitcase (a constant amount of space). But if you’re traveling for a month, you’ll need more clothes, toiletries, and other items, meaning you’ll need a bigger suitcase (more space).

In the same way, an algorithm may need more or less memory depending on how much data it has to process.

If an algorithm only needs a small amount of memory to solve a problem, no matter how large the input is, that's like packing light-efficient and easy. But if the algorithm needs extra memory that grows with the input, it's like needing a bigger suitcase for a longer trip.

"Simplicity is the ultimate sophistication." – Leonardo da Vinci

Why Do Time and Space Complexity Matter?

Let’s say you’re building a website, and the user’s data grows over time. You want your code to be efficient so it doesn’t slow down as more users sign up. Time and space complexity help you predict how well your code will perform.

In time complexity analysis, several notations are used to describe how algorithms perform in relation to input size. Let's break down the key notations: Big O, Big Omega, and Big Theta.

Big O Notation (O):

Big O is the most common notation used to describe the upper bound of an algorithm's time complexity. It tells us the worst-case scenario, i.e., how the runtime grows as input size increases. Big O helps in understanding the maximum time an algorithm can take to execute.

Example:

- If an algorithm has a time complexity of O(n), its runtime increases linearly with input size.

As Alan Kay said, "The best way to predict the future is to invent it." With Big O, we predict the worst case so that we're prepared for the maximum workload.

Big Omega (Ω):

Big Omega provides the lower bound of an algorithm's time complexity. It tells us the best-case scenario—how little time an algorithm could take if everything goes perfectly. While Big O focuses on the maximum time, Big Omega looks at the minimum time.

Example:

- An algorithm with time complexity Ω(n) means its best case scales linearly with input size.

Big Theta (Θ):

Big Theta gives the tight bound, which means it captures both the upper and lower bounds of an algorithm. This notation tells us that the runtime grows at the same rate regardless of the best or worst case. If an algorithm is Θ(n), then its runtime will always be proportional to n, regardless of the case.

Example:

- If an algorithm has time complexity Θ(n), its runtime is neither worse nor better than linear time.

Little O Notation (o):

Definition: Little O describes an upper bound that is not tight. This means the algorithm's growth rate is less than the given complexity, but not necessarily equal to it.

Example: If an algorithm is o(n²), it grows slower than n² but never reaches n². It's an indication that the growth is faster than some smaller complexities like n log n, but slower than n².

Think of Little O as saying, "The algorithm will never grow as fast as this (e.g., n²), but it could be close." It's like running a race and knowing you will finish before a certain time, but you're not sure how much earlier.

Little Omega Notation (ω):

Definition: Little Omega gives a lower bound that is not tight. It means the runtime will be larger than the given bound but won't necessarily reach the next higher level.

- Example: If an algorithm is ω(n), the runtime grows faster than linear time (n), but it won’t necessarily reach something like n log n or n².

Understanding these notations is like learning how to swing Thor's hammer: once you master them, you're prepared to handle the challenges of time complexity analysis with ease!

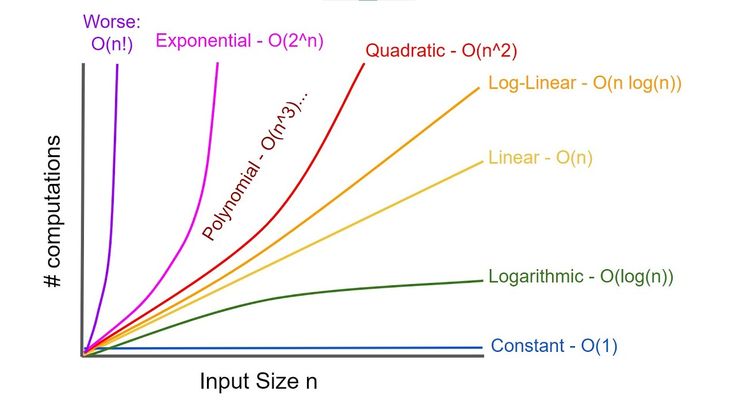

Types Of Time and Space Complexity :

Time Complexity :

O(1) – Constant Time

The algorithm's execution time does not depend on the size of the input.

Real-World Example:

Looking up the phone number of a specific contact in your phone, where you already know the contact name.

Explanation: No matter how many contacts you have, the time to retrieve that one phone number is the same.

O(n) – Linear Time

The execution time increases linearly with the size of the input.

Real-World Example:

Reading a list of names one by one to find a specific name.

Explanation: The larger the list, the longer it takes, because you may have to check every name.

O(log n) – Logarithmic Time

The time grows logarithmically as the input size increases. This happens in algorithms that reduce the problem size with each step.

Real-World Example:

Searching for a word in a dictionary by flipping to the middle and deciding which half to continue searching in (binary search).

Explanation: Each time you cut the search space in half, so you don’t need to check every word.

O(n²) – Quadratic Time

The time increases quadratically as the input size grows. This often occurs in algorithms with nested loops.

Real-World Example:

Comparing every pair of people at a party to see if anyone knows each other.

Explanation: For each person, you need to check every other person, making the number of checks grow rapidly as more people attend.

O(2ⁿ) – Exponential Time

The time grows exponentially with the size of the input, meaning the runtime doubles with each additional input.

Real-World Example:

Solving a problem where you must try all combinations, such as finding the best seating arrangement for a group of people.

Explanation: As the group size grows, the number of combinations increases rapidly, requiring much more time.

Space Complexity :

O(1) – Constant Space :

The algorithm uses the same amount of memory, regardless of the input size.

Real-World Example:

- Storing a few key facts (like your name, address, and phone number) in your phone’s memory.

Explanation:

- Whether you have one or a million contacts, these details take up the same small amount of space.

O(n) – Linear Space :

The memory usage grows directly with the size of the input.

Real-World Example:

- Storing a list of groceries. The more items you add, the more memory is needed to store the list.

Explanation:

- As you add more items to the list, the space required to store them increases proportionally.

O(log n) – Logarithmic Space

The memory usage grows in a logarithmic way as the input size increases, meaning it grows more slowly than the input.

Real-World Example:

- Suppose you’re running a tournament where half the players are eliminated each round. You only need to keep track of the remaining players.

Explanation:

- You reduce the number of players (input size) with each round, so memory usage increases more slowly compared to the number of total participants.

O(n²) – Quadratic Space :

The memory grows quadratically with the size of the input, usually because the algorithm stores pairs or combinations of elements.

Real-World Example:

You’re planning a seating chart for a party where everyone has preferences for who they want to sit next to. You create a matrix to store each person's seating preference with everyone else.

Explanation: If there are 10 people, you need a 10x10 grid to store everyone's preferences.

Final Thoughts :

Time and space complexity might sound complex, but they’re just tools to help you think about how your code performs. It’s like packing for a trip—you want to make sure you have everything you need, but you don’t want to overpack and make things harder.

Quote to Remember:

“The best code is simple, but not too simple."

Focus on writing code that balances simplicity and efficiency. Understanding time and space complexity will help you do just that!

Now that you have a grasp on time and space complexity, you'll be better equipped to write efficient code in the future. Next time, we’ll dive deeper into arrays and explore how these concepts apply in real programming scenarios.

Happy coding!

Subscribe to my newsletter

Read articles from Akash Das directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Akash Das

Akash Das

Java Developer | Tech Blogger | Spring Boot Enthusiast | DSA Advocate*I specialize in Java, Spring Boot, and Data Structures & Algorithms (DSA). Follow my blog for in-depth tutorials, best practices, and insights on Java development, Spring Boot, and DSA. Join me as I explore the latest trends and share valuable knowledge to help developers grow.