Vector Image Search with Azure AI

TJ Gokken

One of the most exciting applications of machine learning is enabling visual searches. Imagine being able to upload a picture to your e-commerce app and instantly find similar products, or use image search to quickly locate photos with similar content on social media.

In this article, I would like to explore how we can achieve this with Microsoft Azure. We will build a console application that uses Azure's Computer Vision API to extract features (tags) from images, converts those tags into feature vectors, and performs a similarity search by comparing the vectors. Query images are tagged in real time.

So, let’s dig in.

What is Vector Search?

In machine learning, data is represented as arrays of numbers called vectors. A vector search takes a query vector and finds the data points closest to it in a high-dimensional space, according to some distance measure.

Image Search App

In our case, we will convert each image in our dataset into a vector representing the features (tags) that the Azure Computer Vision API detects. We will then extract the tags from a query image and compare its vector with the ones in our dataset to find the most similar image using a simple Euclidean distance.

One of the challenges with vector-based searches is ensuring that feature vectors have consistent sizes. In our case, we need to ensure that each image's vector covers the same set of tags, even if not every image has every tag.

Before we start, you will need an Azure account with a Computer Vision resource created in it. The resource provides the subscription key and endpoint that the code needs.

First, we start by creating a .NET Core Console App. Once we have the empty shell of an app, we need to add the following NuGet packages:

    dotnet add package Microsoft.Azure.CognitiveServices.Vision.ComputerVision
    dotnet add package MathNet.Numerics

These packages will allow us to interact with Azure’s Computer Vision API and handle vector math for comparing images.

Once these packages are installed, our code is actually pretty straightforward:

    using System;
    using System.Collections.Generic;
    using System.Threading.Tasks;
    using MathNet.Numerics;
    using Microsoft.Azure.CognitiveServices.Vision.ComputerVision;
    using Microsoft.Azure.CognitiveServices.Vision.ComputerVision.Models;

    namespace AzureVectorImageSearch;

    internal class Program
    {
        // Add your Computer Vision subscription key and endpoint here
        private static readonly string subscriptionKey = "YOUR_COMPUTER_VISION_SUBSCRIPTION_KEY";
        private static readonly string endpoint = "YOUR_COMPUTER_VISION_ENDPOINT";

        private static async Task Main(string[] args)
        {
            var client = new ComputerVisionClient(new ApiKeyServiceClientCredentials(subscriptionKey))
            {
                Endpoint = endpoint
            };

            // Sample public domain image URLs with associated labels
            var images = new Dictionary<string, string>
            {
                { "https://live.staticflickr.com/5800/30084705221_6001bbf1ba_b.jpg", "City" },
                { "https://live.staticflickr.com/4572/38004374914_6b686d708e_b.jpg", "Forest" },
                { "https://live.staticflickr.com/3446/3858223360_e57140dd23_b.jpg", "Ocean" },
                { "https://live.staticflickr.com/7539/16129656628_ddd1db38c2_b.jpg", "Mountains" },
                { "https://live.staticflickr.com/3168/2839056817_2263932013_b.jpg", "Desert" }
            };

            // Store the vectors and all unique tags found
            var imageVectors = new Dictionary<string, double[]>();
            var allTags = new HashSet<string>();

            // Extract features for all images and collect all unique tags
            var imageTagConfidenceMap = new Dictionary<string, Dictionary<string, double>>();
            foreach (var imageUrl in images.Keys)
            {
                var imageTags = await ExtractImageTags(client, imageUrl);
                imageTagConfidenceMap[imageUrl] = imageTags;
                allTags.UnionWith(imageTags.Keys); // Collect unique tags
                Console.WriteLine($"Extracted tags for {imageUrl}");
            }

            // Create fixed-size vectors for each image based on all unique tags
            foreach (var imageUrl in images.Keys)
            {
                var vector = CreateFixedSizeVector(allTags, imageTagConfidenceMap[imageUrl]);
                imageVectors[imageUrl] = vector;
            }

            // Perform a search for the most similar image to a query image
            var queryImage = "https://live.staticflickr.com/3697/8753467625_e19f53756c_b.jpg"; // New forest image
            var queryTags = await ExtractImageTags(client, queryImage); // Extract tags for the new image
            var queryVector = CreateFixedSizeVector(allTags, queryTags); // Create vector based on those tags
            var mostSimilarImage = FindMostSimilarImage(queryVector, imageVectors); // Search for the closest match

            Console.WriteLine($"This picture is most similar to: {images[mostSimilarImage]}");
        }

        // Extracts tags and their confidence scores from an image
        private static async Task<Dictionary<string, double>> ExtractImageTags(ComputerVisionClient client, string imageUrl)
        {
            var features = new List<VisualFeatureTypes?> { VisualFeatureTypes.Tags };
            var analysis = await client.AnalyzeImageAsync(imageUrl, features);

            // Create a dictionary of tags and their confidence levels
            var tagsConfidence = new Dictionary<string, double>();
            foreach (var tag in analysis.Tags) tagsConfidence[tag.Name] = tag.Confidence;

            return tagsConfidence;
        }

        // Create a fixed-size vector based on all possible tags.
        // allTags must not change between calls, so every vector uses the same tag order.
        private static double[] CreateFixedSizeVector(HashSet<string> allTags, Dictionary<string, double> imageTags)
        {
            var vector = new double[allTags.Count];
            var index = 0;

            // If the image has the tag, use its confidence; otherwise use 0
            foreach (var tag in allTags)
                vector[index++] = imageTags.TryGetValue(tag, out var confidence) ? confidence : 0.0;

            return vector;
        }

        // Find the most similar image using Euclidean distance
        private static string FindMostSimilarImage(double[] queryVector, Dictionary<string, double[]> imageVectors)
        {
            string? mostSimilarImage = null;
            var smallestDistance = double.MaxValue;

            foreach (var imageVector in imageVectors)
            {
                var distance = Distance.Euclidean(queryVector, imageVector.Value);
                if (distance < smallestDistance)
                {
                    smallestDistance = distance;
                    mostSimilarImage = imageVector.Key;
                }
            }

            // Fail clearly instead of returning null when there is nothing to compare against
            return mostSimilarImage ?? throw new InvalidOperationException("The dataset contains no images.");
        }
    }

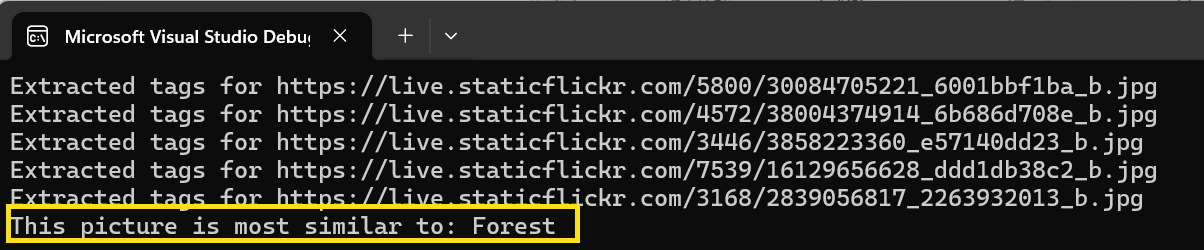

First, we define a dataset of 5 publicly available images and label each one so we can easily identify the type of image. Then, we extract all possible tags from each image using the Computer Vision API.

The Computer Vision API extracts tags, and we convert these tags into feature vectors. If a tag is missing for an image, we assign a value of 0 for that tag. This allows us to keep all vectors the same size, which is crucial for comparison.
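To make the zero-filling concrete, here is a minimal sketch with two made-up images. The tag names, confidence values, and the `ToVector` helper are assumptions for illustration only; real tags would come from the Computer Vision API. Unlike the listing above, this sketch keeps the tag vocabulary in a `SortedSet` so the position of each tag is explicit, which is a design choice of the sketch and not something the application requires.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Maps a tag/confidence dictionary onto a fixed tag order; tags the image lacks become 0.
double[] ToVector(IEnumerable<string> tagOrder, IReadOnlyDictionary<string, double> scores) =>
    tagOrder.Select(tag => scores.TryGetValue(tag, out var confidence) ? confidence : 0.0).ToArray();

// Hypothetical tags and confidences; real values would come from the Computer Vision API.
var forest = new Dictionary<string, double> { ["tree"] = 0.99, ["outdoor"] = 0.95 };
var ocean = new Dictionary<string, double> { ["water"] = 0.98, ["outdoor"] = 0.97 };

// Shared vocabulary: every tag seen in any image, sorted so the order is explicit.
var vocabulary = new SortedSet<string>(forest.Keys.Concat(ocean.Keys), StringComparer.Ordinal);

Console.WriteLine(string.Join(", ", vocabulary));        // outdoor, tree, water
Console.WriteLine(ToVector(vocabulary, forest).Length);  // 3
Console.WriteLine(ToVector(vocabulary, ocean).Length);   // 3
```

Both images end up with three-element vectors even though each one only has two tags: the forest vector has a 0 in the "water" position, and the ocean vector has a 0 in the "tree" position.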

Our little application uses these tags to generate feature vectors and compares them using Euclidean distance.

We use this measure because it is easy to compute and understand: it is the straight-line distance between two points (here, our vectors) in a multidimensional space. It is commonly used in machine learning and computer vision when working with feature vectors, and it works well in low to moderately high dimensional spaces such as our tag-based vectors. When features are numerically meaningful, like the confidence scores from Azure’s tags, Euclidean distance is a natural choice for measuring similarity.

Now that we have processed our dataset and got it ready for matching, it is time to introduce a new image and see if we can determine which entry in our dataset this new image is most similar to.

To do that, we extract this new image’s tags in real-time and create a vector for it based on the tags we collected from the dataset. Any tag of the query image that never appeared in the dataset has no slot in the vector and is ignored. We then calculate the similarity between the query image and each image in the dataset, using Euclidean distance. The image with the smallest distance is considered the most similar one.
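As a small worked example, the sketch below computes the distance by hand instead of calling `Distance.Euclidean` from MathNet.Numerics. The `EuclideanDistance` helper and the three vectors are made up for illustration; assume each position holds the confidence for one tag (tree, water, sky).

```csharp
using System;

// Square root of the sum of squared differences between matching positions.
double EuclideanDistance(double[] a, double[] b)
{
    if (a.Length != b.Length)
        throw new ArgumentException("Vectors must have the same length.");

    var sum = 0.0;
    for (var i = 0; i < a.Length; i++)
    {
        var diff = a[i] - b[i];
        sum += diff * diff;
    }
    return Math.Sqrt(sum);
}

// Hypothetical confidence vectors over the tags (tree, water, sky).
double[] query = { 0.9, 0.0, 0.6 };
double[] forest = { 0.9, 0.0, 0.2 };  // differs from the query only in "sky"
double[] ocean = { 0.0, 0.9, 0.6 };   // differs in both "tree" and "water"

// The forest vector is closer to the query than the ocean vector.
Console.WriteLine(EuclideanDistance(query, forest) < EuclideanDistance(query, ocean)); // True
```

The length check matters here: the distance is only meaningful when both vectors use the same tag order and the same number of tags, which is why the fixed-size vectors are built first.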

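The “forest” image we have in our dataset is the following one: https://live.staticflickr.com/4572/38004374914_6b686d708e_b.jpg

For testing the application, I am going to use the following image: https://live.staticflickr.com/3697/8753467625_e19f53756c_b.jpg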

When we run our application, we see that the app correctly determines that our image is a forest.

By extracting tags in real-time from the query image, we can compare images dynamically, even if the query image isn't part of the preloaded dataset. This makes the system flexible and allows for new images to be analyzed on the fly.
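The application reports only the single closest image, but the same comparison can produce a ranked list, which is usually what a search feature shows. The following is a rough sketch under that assumption; `RankBySimilarity`, `EuclideanDistance`, and the sample vectors are hypothetical and not part of the original application.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Straight-line distance; assumes both vectors have the same length.
double EuclideanDistance(double[] a, double[] b) =>
    Math.Sqrt(a.Zip(b, (x, y) => (x - y) * (x - y)).Sum());

// Returns every dataset entry with its distance to the query, closest first.
List<(string Label, double Distance)> RankBySimilarity(
    double[] queryVector, IReadOnlyDictionary<string, double[]> vectors) =>
    vectors.Select(entry => (Label: entry.Key, Distance: EuclideanDistance(queryVector, entry.Value)))
           .OrderBy(result => result.Distance)
           .ToList();

// Hypothetical vectors over the tags (tree, water, sand).
var dataset = new Dictionary<string, double[]>
{
    ["Forest"] = new[] { 0.9, 0.1, 0.0 },
    ["Ocean"] = new[] { 0.0, 0.9, 0.2 },
    ["Desert"] = new[] { 0.1, 0.0, 0.9 },
};
double[] query = { 0.8, 0.2, 0.0 };

// Prints the labels in order of increasing distance: Forest, Ocean, Desert.
foreach (var (label, distance) in RankBySimilarity(query, dataset))
    Console.WriteLine($"{label}: {distance:F3}");
```

Taking the first few entries of the ranked list gives a "top matches" result instead of a single answer.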

Behind The Scenes

So, what’s happening here? We did not explicitly train any models, so how did the application manage to identify the correct image type?

In this instance, our dataset effectively functions as the “model” because it contains the reference information (tags and vectors) that we compare against when performing a query.

We are using Azure’s pre-trained Computer Vision API to generate features (tags). So, the “training” is implicitly embedded in Azure’s pre-trained model.

This is sometimes referred to as a non-parametric approach because our application does not learn explicit parameters (as a deep learning model does) but instead compares data points (images) directly, much like a nearest-neighbor lookup.

This method can work well in some cases because it avoids the complexity of training a custom machine learning model but still achieves meaningful results using pre-trained intelligence.

With this approach, you can build a basic image search engine that leverages Azure’s pre-trained Computer Vision API for real-time tagging. While this example uses Euclidean distance, you can experiment with other similarity measures like cosine similarity or even build your own custom models using Azure Custom Vision.
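If you want to try cosine similarity, a minimal sketch might look like the one below. The `CosineSimilarity` helper and the sample vectors are assumptions for illustration. Cosine similarity compares the direction of two vectors rather than their magnitude, so two images with the same tags at uniformly lower confidence still score as near-identical.

```csharp
using System;

// Cosine of the angle between two vectors: dot product divided by the product of their lengths.
double CosineSimilarity(double[] a, double[] b)
{
    if (a.Length != b.Length)
        throw new ArgumentException("Vectors must have the same length.");

    double dot = 0.0, normA = 0.0, normB = 0.0;
    for (var i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }

    // An all-zero vector (an image sharing no known tags) has no direction; treat it as "not similar".
    if (normA == 0.0 || normB == 0.0) return 0.0;

    return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
}

// Hypothetical vectors: same tag proportions, but one has half the confidence.
double[] strong = { 0.9, 0.6 };
double[] weak = { 0.45, 0.3 };
double[] unrelated = { 0.0, 0.0 };

Console.WriteLine(CosineSimilarity(strong, weak) > 0.999);  // True: same direction
Console.WriteLine(CosineSimilarity(strong, unrelated));     // 0
```

Note that the comparison flips: with Euclidean distance the best match has the smallest value, while with cosine similarity the best match has the largest value.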

This framework is a powerful starting point for building scalable and flexible image search systems, whether for e-commerce (where customers can find products based on images), content management (organizing large collections of photos), or social media applications (discovering similar content).

Here is the source code: https://github.com/tjgokken/AzureVectorImageSearch
