Mastering Snowflake’s Advanced Performance Features (2025): How to Maximize Benefits and Control Costs

John Ryan

John Ryan

Snowflake offers advanced features that empower organizations to handle terabytes of data more efficiently and effectively. Query Acceleration Service, Cluster keys and Search Optimization Service stand out for their ability to significantly improve query performance against massive data volumes. However, these powerful capabilities have cost implications that must be carefully managed.

This article will briefly overview these incredible performance features, point out some potential risks, and discuss how best to avoid them.

Embracing Snowflake's Advanced Performance Capabilities

Query Acceleration Service

The Query Acceleration Service is designed to improve the performance of complex queries, reduce execution time, and enhance the end-user experience. Accelerating demanding queries helps organizations deliver faster insights. However, it’s important to be aware that the service draws on additional compute resources, meaning careful cost management is essential.

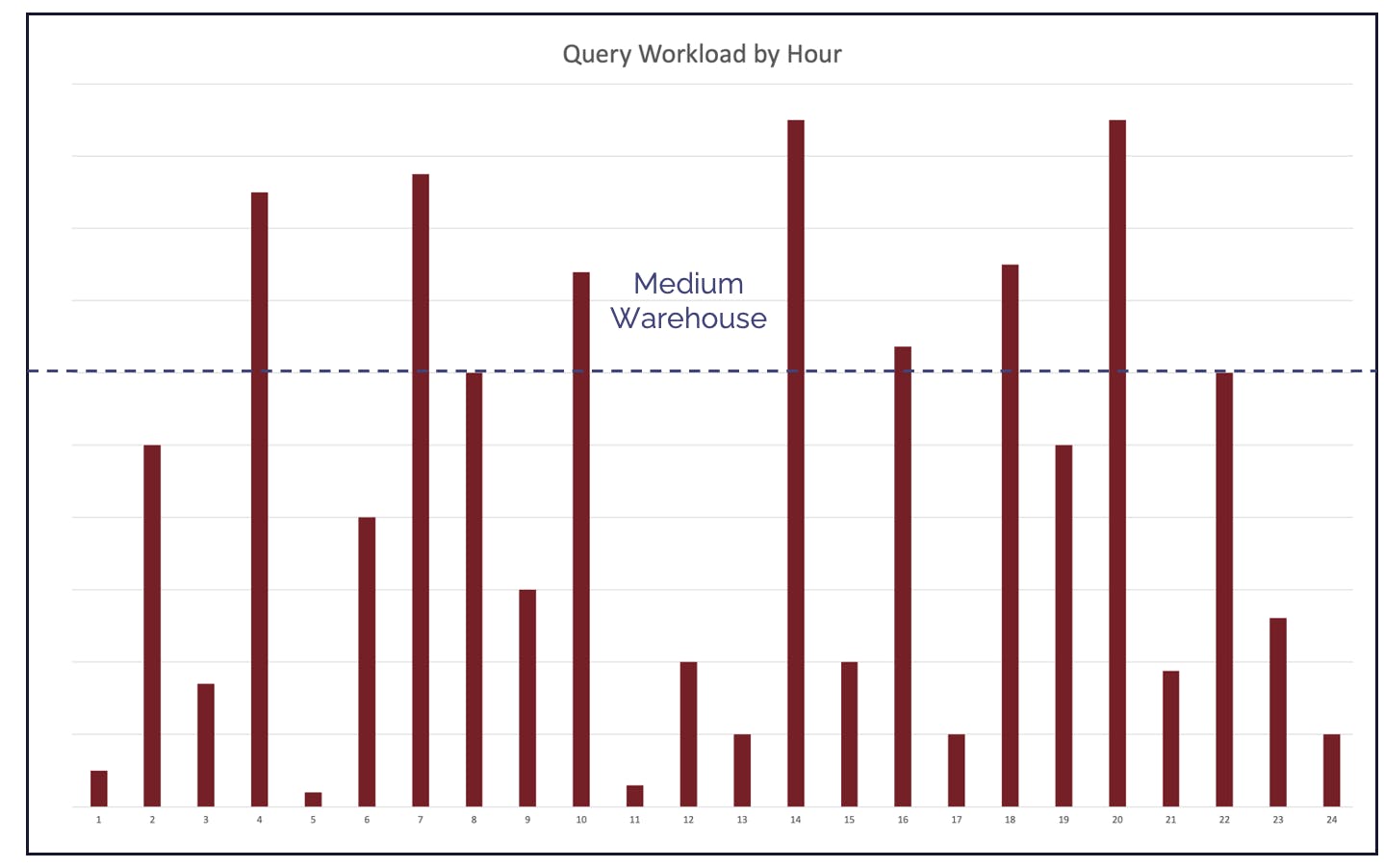

The diagram below illustrates a typical use case for Query Acceleration Service. It shows the query workload across a 24-hour period on a MEDIUM-size warehouse.

As shown, the workload varies significantly throughout the day. Most queries consume between 10% and 80% of warehouse capacity — but there are notable spikes where complex, long-running queries exceed 100% of available compute. It’s a common misconception among Data Engineers that Snowflake’s Multi-Cluster Warehouse feature will handle this type of workload, but in this case, it won’t.

A better solution is to enable Snowflake’s Query Acceleration Service (QAS). QAS automatically draws additional compute from a serverless pool to accelerate heavy queries. This can turn queries that previously ran for hours into results delivered in minutes — or even seconds.

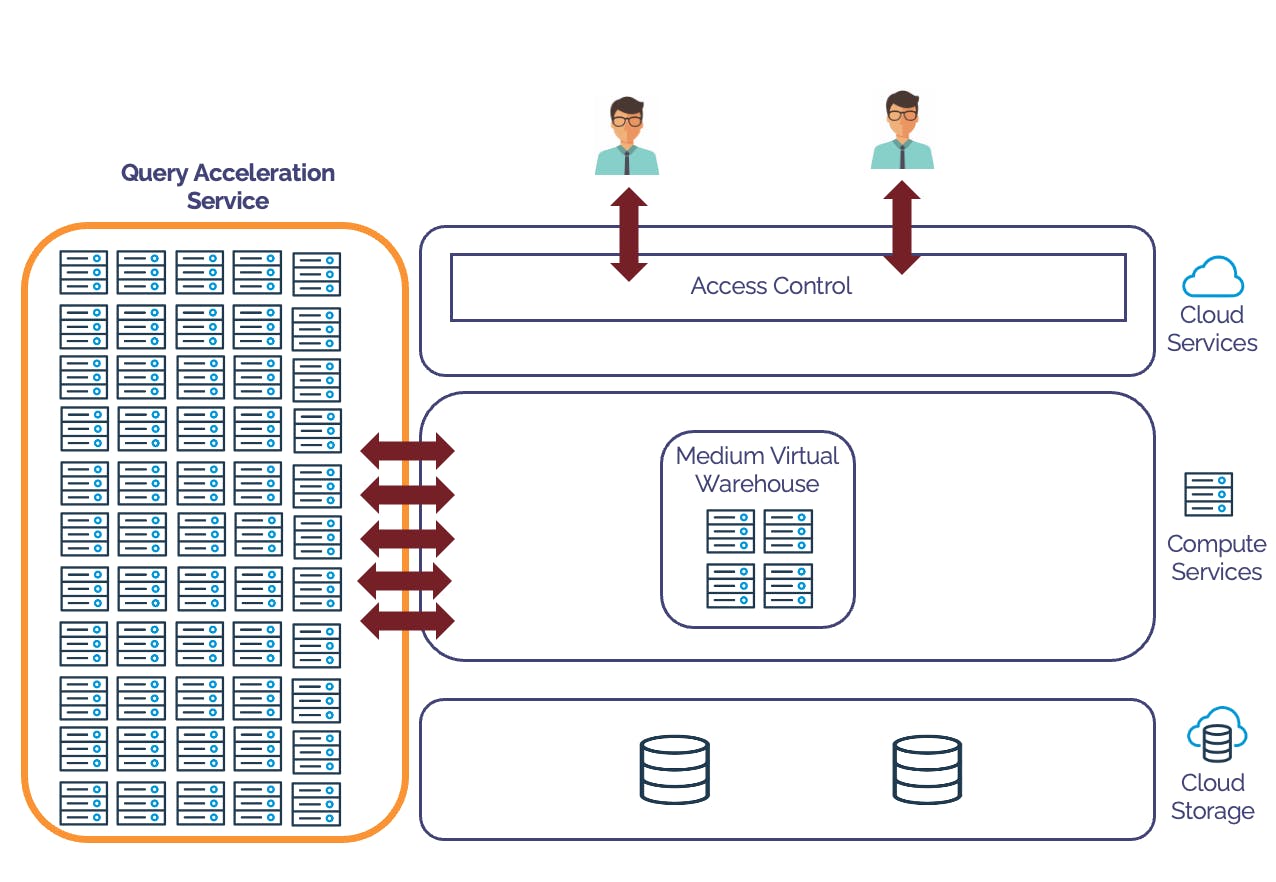

The diagram above illustrates how Query Acceleration Service works. Once the warehouse is configured to use QAS, the long-running, more complex queries will automatically draw upon a pool of compute resources. This has the advantage that queries that only need a MEDIUM size warehouse run as usual, but Snowflake can offload long query scans and some sort of operations to the Query Acceleration Service, thereby improving query performance.

“With great power comes great responsibility.” — Voltaire (and Spider-Man)

As with any powerful feature, misuse of Query Acceleration Service can lead to unexpectedly high costs. It’s not advisable to enable QAS on every virtual warehouse without careful consideration.

I’ve seen cases where a Data Engineer accidentally triggered runaway spend — for example, running a Cartesian join late on a Friday against an X4-LARGE warehouse. By default, Snowflake allows queries to run for up to 172,800 seconds (two days), and the issue wasn’t spotted until Monday — by which time the bill was substantial.

It’s easy to assume that enabling QAS on a smaller warehouse (such as XSMALL) will mitigate the risk — but in reality, QAS can still amplify costs on any size warehouse.

Fortunately, there are safeguards. You can control risk by applying STATEMENT_TIMEOUT_IN_SECONDS to limit query duration. However, use caution — you don’t want to terminate legitimate long-running production queries inadvertently. Another effective option is to deploy a Snowflake Resource Monitor to help manage usage and prevent unexpected charges.

To avoid the risk of runaway queries, you can place an automatic timeout on the user Session or Warehouse by setting the STATEMENT_TIMEOUT_IN_SECONDS. However, be cautious not to terminate important, long-running queries on your production system inadvertently. Another option to consider is a Snowflake Resource Monitor.

Zero Copy Cloning

Zero Copy Clones allows users to create instant copies of databases, schemas, or tables — without physically duplicating the underlying data. This makes it easy to rapidly provision development, testing, or analytics environments. While cloning itself does not incur additional storage costs, it’s important to monitor how these clones are used — particularly the compute resources — to ensure cost efficiency over time.

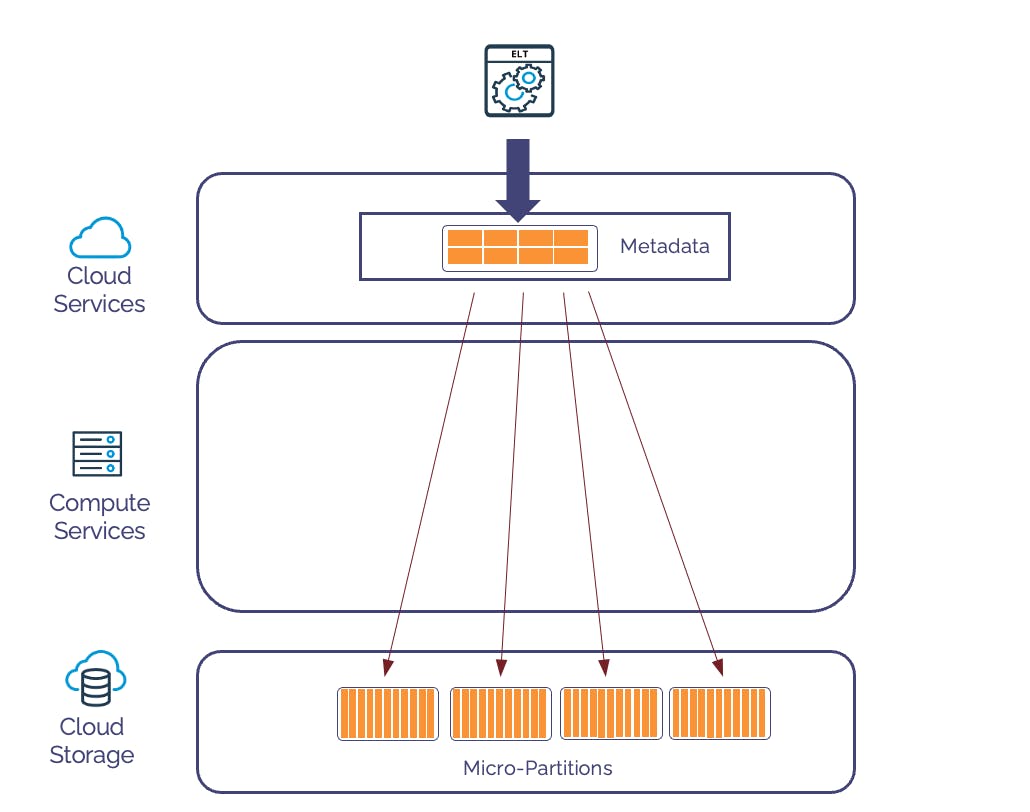

The diagram above illustrates how Snowflake physically stores data: a set of small metadata pointers is maintained in the Cloud Services layer, while the actual data resides in 16MB micro-partitions within the storage layer. This architecture enables Snowflake to clone gigabytes — or even terabytes — of data in seconds.

Zero Copy Cloning makes it possible to create full read/write clones of production data, ideal for non-production environments such as development, testing, and experimentation. For example:

An analyst might clone the past year of customer data to safely test an ML model.

A development team might clone a snapshot of production data to support application development.

While cloning is a powerful and flexible feature, it comes with an important caveat: it can result in unexpected storage costs over time. The initial clone operation itself does not duplicate physical data. However, as the source data is updated or deleted, aged-out micro-partitions gradually accumulate — increasing overall storage usage.

Fortunately, it’s possible to monitor and manage this. One useful approach is to identify old or redundant clones — particularly those several months old — and assess potential storage savings. The article Snowflake Zero Copy Clones and Data Storage provides a detailed guide to this technique.

Clustering Keys

Clustering is one of the most misunderstood Snowflake performance tuning features. While it has the potential for huge performance improvements, customers sometimes find the cost outweighs the benefits, most often because cluster keys have been inappropriately used.

Adding clustering keys to large Snowflake tables optimizes the table storage layout based on the clustering key columns.

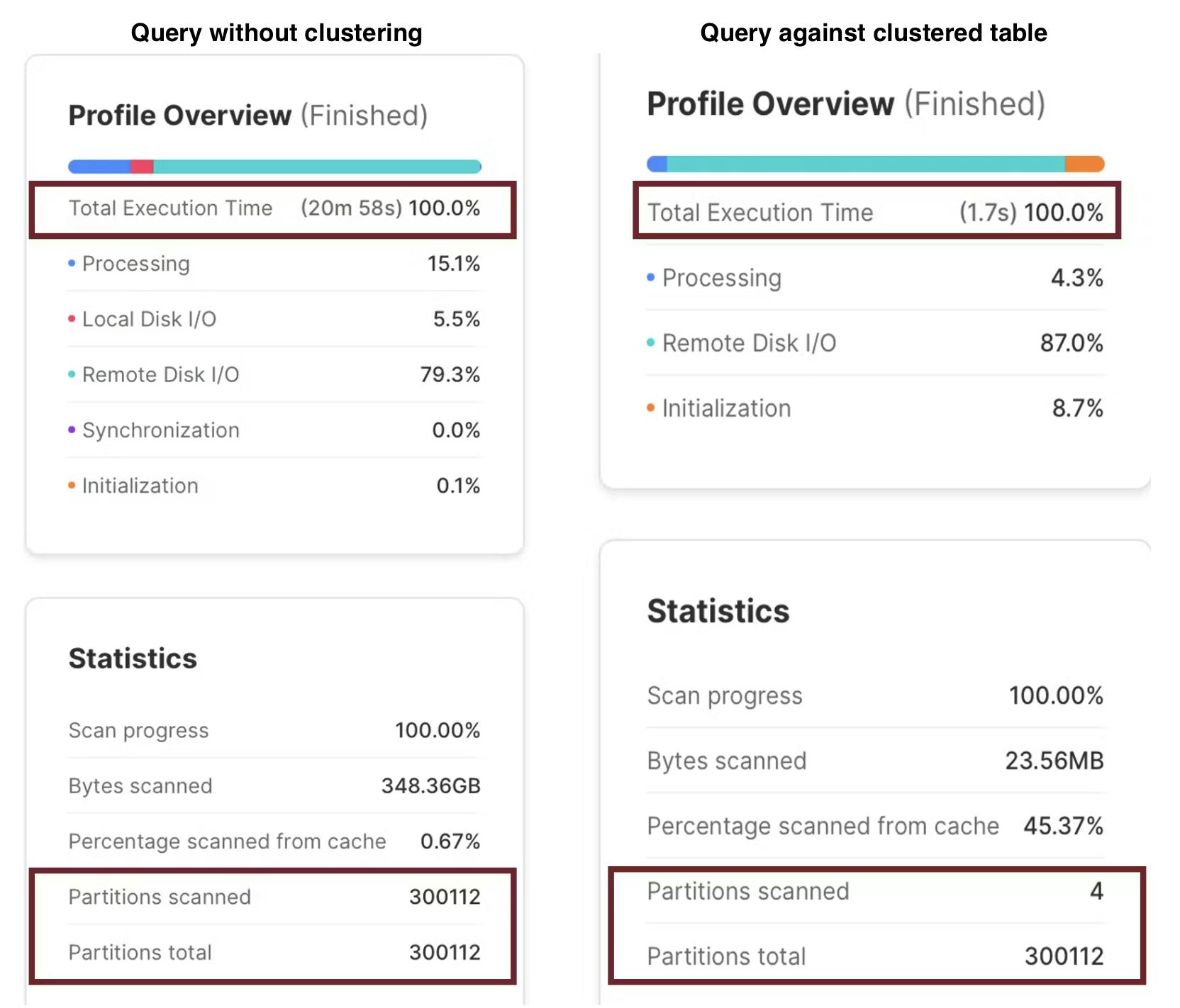

The screenshot below illustrates the potentially massive query performance gains from clustering, which can dramatically speed up query filtering by clustered columns.

The above results were produced by the same SQL statement below.

select *

from web_sales

where ws_sold_date_sk = 2451161

and ws_item_sk = 463520;

The example above shows how clustering can dramatically improve performance. In this case, a table containing over one billion Customer Sales Transactions was clustered by WS_SOLD_DATE_SK and WS_ITEM_SK — columns used in the SQL WHERE clause. The result? The query ran over 700 times faster, completing in just 1.7 seconds.

However, while clustering can deliver impressive performance gains, it carries a cost trade-off if not carefully deployed. Snowflake must continually maintain cluster keys through background re-clustering — and this process consumes credits.

The choice of clustering key is critical. If the key is poorly selected — for example, clustering on a low-cardinality column with many duplicate values (such as GENDER) — the system still incurs background clustering costs, but query performance gains will be minimal.

Conversely, clustering on a high-cardinality or unique key can provide outstanding performance — but at the risk of high re-clustering costs, especially if the table is subject to frequent INSERT, UPDATE, or DELETE operations. In such cases, the cost of maintaining the clustering can outweigh the performance benefits.

The Best Practices for Snowflake Clustering include:

Carefully select the Clustering Key, which should often be included as a predicate in the WHERE clause. For example, a large Fact Table is often queried by a DATE field or DATE_KEY. This often makes the “Date” column a good candidate for clustering.

Avoid Clustering on small tables as clustering works best on larger tables, (Snowflake recommends table sizes over 1TB in size).

Avoid Clustering Keys on tables with many merge, update, or delete operations, as the cost of automatic background re-clustering operations can outweigh the performance gains.

The bottom line is clustering can work miracles for query response times but should be applied judiciously!

Search Optimization Service

While Snowflake Cluster Keys are often misunderstood, confusion around Search Optimization Service (SOS) is even more common. SOS is designed to accelerate queries that return a small, highly selective subset of rows — typically from tables containing millions or even billions of entries.

Unlike Data Clustering, which physically sorts rows within the table, SOS builds an index-like structure alongside the table and performs fast lookup operations. It’s often described as a tool for finding the proverbial needle in a haystack— ideal when your queries need to locate just a few rows in a vast dataset.

The diagram below illustrates this process: SOS helps the SQL engine leverage its index-like structure to significantly reduce the amount of data scanned — often resulting in dramatic performance gains.

Cluster Keys are typically used on columns with many duplicate values — for example, to return thousands of sales records for a given day. They work particularly well with range-based filters (e.g. WHERE DATE > '22-May-2024').

In contrast, Search Optimization is designed for queries that return a very small number of rows from large tables. It excels when used with equality filters (e.g. WHERE CUSTOMER_ID = 12345), where you’re trying to pinpoint a specific row or small set of rows.

Take for example, the query below:

select *

from customer_sales

where date_sold > '14-JUN-2003'

and date_sold <= '30-JUN-2003';

Assuming this returns 250,000 rows from a table with over a billion entries, the DATE_SOLD column might be a good candidate for a Clustering Key. However, it would be a poor candidate for Search Optimization Service.

A good example query for Search Optimization Service includes equality filters against one or more columns that return relatively few rows. For example:

select *

from store_sales

where ss_customer_sk = 41657562;

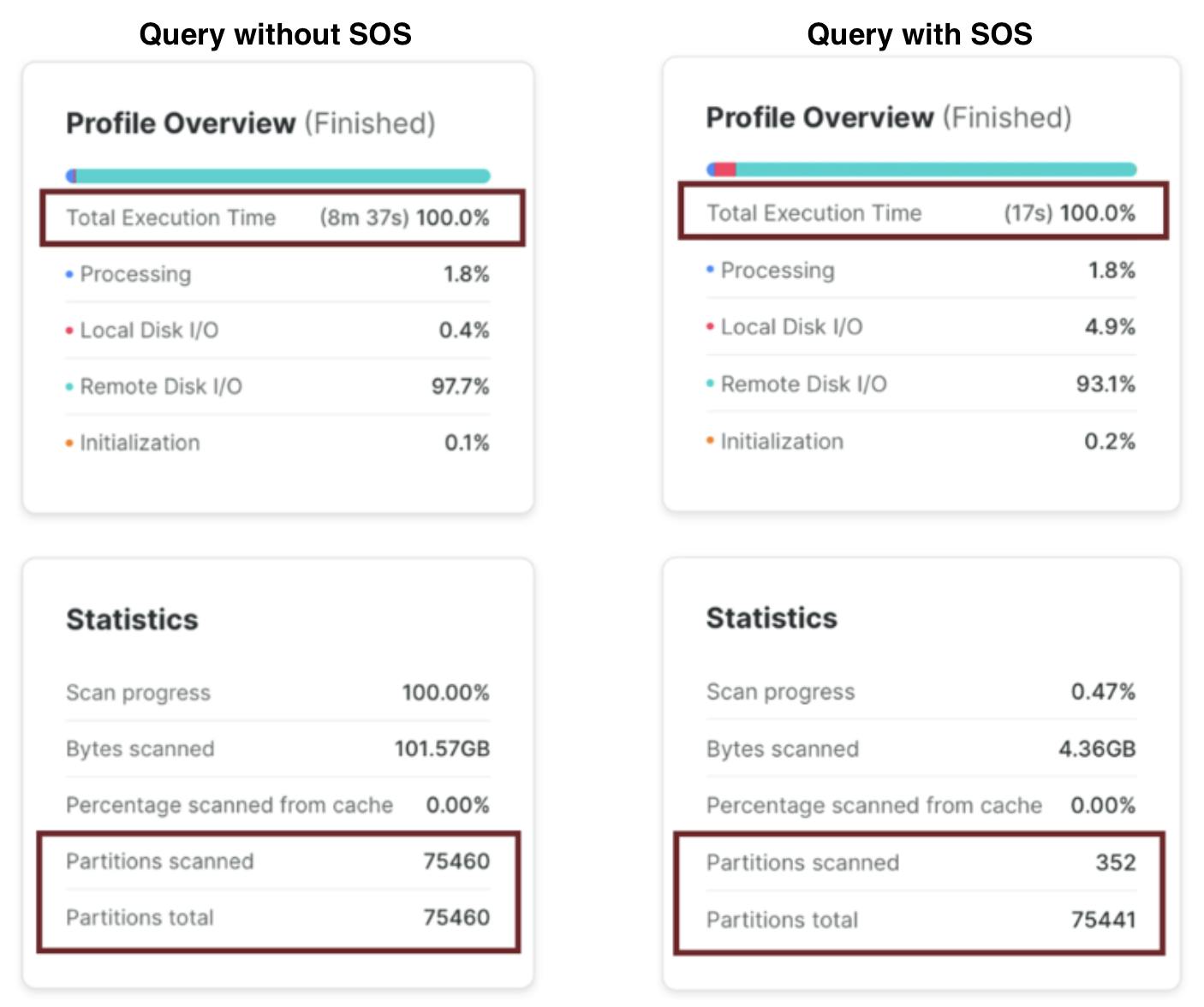

Assuming the above query returns just a few hundred rows from a table with over a billion entries, this can lead to huge performance improvements. The screenshot below shows the results with and without Search Optimization Service:

In this example, the query without Search Optimization performed a full table scan of over 75,000 micro-partitions, taking roughly 8½ minutes. With Search Optimization enabled, the same query scanned just 352 micro-partitions, returning results in 17 seconds — a 30x performance improvement.

However, it’s important to understand the trade-offs. Like Clustering, the index-like structure behind Search Optimization (not to be confused with a traditional database index) must be built initially and then automatically maintained by a background Snowflake process. This can lead to unexpected storage costs — in some cases, the structure may grow nearly as large as the original table — and ongoing credit costs for maintenance.

As with Clustering, these costs can sometimes outweigh the performance benefits — especially if Search Optimizationis deployed on the wrong use case. Because SOS is a specialized feature, best suited for point lookup queries, it’s critical to evaluate carefully before enabling it. If used incorrectly, it can result in high costs with little or no performance gain.

The Best Practices to deploy Snowflake Search Optimization include:

Deploy SOS on tables with many equality or IN filters or queries against text strings using substring and regular expressions to filter results.

Avoid deploying on small tables with few micro-partitions, as this feature works using partition elimination. The more partitions in the table, the greater the benefits of partition elimination.

This feature works best when queries return a few rows as a percentage of the table's total number of micro partitions. For example, a query that returns (on average) 200 entries from a table with 50,000 micro partitions leads to excellent query performance.

The bottom line is that you should carefully evaluate your use case. Search Optimization can be an incredibly powerful feature if correctly deployed.

Managing Costs with Advanced Features

To leverage these advanced features without incurring unnecessary costs, organizations should adopt a strategic approach:

Monitor Usage: Monitor the use of these advanced features closely to understand their impact on your Snowflake costs.

Set Usage Limits: Implement resource monitors and query timeouts to prevent runaway costs associated with high compute usage.

Optimize Configurations: Regularly review and adjust the configurations of your virtual warehouses, clones, and data clustering to ensure they are aligned with your performance and cost objectives.

Conclusion

Snowflake’s advanced features — Query Acceleration Service, Zero Copy Cloning, Clustering Keys, and Search Optimization Service — offer powerful ways to boost query performance and enhance flexibility when working with massive data volumes.

However, as with any powerful tool, they must be deployed with care. Each of these features comes with its own set of cost implications, and if used without a clear understanding, they can quickly lead to unexpected charges — a situation I’ve seen many customers encounter first-hand.

The key is to treat these features as targeted optimizations — not “switch on everywhere” options. When used in the right place and for the right use case, they can deliver exceptional performance improvements and transform the user experience. But without careful monitoring and best practice design, they can also become an expensive surprise.

By understanding how these features work, where they shine, and where to apply caution, you can confidently leverage their full potential — without losing control of your Snowflake costs.

Takeaways

Advanced Snowflake features deliver huge performance gains: Query Acceleration Service (QAS), Zero Copy Cloning, Clustering Keys, and Search Optimization Service can transform query performance.

Every feature has a cost tradeoff: These features can also drive up storage or compute costs if used inappropriately or without monitoring.

Query Acceleration Service (QAS): Ideal for occasional heavy queries — but can cause runaway spend if not controlled with timeouts or resource monitors.

Zero Copy Cloning: Lightning-fast environment cloning — but cloned data can accumulate storage costs over time as partitions diverge.

Clustering Keys: When correctly deployed, clustering can deliver 100x+ performance gains — but poor key selection or frequent updates can cause high re-clustering costs.

Search Optimization Service (SOS): Great for highly selective lookup queries — but can be costly on small or inappropriately chosen tables.

Monitor usage actively: It’s critical to monitor and tune advanced features to ensure performance gains outweigh associated costs.

Use best practices: Follow published best practices for QAS, Clustering, and SOS — and review usage patterns regularly.

Combine with governance tools: Features like Altimate.ai’s DataPilot can help monitor, optimize, and control costs when using advanced Snowflake capabilities.

With great power comes great responsibility: Snowflake’s advanced features are incredibly powerful — but require careful design and monitoring to avoid surprises.

Subscribe to my newsletter

Read articles from John Ryan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

John Ryan

John Ryan

After 30 years of experience building multi-terabyte data warehouse systems, I spent five years at Snowflake as a Senior Solution Architect, helping customers across Europe and the Middle East deliver lightning-fast insights from their data. In 2023, he joined Altimate.AI, which uses generative artificial intelligence to provide Snowflake performance and cost optimization insights and maximize customer return on investment. Certifications include Snowflake Data Superhero, Snowflake Subject Matter Expert, SnowPro Core, and SnowPro Advanced Architect.