Mastering Chunking for RAG: Semantic vs Recursive vs Fixed Size

Zahiruddin Tavargere

Note: A written version of this article would have run well past a 4-minute read, so I am sharing the video instead.

This is Part 1 of the Advanced RAG Series.

When building Retrieval-Augmented Generation (RAG) pipelines, the method you choose for splitting documents into chunks can make a large difference to both retrieval quality and the quality of the generated answers.
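As a point of reference, fixed-size chunking, the simplest of the approaches discussed here, just cuts text every N characters. The snippet below is a minimal sketch, assuming character-based sizes and a small overlap between neighbouring chunks; the function name and the default values (`chunk_size=200`, `overlap=20`) are hypothetical and are not taken from the video.

```python
from typing import List


def fixed_size_chunks(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Cut text into windows of at most chunk_size characters.

    Consecutive windows share `overlap` characters, so text near a cut point
    appears in both neighbouring chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # how far each window advances
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


print(fixed_size_chunks("abcdefghij", chunk_size=4, overlap=1))
# → ['abcd', 'defg', 'ghij']
```

Because the cut points ignore sentence and paragraph boundaries, a chunk can end mid-sentence; the overlap is a cheap way to soften that.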

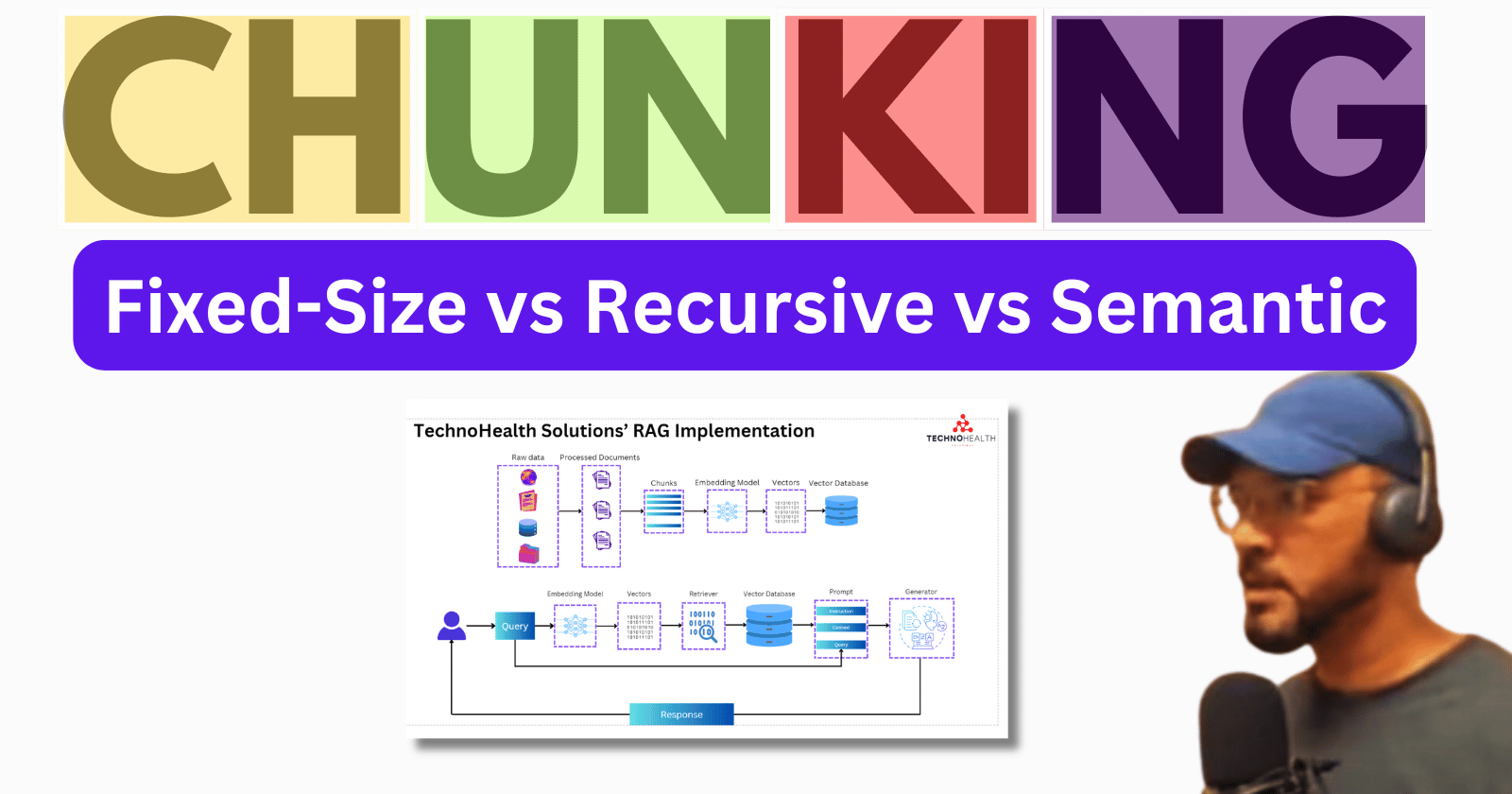

In my latest YouTube video, I take a deep dive into the three main chunking approaches (Semantic, Recursive, and Fixed Size) and evaluate their performance on four critical metrics: context precision, faithfulness, answer relevancy, and context recall.
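To build intuition for the first metric: context precision rewards a pipeline that places relevant chunks near the top of the retrieved list. These four metric names match those in the RAGAS evaluation library, and the sketch below follows the rank-weighted form RAGAS describes (the average of precision@k over the ranks that hold a relevant chunk). It is a simplified illustration, assuming the 0/1 relevance label of each retrieved chunk is already known (RAGAS typically obtains these labels from an LLM judge); the function name is hypothetical, and the video may compute the metric differently.

```python
from typing import Sequence


def context_precision(relevance: Sequence[int]) -> float:
    """Rank-weighted precision over retrieved chunks.

    relevance[k] is 1 if the chunk at rank k+1 is relevant, else 0.
    Precision@k is averaged over the ranks that hold a relevant chunk,
    so the same relevant chunks score higher when ranked earlier.
    """
    hits = 0
    total = 0.0
    for k, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            total += hits / k  # precision@k, counted only at relevant ranks
    return total / hits if hits else 0.0


print(round(context_precision([1, 0, 1]), 3))  # → 0.833
print(round(context_precision([0, 1, 1]), 3))  # → 0.583
```

Both lists contain two relevant chunks, but the first scores higher because a relevant chunk sits at rank 1.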

The chunking method you choose affects how accurate and relevant the AI-generated answers are. So which method strikes the best balance between retaining enough context and providing highly relevant, faithful responses?
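Semantic chunking approaches this balance by splitting where the meaning shifts rather than at a fixed length: split the text into sentences, embed each one, and start a new chunk when adjacent sentences are not similar enough. The sketch below is illustrative only. It assumes a toy bag-of-words vector in place of a real embedding model, a naive regex sentence splitter, and a fixed similarity `threshold` (many implementations use a percentile of the observed distances instead); all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable, Dict, List

Vector = Dict[str, float]


def bag_of_words(sentence: str) -> Vector:
    # Stand-in for a real embedding model (assumption): lowercase word counts.
    return dict(Counter(re.findall(r"[a-z']+", sentence.lower())))


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(a[w] * b.get(w, 0.0) for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(text: str, threshold: float = 0.2,
                    embed: Callable[[str], Vector] = bag_of_words) -> List[str]:
    """Start a new chunk wherever adjacent sentences are less similar than threshold."""
    # Naive sentence split on whitespace that follows ., ! or ?
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    chunks: List[List[str]] = [[sentences[0]]]
    prev = embed(sentences[0])
    for sentence in sentences[1:]:
        vec = embed(sentence)
        if cosine(prev, vec) < threshold:
            chunks.append([])  # similarity dropped: treat it as a topic shift
        chunks[-1].append(sentence)
        prev = vec
    return [" ".join(c) for c in chunks]


print(semantic_chunks("Cats purr. Cats sleep a lot. Stocks fell today. Stocks may rise."))
# → ['Cats purr. Cats sleep a lot.', 'Stocks fell today. Stocks may rise.']
```

Chunk sizes here depend entirely on the text, which keeps related sentences together but can produce chunks that are very short or very long.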

In the video, I break down:

- How Semantic Chunking performed in capturing context but struggled with relevancy.
- Why Recursive Chunking emerged as a strong contender with high accuracy and relevancy.
- The surprising strengths of Fixed Size Chunking, especially in context retention.
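Recursive chunking tries a list of separators from coarsest to finest: split on paragraph breaks first, merge small neighbouring pieces up to the size limit, and only fall back to line breaks, spaces, and finally hard cuts for pieces that are still too long. The separator order below matches the defaults of LangChain's `RecursiveCharacterTextSplitter`, but this is a simplified, hypothetical implementation written for illustration, not that library's code; it measures size in characters and, unlike the library, adds no overlap between chunks.

```python
from typing import List, Sequence

DEFAULT_SEPARATORS = ("\n\n", "\n", " ", "")


def recursive_chunks(text: str, chunk_size: int = 200,
                     separators: Sequence[str] = DEFAULT_SEPARATORS) -> List[str]:
    """Split on the coarsest separator first, recursing into pieces that are still too long."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut every chunk_size characters (fixed-size fallback).
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    sep, rest = separators[0], separators[1:]
    chunks: List[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small neighbouring pieces
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # This piece alone is too long: split it with the next, finer separator.
            chunks.extend(recursive_chunks(piece, chunk_size, rest))
            current = ""
    if current:
        chunks.append(current)
    return chunks


print(recursive_chunks("Para one here.\n\nPara two is here.", chunk_size=20))
# → ['Para one here.', 'Para two is here.']
```

Preferring natural boundaries keeps paragraphs and sentences intact whenever they fit, while the size limit still bounds every chunk.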

If you're interested in tuning your RAG pipeline or curious about which chunking method works best, this video is packed with insights that will help you make the right choice. Check out the full breakdown in the embedded video below!

Watch the full analysis and find out which chunking method is best for your use case:

Written by

Zahiruddin Tavargere

I am a Journalist-turned-Software Engineer. I love coding and the associated grind of learning every day. A firm believer in social learning, I owe my dev career to all the tech content creators I have learned from. This is my contribution back to the community.