Phaser World Issue 197

Richard Davey

Welcome to issue 197 and a whole lot of fresh content this week. We will keep tweaking the layout as we get used to our new home on Hashnode, but overall, we’re really enjoying the expanded features this format offers.

This week, the team’s Dev Logs are back, so you can catch up with what we’ve been working on. We’ve also got new tutorials and a great game. Let’s dive in …

😍 30-day Trial Subscription Available

A lot of you asked for this, so we made it happen.

We now have a 30-day trial subscription option available.

You can test out Phaser Editor v4 Desktop edition entirely unrestricted for 30 days. If you've never used Editor before, we strongly recommend reading our starter tutorials so you can appreciate what it is and what it isn't, all while learning how to use it.

You can use every feature and even build complete games. If you get stuck, please reach out to us in the ‘phaser-editor’ channel on our Discord server or email support@phaser.io. We want you to appreciate how powerful Phaser Editor is, and we will help you get there as best we can.

View the trial subscription page.

🎮 Deep Space Survivor

Survive massive numbers of enemies in space in this top-down action auto-shooter.

Gnome Castle Games has taken the fan favorite Vampire Survivors and thrown it into deep space. Can you survive the onslaught of enemy ships in this top-down space auto-shooter?

In Deep Space Survivor, you try to survive the numerous enemy space ships that are trying to get you, blowing them up with unique weapons and powerups. Influenced by Vampire Survivors and Magic Survival, this game provides tons of action and roguelike elements.

Read more about Deep Space Survivor

🌟 Phaser Editor 4.3 Released

Enjoy new quality-of-life improvements, such as drag and drop asset management and UI updates in this latest release of Phaser Editor.

A brand-new version of Phaser Editor has been released. You’ll find a much quicker and easier way to add assets to your projects, UI improvements, updates to support Phaser v3.85, a super-handy new “Go To Scene” icon, and plenty more.

Read the full release notes for details about all of the improvements.

Subscribers can update to this version immediately. Just grab it from your account's Downloads page or pick ‘Check for Updates’ within Phaser Editor. If you don’t have a subscription, we offer a 30-day trial.

🎙️ Interview with a Phaser creator: Ar0se

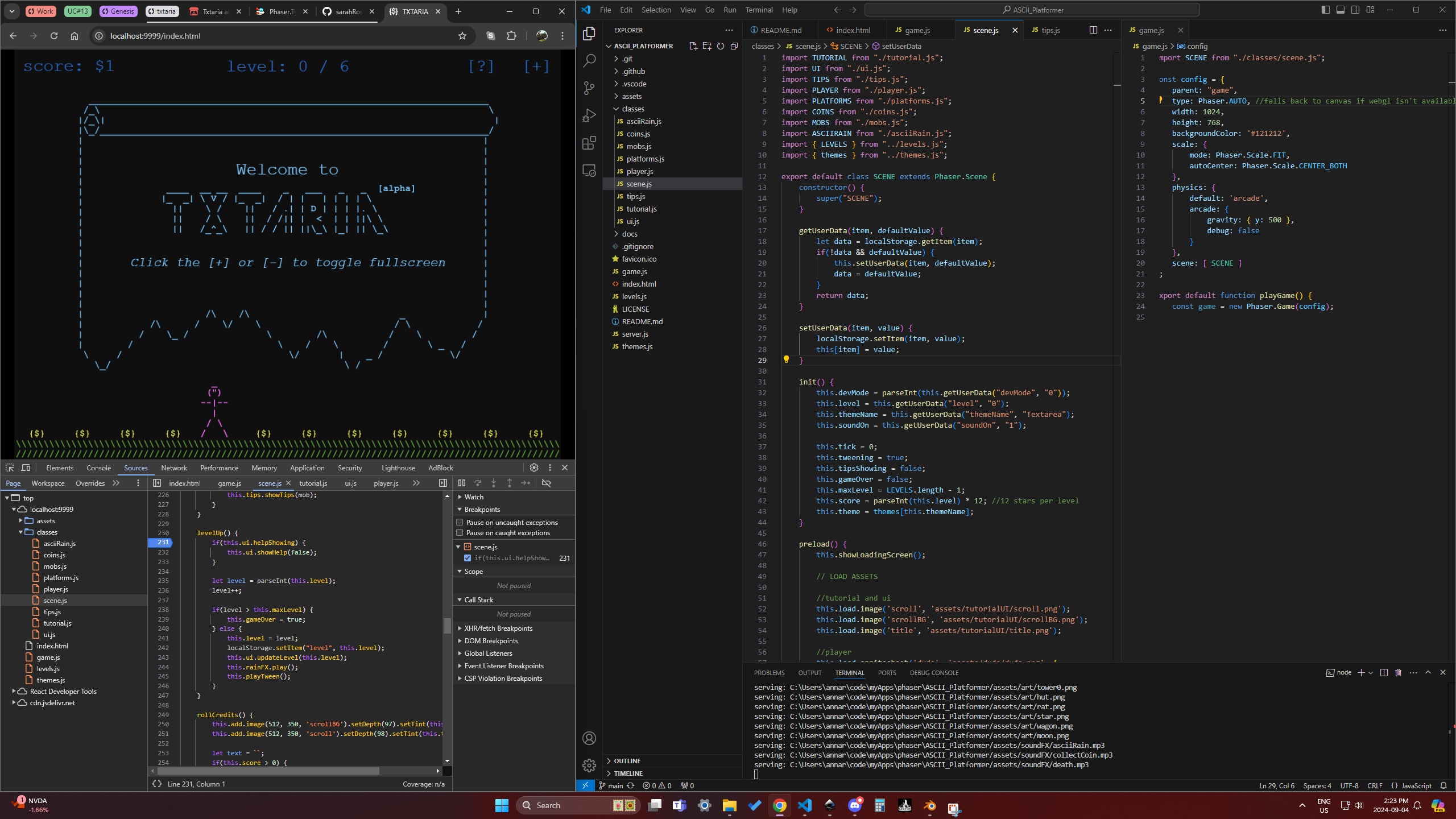

Learn more about the game Txtaria and how it came to be.

Ar0se caught our attention with her game Txtaria. The game is a platformer with an ASCII art style that gives it a mysterious look and feel. Ar0se agreed to tell us more about her story and the development behind Txtaria.

🎥 3 New Tutorials

Here are three great new Phaser tutorials.

The Phaser 3 Pac-Man Tutorial Continues. This time, it’s all about pathfinding.

Learn how to code a conveyor belt effect in this new video from Scott Westover.

Emanuele Feronato has challenged himself to create 101 games. Pushori is the first one, and it is complete with code.

Phaser Studio Developer Logs

👨💻 Rich

It’s been a busy week in the Phaser Studio offices. I mean, we all work remotely, but things have been cooking up a storm in our respective offices. As usual, since we formed Phaser Studio, I’ve been spread across multiple projects every day of the week. Mostly, I’ve been focusing on our new documentation site with Zeke and Francisco. I wrote a huge amount of technical content, which is being turned into actual guides and maps of the internal systems. Plus, we’re updating the API documentation too. So, the new site will be a combination of getting started, core concepts, deep dives, flow diagrams, and the full API docs.

I’m happy to announce that we partnered with Hashnode to host the docs sites. This is a brand new service they’ve been working on, and we’re loving it so far. We get to create our docs as markdown and code in our own git repo, and they sync them to a beautifully presented site on every commit. We no longer need to worry about keeping our doc site tied to the current version of Phaser, as we’ve traditionally done. Instead, we can update and publish new site content whenever we like. I’ve wanted to do it for years, and we’re finally doing it!

We’ve also been planning out the product roadmap for the next three months. Our priorities are getting Phaser Beam complete and then merging it all into Phaser v4, getting the new physics system released (see Pete’s section below!), getting the docs site live, getting the new web site live, and, of course, constantly updating the Phaser Editor throughout all of this. Plus, there are a few other neat ideas in the works.

Amazingly, this will bring us to the end of 2024, which is quite a shocking realization in some regards. Of course, I’m incredibly pleased with what the team has achieved this year, and we’ve still got our most important quarter ever to go, but time does seem to be flying by. Anyway, read on to find out what the rest of the team has been doing. Spoiler: it’s pretty damn cool 😎

👨💻 Pete - Box2D

I'm currently developing a Physics library for Phaser.

The project? To convert the new and wonderful Box2D v3.0 from C to JS.

Large Language Models

Work has progressed very quickly, largely thanks to Large Language Models (LLMs) like GPT-4o and Claude Sonnet performing the grunt work.

After a lengthy process of chopping the source code up into pieces small enough for the LLM to digest, then rearranging them so that each piece has enough context from the rest of the project to make sense, I was able to use the following system and user prompts to get a first draft for each piece of code:

System: you are an expert C and JS programmer, you will answer questions and fulfill requests accurately and concisely

User: please convert this code into JS (from C). Use the exact same labels. Comment out all macros like B2_ASSERT. Skip any code which is disabled by conditional compilation (permanently false, or using a debug flag). Where a data structure is used but not defined, assume that a similar JS structure will be used as an import. Export all functions that start with the characters b2. Where a type is used but not defined, assume that it will be available as a similar JS structure elsewhere in the project (e.g. b2_circleShape). Convert any typedef struct into a class.

Problems

Whilst far from perfect, the generated rough cut hugely reduced the amount of search and replace and routine editing for me. I don't think any file worked perfectly, but the errors tended to fall into categories which made them easier to address rapidly:

missing imports. These show up during run-time testing - thorough testing is essential

missing conversion, more on this later

inconsistent type use. There were a lot of cases where instead of using classes like b2Vec2, the JS used generic objects instead. I used search to find these and manually replaced them. Search strings "= {" and "({ " gathered 90% of them

inconsistent code conversion. This was usually my fault in that I'd failed to provide enough context and the LLM 'made it up' resulting in pieces of code that didn't fit together via types or overall approach. Manual fixing was required, although in extreme cases I tailored the prompts specifically, changed the context, and pushed the file through again

pass-by-reference vs pass-by-value

In attempting to fix the missing imports, I ran into module errors caused by repeated or circular dependencies. I resolved them the lazy way by sticking to the C language design where 'header' files contain definitions, and 'source' files contain everything else. All files import only 'header' files, which avoids circular dependencies. It looks weird having 'include/constraint_graph_h.js' and 'constraint_graph_c.js' but it works. As it is close to the original C file structure, it also minimises the conversion required for this early stage development.

After fixing these widely prevalent import issues, other errors included typical LLM-type nonsense where it seemed to forget what it was told to do:

LLM: class b2World { /* include JS conversion of the b2World structure here */ }

Me: Hang on, that's literally all I asked YOU to do!

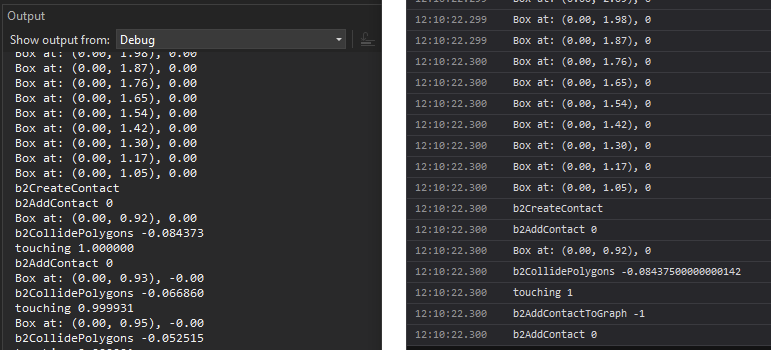

There were a lot of problems with pass-by-value conversion to pass-by-reference. Some of them were exceptionally hard to find, and I resorted to building identical demos in C and JS. I could step through both of them in parallel and compare the numbers at every step.
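To illustrate the kind of bug described above: in C, assigning a struct copies its contents, whereas in JS, assigning an object copies only the reference. The following is a minimal sketch, not code from the conversion itself. `Vec2` is a simplified stand-in for a converted class such as b2Vec2, and `clone()` is a hypothetical helper.

```javascript
// Simplified stand-in for a converted vector class (the real b2Vec2 may differ).
class Vec2 {
    constructor(x = 0, y = 0) {
        this.x = x;
        this.y = y;
    }

    // Hypothetical helper: returns an independent copy, mimicking C struct assignment.
    clone() {
        return new Vec2(this.x, this.y);
    }
}

const body = { position: new Vec2(1, 2) };

// Literal translation of a C line such as `b2Vec2 start = body->position;`.
// In C this copies the struct. In JS, both names now refer to the same object.
const aliased = body.position;

// An explicit copy restores the C behaviour.
const copied = body.position.clone();

body.position.x = 10;

console.log(aliased.x); // 10 (changed along with the body, unlike in C)
console.log(copied.x);  // 1  (unaffected, matching the C semantics)
```

Nothing throws in the aliased case and the numbers simply drift, which is likely why stepping through both builds side by side was needed to find these.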

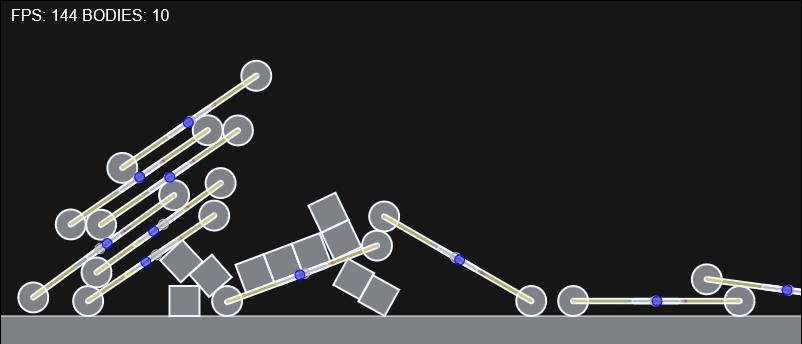

An early output comparison, C on the left, JS on the right. This was the first test where a falling box detected collision with a ground platform:

Testing the Conversion

My testing structure was created in the file main.js, which started as a list of 'unit test' type code snippets. I'd show the LLM a class definition then ask it to devise some unit tests to check the operation is correct. Creating and debugging these for all the major Box2D systems was a boring, slow, iterative process that took approximately 8 days. The LLM was helpful for creating ideas here, but it was very poor at creating the unit tests themselves. A large number of the early attempts were testing invalid calls or expecting unreasonable results. Eventually I used an LLM (Claude Sonnet) to suggest possible tests, then manually coded the ones which seemed to have merit. Getting close to the code at this point was very helpful for later understanding when deeper issues started to show up in the conversion running full demos.

At the end of this stage, I had working tests for all the major classes in the physics engine and was able to start building some demos - safe in the knowledge that the unit tests would catch most errors if I broke one thing while adjusting another.

Shapes!

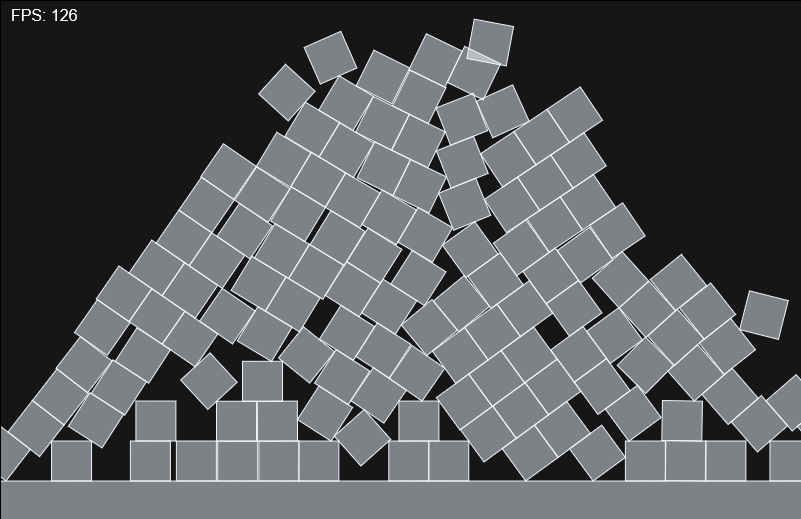

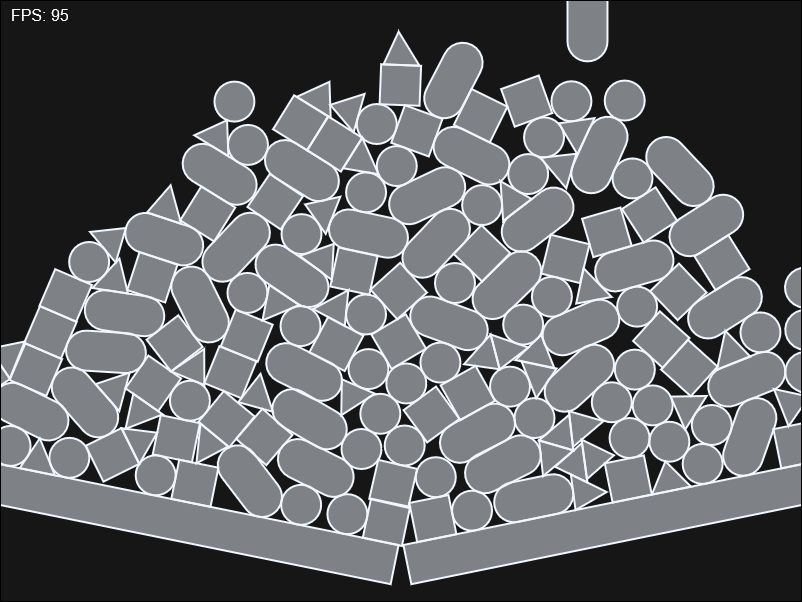

Now the fun part starts. I began with a single box landing on a static box 'ground'. In Box2D a box is a polygon type which is a fundamental element of nearly everything. We use them for ground platforms, stacks, and of course stress-test demos:

Additional shape types include circles, capsules, segments, and chain-segments. The chains are mostly used for platforms, and address issues with adjacent segments which will otherwise cause unwanted collisions for a shape sliding along on top of them.

Every shape type has bespoke collision and rectification functions for interaction with the other shapes, so this job was bigger than I had anticipated (5 more days) but did not require any modification to my process.

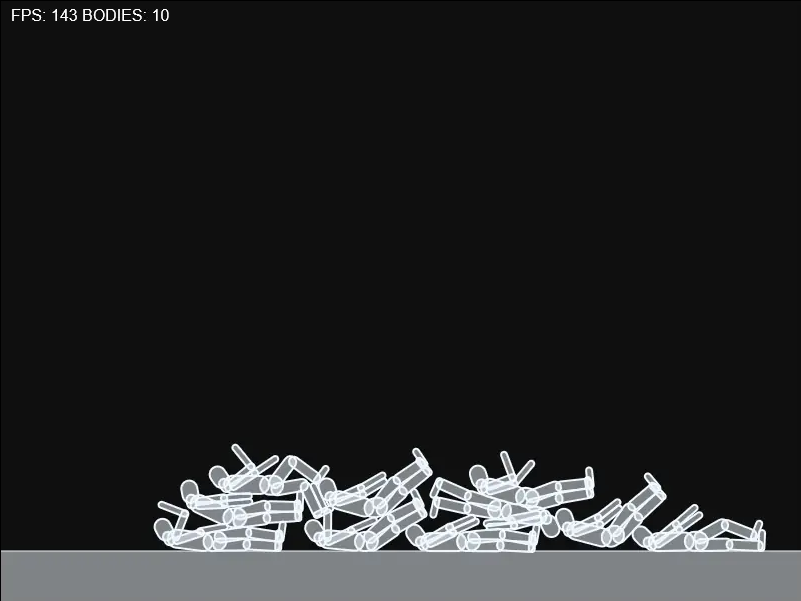

Joints!

With all the basic shape types working, I moved on to the Joints. Starting with a Revolute Joint, I debugged my way through to a working ragdoll. I was relieved to find that the conversion was now largely stable and the remaining bugs were generally easy to fix when I'd located them. I continued to use the parallel build and compare approach, with a matching C demo for every JS demo.
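The parallel build-and-compare approach can be partly automated if both demos log the same values at every step. Below is a minimal sketch under that assumption. The function name, the trace format (one array of numbers per step), and the sample numbers are all hypothetical. A tolerance is used because Box2D works in 32-bit floats while JS numbers are 64-bit, so exact equality is unlikely.

```javascript
// Compare two per-step numeric traces (e.g. values logged by the C and JS demos).
// Returns null when they agree within the tolerance, otherwise the first mismatch.
function firstDivergence(cTrace, jsTrace, tolerance = 1e-5) {
    const steps = Math.min(cTrace.length, jsTrace.length);

    for (let step = 0; step < steps; step++) {
        const a = cTrace[step];
        const b = jsTrace[step];

        for (let i = 0; i < Math.max(a.length, b.length); i++) {
            // A missing value (undefined) produces NaN, which also counts as a mismatch.
            const delta = Math.abs(a[i] - b[i]);
            if (!(delta <= tolerance)) {
                return { step, index: i, c: a[i], js: b[i] };
            }
        }
    }

    // A trace that ends early has diverged at the first missing step.
    if (cTrace.length !== jsTrace.length) {
        return { step: steps, index: 0, c: cTrace[steps]?.[0], js: jsTrace[steps]?.[0] };
    }
    return null;
}

// Example: y position and y velocity of a falling box for three steps (made-up numbers).
const cTrace = [[10.0, 0.0], [9.997, -0.167], [9.992, -0.333]];
const jsTrace = [[10.0, 0.0], [9.997, -0.167], [9.992, -0.5]];

console.log(firstDivergence(cTrace, jsTrace));
// { step: 2, index: 1, c: -0.333, js: -0.5 }
```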

After getting one joint type working, I moved on to distance, motor, mouse, prismatic, weld and wheel joints. My approach remained constant: take a C++ sample from the comprehensive range available in the Box2D repository, convert it into a JS test in main.js, run it and fix the obvious bugs, then compare the results both visually and numerically and dig deeper if necessary. Now that all of the joint types are working, I believe the only remaining systems are the Sensors and their related Events.

Future Work

Going forward, the JS library is working but it is a very literal translation of the C. This makes for some strange looking and inefficient JS, which we must clean up and optimise. The API design is heavily orientated towards the C programming language and its quirks. It has a strong emphasis on avoiding memory allocation and release after initialisation. Given the conversion of typedef struct to classes for JavaScript, many of those design choices can be changed to make the library more familiar to JS developers. It will need to be integrated with Phaser eventually but initially we'd just like to have it available for Phaser projects to use. The original C makes very effective use of multitasking and SIMD instructions. It would be interesting to see if JS worker threads can be used effectively to regain some more of the speed of the original code.

You can see the results of the work so far in the YouTube video below:

👨💻 Can - Phaser Nexus

Physics Particles! This week’s work on the Nexus particle system focused heavily on optimization and restructuring. I’ve been refining the code for particle presets, ensuring flexibility without sacrificing performance. The primary focus was on improving physics particles, both in terms of object creation and overall system efficiency. After several iterations, we were able to push the system to handle 5,000 DOM physics particles at 15-20fps on the M3 Max. That is the maximum limit at the moment, which is not too bad for the DOM! The biggest challenge was the initial load, as firing up all the particles at once created noticeable bottlenecks.

The key breakthrough came from utilizing DocumentFragment to batch the particle initiation process. By grouping the creation and manipulation of particles before injecting them into the DOM, it significantly reduced the strain on performance during the initial setup phase. This approach proved essential in keeping frame rates more stable under heavy particle loads. These improvements are setting the stage for even more scalable particle systems in the future, with a solid balance between performance and complexity.
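For readers unfamiliar with the technique, here is a minimal sketch of batching element creation with a DocumentFragment. It is not the Nexus code. The function name, the 'particle' class name, and the "stage" id are hypothetical, and `doc` is passed in as a parameter only so the sketch can run outside a browser.

```javascript
// Build many particle elements off-document, then attach them in one operation.
// `doc` is normally the global `document`.
function spawnParticles(doc, container, count) {
    const fragment = doc.createDocumentFragment();

    for (let i = 0; i < count; i++) {
        const el = doc.createElement('div');
        el.className = 'particle'; // hypothetical class name
        fragment.appendChild(el);  // the fragment is not part of the live DOM yet
    }

    // One insertion: the fragment's children move into the container together.
    container.appendChild(fragment);
}

// Browser usage, assuming an element with the (hypothetical) id "stage" exists:
// spawnParticles(document, document.getElementById('stage'), 5000);
```

The container receives a single `appendChild` call however many particles are created, instead of one live-DOM insertion per particle.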

Until the next one, hope you all have high frame rates without drops!

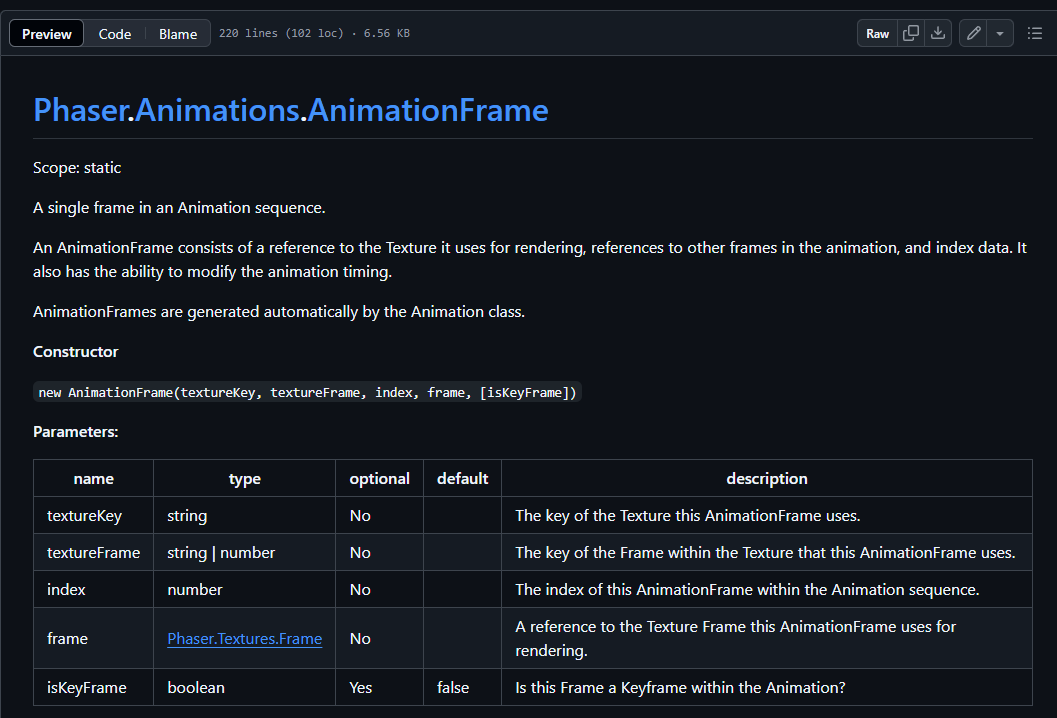

👨💻 Francisco - Phaser Documentation

These past weeks I've been working on updating the Phaser documentation. On the current Phaser API docs site, a new path has been added that lets users access the latest published version of Phaser by using 'latest' in the URL in place of a version number. Try it out at the following link:

https://newdocs.phaser.io/docs/latest/Phaser.Game

I have also fixed several issues that our dear users reported to us, such as problems with certain links in the sidebar.

Documentation: A New Awakening

We’re also working on a brand-new documentation site!

Continuing with the documentation, here is something a little more exciting. I've been working on a new exporter for the Phaser documentation that will help us convert what we have in JSDoc to Markdown, making the documentation cleaner and easier to read. At this time, I can't reveal more about this project, but I'm sure Richard will announce something exciting in the future.

👨💻 Zeke - Phaser Documentation

Hi there 🙂

This week has been an exciting one as we begin migrating and updating our current documentation to the Hashnode Docs platform. With the help of rexrainbow and samme, we're looking to create some solid documentation that is readable, simple to understand and loaded with example code.

My week was spent diving deep into Phaser documentation while examining documentation written by Rex and Sam. To kick things off, Richard led our team in discussing the new documentation structure and format with the goal of making it easy to maintain, scale and migrate (if the need arises in the future).

My role involves reading through all 3 sets of documentation above, deciding what would be most helpful and merging each piece of writing into a single coherent document.

My thought process when putting together this documentation is as follows:

What is the natural flow of thought a user might have when learning about this component?

How can some light narrative be woven into the documentation to make it more readable?

Where can code examples be added to further clarify how each method, function or feature works?

From reading through pages of documentation, Sam's writing style flows more naturally and is more readable, while Rex's is more technical and goes deep into every property and method available. While different, both complement each other really well.

Here's what I've worked on this week:

Phaser Scene. Richard has kindly written a proper introduction to all of Phaser's core components including the Scene that I have added to this piece of documentation. Following the structure of what Sam has written, more code examples and technical details were added from Rex's document.

Phaser Events. Sam provided a good fundamental explanation of how events work in Phaser and Rex provided comprehensive technical details to implement this in code. Rex also provided a more advanced implementation showing how to extend Phaser's EventEmitter to create your own custom event emitter class.

Phaser GameObject. The most detailed documentation worked on for the week with over 1200 lines of text and code. It covers the full usage of creating game objects, methods to change their properties, interactivity, rendering, data management, events, dealing with common problems and even creating custom game object classes. Sam provides the structure, flow and narrative while Rex injects solid code examples with detailed explanations.

Phaser Game. The heart of a Phaser game, all games start by creating an instance of the Phaser Game class. Sam shows you the fundamentals of creating a Phaser game instance and explains basic configuration properties. He also explains the differences between WebGL and Canvas rendering and how they could affect your game. I have also added Rex's global members in the Game class, which include the Scene Manager, Data Manager, Time, Game Config and Window size. Also included are details on how to pause/resume your game and handle browser events that affect gameplay.

Phaser Input. Only Rex's documentation was added here as Sam did not have documentation that deals solely with Input. Rex goes deep into explaining how interactivity works in Phaser. It includes touch / mouse input, keyboard input and how to create keyboard combo input, gamepad input and using the mouse wheel.

Overall, it's been a productive week reading into various documentation, compiling and editing writings from 2 accomplished Phaser users, and thinking how to best present it to our fans and developers.

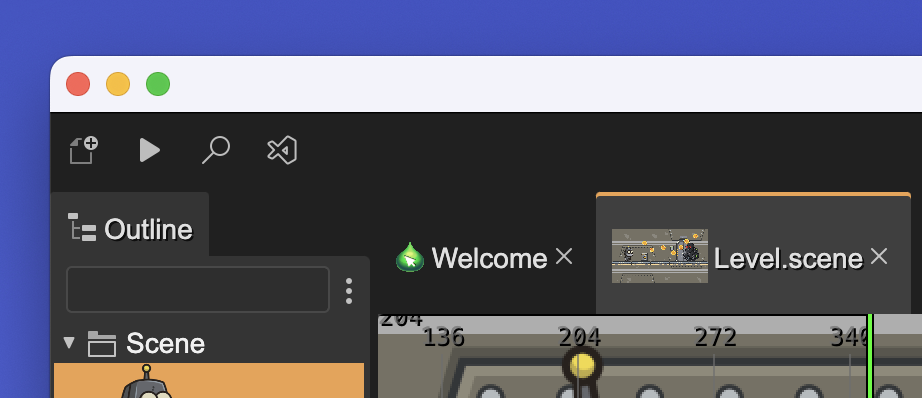

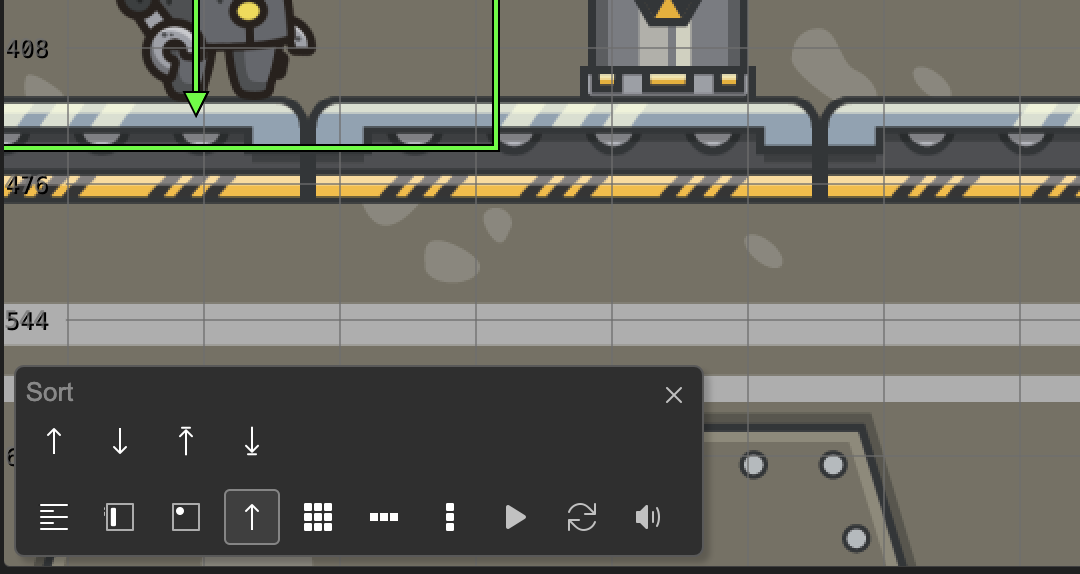

👨💻 Arian - Phaser Editor

Hello!

This week we finally released the new version of Phaser Editor. The team has also been working on a new Trial service for the editor, with which you can use the editor for 30 days. After the storm of a release comes the calm? Not in Phaser Studio! So we immediately got to work on the next release. We may have an interim release first, though, with some changes we've made while busy refreshing our studio image and making promotional videos. For these videos I added some sugar to the editor. Here's what we've done:

A button to launch Visual Studio Code. If you're working on a scene, the code for that scene will also be displayed in Code.

We've added commands to sort objects in the Tools Pane of the scene.

And what's even more exciting, you can now play sprite animations right in the scene, in real time!

For this mid-release we are evaluating a better way for you to contact us, directly from the editor. Stay tuned!

Tales from the Pixel Mines

September 16th 2024 by Ben Richards

I have spent this week arguing with myself. It's been very productive.

Last week saw the release of Phaser Beam Technical Preview 4. We've already gotten some good feedback just by letting people experiment with it. When the possibilities are endless, we can't find every edge case by ourselves!

This week, I've got two stories about arguing with myself. The first is about parallel shader compilation. The second is about framebuffers. These are technical, but cool.

Parallel Shader Compilation

I said I wouldn't work on this. Then I worked on it anyway. Let's not ask why; let's just look at what it does for you.

Shaders do all the rendering in WebGL. With the new shader factory system, we can deliver a wide range of shaders, optimized to the demands of the moment. However, every time we compile a new shader, we have to... compile it. That takes time.

That time will vary depending on device, but on my desktop, I find it takes 10-15ms. A full frame is 16.7ms, so a single new shader will eat most of a frame. Two new shaders will eat multiple frames, causing stutter as there's now no way for the frame to finish in time. And this gets worse with more complex scenarios, as we add more effects or rendering optimizations, or as you add detail to your games.
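The arithmetic behind those figures can be sketched as follows. The function names are hypothetical, and the model is deliberately crude: it assumes each compile blocks for a fixed time and ignores all other work in the frame.

```javascript
// Frame budget at a given refresh rate, in milliseconds.
function frameBudgetMs(fps = 60) {
    return 1000 / fps;
}

// Whole frames taken up by compiling `count` shaders back to back,
// assuming each blocks for `compileMs` and nothing else runs.
function framesBlocked(compileMs, count, fps = 60) {
    return Math.ceil((compileMs * count) / frameBudgetMs(fps));
}

console.log(frameBudgetMs().toFixed(1)); // "16.7"
console.log(framesBlocked(15, 1));       // 1
console.log(framesBlocked(15, 2));       // 2
```

A single 15ms compile leaves under 2ms of the 16.7ms budget for everything else, so in practice even one new shader is likely to push a busy frame over its deadline.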

If only there were a way to keep on rendering while a shader compiles in the background.

There is! It's the KHR_parallel_shader_compile extension. With this, we can start a shader compiling, and efficiently poll WebGL to ask, 'Is it ready yet?'. If it's not ready, we can simply skip drawing with that shader.
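As a rough illustration of that polling, here is a minimal sketch using the standard WebGL calls `getExtension` and `getProgramParameter` with the extension's `COMPLETION_STATUS_KHR` constant. It is not the Beam renderer's code, and the helper names are hypothetical.

```javascript
// Ask for the extension once. Returns null where the browser lacks it.
function getParallelCompileExt(gl) {
    return gl.getExtension('KHR_parallel_shader_compile');
}

// Non-blocking readiness check for a program that has been sent for linking.
// Without the extension we report "ready" and leave the caller to fall back
// to the ordinary (blocking) LINK_STATUS query.
function isProgramReady(gl, ext, program) {
    if (!ext) {
        return true;
    }
    return gl.getProgramParameter(program, ext.COMPLETION_STATUS_KHR);
}

// Hypothetical draw helper: skip the object this frame if its shader is still compiling.
function drawIfReady(gl, ext, program, draw) {
    if (!isProgramReady(gl, ext, program)) {
        return false; // try again next frame
    }
    draw();
    return true;
}
```

In a render loop, `drawIfReady` would be called every frame. The object appears on the first frame after the driver reports completion, and the frame itself never stalls waiting for the compile.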

So I've created the render config option skipUnreadyShaders. This is off by default. When activated, it enables the extension and stops performing the blocking checks of shader completion that cause stutter. This results in silky smooth frame rates even when multiple new shaders are compiled at once.

But there is a cost: an object that needs a shader won't render while the shader is compiling. This is typically almost unnoticeable, but it's not ideal.

Also, KHR_parallel_shader_compile is not presently available in all browsers. While Firefox may add the extension at some point, right now only Chromium and WebKit renderers appear to have it.

The best practice, whether we are using the extension or not, is to pre-touch shaders. That is, use the shader in an unobtrusive way before it's actually needed. Once it's been used, it will remain available in the render system, and there will be no further interruptions. You might do this on a loading screen, or as your main menu or titles load in.

But how do you know which shaders are going to be used? Unfortunately, that's a technical question that can only really be answered with comprehensive analysis of the shader factory system. The practical answer is, just be thorough. Pre-touch each type of game object, in the conditions that you expect it to be used. Include lighting and special effects. If you still observe pop-in, check exactly what you're doing and try to replicate it more thoroughly.

Pre-touching will ensure that everything is ready before you need it. It may only save a few frames, but that helps keep your games running smoothly. And it works now, even before the render config option is released.

Framebuffers

The last big development for the Beam renderer is around framebuffers. We've been working towards this objective for months. It's not just a performance upgrade, or a few extra features; it's a whole rework of the system, making it more reliable, powerful, and flexible than ever before.

This is very important to get right, so of course, I've been arguing with myself all week. What is the best approach? How much do we keep the same, versus change or improve? Are the benefits clear? Etc etc.

We think we've come up with a pretty cool approach. None of this has been coded up yet, so consider it a work-in-progress subject to change without notice. But it's enough of a change that I want people to start thinking about it - if I'm doing something really unhelpful, I want to know!

The New Approach

Rather than explain how things work right now, which is complicated, I'll just propose what I want to do.

DynamicTexture uses a command queue and a manual render command.

A new Stamp object renders without reference to a Camera.

A new Box object wraps GameObjects in FX.

DynamicTexture Command Queue

The DynamicTexture is a Texture containing a framebuffer where you can make persistent drawing operations. This is used in the RenderTexture GameObject, and can also be used directly. It's convenient for composite graphics which don't need to update frequently.

We will use a command queue to render a DynamicTexture. This records all the draw operations you want to perform, then renders them all at once when you call DynamicTexture.render(). This is more efficient than drawing each operation as you make it, and can take advantage of the full render system, making drawing even more efficient and predictable.

In practice, you may never notice the command queue. You'll still just call commands like stamp() and draw() and erase(). You just need to call render() once you're done.

Commands like beginDraw() or batchDraw() will be removed, because you no longer need to consider how the renderer works. This removes the possibility of bugs, such as a missing endDraw() command or running batch commands without beginDraw().

This makes DynamicTexture more reliable and straightforward to use, which we think is a good trade-off for one new render() command.
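The record-now, execute-on-render() pattern can be sketched in a few lines. Since none of this has been coded yet, the class below is a hypothetical stand-in and not Phaser's DynamicTexture. It borrows only the method names stamp(), erase() and render() from the description above, and "draws" by appending strings to an array.

```javascript
// Hypothetical stand-in, NOT Phaser's DynamicTexture: it only shows the
// record-now, execute-on-render() pattern.
class QueuedCanvas {
    constructor() {
        this.queue = [];   // pending commands
        this.output = [];  // stands in for the framebuffer contents
    }

    stamp(key, x, y) {
        this.queue.push({ op: 'stamp', key, x, y });
        return this; // allow chaining
    }

    erase(key, x, y) {
        this.queue.push({ op: 'erase', key, x, y });
        return this;
    }

    // Execute everything recorded so far, in order, then empty the queue.
    render() {
        for (const command of this.queue) {
            this.output.push(`${command.op}:${command.key}@${command.x},${command.y}`);
        }
        this.queue.length = 0;
        return this;
    }
}

const canvas = new QueuedCanvas();
canvas.stamp('tree', 10, 20).erase('hole', 12, 22);
console.log(canvas.output.length); // 0, nothing is drawn until render()
canvas.render();
console.log(canvas.output); // [ 'stamp:tree@10,20', 'erase:hole@12,22' ]
```

Because every operation passes through one queue, there is no begin/end pairing for the caller to get wrong. Forgetting render() simply means nothing has been drawn yet.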

Stamp GameObject

The new Stamp object will be like an Image, but it ignores cameras. This is similar to how a DynamicTexture handles stamps already, but by becoming its own object, the Stamp can be more efficient. As well as being used in DynamicTexture operations, the Stamp can be added directly to your game scene. It could be useful for HUD elements or other purposes.

This is more efficient because cameras apply a transformation matrix to everything they see. Most of the game's performance budget goes on those matrices! By eliminating them, we improve performance. The Stamp isn't suited to many things in a game world, but if your camera never moves, it could be amazing.

Box GameObject

The new Box object is a wrapper around another GameObject. Its main purpose is to handle all the FX in the game.

A Box has two sides: 'inside' and 'outside'.

The 'inside' is the world inside the Box. The Box might be moving in the outside world, but the inside doesn't change. This is similar to the 'preFX' available on some GameObjects right now, but expanded from the concept of just affecting a texture. For example, we want lighting to still work inside the Box, as one of the more obvious rendering technologies that we're using. It doesn't work with preFX, because that technique takes control of rendering, rather than allowing objects to render as they wish.

The 'outside' is the Box in the world. Once the Box is positioned on the screen, 'outside' effects run in screen space. This is similar to the 'postFX' available on most GameObjects right now.

Why are we making this change? Why not let objects manage their own FX?

Well, this method makes it easier to compose a scene render graph. It's far quicker for us to develop new rendering technologies if everything is modular like this. And at your end, when you're developing a game, it's easier for you to see precisely where your game is using framebuffers. Just count the Boxes.

This modular approach to rendering should overcome some longstanding issues with flexibility. Where objects were once restricted in their use of blend modes or FX within Containers, we can now nest Boxes inside other collections, and be confident that they'll do the same thing at all levels of the scene hierarchy.

Speaking of Containers (and Layers), they now gain some predictability. Previously, if you added FX to these collections, it would change some aspects of the way they were blended with the scene. With Boxes, we can remove this multi-function rendering, and give you confidence that collections will always render exactly the same. You can still put a Collection in a Box to add FX to it.

This is a big change to FX, but we think it's going to make it easier to see how a game is put together. Each Box represents a framebuffer and a new set of WebGL calls, which can help you reason about game performance more easily. You can also see where your game is rendered to a framebuffer. And, of course, it makes complex hierarchies more reliable and flexible.

I argued with myself about every part of this design. (You should see the Discord logs. I really did argue with myself.) There are lots of ways to approach this problem, and I've looked at most of them! This seems to be the best.

Masks

In theory, I've spent most of 2024 working towards improving masks. Boxes with FX make it possible to do masks in a really powerful way, so that's great!

We intend to treat masks as FX, going forward. With the 'inside'/'outside' concept of a Box, we can make masks much more flexible. This is probably as simple as a shader which erases the masked region of the image.

Current mask behaviour is in screen-space. A mask FX in the 'outside' does exactly this. But how do we make a mask which follows an object? Just put the mask in the 'inside' of the Box instead.

And with masks as FX, we can do really cool things, like apply a mask in between other FX. Imagine: recolor an object, trim it with a mask, and apply bokeh blur to the trimmed result. This is how we want masks to work, and we're so close now!
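To see why the position of a mask within the FX order matters, consider a toy one-dimensional "image". This is purely illustrative and unrelated to how Phaser implements FX. The helper names are hypothetical and the blur is a plain three-pixel average, not a bokeh blur.

```javascript
// Toy 1D "image": each number is a pixel's opacity. Not Phaser code.
const mask = (keep) => (pixels) => pixels.map((p, i) => (keep[i] ? p : 0));

// Box blur: each pixel becomes the average of itself and its two neighbours (edges clamp).
const blur = (pixels) => pixels.map((_, i) => {
    const left = pixels[Math.max(i - 1, 0)];
    const right = pixels[Math.min(i + 1, pixels.length - 1)];
    return (left + pixels[i] + right) / 3;
});

// Apply FX left to right.
const applyFX = (pixels, fx) => fx.reduce((result, effect) => effect(result), pixels);

const image = [1, 1, 1, 1, 1, 1];
const keepLeftHalf = [1, 1, 1, 0, 0, 0];

// Mask first, then blur: the cut edge is softened by the blur.
console.log(applyFX(image, [mask(keepLeftHalf), blur]));

// Blur first, then mask: the cut edge stays hard.
console.log(applyFX(image, [blur, mask(keepLeftHalf)]));
```

The two orders give different results from the same pair of effects, which is the flexibility that treating masks as ordinary FX would provide.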

Next Week

Next week, I'll start getting this stuff working. The DynamicTexture is first on my agenda. And then I'll get to grips with the shiniest, shader-iest part of the whole render system, and work on FX and Boxes. Looking forward to it!

Submit your Content

Created a game, tutorial, sandbox entry, video, or anything you feel Phaser World readers would like? Then please send it to us! Email support@phaser.io

Until the next issue, happy coding!
