Monitoring AI at Scale: A Comprehensive and Layered Approach

Pronod Bharatiya

In the dynamic and evolving world of Artificial Intelligence (AI) and Machine Learning (ML), continuous monitoring across the AI lifecycle is critical to ensure optimal performance, maintain quality, and deliver desired business outcomes. As organizations scale their AI initiatives, effective monitoring strategies become essential. These strategies must encompass data management, model governance, infrastructure oversight, and a robust observability framework.

This guide covers the two primary levels of monitoring AI at scale and the tools and frameworks involved in each. With a structured approach, organizations can manage their AI systems at the data, model, and infrastructure levels and back them with a well-integrated observability stack.

Monitoring AI Components - Data, Model, and Infrastructure

At the first level, monitoring focuses on three core components: data, model, and infrastructure. Each of these layers plays a pivotal role in ensuring the efficiency and accuracy of AI systems.

1. Data Monitoring

Data serves as the foundation of AI systems, from training and validation to inference. Monitoring data ensures its quality, relevance, and consistency throughout its lifecycle.

Key Data Monitoring Tools:

Great Expectations

Functionality: Allows teams to define "expectations" or rules for the data, such as valid value ranges or column types, ensuring data quality.

Use Cases: Ideal for data engineers and scientists to validate and monitor data quality across ML pipelines.

Best Practices: Set expectations early in the data pipeline to catch errors before they impact downstream processes.

DataRobot

Functionality: An end-to-end platform that automates data preprocessing, model building, and deployment, along with monitoring capabilities.

Use Cases: Suitable for organizations seeking a unified solution for the entire AI lifecycle.

Best Practices: Regularly review performance metrics to detect data drift and adjust models as necessary.

Apache Airflow

Functionality: A platform for authoring, scheduling, and monitoring complex data workflows, which it models as directed acyclic graphs (DAGs) of tasks.

Use Cases: Particularly valuable in environments with complex data pipelines and ETL processes.

Best Practices: Use clear, consistent naming conventions for DAGs and their tasks to improve readability and maintainability.
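Airflow models a workflow as a directed acyclic graph (DAG) of tasks. The sketch below is not Airflow code. It only illustrates, with the Python standard library, how an orchestrator can derive a valid run order from task dependencies. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_raw_events": set(),
    "validate_raw_events": {"extract_raw_events"},
    "build_features": {"validate_raw_events"},
    "train_model": {"build_features"},
    "publish_metrics": {"train_model", "validate_raw_events"},
}


def execution_order(dag):
    """Return one run order in which every task follows its dependencies.

    graphlib raises CycleError if the dependencies contain a cycle,
    which is why the graph has to be acyclic.
    """
    return list(TopologicalSorter(dag).static_order())


order = execution_order(pipeline)
print(order)
```

Descriptive task names such as `validate_raw_events` make an order like this readable at a glance, which is the point of the naming advice above.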

2. Model Monitoring

AI models are the algorithms that transform data into actionable predictions. Continuous model monitoring helps detect performance degradation over time and ensures models adapt to new data patterns.

Key Model Monitoring Tools:

MLflow

Functionality: Tracks model experiments, manages models through a registry, and supports reproducible packaging and deployment.

Use Cases: Beneficial for teams needing a system to manage multiple models and track experimental outcomes.

Best Practices: Use the centralized tracking server to log experiment details, model performance, and metrics.

Weights & Biases

Functionality: A collaborative platform for tracking ML experiments, visualizing metrics, and evaluating model performance.

Use Cases: Ideal for data scientists involved in hyperparameter tuning and model evaluations in a team setting.

Best Practices: Maintain detailed logs of experiments to provide clear context and facilitate team collaboration.

Neptune.ai

Functionality: A metadata store for machine learning, allowing teams to log experiments, monitor model performance, and store metadata.

Use Cases: Useful for teams focusing on organized collaboration during the model training process.

Best Practices: Integrate Neptune into CI/CD pipelines for continuous tracking and reporting of model changes.
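The tools in this section share one core idea: every training run is recorded with its parameters and metrics so that runs can be compared later. The following is a minimal standard-library sketch of that idea. It does not use the MLflow, Weights & Biases, or Neptune APIs, and the `RunLog` class, run IDs, and metric names are hypothetical.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class RunRecord:
    run_id: str
    params: dict
    metrics: dict
    logged_at: float = field(default_factory=time.time)


class RunLog:
    """In-memory stand-in for an experiment tracking server."""

    def __init__(self):
        self._runs = []

    def log(self, run_id, params, metrics):
        record = RunRecord(run_id, dict(params), dict(metrics))
        self._runs.append(record)
        return record

    def best(self, metric, higher_is_better=True):
        """Return the run with the best value for the given metric."""
        candidates = [r for r in self._runs if metric in r.metrics]
        if not candidates:
            raise ValueError(f"no run has logged metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])

    def to_jsonl(self):
        """Serialize all runs as JSON lines, for example for a CI artifact."""
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self._runs)


runs = RunLog()
runs.log("run-1", {"learning_rate": 0.1, "max_depth": 4}, {"val_f1": 0.81})
runs.log("run-2", {"learning_rate": 0.05, "max_depth": 6}, {"val_f1": 0.86})
best_run = runs.best("val_f1")
print(best_run.run_id, best_run.params)
```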

3. Infrastructure Monitoring

The infrastructure layer consists of the hardware and software that power AI models. Monitoring the infrastructure ensures sufficient resources are available for training and deployment while identifying potential bottlenecks.

Key Infrastructure Monitoring Tools:

Prometheus

Functionality: An open-source monitoring and alerting toolkit designed to collect and analyze metrics from different systems.

Use Cases: Widely used in cloud-native applications and microservices environments.

Best Practices: Focus on defining clear and business-relevant metrics and use Prometheus’ alerting features to notify teams of anomalies.

Grafana

Functionality: A visualization platform that integrates with Prometheus, allowing for the creation of interactive dashboards to monitor system health.

Use Cases: Best suited for visualizing metrics related to application performance and infrastructure utilization.

Best Practices: Regularly update dashboards to reflect real-time changes in infrastructure and business needs.

Kubernetes

Functionality: A container orchestration platform that simplifies the deployment and scaling of containerized applications. It exposes basic health and resource signals, such as liveness and readiness probes and resource requests and limits, which dedicated monitoring tools build on.

Use Cases: Widely adopted for managing large-scale microservices-based systems.

Best Practices: Use Kubernetes monitoring tools (e.g., kube-state-metrics) to monitor cluster health and set alerts for abnormal resource usage.
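Prometheus collects metrics by scraping a plain-text endpoint from each target. As a rough illustration of what such an endpoint returns, the sketch below renders gauge samples in the Prometheus text exposition format using only the standard library. In practice an official client library would do this. The metric name, labels, and values are hypothetical.

```python
def _escape_label_value(value):
    """Escape backslash, double quote, and newline in a label value."""
    return str(value).replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name, help_text, samples):
    """Render (labels, value) samples as one gauge metric family."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        label_str = ",".join(
            f'{key}="{_escape_label_value(val)}"' for key, val in sorted(labels.items())
        )
        series = f"{name}{{{label_str}}}" if label_str else name
        lines.append(f"{series} {value}")
    return "\n".join(lines) + "\n"


exposition = render_gauge(
    "gpu_memory_used_bytes",
    "GPU memory currently in use.",
    [
        ({"node": "train-1", "gpu": "0"}, 1024),
        ({"node": "train-1", "gpu": "1"}, 2048),
    ],
)
print(exposition)
```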

The Observability Stack - Ensuring System Health and Performance

The observability stack is the second critical layer of monitoring AI systems, focusing on deeper insights into system health, performance bottlenecks, and data integrity. Observability allows organizations to troubleshoot issues proactively and optimize AI performance through a detailed view of logs, metrics, and traces.

1. Logging Tools

Logging is a foundational component of observability, allowing teams to capture and analyze events and system states across the AI lifecycle.

Key Logging Tools:

ELK Stack (Elasticsearch, Logstash, Kibana)

Functionality: A widely adopted stack for managing and analyzing log data. Elasticsearch offers log storage and querying, Logstash processes incoming logs, and Kibana provides visualizations.

Use Cases: Particularly useful for monitoring logs in cloud and hybrid environments.

Best Practices: Implement structured logging in your applications to enhance log analysis and simplify the extraction of meaningful insights.

Fluentd

Functionality: An open-source data collector designed to aggregate logs from various sources and centralize them for analysis.

Use Cases: Ideal for large, multi-cloud environments where logs are dispersed across different services.

Best Practices: Ensure Fluentd forwards logs to a central repository for streamlined analysis and troubleshooting.
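Structured logging, recommended above, means emitting each log event as a machine-parseable record rather than free text, so that collectors and search backends can index individual fields. One possible sketch with Python's standard `logging` module follows. The logger name and the fields `model_version`, `request_id`, and `latency_ms` are hypothetical.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    # Hypothetical context fields that callers may pass via `extra=`.
    CONTEXT_FIELDS = ("model_version", "request_id", "latency_ms")

    def format(self, record):
        payload = {
            "timestamp": record.created,
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Values passed via `extra=` become attributes on the record.
        for key in self.CONTEXT_FIELDS:
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload, sort_keys=True)


def build_logger(stream):
    logger = logging.getLogger("inference")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


buffer = io.StringIO()  # stands in for stdout or a log file
log = build_logger(buffer)
log.info(
    "prediction served",
    extra={"model_version": "v3", "request_id": "req-123", "latency_ms": 41},
)
line = buffer.getvalue().strip()
print(line)
```

Because every event is one JSON object per line, a collector can forward it unchanged and a search backend can filter on fields such as `model_version` without regular expressions.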

2. Monitoring and Alerting Tools

Monitoring and alerting tools help detect anomalies in real time, ensuring the smooth operation of AI systems.

Key Monitoring and Alerting Tools:

Grafana

Functionality: As mentioned earlier, Grafana’s visualization capabilities also extend to monitoring and alerting for system performance.

Use Cases: Grafana’s integration with multiple data sources makes it valuable for setting up monitoring dashboards across various AI components.

Best Practices: Combine Grafana with Prometheus for robust monitoring and use its alerting features to send real-time notifications.

Alertmanager (Prometheus ecosystem)

Functionality: Part of the Prometheus ecosystem, Alertmanager receives the alerts fired by Prometheus alerting rules, then deduplicates, groups, and routes them to receivers such as email or chat, with support for silencing and inhibition.

Use Cases: Beneficial in environments where real-time alerts on infrastructure metrics are crucial.

Best Practices: Define clear thresholds for alerts to avoid unnecessary noise and ensure critical incidents are prioritized.
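Part of reducing alert noise is collapsing duplicates and bundling related alerts into one notification. The sketch below illustrates that deduplicate-and-group idea in plain Python. It is a conceptual model, not Alertmanager's implementation or configuration, and the alert names and labels are hypothetical.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Drop alerts with identical label sets, then group by selected labels."""
    groups = defaultdict(list)
    seen = set()
    for alert in alerts:
        fingerprint = tuple(sorted(alert["labels"].items()))
        if fingerprint in seen:
            continue  # same label set already recorded: a duplicate
        seen.add(fingerprint)
        key = tuple(alert["labels"].get(label, "") for label in group_by)
        groups[key].append(alert)
    return dict(groups)


alerts = [
    {"labels": {"alertname": "HighGpuMemory", "cluster": "train-a", "node": "n1"}},
    {"labels": {"alertname": "HighGpuMemory", "cluster": "train-a", "node": "n2"}},
    {"labels": {"alertname": "HighGpuMemory", "cluster": "train-a", "node": "n1"}},
    {"labels": {"alertname": "HighLatency", "cluster": "serve-b", "service": "ranker"}},
]

grouped = group_alerts(alerts, group_by=("alertname", "cluster"))
for key, members in grouped.items():
    print(key, len(members))
```

Here four incoming alerts become two notifications: one for GPU memory on the training cluster covering two nodes, and one for latency on the serving cluster.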

3. Performance Monitoring Tools

Performance monitoring focuses on ensuring AI applications are responsive and performant, especially under high-load conditions.

Key Performance Monitoring Tools:

Sentry

Functionality: A real-time error monitoring tool designed to track application crashes and performance issues.

Use Cases: Ideal for monitoring both web and mobile applications, ensuring a seamless user experience.

Best Practices: Configure Sentry to send alerts on critical issues and integrate it with DevOps workflows to reduce response times.

Datadog

Functionality: A full-stack monitoring service that provides detailed insights into cloud applications, performance, and resource utilization.

Use Cases: Used for performance monitoring in highly distributed cloud environments.

Best Practices: Leverage Datadog’s APM (Application Performance Monitoring) features to identify slowdowns and resource-intensive processes.
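Responsiveness under load is commonly summarized with latency percentiles rather than averages, because a small share of slow requests can hide behind a healthy mean. A minimal sketch using the standard library follows. The sample data and the 250 ms objective are assumptions chosen for illustration.

```python
import statistics


def latency_summary(latencies_ms):
    """Summarize request latencies with percentiles and the maximum."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    # n=100 yields 99 cut points; index k-1 holds the k-th percentile.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(latencies_ms),
    }


def breaches_objective(summary, p95_limit_ms):
    """True when the 95th percentile exceeds the latency objective."""
    return summary["p95"] > p95_limit_ms


# Hypothetical sample: mostly fast requests plus a slow tail.
samples = [40] * 90 + [120] * 6 + [900] * 4
summary = latency_summary(samples)
print(summary, breaches_objective(summary, p95_limit_ms=250))
```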

4. Distributed Tracing Tools

Distributed tracing tools enable teams to visualize and track requests across microservices, helping identify performance bottlenecks and issues in complex AI systems.

Key Distributed Tracing Tools:

Jaeger

Functionality: An open-source tool that provides end-to-end distributed tracing for monitoring and debugging microservices-based applications.

Use Cases: Crucial for understanding how requests flow through AI systems and identifying latency issues.

Best Practices: Ensure all critical services are instrumented to provide complete visibility into request traces.

OpenTelemetry

Functionality: A collection of APIs, SDKs, and tools designed to enable consistent observability by collecting traces, metrics, and logs across systems.

Use Cases: Useful for establishing a unified observability strategy in microservices architectures.

Best Practices: Utilize OpenTelemetry across various platforms and languages to ensure a consistent observability approach.

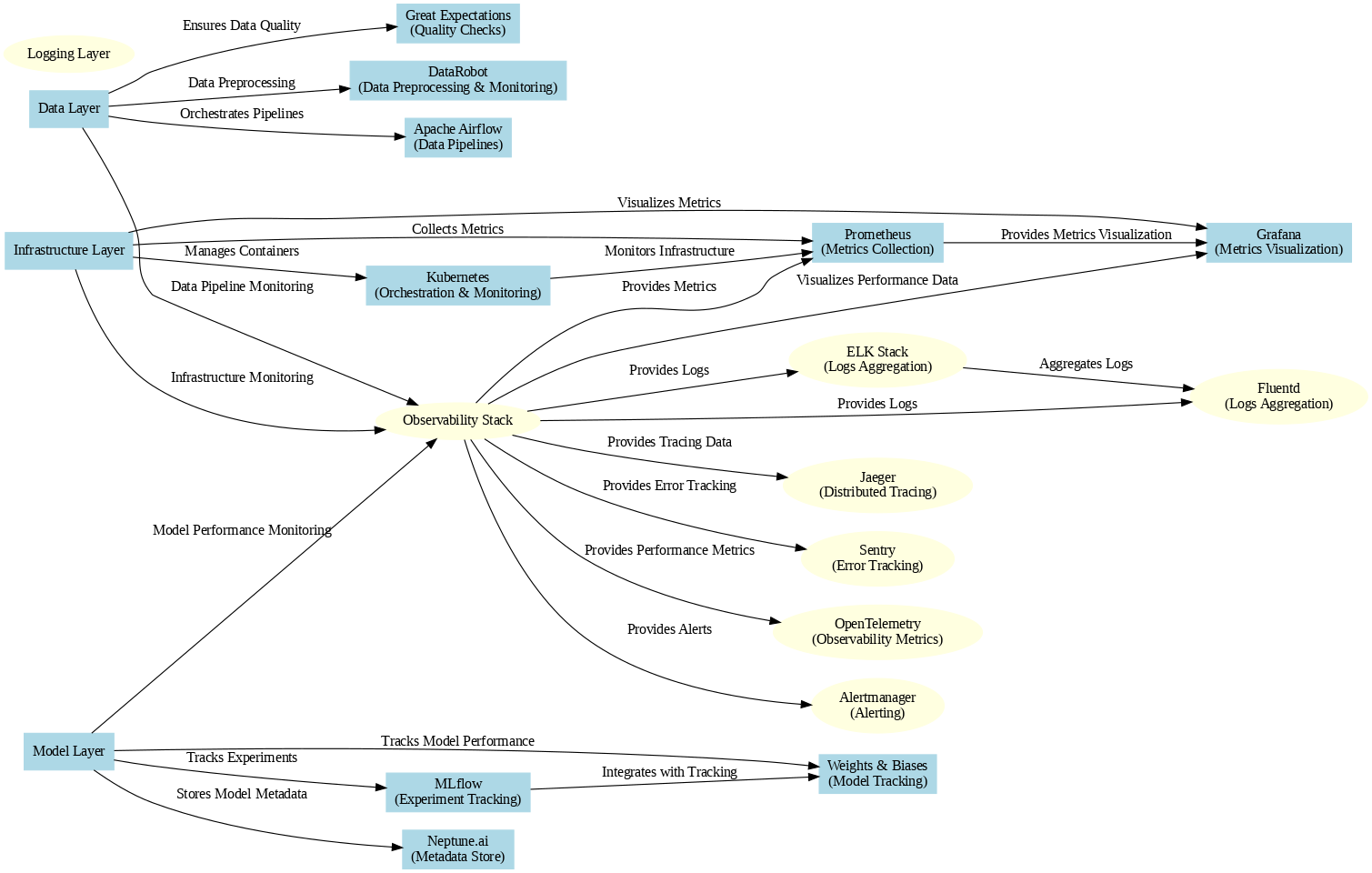

The chart above shows the interdependence of the various tools for AI monitoring.

AI Monitoring at Scale - FlowChart

Python code for the above flowchart: AI_Monitoring_at_Scale_FlowChart.ipynb

Integrating Tools for Effective AI Monitoring

For comprehensive AI monitoring, organizations need to integrate tools from both levels, the component level (data, model, and infrastructure) and the observability stack, to achieve a holistic monitoring solution.

1. Define Metrics and KPIs

Start by identifying the most important Key Performance Indicators (KPIs) and metrics that matter for your AI system's success. These metrics will serve as the foundation of your monitoring strategy and tool selection.

Data Metrics: Include data quality metrics such as data completeness, consistency, and accuracy.

Model Metrics: Focus on performance metrics like accuracy, precision, recall, and F1-score, along with monitoring for concept drift.

Infrastructure Metrics: Track resource utilization metrics, such as CPU, memory, and I/O operations.
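To make the model and data metrics above concrete, here is a small sketch that computes accuracy, precision, recall, and F1-score for a binary classifier, plus a simple completeness ratio for one column. The labels, predictions, rows, and column name are made-up illustration data.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def completeness(rows, column):
    """Fraction of rows in which the column is present and not None."""
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
model_kpis = classification_metrics(y_true, y_pred)

rows = [{"age": 34}, {"age": None}, {"age": 51}, {}]
data_kpis = {"age_completeness": completeness(rows, "age")}
print(model_kpis, data_kpis)
```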

2. Establish Reliable Data Pipelines

Leverage tools like Apache Airflow to create robust data pipelines that consistently feed high-quality data into your AI models. Utilize Great Expectations for data quality monitoring to ensure the integrity of your pipelines.

Data Validation: Implement validation checks at various stages of the data pipeline to catch issues early.

Data Lineage: Use tools that provide data lineage tracking to understand the flow of data through the pipeline.
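The validation checks described above can be thought of as named rules applied to every record at a pipeline stage. The following standard-library sketch shows that pattern. It is not the Great Expectations API, and the helper names, columns, and ranges are hypothetical.

```python
def expect_not_null(column):
    """Rule: the column must be present and not None."""
    def check(row):
        return row.get(column) is not None
    return f"{column} is not null", check


def expect_between(column, low, high):
    """Rule: the column must be numeric and within [low, high]."""
    def check(row):
        value = row.get(column)
        return isinstance(value, (int, float)) and low <= value <= high
    return f"{low} <= {column} <= {high}", check


def validate(rows, expectations):
    """Return one failure entry per rule that any row violates."""
    failures = []
    for name, check in expectations:
        bad_rows = [index for index, row in enumerate(rows) if not check(row)]
        if bad_rows:
            failures.append({"expectation": name, "failed_rows": bad_rows})
    return failures


batch = [
    {"user_id": "u1", "age": 34},
    {"user_id": None, "age": 29},
    {"user_id": "u3", "age": 212},
]
rules = [expect_not_null("user_id"), expect_between("age", 0, 120)]

failures = validate(batch, rules)
for failure in failures:
    print(failure)
```

Running the same rules at ingestion and again before training is one way to localize where bad records enter the pipeline.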

3. Monitor Model Performance Regularly

Employ MLflow or Weights & Biases to maintain a close eye on model performance and training outcomes. Regular assessments of model performance against defined metrics are crucial for detecting drift and determining retraining needs.

Model Drift Detection: Set up alerts for any significant changes in model performance, indicating potential drift in the underlying data.

Re-evaluation Schedule: Establish a routine schedule for model evaluations based on business cycles or changes in data patterns.
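Performance-based alerts need ground-truth labels, which often arrive late. A complementary signal is to compare the distribution of an input feature in production against the training data. The sketch below uses the Population Stability Index (PSI) for that purpose. PSI is a technique chosen here for illustration and is not named in this article, and the 0.25 cutoff is a commonly quoted rule of thumb rather than a fixed standard.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample and a current sample of one feature."""
    low, high = min(expected), max(expected)
    if high == low:
        raise ValueError("reference sample has no spread")
    width = (high - low) / bins

    def bin_shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            # Clamp values outside the reference range into the edge bins.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(count / len(values), eps) for count in counts]

    ref, cur = bin_shares(expected), bin_shares(actual)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


training_feature = list(range(100))
stable_feature = list(range(100))
shifted_feature = [value + 50 for value in range(100)]

psi_stable = population_stability_index(training_feature, stable_feature)
psi_shifted = population_stability_index(training_feature, shifted_feature)
DRIFT_THRESHOLD = 0.25  # assumed cutoff; tune per feature and use case
print(psi_stable, psi_shifted, psi_shifted > DRIFT_THRESHOLD)
```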

4. Set Up Alerts and Dashboards for Real-Time Insights

Use Prometheus and Grafana to create dashboards that provide insights into system health. Set up alerts based on your defined metrics to ensure proactive incident management.

Alerting Thresholds: Define clear thresholds for each metric to minimize false positives and ensure alerts are actionable.

Dashboard Design: Design dashboards that are intuitive and focused on high-priority metrics, allowing teams to quickly assess system health.
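One common way to keep threshold alerts actionable is to fire only when a breach persists, similar in spirit to the `for` clause of a Prometheus alerting rule. The class below is a plain-Python sketch of that behavior. The class name, threshold, and sample values are hypothetical.

```python
class ThresholdAlert:
    """Fire only after the metric exceeds the threshold on N consecutive samples."""

    def __init__(self, threshold, for_samples=3):
        if for_samples < 1:
            raise ValueError("for_samples must be at least 1")
        self.threshold = threshold
        self.for_samples = for_samples
        self._consecutive_breaches = 0

    def observe(self, value):
        """Record one sample and return True while the alert is firing."""
        if value > self.threshold:
            self._consecutive_breaches += 1
        else:
            self._consecutive_breaches = 0  # any healthy sample resets the streak
        return self._consecutive_breaches >= self.for_samples


gpu_utilization = [95, 70, 92, 93, 96, 60]  # percent, one sample per scrape
alert = ThresholdAlert(threshold=90, for_samples=3)
firing = [alert.observe(value) for value in gpu_utilization]
print(firing)
```

The single spike at the start never fires. Only the sustained run of three high samples does, and the alert clears as soon as utilization drops.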

5. Implement Logging and Tracing for Deeper Insights

Integrate logging tools like the ELK Stack or Fluentd to collect log data systematically. Use distributed tracing tools such as Jaeger to gain insights into the flow of requests across microservices.

Centralized Logging: Ensure all logs from various services are centralized to facilitate easier searching and correlation.

Trace Context Propagation: Implement context propagation across microservices to correlate logs with traces effectively.
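Context propagation usually means passing a trace identifier along with every outgoing request and stamping it on every log line. The sketch below follows the header layout of the W3C Trace Context `traceparent` field (version, trace ID, parent span ID, flags) using only the standard library. Tracing SDKs such as OpenTelemetry handle this automatically, and the helper names here are hypothetical.

```python
import re
import secrets

# version "00", 32 hex chars of trace ID, 16 hex chars of span ID, 2 hex chars of flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled=True):
    """Start a new trace at the edge of the system."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def child_traceparent(incoming):
    """Keep the caller's trace ID but issue a fresh span ID for this hop."""
    match = TRACEPARENT_RE.match(incoming or "")
    if not match:
        return new_traceparent()  # missing or malformed header: start a new trace
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


def trace_id_of(header):
    """Extract the trace ID so it can be attached to log records."""
    match = TRACEPARENT_RE.match(header or "")
    return match.group(1) if match else None


incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
outgoing = child_traceparent(incoming)
log_fields = {"message": "features fetched", "trace_id": trace_id_of(outgoing)}
print(outgoing, log_fields)
```

Because the trace ID stays constant across hops, searching centralized logs for that one value returns every log line belonging to the request.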

6. Automate Processes for Efficiency

Streamline monitoring through automation by setting up data collection and analysis processes. Feedback from monitoring efforts should drive continuous improvements in both model performance and data quality.

Automated Reporting: Generate automated reports on model performance and data quality to keep stakeholders informed.

Continuous Integration/Continuous Deployment (CI/CD): Integrate monitoring tools into your CI/CD pipeline to catch issues early in the deployment process.
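One way to wire monitoring into CI/CD is a quality gate: a script that compares the candidate model's metrics with agreed limits and fails the pipeline when any limit is violated. A minimal sketch follows. The metric names and limits are assumptions, and how the exit code is consumed depends on the CI system.

```python
def gate_violations(metrics, minimums=None, maximums=None):
    """Return a human-readable message for every violated limit."""
    violations = []
    for name, floor in (minimums or {}).items():
        value = metrics.get(name)
        if value is None or value < floor:
            violations.append(f"{name}={value} is below the required minimum {floor}")
    for name, ceiling in (maximums or {}).items():
        value = metrics.get(name)
        if value is None or value > ceiling:
            violations.append(f"{name}={value} is above the allowed maximum {ceiling}")
    return violations


def gate_exit_code(metrics, minimums=None, maximums=None):
    """0 when all limits hold, 1 otherwise, matching the usual CI convention."""
    problems = gate_violations(metrics, minimums, maximums)
    for problem in problems:
        print("GATE FAILED:", problem)
    return 1 if problems else 0


# Hypothetical metrics produced by an evaluation step earlier in the pipeline.
candidate_metrics = {"f1": 0.82, "p95_latency_ms": 310, "null_rate": 0.01}
exit_code = gate_exit_code(
    candidate_metrics,
    minimums={"f1": 0.80},
    maximums={"p95_latency_ms": 250, "null_rate": 0.05},
)
print("exit code:", exit_code)
```

The same messages can double as the body of an automated report, so stakeholders see why a deployment was blocked.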

Challenges in Monitoring AI at Scale

As organizations strive to monitor AI systems effectively, they may encounter various challenges that can impede their progress:

1. Data Quality Issues

Maintaining the quality and integrity of data is a common challenge. Inconsistent or poor-quality data can lead to unreliable model predictions and overall system performance.

2. Model Drift Over Time

AI models can experience performance degradation as the underlying data distribution changes. Continuous monitoring is necessary to detect and address model drift proactively.

3. Increasing Infrastructure Complexity

Scaling AI initiatives often leads to complex infrastructure, making it challenging to monitor all components efficiently. A well-defined strategy for infrastructure monitoring is vital.

4. Collaboration Between Teams

Effective communication and collaboration among data scientists, engineers, and business stakeholders are essential for successful monitoring. Breaking down silos within organizations can enhance observability efforts.

5. Resource Allocation Concerns

Monitoring tools can consume considerable system resources. Organizations need to balance monitoring depth against the overhead it places on the applications being monitored.

Best Practices for Successful AI Monitoring

To navigate challenges and build an effective monitoring strategy, organizations should consider the following best practices:

1. Prioritize Key Metrics

Focus on a limited set of critical metrics that directly influence business outcomes. Avoid overwhelming teams with excessive data that may dilute focus.

2. Automate Monitoring Processes

Streamline monitoring through automation to reduce manual overhead. Automation can enhance response times and ensure consistent monitoring practices.

3. Foster a Culture of Observability

Encourage teams to adopt observability as a core principle. Ensure that monitoring is viewed as a shared responsibility across departments, not just within IT teams.

4. Regularly Review and Update Monitoring Strategies

As technologies and business needs evolve, it’s crucial to periodically assess monitoring strategies and tools to ensure they remain aligned with organizational goals.

5. Invest in Training and Skill Development

Provide training for teams on utilizing monitoring tools effectively. A well-informed team can interpret data accurately and respond to incidents promptly.

Conclusion

Monitoring AI at scale is an essential element of successful machine learning initiatives. By focusing on the core components of data, model, and infrastructure in Level 1, and leveraging an observability stack in Level 2, organizations can gain comprehensive insights into their AI systems. Integrating various tools and adhering to best practices empowers teams to navigate the complexities of AI monitoring effectively.

As the reliance on AI and machine learning continues to grow, so will the importance of effective monitoring. Investing in the right tools and fostering a culture of observability will enable businesses to unlock the full potential of their AI initiatives, driving innovation and value in an increasingly competitive digital landscape. By implementing a structured monitoring strategy, organizations can enhance operational efficiency, improve decision-making, and ultimately achieve their business objectives in the AI-driven world.

This structured and detailed approach to monitoring AI at scale can help organizations ensure their systems are not only performing optimally but are also capable of adapting to changes in data and operational demands.
