Programmatically Removing & Updating Default Lakehouse Of A Fabric Notebook

Sandeep Pawar

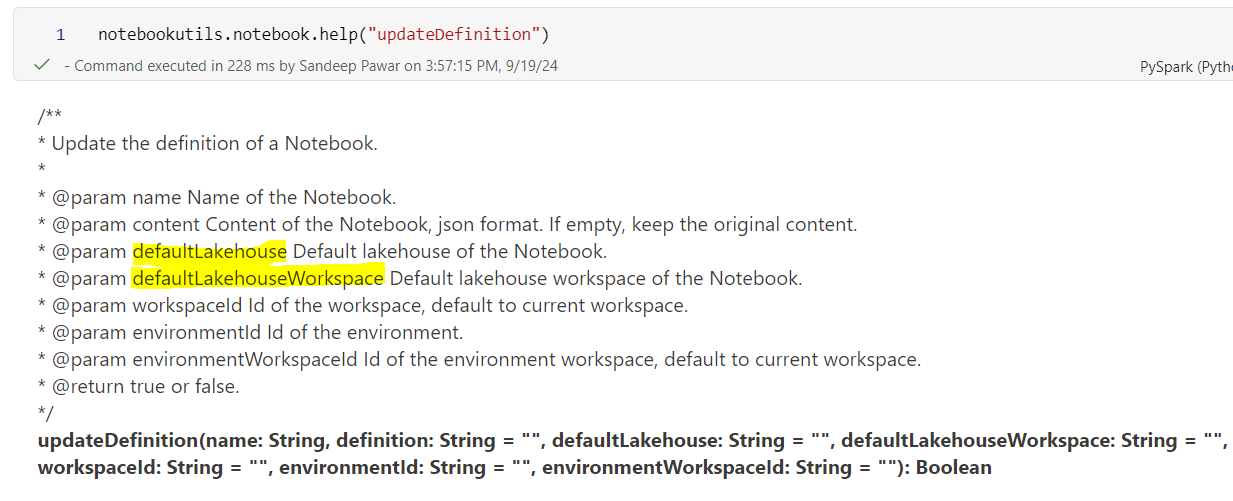

I have written about the default lakehouse of a Fabric notebook before here and here. However, unless you used the notebook API, there was no quick and easy way to remove all or selected lakehouses, or to update the default lakehouse of a notebook. Thanks to a tip from Yi Lin of the Notebooks product team, it turns out that notebookutils.notebook.updateDefinition has two extra parameters, defaultLakehouse and defaultLakehouseWorkspace, which can be used to update the default lakehouse of a notebook. You can also use it to update the environment attached to a notebook. Below are some scenarios showing how it can be used.

Get Notebook Definition:

Use notebookutils.notebook.getDefinition to get the notebook in ipynb format, as below:

import json

nb = json.loads(
    notebookutils.notebook.getDefinition(
        "<notebook_name>",  # name of the notebook
        workspaceId="<workspace_id_notebook>",  # workspace ID of the notebook
    )
)
# returns the ipynb JSON, parsed into a dict

Get the default lakehouse of a notebook:

import json

nb = json.loads(
    notebookutils.notebook.getDefinition(
        "<notebook_name>",  # name of the notebook
        workspaceId="<workspace_id_notebook>",  # workspace ID of the notebook
    )
)["metadata"]

## Returns
"""
{'language_info': {'name': 'python'},
 'kernelspec': {'name': 'synapse_pyspark',
  'language': 'Python',
  'display_name': 'Synapse PySpark'},
 'kernel_info': {'name': 'synapse_pyspark'},
 'widgets': {},
 'microsoft': {'language': 'python',
  'language_group': 'synapse_pyspark',
  'ms_spell_check': {'ms_spell_check_language': 'en'}},
 'nteract': {'version': 'nteract-front-end@1.0.0'},
 'spark_compute': {'compute_id': '/trident/default'},
 'dependencies': {'lakehouse': {'default_lakehouse': 'af9de4fd-e3-772099b80f9d',
   'known_lakehouses': [{'id': 'af9de4fd--772099b80f9d'},
    {'id': 'ab14c0f6-4f284707'},
    {'id': 'c9c60586-dc95ac3c'},
    {'id': '63c748e6-7b34c7f3'},
    {'id': '1d289df0-b9585c'},
    {'id': '12414a55-cd3be'}],
   'default_lakehouse_name': 'workspace1',
   'default_lakehouse_workspace_id': 'd70c1ed700a13145ff'},
  'environment': {}}}
"""

As you can see above, the definition returns a list of all the lakehouses mounted in the notebook, including the default lakehouse.
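To make that structure easier to inspect, here is a minimal sketch of a helper that pulls the lakehouse details out of a parsed definition. The function name summarize_lakehouses is hypothetical, and it assumes the metadata has the same shape as the output shown above (dependencies, then lakehouse, then known_lakehouses); notebooks without an attached lakehouse simply produce empty values.

```python
def summarize_lakehouses(nb: dict) -> dict:
    """Summarize the lakehouses referenced in a parsed notebook definition.

    `nb` is the full dict returned by json.loads(getDefinition(...)).
    Assumes the metadata layout shown in the sample output above.
    """
    lakehouse = (
        nb.get("metadata", {})
        .get("dependencies", {})
        .get("lakehouse", {})
        or {}
    )
    return {
        "default_id": lakehouse.get("default_lakehouse"),
        "default_name": lakehouse.get("default_lakehouse_name"),
        "default_workspace_id": lakehouse.get("default_lakehouse_workspace_id"),
        # Keep only entries that actually carry an id
        "known_ids": [
            item["id"]
            for item in lakehouse.get("known_lakehouses", [])
            if "id" in item
        ],
    }
```

Passing the dict from the first getDefinition example (not just its 'metadata' key) returns the default lakehouse ID, name and workspace ID, plus the list of all known lakehouse IDs.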

Remove All Lakehouses

To remove all lakehouses, we can remove all known and default lakehouses as below:

import json

def remove_all_lakehouses(notebook_name, workspace_id):
    try:
        nb = json.loads(notebookutils.notebook.getDefinition(notebook_name, workspaceId=workspace_id))
    except Exception as e:
        # Stop here: without a definition there is nothing to update
        print(f"Error, check notebook & workspace id: {e}")
        return

    if 'dependencies' in nb['metadata'] and 'lakehouse' in nb['metadata']['dependencies']:
        # Remove all lakehouses
        nb['metadata']['dependencies']['lakehouse'] = {}

    # Update the notebook definition without any lakehouses
    notebookutils.notebook.updateDefinition(
        name=notebook_name,
        content=json.dumps(nb),
        workspaceId=workspace_id
    )
    print(f"All lakehouses have been removed from notebook '{notebook_name}'.")

# e.g.
remove_all_lakehouses("Notebook 1", "<workspaceid_of_notebook>")
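For selective removal, the same approach should work with a filtered list instead of an empty dict. The sketch below is an assumption-laden illustration rather than a tested recipe: drop_lakehouses is a hypothetical helper, it relies on the metadata shape shown earlier, and I have not verified how the service treats a partially trimmed known_lakehouses list.

```python
import copy

def drop_lakehouses(nb: dict, ids_to_remove: set) -> dict:
    """Return a copy of the parsed definition without the given lakehouse IDs.

    Hypothetical helper; assumes metadata -> dependencies -> lakehouse
    has the layout shown in the sample output.
    """
    updated = copy.deepcopy(nb)  # leave the caller's dict untouched
    lakehouse = (
        updated.get("metadata", {}).get("dependencies", {}).get("lakehouse")
    )
    if not lakehouse:
        return updated  # nothing attached, nothing to remove

    # Keep only the lakehouses that were not asked to be removed
    lakehouse["known_lakehouses"] = [
        lh
        for lh in lakehouse.get("known_lakehouses", [])
        if lh.get("id") not in ids_to_remove
    ]

    # If the default lakehouse is being removed, clear its related keys too
    if lakehouse.get("default_lakehouse") in ids_to_remove:
        for key in (
            "default_lakehouse",
            "default_lakehouse_name",
            "default_lakehouse_workspace_id",
        ):
            lakehouse.pop(key, None)
    return updated
```

The returned dict can then be passed as content=json.dumps(updated) to notebookutils.notebook.updateDefinition, in the same way remove_all_lakehouses does.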

Add A New Default Lakehouse

To add a new lakehouse and make it the default lakehouse without removing any existing lakehouses:

(notebookutils
    .notebook
    .updateDefinition(
        name="<notebook name>",
        workspaceId="<workspace id of the notebook>",
        defaultLakehouse="<lakehouse name of the new lakehouse>",
        defaultLakehouseWorkspace="<workspace id of new lakehouse to be added>",
    )
)
# the content parameter is optional; omitting it keeps the existing content

Remove All Lakehouses And Add New Lakehouse

To remove all existing lakehouses attached to the notebook and make a new lakehouse the default lakehouse:

# first remove the lakehouses
remove_all_lakehouses("Notebook_name", "workspaceid_of_notebook")

# then add the new lakehouse
(notebookutils
    .notebook
    .updateDefinition(
        name="Notebook_name",
        workspaceId="workspaceid_of_notebook",
        defaultLakehouse="<lakehouse name of the new lakehouse>",
        defaultLakehouseWorkspace="<workspace id of new lakehouse to be added>",
    )
)

Needless to say, when you make these changes, be sure your code references the right paths, since relative paths resolve against the default lakehouse. You can use the .lakehouse utilities for this (see here).
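One way to make paths independent of whichever lakehouse is the default is to build absolute OneLake paths from the workspace and lakehouse IDs. The helper below is a small sketch of that idea; the function name onelake_table_path is hypothetical, and the IDs in the usage line are placeholders, not real values.

```python
def onelake_table_path(workspace_id: str, lakehouse_id: str, table: str) -> str:
    """Build an absolute ABFSS path to a lakehouse table in OneLake.

    Hypothetical helper: the path does not depend on the notebook's
    default lakehouse, so it keeps working after the default changes.
    """
    return (
        f"abfss://{workspace_id}@onelake.dfs.fabric.microsoft.com/"
        f"{lakehouse_id}/Tables/{table}"
    )

# Placeholder IDs for illustration only
path = onelake_table_path("<workspace_id>", "<lakehouse_id>", "sales")
```

In a Fabric notebook, a path like this can be passed to spark.read.load(path) in place of a relative Tables/sales reference.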

Written by

Sandeep Pawar

Principal Program Manager, Microsoft Fabric CAT helping users and organizations build scalable, insightful, secure solutions. Blogs, opinions are my own and do not represent my employer.