Normalization and Denormalization in Databases

Cañete,Brandon L.

Table of contents

- What is Normalization?

- Why Normalization is Important for Maintaining an Efficient and Consistent Database Structure Documentation

- Normal Forms

- Advantages of Normalization

- What is Denormalization?

- Why Denormalization is sometimes necessary to improve performance, especially in large-scale Databases where read-heavy operations are common Documentation

- When to Use Denormalization

- Compare Normalization vs. Denormalization

- Practical Examples

In this research, we will examine the differences between normalization and denormalization in databases.

What is Normalization?

- The purpose of normalization in database design is to organize data to minimize redundancy and improve data integrity. This process involves structuring a database according to a series of normal forms (rules) to ensure that the relationships between tables are logical and efficient.

Why Normalization is Important for Maintaining an Efficient and Consistent Database Structure Documentation

Reduces Data Redundancy - Normalization eliminates duplicate data by organizing it into related tables. This minimizes redundant storage of data, which conserves disk space and improves data management.

Improves Data Integrity and Consistency - By ensuring that each piece of data is stored only once, normalization reduces the risk of anomalies (insertion, update, and deletion anomalies) that can corrupt data integrity.

Facilitates Better Organization and Query Performance - A normalized database is organized into tables with minimal redundancy and clear relationships between them (via foreign keys), making it easier to query and manage.

Improves Data Flexibility - By structuring data in a normalized way, it is easier to maintain relationships between tables. This supports more complex queries and relationships, such as one-to-many or many-to-many relationships.

Optimizes Update and Delete Operations - With reduced redundancy, update and delete operations become more efficient. Changes only need to be made in a single table or a limited set of records, rather than across multiple tables where duplicate data might exist.

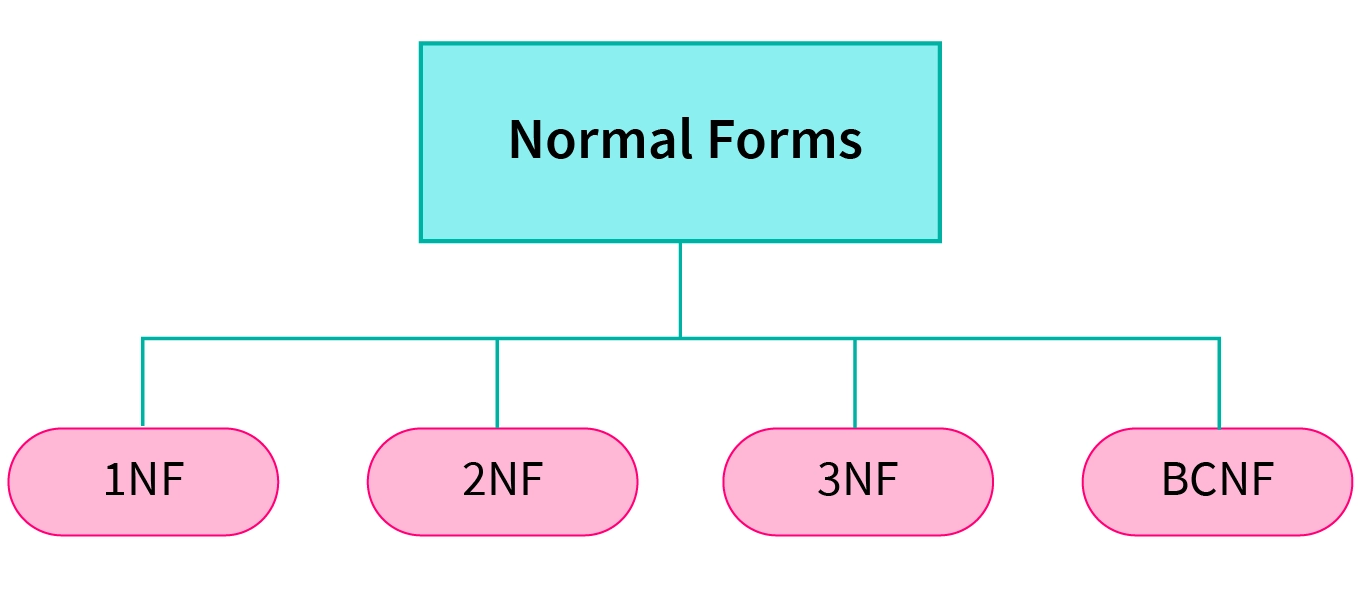
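To make the update anomaly mentioned above concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `employee_flat` table, its columns, and the sample rows are hypothetical and exist only for illustration. Because the department phone number is repeated on every employee row, an update that misses one copy leaves the data contradicting itself.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical flat table: the department phone is repeated on every employee row.
conn.execute("CREATE TABLE employee_flat (name TEXT, department TEXT, dept_phone TEXT)")
conn.executemany(
    "INSERT INTO employee_flat VALUES (?, ?, ?)",
    [
        ("Ana", "Sales", "555-0100"),
        ("Ben", "Sales", "555-0100"),
        ("Cara", "IT", "555-0200"),
    ],
)

# This update touches only one of the duplicated rows, so the copies now disagree.
conn.execute("UPDATE employee_flat SET dept_phone = '555-0199' WHERE name = 'Ana'")


def phones_for(department):
    """Return the distinct phone numbers recorded for one department."""
    rows = conn.execute(
        "SELECT DISTINCT dept_phone FROM employee_flat "
        "WHERE department = ? ORDER BY dept_phone",
        (department,),
    ).fetchall()
    return [row[0] for row in rows]


sales_phones = phones_for("Sales")
print(sales_phones)  # the Sales department now has more than one phone number
```

If the phone number lived in a separate departments table, the same change would be a single-row update and the contradiction could not occur.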

Normal Forms

First Normal Form (1NF): Ensures that each column contains atomic (indivisible) values, and each record is unique.

Second Normal Form (2NF): Builds on 1NF by ensuring that every non-key attribute depends on the whole primary key, not on just part of a composite key.

Third Normal Form (3NF): Ensures that there are no transitive dependencies, meaning non-key attributes depend only on the primary key.

Boyce-Codd Normal Form (BCNF): A stricter version of 3NF in which the determinant of every non-trivial functional dependency must be a candidate key.
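The idea of a transitive dependency can be sketched in plain Python. The `holds` helper and the sample rows below are hypothetical: the helper only checks whether a dependency is consistent with the sample data, which is weaker than knowing that the dependency is a rule of the schema. Under that assumption, the sketch shows a table where `dept_name` depends on `dept_id` rather than directly on the key `emp_id`, and then splits it the way 3NF suggests.

```python
def holds(rows, determinant, dependent):
    """Return True if each combination of `determinant` values maps to one `dependent` value."""
    seen = {}
    for row in rows:
        key = tuple(row[col] for col in determinant)
        value = row[dependent]
        # setdefault stores the first value seen for this key and returns it afterwards.
        if seen.setdefault(key, value) != value:
            return False
    return True


# Hypothetical sample rows; emp_id is the primary key.
employees = [
    {"emp_id": 1, "name": "Ana", "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 2, "name": "Ben", "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 3, "name": "Cara", "dept_id": 20, "dept_name": "IT"},
]

# dept_name is determined by dept_id, a non-key attribute:
# emp_id -> dept_id -> dept_name is the transitive dependency that 3NF removes.
transitive = holds(employees, ["dept_id"], "dept_name")

# 3NF decomposition: the dept_id -> dept_name fact moves into its own table.
departments = {row["dept_id"]: row["dept_name"] for row in employees}
employees_3nf = [
    {col: val for col, val in row.items() if col != "dept_name"} for row in employees
]
```

After the split, each department name is stored once in `departments`, and `employees_3nf` keeps only `dept_id` as a reference to it.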

Advantages of Normalization

Reduces redundant data.

Provides data consistency within the database.

More flexible database design.

Higher database security.

Faster inserts, updates, and deletes, because each fact is changed in one place.

Greater overall database organization.

What is Denormalization?

- Denormalization in database design is the process of deliberately introducing redundancy into a normalized database to improve performance, especially for read-heavy applications.

Why Denormalization is sometimes necessary to improve performance, especially in large-scale Databases where read-heavy operations are common Documentation

Improved Read Performance - In large-scale databases, read operations often involve multiple JOIN queries across several normalized tables, which can be slow. Denormalization reduces the need for these joins by storing related data in the same table, allowing faster access during read-heavy operations.

Reduced Query Complexity - Denormalized tables simplify queries, reducing computational overhead, which is especially important for large databases with high traffic.

Caching and Aggregation Benefits - Denormalization can be helpful when aggregating data, such as totals, counts, or averages, which would otherwise require multiple GROUP BY operations on large datasets. Pre-aggregating and storing frequently accessed data in a denormalized form reduces the need for real-time computation during read queries, which improves performance.

Avoiding High Latency in Distributed Systems - By denormalizing, you store related data together in a single location or replica, which can reduce access times, especially in global-scale systems.

Handling Read-Heavy Workloads - Denormalization optimizes the database for frequent reads, prioritizing fast data retrieval over strict adherence to normalization principles.
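The pre-aggregation point above can be sketched with Python's built-in `sqlite3` module. The `order_items` and `order_totals` tables and the helper functions are hypothetical names chosen for illustration. The sketch stores each order's total in a separate table so that reads become a primary-key lookup instead of a GROUP BY, and it assumes the application is responsible for refreshing that table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE order_items (
    order_id INTEGER, product TEXT, quantity INTEGER, unit_price_cents INTEGER
);
-- Denormalized, pre-aggregated copy of data that already exists in order_items.
CREATE TABLE order_totals (order_id INTEGER PRIMARY KEY, total_cents INTEGER);
INSERT INTO order_items VALUES
    (1, 'pen', 3, 150), (1, 'notebook', 1, 400), (2, 'pen', 10, 150);
""")


def refresh_totals():
    """Recompute every stored total from the normalized order_items rows."""
    conn.execute("DELETE FROM order_totals")
    conn.execute("""
        INSERT INTO order_totals (order_id, total_cents)
        SELECT order_id, SUM(quantity * unit_price_cents)
        FROM order_items
        GROUP BY order_id
    """)


def total_on_the_fly(order_id):
    """Aggregate at read time (the normalized approach)."""
    return conn.execute(
        "SELECT SUM(quantity * unit_price_cents) FROM order_items WHERE order_id = ?",
        (order_id,),
    ).fetchone()[0]


def total_precomputed(order_id):
    """Read the stored total with a primary-key lookup (the denormalized approach)."""
    return conn.execute(
        "SELECT total_cents FROM order_totals WHERE order_id = ?", (order_id,)
    ).fetchone()[0]


refresh_totals()
```

The trade-off is visible in the design: `total_precomputed` stays cheap no matter how many items an order has, but it returns stale values if `order_items` changes and `refresh_totals` has not run since.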

When to Use Denormalization

Denormalization is particularly useful in large data warehouses with significant volumes and concurrent connections, as it can optimize query efficiency and simplify data structures.

Denormalized data is well-suited for analytical systems with heavy read operations, such as a data warehouse used for business intelligence reporting, where complex queries analyze large volumes of data from multiple sources.

Denormalization can be beneficial in read-optimized databases (like Data Warehouses or OLAP systems) where the primary goal is to improve the performance of read-heavy operations.

Although denormalization can significantly enhance read performance and simplify query structures, it also introduces challenges related to data integrity and increased storage needs. Careful consideration is required to balance these trade-offs based on the specific use case.

Compare Normalization vs. Denormalization

Normalization and denormalization are two key concepts in database design, each serving a specific purpose. The goal of normalization is to minimize data redundancy and dependency by organizing data into well-structured tables. Denormalization involves combining tables that have been normalized to improve query performance and simplify data retrieval.

Normalization reduces data redundancy by organizing data into related tables, which minimizes duplicate data. It enhances data integrity by enforcing relationships through foreign keys, ensuring that data is consistent and accurate.

Denormalization improves read performance by reducing the need for joins. Related data is kept together in fewer tables, at the cost of duplicated values that must be kept in sync.

Practical Examples

Real-world examples or case studies where normalization is applied:

Employee Records: An employee database could have a single table with employee name, department, job title, salary, and contact information. Normalization would involve creating separate tables for Employees, Departments, and Job Titles, linking them with foreign keys.

Inventory Management: An inventory system may begin with a single Products table. Normalization could involve creating separate tables for Products, Product Categories, Suppliers, and Warehouses, linked appropriately.
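The employee records example can be sketched as a small schema using Python's built-in `sqlite3` module. All table names, column names, and sample rows here are hypothetical, and contact information is left out for brevity. The sketch shows employees pointing at departments and job titles through foreign keys, so that a department name is stored exactly once.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE departments (dept_id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE job_titles (title_id INTEGER PRIMARY KEY, title TEXT NOT NULL UNIQUE);
CREATE TABLE employees (
    emp_id   INTEGER PRIMARY KEY,
    name     TEXT NOT NULL,
    salary   INTEGER,
    dept_id  INTEGER NOT NULL REFERENCES departments(dept_id),
    title_id INTEGER NOT NULL REFERENCES job_titles(title_id)
);
INSERT INTO departments VALUES (1, 'Sales'), (2, 'IT');
INSERT INTO job_titles VALUES (1, 'Manager'), (2, 'Analyst');
INSERT INTO employees VALUES
    (1, 'Ana', 5000, 1, 1), (2, 'Ben', 4000, 1, 2), (3, 'Cara', 4500, 2, 2);
""")


def employee_report():
    """Join the three tables back into one row per employee."""
    return conn.execute("""
        SELECT e.name, d.name, t.title
        FROM employees e
        JOIN departments d ON d.dept_id = e.dept_id
        JOIN job_titles t ON t.title_id = e.title_id
        ORDER BY e.emp_id
    """).fetchall()


# Renaming a department is a single-row update; every employee in it sees the change.
conn.execute("UPDATE departments SET name = 'Field Sales' WHERE dept_id = 1")
report = employee_report()
```

The cost of this layout is the pair of joins in `employee_report`, which is the overhead that denormalization tries to avoid for read-heavy workloads.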

Real-world examples or case studies where denormalization is applied:

Amazon (E-commerce Platform): Amazon's platform handles millions of transactions every day, with users frequently searching for products, reviewing order histories, and updating personal information. To optimize read performance, Amazon denormalizes data such as product information, user profiles, and shopping cart details. For example, when a user views an order, the system may store a snapshot of the product details (price, description) at the time of purchase in the order record, instead of joining with the products table every time. By denormalizing, Amazon reduces the number of database joins, ensuring faster retrieval of user orders and product information, which is critical during peak times like Black Friday sales.
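The order snapshot idea can be sketched with Python's built-in `sqlite3` module. This is not Amazon's actual schema; the tables, columns, and helper functions below are hypothetical and only illustrate the pattern of copying product details into the order row at purchase time.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (
    product_id INTEGER PRIMARY KEY, description TEXT, price_cents INTEGER
);
CREATE TABLE orders (
    order_id   INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES products(product_id),
    -- Denormalized snapshot columns copied from products when the order is placed.
    description_at_purchase TEXT,
    price_cents_at_purchase INTEGER
);
INSERT INTO products VALUES (1, 'USB cable', 899);
""")


def place_order(order_id, product_id):
    """Copy the current product details into the new order row."""
    description, price = conn.execute(
        "SELECT description, price_cents FROM products WHERE product_id = ?",
        (product_id,),
    ).fetchone()
    conn.execute(
        "INSERT INTO orders VALUES (?, ?, ?, ?)",
        (order_id, product_id, description, price),
    )


def view_order(order_id):
    """Read the order without joining to the products table."""
    return conn.execute(
        "SELECT description_at_purchase, price_cents_at_purchase "
        "FROM orders WHERE order_id = ?",
        (order_id,),
    ).fetchone()


place_order(100, 1)
# A later price change does not alter what the customer was charged.
conn.execute("UPDATE products SET price_cents = 1099 WHERE product_id = 1")
order_view = view_order(100)
```

Besides avoiding the join, the snapshot preserves history: the order keeps the price that applied at purchase time even after the product row changes.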

Situations where databases are first normalized for data integrity and then selectively denormalized to optimize performance:

Content Management Systems (CMS):

Normalized Situation: A CMS like WordPress might normalize content into separate tables for posts, authors, categories, and tags to maintain data integrity and structure.

Denormalization Situation: To optimize page load speeds, a CMS might denormalize data by creating a materialized view or cache that stores all relevant post information (author, category, and tags) in a single structure, making it faster to retrieve in high-traffic scenarios.
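The CMS pattern can be sketched with Python's built-in `sqlite3` module. SQLite has no materialized views, so this sketch emulates one with an ordinary `post_summary` table that is rebuilt from the normalized tables. The schema is hypothetical and is not WordPress's actual schema, and categories are omitted for brevity.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Normalized source tables.
CREATE TABLE authors (author_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE posts (
    post_id INTEGER PRIMARY KEY, title TEXT,
    author_id INTEGER REFERENCES authors(author_id)
);
CREATE TABLE tags (tag_id INTEGER PRIMARY KEY, label TEXT);
CREATE TABLE post_tags (
    post_id INTEGER REFERENCES posts(post_id),
    tag_id  INTEGER REFERENCES tags(tag_id)
);
-- Denormalized read model: one row holds everything a post page needs.
CREATE TABLE post_summary (
    post_id INTEGER PRIMARY KEY, title TEXT, author_name TEXT, tag_list TEXT
);
INSERT INTO authors VALUES (1, 'Ana');
INSERT INTO posts VALUES (1, 'Intro to SQL', 1), (2, 'Untagged note', 1);
INSERT INTO tags VALUES (1, 'sql'), (2, 'databases');
INSERT INTO post_tags VALUES (1, 1), (1, 2);
""")


def rebuild_post_summary():
    """Rebuild the denormalized table from the normalized source tables."""
    conn.execute("DELETE FROM post_summary")
    conn.execute("""
        INSERT INTO post_summary (post_id, title, author_name, tag_list)
        SELECT p.post_id, p.title, a.name, GROUP_CONCAT(t.label, ',')
        FROM posts p
        JOIN authors a ON a.author_id = p.author_id
        LEFT JOIN post_tags pt ON pt.post_id = p.post_id
        LEFT JOIN tags t ON t.tag_id = pt.tag_id
        GROUP BY p.post_id, p.title, a.name
    """)


def load_post(post_id):
    """Serve a post page from a single-row lookup, with no joins at read time."""
    title, author, tag_list = conn.execute(
        "SELECT title, author_name, tag_list FROM post_summary WHERE post_id = ?",
        (post_id,),
    ).fetchone()
    # tag_list is NULL for posts without tags; tags are sorted for a stable order.
    tags = sorted(tag_list.split(",")) if tag_list else []
    return {"title": title, "author": author, "tags": tags}


rebuild_post_summary()
page = load_post(1)
```

The normalized tables remain the source of truth; `post_summary` is a disposable copy that has to be rebuilt, or updated incrementally, whenever posts, authors, or tags change.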
