K8s: Why, What and How

Subbu Tech Tutorials

Why Kubernetes?

Historical context for Kubernetes:

Let's take a look at why Kubernetes is so useful by going back in time.

Example:

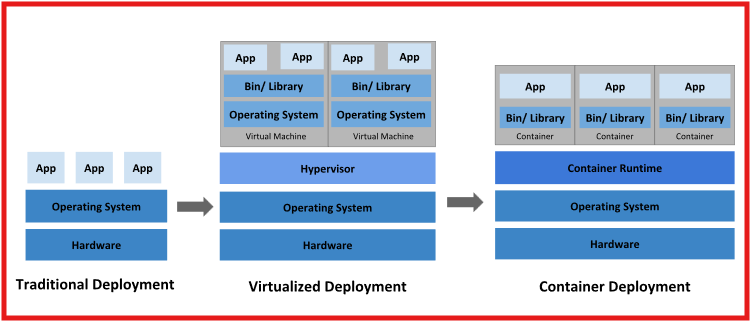

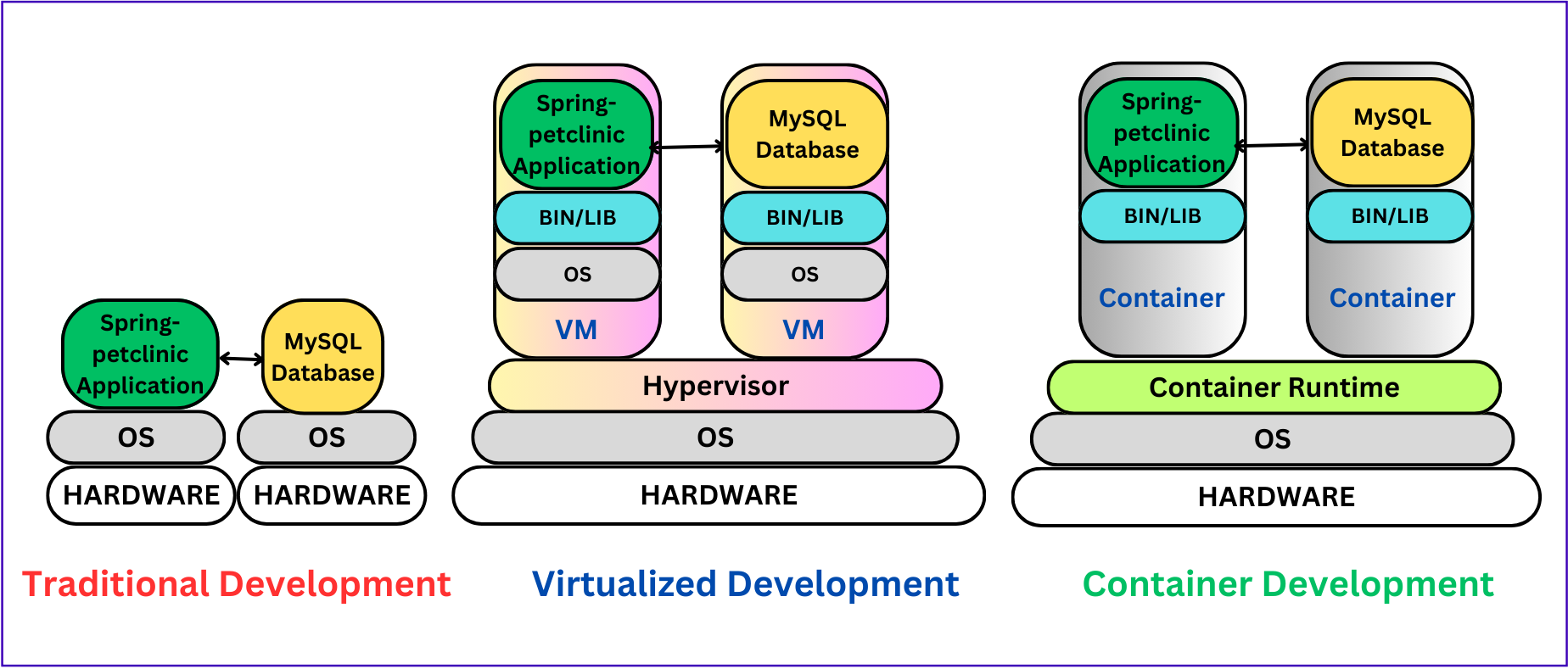

Traditional Deployment Era:

Applications ran on physical servers, but resources weren't efficiently shared.

Limitations:

Inefficient Resource Utilization: To avoid interference, teams often dedicated a physical server to each application, leaving CPU and memory underutilized.

Resource Contention: A single app could monopolize resources, leading to degraded performance for others on the same server.

Scalability Issues: Scaling required buying and setting up new physical servers, which was time-consuming and expensive.

No Isolation: Applications running on the same server could interfere with each other, creating security and stability risks.

Manual Operations: Server maintenance, application deployment, and updates were mostly manual.

Virtualized Deployment Era:

How Virtualization Overcomes the Limitations of Traditional Deployment:

Efficient Resource Use: Virtual Machines (VMs) allowed multiple apps to run on a single physical server by dividing resources.

Isolation: Each VM runs its own OS, isolating applications, thus reducing interference and increasing security.

Scalability: Virtualization enabled faster and more cost-effective scaling by deploying VMs rather than physical servers.

Automation: Hypervisors and their management tools made it possible to provision and manage VMs programmatically, reducing manual intervention.

Limitations of Virtualized Deployment:

Overhead: Each VM includes its own OS, which uses a lot of resources (CPU, memory, disk space).

Boot Times: VMs take longer to boot because they need to start a full OS.

Complexity in Management: Managing many VMs and their OS instances can be complex and resource-intensive.

Portability: Moving VMs between environments (development, testing, production) often requires reconfiguration and is not seamless.

Container Deployment Era:

Containers are similar to lightweight VMs, but instead of each running a full OS they share the host's OS kernel, which makes them faster to start and more resource-efficient.

How Containers Overcome the Limitations of Virtualized Deployment:

Lower Overhead: Containers share the same OS kernel, drastically reducing resource consumption.

Faster Boot Times: Containers start almost instantly since they don’t need to boot a full OS.

Portability: Containers are lightweight and can run consistently across environments (development, testing, production), making them more portable than VMs.

Microservices Architecture: Containers support microservices by enabling small, independent services to run and scale individually.

What is Docker?

Docker is an open-source platform that allows you to package, distribute, and run applications in isolated containers. It focuses on containerization, providing lightweight environments that encapsulate applications and their dependencies.

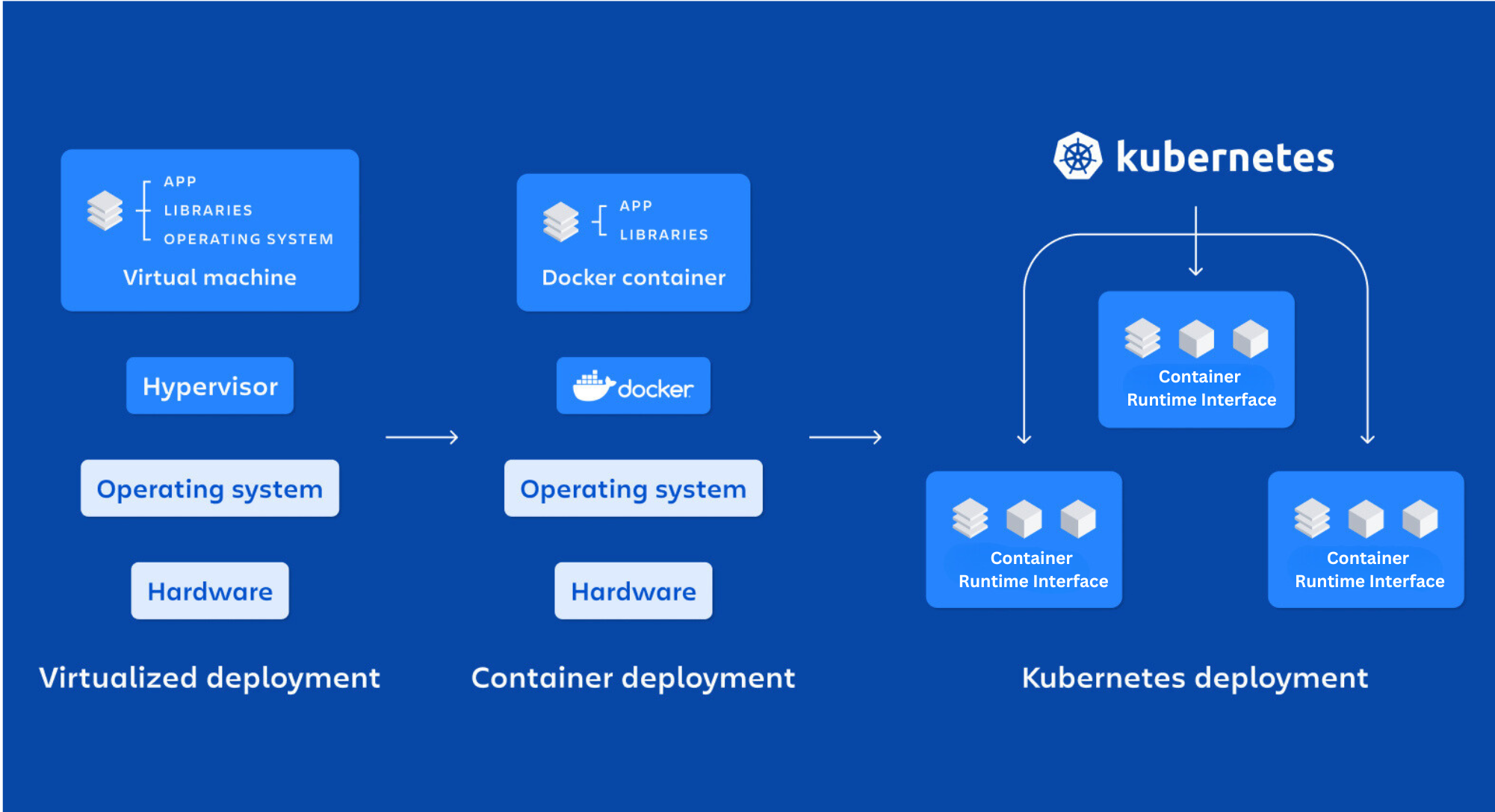

If Docker is good for containers, then why Kubernetes?

Docker operates at the individual container level on a single operating system host. You must manage each host manually, and setting up networks, security policies, and storage for multiple related containers can be complex.

Docker's restart policies (for example, `--restart=on-failure`) can restart a crashed container on the same host, but Docker alone cannot move containers to a healthy host when their host fails; that recovery has to be handled manually. Standalone Docker also does not provide auto-scaling, load balancing across hosts, rolling updates, or rollbacks.

Limitations of Container Deployment:

Manual Management: Managing containers manually across multiple hosts is difficult.

Scaling Challenges: Scaling containers efficiently requires complex manual processes.

Service Discovery & Load Balancing: Beyond basic name resolution on a single host's networks, there is no built-in service discovery or load balancing across hosts.

Networking Complexity: Managing communication across containers and hosts is complex.

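Persistent Storage: Container filesystems are ephemeral, so data is lost when a container is removed unless it is kept in a volume, and managing volumes across hosts is difficult.

Fault Tolerance: No automatic recovery when a host fails; restart policies only restart containers on the same host.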

Multi-Container Coordination: Managing interconnected containers is difficult.

This is why Kubernetes came into the picture.

Kubernetes operates at the cluster level. It manages multiple containerized applications across various hosts, automating tasks like load balancing, scaling, and maintaining the desired state of applications.
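To make "maintaining the desired state" concrete, here is a minimal Python sketch of a reconciliation loop. It assumes a hypothetical `ReplicaController` class that only tracks pod names; real Kubernetes controllers (such as the ReplicaSet controller) watch the API server and act on full objects. This toy version simply compares the desired replica count with what is running and closes the gap.

```python
from itertools import count


class ReplicaController:
    """Hypothetical, simplified controller that keeps `desired` pods running."""

    def __init__(self, app: str, desired: int) -> None:
        self.app = app
        self.desired = desired
        self._ids = count(1)  # source of unique pod-name suffixes

    def reconcile(self, running: list[str]) -> list[str]:
        """Compare actual pods with the desired count and return the corrected list."""
        actual = list(running)
        diff = self.desired - len(actual)
        if diff > 0:
            # Too few pods (e.g. one crashed): create replacements
            actual.extend(f"{self.app}-{next(self._ids)}" for _ in range(diff))
        elif diff < 0:
            # Too many pods (e.g. after scaling down): remove the extras
            del actual[self.desired:]
        return actual


ctrl = ReplicaController("web", desired=3)
pods = ctrl.reconcile([])          # ['web-1', 'web-2', 'web-3']
pods.remove("web-2")               # simulate a crashed pod
pods = ctrl.reconcile(pods)        # ['web-1', 'web-3', 'web-4']
ctrl.desired = 2                   # scale down by changing the desired state
pods = ctrl.reconcile(pods)        # ['web-1', 'web-3']
```

The controller never needs to know why a pod disappeared; it only reacts to the difference between desired and actual state, which is the idea behind both self-healing and declarative scaling.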

Here are the key reasons Kubernetes is needed even with Docker:

Orchestration: Manages large numbers of containers across multiple hosts.

Automated Scaling: Scales applications dynamically based on demand.

Load Balancing: Distributes traffic across containers for high availability.

Self-Healing: Automatically replaces failed containers.

Rolling Updates: Enables smooth updates and rollbacks of applications.

Multi-Container Coordination: Manages complex applications with multiple services.

Resource Management: Optimizes CPU, memory, and other resource usage.
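Rolling updates can be pictured as a loop that swaps old pods for new ones in small batches. The sketch below is a simplified model that assumes new pods become ready instantly. The `max_surge` and `max_unavailable` parameters are named after the `maxSurge` and `maxUnavailable` fields of a Deployment's rolling-update strategy, but the `rolling_update` function itself is hypothetical and accepts only absolute counts, not percentages.

```python
def rolling_update(replicas: int, max_surge: int = 1, max_unavailable: int = 0) -> list[tuple[int, int]]:
    """Simulate replacing `replicas` old pods with new ones.

    Returns the (old, new) pod counts after each step. Assumes every new pod
    is ready immediately, which real rollouts do not.
    """
    if max_surge == 0 and max_unavailable == 0:
        # With both limits at 0 the rollout could never make progress
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total
        room = replicas + max_surge - (old + new)
        new += min(room, replicas - new)
        # Stop old pods while keeping at least replicas - max_unavailable running
        removable = (old + new) - (replicas - max_unavailable)
        old -= min(removable, old)
        steps.append((old, new))
    return steps


print(rolling_update(3, max_surge=1, max_unavailable=0))
# [(2, 1), (1, 2), (0, 3)]
```

With `max_surge=1` and `max_unavailable=0`, at least three pods stay running throughout the update. A rollback is conceptually the same loop run toward the previous version (for a Deployment, `kubectl rollout undo` triggers this).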

Kubernetes vs Docker

Now that we have looked at the individual features and benefits of Kubernetes and Docker, let's compare them:

| Feature | Kubernetes | Docker |
| --- | --- | --- |
| Containerization | Runs and manages containers through a container runtime | Builds, runs, and manages containers |
| Orchestration | Manages and automates container deployment and scaling across clusters of hosts | Standalone Docker has no cluster orchestration; it relies on tools like Docker Swarm |
| Scaling | Supports horizontal scaling of containers | Standalone Docker supports vertical scaling (adjusting a container's resource limits); horizontal scaling across hosts relies on Docker Swarm |
| Self-healing | Automatically replaces failed containers with new ones | Restart policies can restart a crashed container on the same host; rescheduling onto other hosts relies on Docker Swarm |
| Load balancing | Provides internal load balancing | Has no native load balancing across hosts; it relies on tools like Docker Swarm |
| Storage orchestration | Provides a framework for storage orchestration across clusters of hosts | Has no native storage orchestration; it relies on third-party volume plugins like Flocker |

What is Kubernetes?

Kubernetes is a portable, extensible, open source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation.

Kubernetes Key Features:

Self-Healing

Service Discovery and Load Balancing

Automated Rollouts and Rollbacks

Horizontal Scaling

Horizontal vs Vertical Scaling:

Horizontal Scaling (Scaling Out): Adds more instances (e.g., servers or containers) to handle increased load.

Vertical Scaling (Scaling Up): Increases the capacity of a single instance by adding more CPU, memory, or storage.

Storage Orchestration

Secret and Configuration Management

Designed for Extensibility and many more
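Horizontal scaling in Kubernetes is typically driven by the Horizontal Pod Autoscaler, whose documentation gives the core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes when the ratio is within a tolerance (0.1 by default). Below is a minimal Python sketch of that calculation; the function name and default bounds are illustrative assumptions, and it ignores details such as stabilization windows and pod readiness.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Horizontal scaling decision modeled on the HPA's documented formula."""
    ratio = current_metric / target_metric
    # Close enough to the target: keep the current replica count
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minimum and maximum replica counts
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=60))  # 5 (scale out)
print(desired_replicas(5, current_metric=30, target_metric=60))  # 3 (scale in)
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
```

Note that this only changes the number of instances; vertical scaling would instead change the CPU or memory assigned to each instance.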

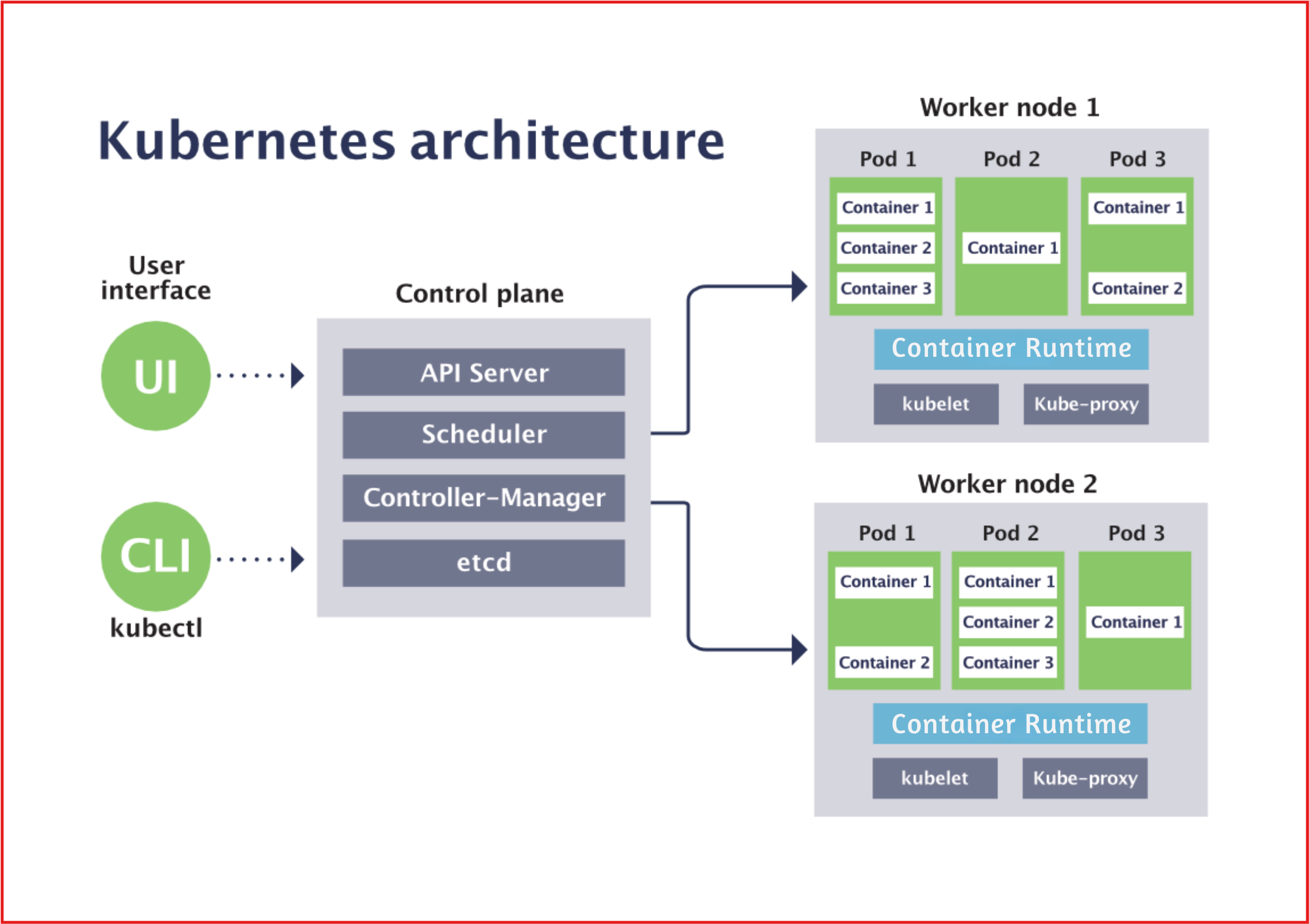

How Does Kubernetes Work?

Kubernetes follows a client-server architecture, with a control plane that manages the overall state of the system and a set of nodes that run the containers. The control plane includes several components, such as the API server, etcd, the scheduler, the controller manager, and the cloud-controller-manager (optional). These components work together to manage the lifecycle of applications running on Kubernetes.
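The scheduler's role can be sketched as two steps: filter out nodes that cannot fit a pod, then score the remaining ones and pick the best. kube-scheduler does work in filtering and scoring phases, but the scoring rule below (prefer the node with the most CPU left over, then memory) is a simplified assumption, and `Node`, `PodRequest`, and `schedule` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node name, or None if no node fits (the pod stays Pending)."""
    # Filtering: drop nodes that cannot satisfy the pod's resource requests
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None
    # Scoring (simplified assumption): most CPU left over wins, memory breaks ties
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_millicores - pod.cpu_millicores, n.free_memory_mib - pod.memory_mib),
    )
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 512), Node("node-c", 1500, 2048)]
print(schedule(PodRequest("api", 1000, 1024), nodes))  # node-c (only node that fits)
print(schedule(PodRequest("tiny", 100, 128), nodes))   # node-b (most CPU left)
```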

Kubernetes organizes containers into logical units called "pods," which can run one or more containers together. Pods provide a way to group related containers and share resources, such as networks and storage. Kubernetes also provides a declarative model for specifying the system's desired state, allowing users to define their applications' configuration and deployment requirements in a simple and scalable way.
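A Pod's desired state is usually written as a YAML or JSON manifest. The Python sketch below builds one as a plain dictionary and prints it as JSON, a format `kubectl apply -f` also accepts. It shows two containers in one Pod sharing an `emptyDir` volume; the Pod name, container names, images, and mount paths are illustrative assumptions.

```python
import json


def two_container_pod(name: str) -> dict:
    """Build a Pod manifest (as a dict) with two containers sharing one volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # emptyDir: a scratch volume that exists for as long as the Pod does
            "volumes": [{"name": "shared-logs", "emptyDir": {}}],
            "containers": [
                {
                    # Illustrative web server writing its logs into the shared volume
                    "name": "web",
                    "image": "nginx:1.27",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [{"name": "shared-logs", "mountPath": "/var/log/nginx"}],
                },
                {
                    # Illustrative sidecar reading the same files from its own mount path
                    "name": "log-reader",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", "tail -F /logs/access.log"],
                    "volumeMounts": [{"name": "shared-logs", "mountPath": "/logs"}],
                },
            ],
        },
    }


manifest = two_container_pod("web-demo")
print(json.dumps(manifest, indent=2))
```

Saving the output as `pod.json` and running `kubectl apply -f pod.json` would ask the cluster to create this Pod; the manifest describes what should exist, and Kubernetes decides how to get there.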
