PEGA Customer Cloud Deployment Models

Arslan Ali Ansari

We all know that Pega began embracing the true essence of cloud-native architecture with version 8.6. With its new versioning scheme, starting with Pega Infinity '23, a major release is now scheduled every year. All upcoming releases are built around separation of concerns: third-party services such as Kafka, Elasticsearch, and Cassandra have been externalized, so you can share your existing enterprise deployments of these services. The same architecture is applied to Pega's own services. Hazelcast now runs as a standalone clustering service that connects to your enterprise Kafka layer, and a single clustering service can feed and manage the cache of multiple Pega environments. Constellation and the Search and Reporting Service follow the same pattern.

By establishing such an externalized architecture, PEGA can now be deployed in over a dozen different configurations. In this article, we will discuss some of the most important ones.

PEGA CLASSIC DEPLOYMENT

A classic Pega deployment entails deploying all components for every environment. For example, consider an insurance company that requires Pega to be installed in multiple Kubernetes clusters, with each cluster representing a distinct environment.

Each cluster must have its own database, Kafka, Elasticsearch, Cassandra, and so on. In addition to these third-party services, Pega services are also deployed separately within each cluster. This model is recommended in some situations, but it has drawbacks. On the one hand, each environment is entirely isolated from the others, so there is no need to fine-tune each service's configuration to serve several environments at once. On the other hand, it requires at least twice the hardware resources of a shared setup, and maintenance costs roughly double because each environment must be managed separately.
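
To put the cost argument in concrete terms, here is a minimal Python sketch that counts component instances under the classic model and under a shared model. The component lists are assumptions chosen for illustration (which services can be shared depends on your Pega version and topology), and an instance count is only a rough proxy for CPU, memory, and operational effort.

```python
# Illustrative component lists (assumption): which services stay per-environment
# and which can be shared varies by Pega version and by the chosen topology.
PER_ENVIRONMENT = ["pega-platform", "database"]
SHAREABLE = ["kafka", "elasticsearch", "cassandra", "clustering-service",
             "srs", "constellation-appstatic"]


def classic_instances(environments: int) -> int:
    """Classic model: every environment runs every component itself."""
    return environments * (len(PER_ENVIRONMENT) + len(SHAREABLE))


def shared_instances(environments: int) -> int:
    """Shared model: per-environment components repeat, shareable ones run once."""
    return environments * len(PER_ENVIRONMENT) + len(SHAREABLE)


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(f"{n} env(s): classic={classic_instances(n)}, shared={shared_instances(n)}")
```

With a single environment the two models are identical; the gap grows with every environment you add, because the classic model repeats the shareable services each time.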

PEGA CONNECT DEPLOYMENT

A connected deployment is one in which the customer already runs clusters of one or more third-party services, such as Elasticsearch, Kafka, or Cassandra. We often encounter requirements where customers have existing clusters of these services and want to use them for Pega as well.

Sharing these services with other Pega applications is generally not recommended; however, it is ultimately a design decision based on the cloud topology architecture. We see no harm in this kind of design, where only the Pega platform is deployed and the customer-provided clusters are used for the third-party services. This setup reduces friction when rolling out the Pega platform, uses significantly fewer resources than the classic model, and is sometimes more efficient and performant as well.
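
The planning step behind a connected deployment can be sketched as follows: for each backing service, either connect to a customer-provided endpoint or provision the service alongside Pega. This is a hypothetical Python illustration, not part of any Pega tooling; the class, function, and endpoint names are made up.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class BackingService:
    name: str
    # Endpoint of a customer-provided cluster; None means Pega must provision it.
    external_endpoint: Optional[str] = None


def plan_connected_deployment(
    services: List[BackingService],
) -> Tuple[List[str], Dict[str, str]]:
    """Split backing services into those to deploy with Pega and those to connect to."""
    to_deploy = [s.name for s in services if s.external_endpoint is None]
    to_connect = {
        s.name: s.external_endpoint
        for s in services
        if s.external_endpoint is not None
    }
    return to_deploy, to_connect


if __name__ == "__main__":
    # Hypothetical endpoints for illustration only.
    services = [
        BackingService("kafka", "kafka.corp.example:9092"),
        BackingService("elasticsearch", "https://search.corp.example:9200"),
        BackingService("cassandra"),
    ]
    deploy, connect = plan_connected_deployment(services)
    print("deploy:", deploy)
    print("connect:", connect)
```

The more services the customer already runs, the shorter the `to_deploy` list becomes, which is where the resource savings over the classic model come from.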

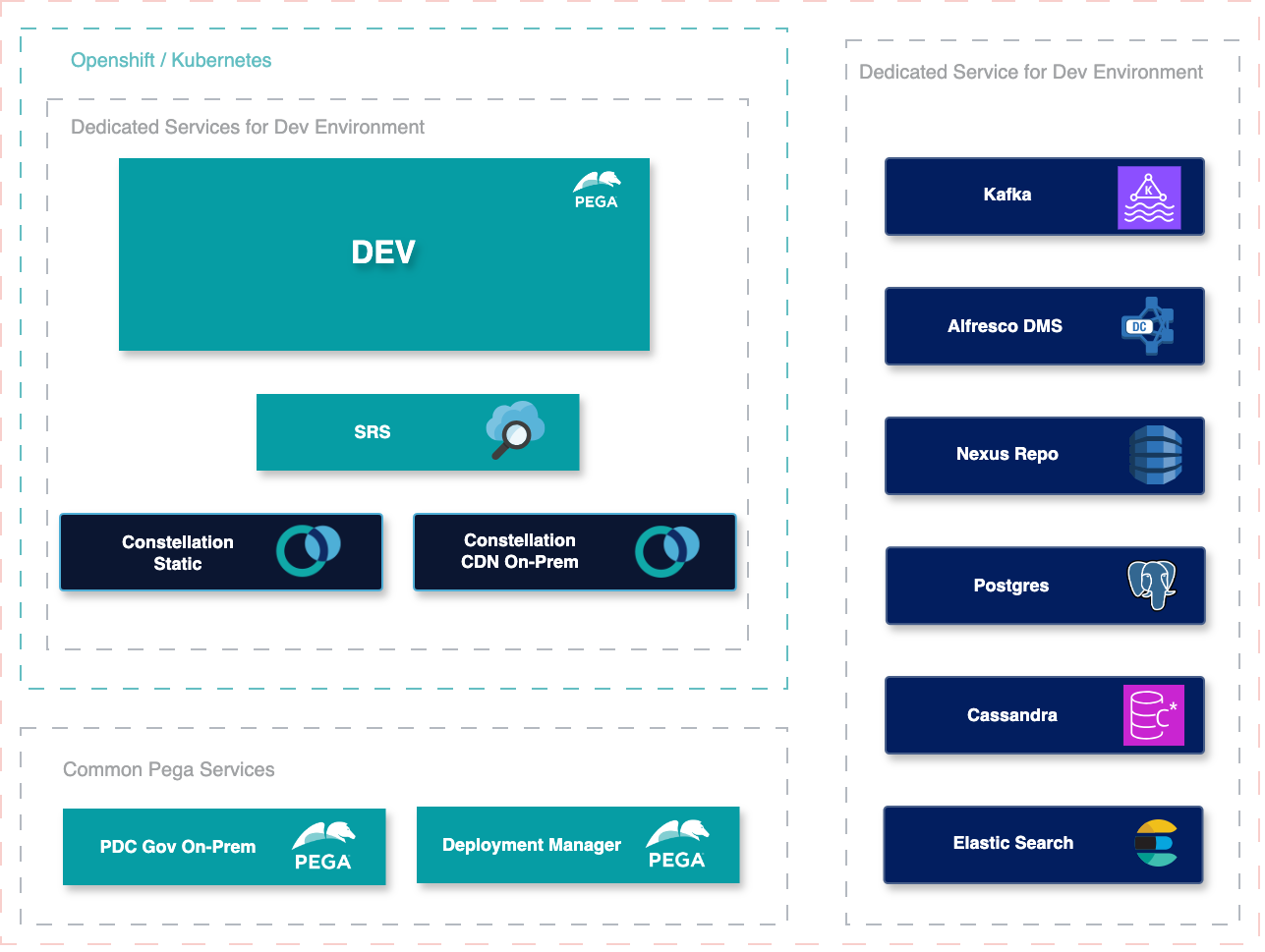

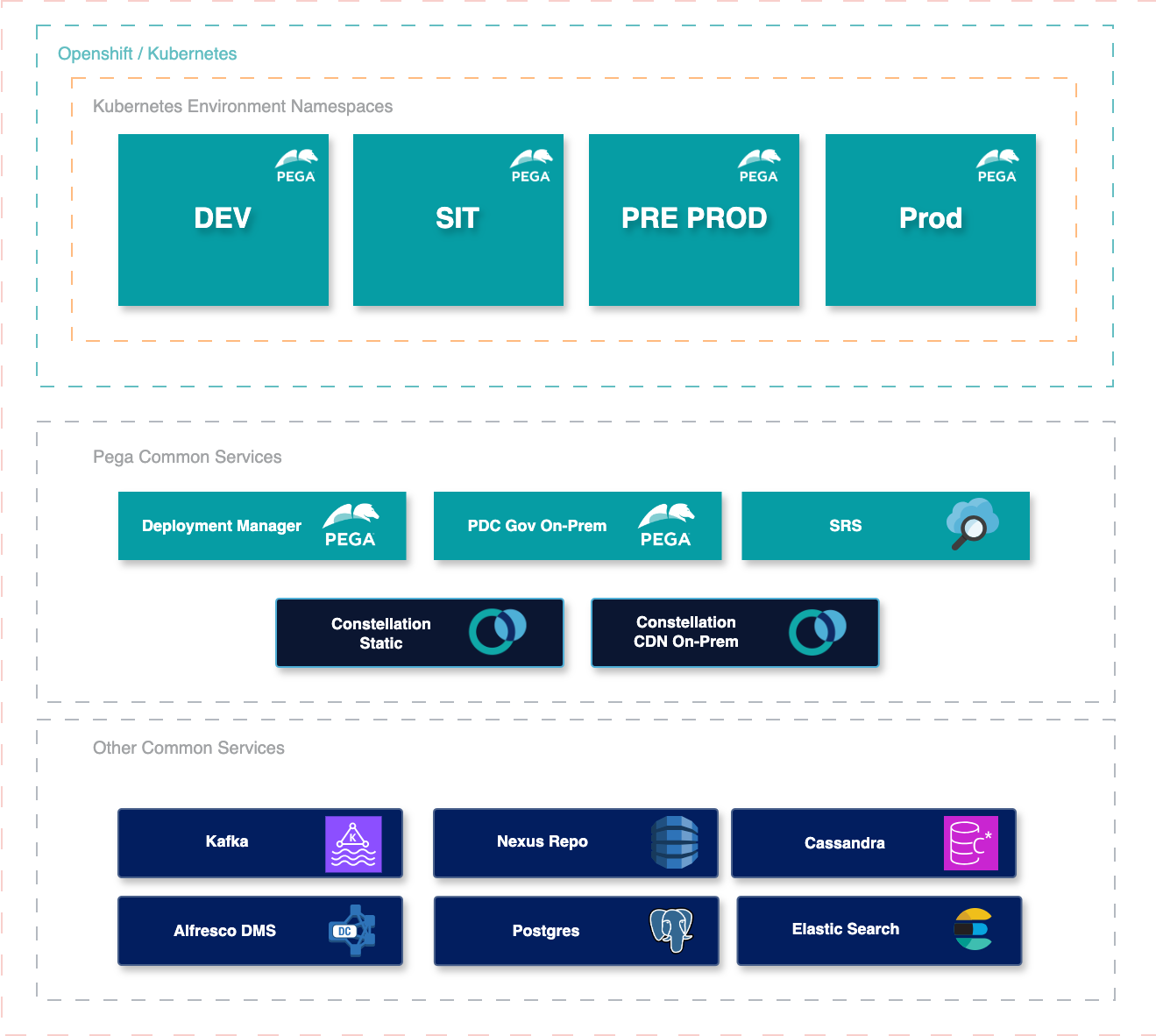

PEGA SHARED DEPLOYMENT

A shared deployment is one in which many shareable Pega services are deployed once and shared across different environments and platforms. Sharing is highly recommended for most of these services, since that is the point of breaking Pega down into microservices. Let's look at this deployment in more detail through a few scenarios.

Shared CDN-ONPREM

The CDN can be shared among all of your environments without hesitation, and you may also use the Pega-provided CDN for this purpose, provided your pods have internet access.

Shared Constellation App Static

This service stores your custom components and serves them to Pega applications that use Constellation UI instead of Traditional UI. Sharing the Constellation App Static service among your environments is highly recommended. One of the main reasons is consistency: every environment serves the same custom components, so once a component is deployed and tested, it does not need to be retested, even after Deployment Manager (DM) promotes a feature update to the Pega environment. One scenario where I might recommend a separate Constellation App Static per environment is when you use a third-party pipeline manager such as Jenkins to trigger DM pipelines alongside other deployment pipelines (React apps, mobile apps, and so on). In that case, you can publish the Constellation custom components to each environment as a task in the deployment pipeline.
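
The trade-off between a shared and a per-environment Constellation App Static service shows up in the shape of the release pipeline. Below is a hypothetical Python sketch that lists pipeline tasks in order; the task names are placeholders, not Jenkins or Deployment Manager identifiers.

```python
from typing import List


def build_pipeline(environments: List[str], shared_appstatic: bool) -> List[str]:
    """Return ordered pipeline task names (hypothetical) for one release.

    With a shared App Static service, custom components are published once.
    With one service per environment, a publish task runs before the DM
    deployment in every environment.
    """
    tasks: List[str] = []
    if shared_appstatic:
        tasks.append("publish-components:shared")
    for env in environments:
        if not shared_appstatic:
            tasks.append(f"publish-components:{env}")
        tasks.append(f"dm-deploy:{env}")
    return tasks


if __name__ == "__main__":
    envs = ["qa", "staging", "prod"]
    print(build_pipeline(envs, shared_appstatic=True))
    print(build_pipeline(envs, shared_appstatic=False))
```

The shared variant has a single publish step, which is why a component tested once stays consistent everywhere; the per-environment variant trades that for per-environment control inside an external pipeline.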

Shared Search and Reporting Service

SRS is a multi-tenant service, which means many environments with different environment/tenant IDs can connect to a single SRS instance. In a Kubernetes cluster, we can deploy it with a single Elasticsearch cluster that serves many different environments.
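
To illustrate what multi-tenancy means here, the following toy Python model keeps each environment's documents under a tenant-scoped index name in one shared store. It assumes nothing about how SRS actually lays out its Elasticsearch indices; the class and naming scheme are hypothetical and only demonstrate tenant isolation on shared infrastructure.

```python
from collections import defaultdict
from typing import Dict, List


class MultiTenantSearch:
    """Toy model of one search service backed by one shared document store.

    Each environment is a tenant; documents live under tenant-scoped index
    names, so one environment's queries never see another's data.
    """

    def __init__(self) -> None:
        # Stand-in for a single shared cluster: index name -> {doc_id: text}
        self._store: Dict[str, Dict[str, str]] = defaultdict(dict)

    @staticmethod
    def _scoped(tenant_id: str, index: str) -> str:
        return f"{tenant_id}--{index}"

    def index_document(self, tenant_id: str, index: str, doc_id: str, text: str) -> None:
        self._store[self._scoped(tenant_id, index)][doc_id] = text

    def search(self, tenant_id: str, index: str, term: str) -> List[str]:
        # .get() avoids creating empty indices for unknown tenants
        docs = self._store.get(self._scoped(tenant_id, index), {})
        return sorted(d for d, text in docs.items() if term.lower() in text.lower())


if __name__ == "__main__":
    srs = MultiTenantSearch()
    srs.index_document("dev", "work", "W-1", "Auto claim intake")
    srs.index_document("prod", "work", "W-9", "Home claim review")
    print(srs.search("dev", "work", "claim"))
    print(srs.search("prod", "work", "claim"))
```

Both tenants share the same store, yet a search in `dev` returns only `dev` documents; that isolation is what lets one service and one cluster serve many environments.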

Deployment Manager and PDC

Pega Predictive Diagnostic Cloud (PDC) and Deployment Manager (DM) are designed as shared services for multiple environments. DM inherently spans multiple environments, since its job is to promote code and deploy features from one environment to the next, and PDC is likewise a multi-tenant, multi-environment service. This means you can use a single PDC for numerous Pega environments across multiple customers or business units.
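
The same multi-tenant idea applies to a shared diagnostics service. This hypothetical Python sketch shows one hub receiving alerts from environments that belong to different business units and reporting on each unit separately; it is not the PDC API, and the alert names are invented.

```python
from collections import Counter, defaultdict
from typing import Dict, Tuple


class SharedDiagnosticsHub:
    """Toy stand-in for one monitoring service that many environments report to."""

    def __init__(self) -> None:
        # (business_unit, environment) -> counts per alert type
        self._alerts: Dict[Tuple[str, str], Counter] = defaultdict(Counter)

    def report(self, business_unit: str, environment: str, alert_type: str) -> None:
        self._alerts[(business_unit, environment)][alert_type] += 1

    def summary(self, business_unit: str) -> Dict[str, Dict[str, int]]:
        """Alert counts per environment, restricted to one business unit."""
        return {
            env: dict(counts)
            for (unit, env), counts in self._alerts.items()
            if unit == business_unit
        }


if __name__ == "__main__":
    hub = SharedDiagnosticsHub()
    hub.report("claims", "dev", "slow-interaction")
    hub.report("claims", "prod", "slow-query")
    hub.report("claims", "prod", "slow-query")
    hub.report("billing", "prod", "slow-interaction")
    print(hub.summary("claims"))
    print(hub.summary("billing"))
```

Keying every record by business unit and environment is what allows one instance to serve many customers without mixing their data.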

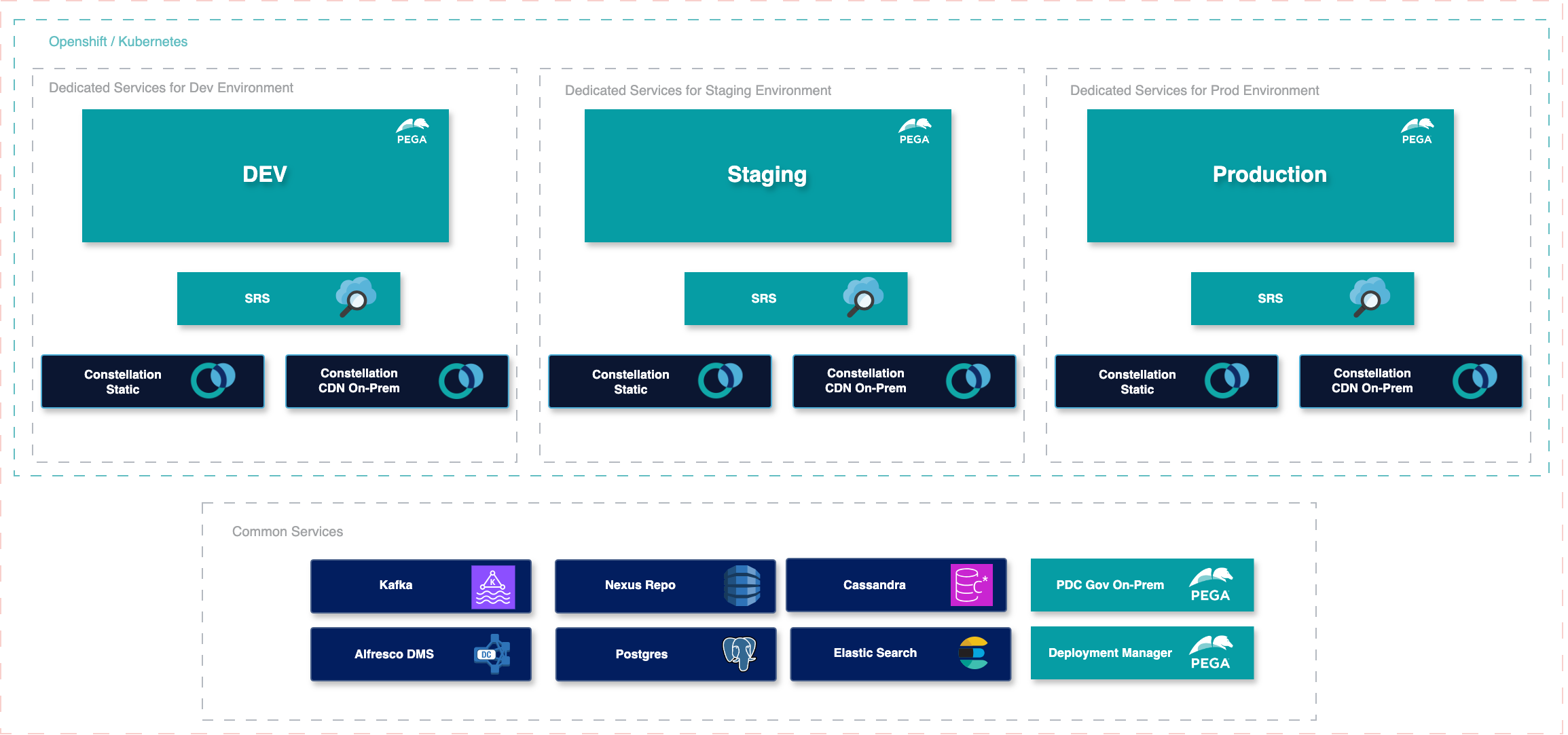

The following figure shows a fully shared model, including all of Pega's multi-tenant applications and services as well as the other shareable third-party services:

Deploying Pega for enterprises has long been a challenge for infrastructure and DevOps teams. Skilled infrastructure and DevOps professionals are relatively easy to find, but individuals with both Pega administration and Kubernetes DevOps experience are rare. This scarcity is one of the primary reasons many Pega customers face difficulties in managing and maintaining their enterprise Pega deployments.

With the introduction of Pega Cloud, enterprise customers can significantly reduce the complexity and burden of implementation and ongoing maintenance once they have migrated. However, the transition can still be complex and time-consuming for organizations with many integrations between Pega and other on-premises applications.

As a consultant, I have had the privilege of working with numerous enterprise customers who faced complex application integration challenges. With over two decades of experience in software engineering and cloud technologies, I specialize in designing intricate, scalable architectures tailored to unique business needs. My expertise extends to creating comprehensive communication matrices well in advance, ensuring seamless coordination and faster deployments. I have consistently delivered projects in record time, helping organizations save millions of dollars while achieving their digital transformation goals efficiently.

So feel free to contact me for any design, implementation, or infrastructure management reviews or consulting requirements.

Written by

Arslan Ali Ansari

A technology evangelist and entrepreneur passionate about software development using cloud-native technologies. Currently leading a cross-Atlantic team of enthusiasts developing and extending the core of Kubernetes at kaiops.io